Entries tagged as linux

Related tags

1und1 bios dmidecode hardware lspci motherboard pciids support 3d aiglx blob compiz driver freesoftware gentoo graphics metacity nouveau nvidia opengl xgl xorg 4k assembler breakpoint ccc demoscene entropia gpn gpn5 hacker programming win32 ac100 android laptop notebook smartbook subnotebook toshiba ubuntu addresssanitizer afl americanfuzzylop asan bufferoverflow c clang fuzzing gcc heartbleed memorysafety openssl security useafterfree cacert ccwn come2linux cryptography darmstadt essen games gentoo. compiz hash md5 mrmcd mrmcd100b mrmcd101b passwörter server sha1 unicode utf-8 vortrag wiesbaden wine certificate cloudflare facebook https sha256 ssl tls updates windowsxp apache certificates datenschutz datensparsamkeit encryption grsecurity itsecurity javascript karlsruhe letsencrypt mod_rewrite nginx ocsp ocspstapling php revocation serendipity sni symlink userdir web web20 webhosting webmontag websecurity apt deb debian fedora gnupg gpg openpgp packagemanagement pgp rpm signatures artikel pcmagazin ati ffmpeg gatos presse r300 radeon randr12 tv tvout augsburg augsburgerallgemeine lit07 lug luga openstreetmap zeitung backnang drm film installparty kino kubuntu macos murrhardt softwarefreedomday vista waiblingen windows bahn db demokratie fahrkartenautomat fahrpreisnacherhebung frankreich freifunk ice infoscore mobil nancy plebiszit politik privacy rmll sncf steckdose strom stuttgart stuttgart21 umwelt usability verkehr volksabstimmung wlan bash altushost base64 css ircbot malware script shellbot shellshock vulnerability berlin 129a 1mai 23c3 24c3 27c3 a100 abgeordnetenhaus akongress akw allianz almaty anarchiekongress antiatom asia asia2013 atheismus atomkraft autobahn bahnhof belene bild brandenburg bundestagunited bus buskampagne bz cellular china copyright creativecommons dataretention demonstration dose elephantsdream energie energietisch energiewende erlian freeculture freedomnotfear freiheitstattangst frequencies frequency fsfe gott gsm informationsfreiheit itu journey jugendumweltbewegung jukss kamera kameraüberwachung kazakhstan kernkraft klima klimaschutz klimawandel kohle kohlekraft kongress königswusterhausen kyoto lessig mcplanet mobilephones moleculeman moscow musik ökologie ökostrom openbsc openbts osm osmocombb papst peterschaar petropavl piratenpartei poland polizeigewalt preise privatsphäre ratzinger re-publica re-publica09 religion rp09 rwe science sony springer stromnetz tagebaue taz theory thermen topberlin train transsiberian travel travelling treptow tuberlin überwachung umweltschutz unserwasser urgewald videoüberwachung volksbegehren vorratsdatenspeicherung wahl warsaw wasser wg wiki wiretapping wirklimaretter wos wos4 yining youtube zensur zimmer beryl compizfusion composite benchmark cd dvd eltorito grub harddisk iso lenovo memdisk mod optiarc performance sata ssd syslinux t61 thinkpad bonn froscon froscon2007 siegburg talk gimp sunras code sizeof blog ca calendar eff email english ipv6 kubik ludwigsburg lugbk observatory planet rc2 rsa schokokeks simplesharingextensions smime stadtmitte webinale x509 camera canon chdk digitalcamera gadgets gammu gnokii gphoto ixus mobile nokia ptp time saturn cardreader memorystick pcmcia ricoh samsung sd sdricohcs bewußtsein braunschweig bsideshn c4 cctv dvb easterhegg gpn6 gpn7 hannover licenses mysmartgrid papierlos philosophie programmieren publicdomain querfunk radio rsaoaep rsapss slides surveillance tpm transvalid überwachungskameras wahlcomputer wahlmaschinen inkscape chemnitz clt lpi messe chromium chrome crash cve diffiehellman firefox forwardsecrecy ghost glibc keyexchange redhat cinelerra gkm kohlekraftwerk mannheim thesource video videoediting kde compression rar theunarchiver unar console homebrew wii wiibrew 68 aacs abmahnung alecempire aliens amusementpark art banksy barrierefrei beijing berneconvention bittorrent bitv blueray boluo brigittezypries buch bücher bundestag cedric chaosradio cinderella copycat corruptibles cpdl culturalflatrate culture di disney disneyland ebook epetition eu eucopyrightdirective evrimsen fake fanart fanfiction filesharing freizeitpark funkerspuk gema gemavermutung geodaten graffiti guangzhou gutenberg gvl hallstatt hddvd hiddenfrontier html huizhou ifpi illegalart iromance jankroemer journalismus justizministerium kinderlieder kopierschutz kriegderwelten kts kultur laterne laternenumzug luoyang markenrecht metis movies mpaa musikindustrie musikpiraten negativland netradio nocopy patent patente penguinbooks petition piratbyrån piratebay pressefreiheit radiopolitik raubdruck raubkopie remix sanktmartin savenetradio sciencefiction shijingshan spiritlevel startrek stepmania tagesschau thepiratebay tomcruise trip2011 trips tuxmas tuxmas07 usa usbstick verwertungsgesellschaft vgmusikeditionen vgwort vlc w3c warez webradio wipo wizo core coredump segfault webroot webserver cpu cpufreq overheatd overheating aavepyörä br breakcore copycan developingworld fdl freemusic fruechtedeszorns goa internet jamendo license microsoft movie nerosdaysatdisneyland ogg paniq pioneerone presserat python series vorbis algorithm badkeys browser cccamp cccamp15 cmi crypto dell deolalikar diploma diplomarbeit edellroot enigma fortigate fortinet gsoc http key keyserver leak libressl maninthemiddle math milleniumproblems mitm modulobias nist nss openbsd openid openidconnect password pnp privatekey provablesecurity pss random revoke schlüssel sha2 sha512 sso superfish thesis university verschlüsselung wordpress gobi helma xss distributions fma86t delilinux distribution hp omnibook 0a000h altparty antinuclear bingen c64 crest demoparty ecology evoke ferry finland helsinki jux köln loviisa painstation sid spiegelberg ultrasound welleerdball desktop gnome mandriva metisse babelfish chinese csrf etymologie googletranslate josm journalist language mandarin media merkaartor moodle russia russian s9y translation universaltranslator writing geo gps esslingen exe ico icons icoutils wrestool ape audacious codecs fileformats flash flv gstreamer imagemagick konqueror legacy libav monkeysaudio mplayer multimedia realaudio realmedia realvideo retro retrocomputing rv30 rv40 shn shorten totem vc-1 voc vqf win32codecs wmv xine berserk bleichenbacher clickjacking ftp mozilla poodle xsa zzuf france freedesktop gtk kgtk qt standards 3ddrucker adobe ddwrt evince firmware freewvs freiegesellschaft fsf gaia gargoyle google googleearth ibm kpdf lpic lsusb nessus okular olpc openexpo opensourceexpo openvas pdf phoronix poppler rapidprototyping reprap retrogames reverseengineering router sfd simcity society sqlinjection sumatrapdf usbids windowsrefund gedelitz modding p30 wendland wendlandcamp arcade computergames computerspiele donkeykong escapa gajim gameandwatch gameboy instantmessaging jabber konsole konsolen lemmings lucasarts luigi mario monkeyisland nes nintendo nintendo64 retrogaming scumm spiele supermario supermariogalaxy tictactoe videogames xmpp zkm censorship developer freedomofspeech gebabbel gpsbabel idn iputils mobiletrailexplorer ping politics x1carbon factoring bypass geographie googlemaps passwordalert aok computer cybercrimeconvention gesetze hacktools passwort wiesbadenerkurier magnet neodym recycling smart smartmontools usb atm display dsl energiesparen garmin hddtemp lm_sensors mapsource ntbba p35 quest routing howto iptables network proxy squid administration rfc akademy ct modem winmodem webinale07 schäuble wga argentinia cinema cubacrisis magneto nazi prequel xmen xmenfirstclass development featurephone future gadget smartphone technology certificateauthority symantec badcannstatt bnn cannstatt cccs earth exif geocaching geotagging gpx jpeg kaisersbach mars osm2poly osmosis press rtl spiegel tomorrow otr content-security-policy cookie csp drupal gallery infoleak mantis mysql pdo session sniffing squirrelmail stacktrace deutschewelle gentechnik oberboihingen schwäbischestaglatt sexismus ruby blowfish onlinetvrecorder otrkey schneier provider 0days adguard aead aes afra antivir antivirus auskunftsanspruch axfr azure barcamp bias bigbluebutton bodensee botnetz bsi bugbounty bundesdatenschutzgesetz bundestrojaner bundesverfassungsgericht busby cbc cccamp11 cfb chcounter clamav cms conflictofinterest dingens dns domain eplus fileexfiltration firewall freak git gnutls hackerone hacking informationdisclosure internetscan jodconverter joomla kaspersky komodia libreoffice luckythirteen mephisto napster netfiltersdk newspaper ntp ntpd onlinedurchsuchung openleaks owncloud padding panda phishing privdog protocolfilters rand rhein rss salinecourier sicherheit snallygaster spam staatsanwaltschaft study subdomain tlsdate toendacms unicef update virus vulnerabilities webapps zerodays zugangsdaten halloween rootserver yacy breach cookies crime date heist ntimed ntpsec openntpd picture roughtime samesite securetime autoradio flac kabel mp3 ard ardget audio cartoon deutschland download feuerwerk filter fireworks flvstreamer fußball kapitalismuskritik kickit krisis mediathek mitschnitt nationalismus rfid rtmp rtmpdump theorie wm folien ifg informationsfreiheitsgesetz bugtracker github nextcloud absturz faithfighter gnash molleindustria religionskritik swfdec hotline vodafoneFriday, September 13. 2019

Security Issues with PGP Signatures and Linux Package Management

In discussions around the PGP ecosystem one thing I often hear is that while PGP has its problems, it's an important tool for package signatures in Linux distributions. I therefore want to highlight a few issues I came across in this context that are rooted in problems in the larger PGP ecosystem.

Let's look at an example of the use of PGP signatures for deb packages, the Ubuntu Linux installation instructions for HHVM. HHVM is an implementation of the HACK programming language and developed by Facebook. I'm just using HHVM as an example here, as it nicely illustrates two attacks I want to talk about, but you'll find plenty of similar installation instructions for other software packages. I have reported these issues to Facebook, but they decided not to change anything.

The instructions for Ubuntu (and very similarly for Debian) recommend that users execute these commands in order to install HHVM from the repository of its developers:

The crucial part here is the line starting with apt-key. It fetches the key that is used to sign the repository from the Ubuntu key server, which itself is part of the PGP keyserver network.

Attack 1: Flooding Key with Signatures

Attack 1: Flooding Key with Signatures

The first possible attack is actually quite simple: One can make the signature key offered here unusable by appending many signatures.

A key concept of the PGP keyservers is that they operate append-only. New data gets added, but never removed. PGP keys can sign other keys and these signatures can also be uploaded to the keyservers and get added to a key. Crucially the owner of a key has no influence on this.

This means everyone can grow the size of a key by simply adding many signatures to it. Lately this has happened to a number of keys, see the blog posts by Daniel Kahn Gillmor and Robert Hansen, two members of the PGP community who have personally been affected by this. The effect of this is that when GnuPG tries to import such a key it becomes excessively slow and at some point will simply not work any more.

For the above installation instructions this means anyone can make them unusable by attacking the referenced release key. In my tests I was still able to import one of the attacked keys with apt-key after several minutes, but these keys "only" have a few ten thousand signatures, growing them to a few megabytes size. There's no reason an attacker couldn't use millions of signatures and grow single keys to gigabytes.

Attack 2: Rogue packages with a colliding Key Id

The installation instructions reference the key as 0xB4112585D386EB94, which is a 64 bit hexadecimal key id.

Key ids are a central concept in the PGP ecosystem. The key id is a truncated SHA1 hash of the public key. It's possible to either use the last 32 bit, 64 bit or the full 160 bit of the hash.

It's been pointed out in the past that short key ids allow colliding key ids. This means an attacker can generate a different key with the same key id where he owns the private key simply by bruteforcing the id. In 2014 Richard Klafter and Eric Swanson showed with the Evil32 attack how to create colliding key ids for all keys in the so-called strong set (meaning all keys that are connected with most other keys in the web of trust). Later someone unknown uploaded these keys to the key servers causing quite some confusion.

It should be noted that the issue of colliding key ids was known and discussed in the community way earlier, see for example this discussion from 2002.

The practical attacks targeted 32 bit key ids, but the same attack works against 64 bit key ids, too, it just costs more. I contacted the authors of the Evil32 attack and Eric Swanson estimated in a back of the envelope calculation that it would cost roughly $ 120.000 to perform such an attack with GPUs on cloud providers. This is expensive, but within the possibilities of powerful attackers. Though one can also find similar installation instructions using a 32 bit key id, where the attack is really cheap.

Going back to the installation instructions from above we can imagine the following attack: A man in the middle network attacker can intercept the connection to the keyserver - it's not encrypted or authenticated - and provide the victim a colliding key. Afterwards the key is imported by the victim, so the attacker can provide repositories with packages signed by his key, ultimately leading to code execution.

You may notice that there's a problem with this attack: The repository provided by HHVM is using HTTPS. Thus the attacker can not simply provide a rogue HHVM repository. However the attack still works.

The imported PGP key is not bound to any specific repository. Thus if the victim has any non-HTTPS repository configured in his system the attacker can provide a rogue repository on the next call of "apt update". Notably by default both Debian and Ubuntu use HTTP for their repositories (a Debian developer even runs a dedicated web page explaining why this is no big deal).

Attack 3: Key over HTTP

Issues with package keys aren't confined to Debian/APT-based distributions. I found these installation instructions at Dropbox (Link to Wayback Machine, as Dropbox has changed them after I reported this):

It should be obvious what the issue here is: Both the key and the repository are fetched over HTTP, a network attacker can simply provide his own key and repository.

Discussion

The standard answer you often get when you point out security problems with PGP-based systems is: "It's not PGP/GnuPG, people are just using it wrong". But I believe these issues show some deeper problems with the PGP ecosystem. The key flooding issue is inherited from the systemically flawed concept of the append only key servers.

The other issue here is lack of deprecation. Short key ids are problematic, it's been known for a long time and there have been plenty of calls to get rid of them. This begs the question why no effort has been made to deprecate support for them. One could have said at some point: Future versions of GnuPG will show a warning for short key ids and in three years we will stop supporting them.

This reminds of other issues like unauthenticated encryption, where people have been arguing that this was fixed back in 1999 by the introduction of the MDC. Yet in 2018 it was still exploitable, because the unauthenticated version was never properly deprecated.

Fix

For all people having installation instructions for external repositories my recommendation would be to avoid any use of public key servers. Host the keys on your own infrastructure and provide them via HTTPS. Furthermore any reference to 32 bit or 64 bit key ids should be avoided.

Update: Some people have pointed out to me that the Debian Wiki contains guidelines for third party repositories that avoid the issues mentioned here.

Let's look at an example of the use of PGP signatures for deb packages, the Ubuntu Linux installation instructions for HHVM. HHVM is an implementation of the HACK programming language and developed by Facebook. I'm just using HHVM as an example here, as it nicely illustrates two attacks I want to talk about, but you'll find plenty of similar installation instructions for other software packages. I have reported these issues to Facebook, but they decided not to change anything.

The instructions for Ubuntu (and very similarly for Debian) recommend that users execute these commands in order to install HHVM from the repository of its developers:

apt-get update

apt-get install software-properties-common apt-transport-https

apt-key adv --recv-keys --keyserver hkp://keyserver.ubuntu.com:80 0xB4112585D386EB94

add-apt-repository https://dl.hhvm.com/ubuntu

apt-get update

apt-get install hhvm

apt-get install software-properties-common apt-transport-https

apt-key adv --recv-keys --keyserver hkp://keyserver.ubuntu.com:80 0xB4112585D386EB94

add-apt-repository https://dl.hhvm.com/ubuntu

apt-get update

apt-get install hhvm

The crucial part here is the line starting with apt-key. It fetches the key that is used to sign the repository from the Ubuntu key server, which itself is part of the PGP keyserver network.

Attack 1: Flooding Key with Signatures

Attack 1: Flooding Key with SignaturesThe first possible attack is actually quite simple: One can make the signature key offered here unusable by appending many signatures.

A key concept of the PGP keyservers is that they operate append-only. New data gets added, but never removed. PGP keys can sign other keys and these signatures can also be uploaded to the keyservers and get added to a key. Crucially the owner of a key has no influence on this.

This means everyone can grow the size of a key by simply adding many signatures to it. Lately this has happened to a number of keys, see the blog posts by Daniel Kahn Gillmor and Robert Hansen, two members of the PGP community who have personally been affected by this. The effect of this is that when GnuPG tries to import such a key it becomes excessively slow and at some point will simply not work any more.

For the above installation instructions this means anyone can make them unusable by attacking the referenced release key. In my tests I was still able to import one of the attacked keys with apt-key after several minutes, but these keys "only" have a few ten thousand signatures, growing them to a few megabytes size. There's no reason an attacker couldn't use millions of signatures and grow single keys to gigabytes.

Attack 2: Rogue packages with a colliding Key Id

The installation instructions reference the key as 0xB4112585D386EB94, which is a 64 bit hexadecimal key id.

Key ids are a central concept in the PGP ecosystem. The key id is a truncated SHA1 hash of the public key. It's possible to either use the last 32 bit, 64 bit or the full 160 bit of the hash.

It's been pointed out in the past that short key ids allow colliding key ids. This means an attacker can generate a different key with the same key id where he owns the private key simply by bruteforcing the id. In 2014 Richard Klafter and Eric Swanson showed with the Evil32 attack how to create colliding key ids for all keys in the so-called strong set (meaning all keys that are connected with most other keys in the web of trust). Later someone unknown uploaded these keys to the key servers causing quite some confusion.

It should be noted that the issue of colliding key ids was known and discussed in the community way earlier, see for example this discussion from 2002.

The practical attacks targeted 32 bit key ids, but the same attack works against 64 bit key ids, too, it just costs more. I contacted the authors of the Evil32 attack and Eric Swanson estimated in a back of the envelope calculation that it would cost roughly $ 120.000 to perform such an attack with GPUs on cloud providers. This is expensive, but within the possibilities of powerful attackers. Though one can also find similar installation instructions using a 32 bit key id, where the attack is really cheap.

Going back to the installation instructions from above we can imagine the following attack: A man in the middle network attacker can intercept the connection to the keyserver - it's not encrypted or authenticated - and provide the victim a colliding key. Afterwards the key is imported by the victim, so the attacker can provide repositories with packages signed by his key, ultimately leading to code execution.

You may notice that there's a problem with this attack: The repository provided by HHVM is using HTTPS. Thus the attacker can not simply provide a rogue HHVM repository. However the attack still works.

The imported PGP key is not bound to any specific repository. Thus if the victim has any non-HTTPS repository configured in his system the attacker can provide a rogue repository on the next call of "apt update". Notably by default both Debian and Ubuntu use HTTP for their repositories (a Debian developer even runs a dedicated web page explaining why this is no big deal).

Attack 3: Key over HTTP

Issues with package keys aren't confined to Debian/APT-based distributions. I found these installation instructions at Dropbox (Link to Wayback Machine, as Dropbox has changed them after I reported this):

Add the following to /etc/yum.conf.

name=Dropbox Repository

baseurl=http://linux.dropbox.com/fedora/\$releasever/

gpgkey=http://linux.dropbox.com/fedora/rpm-public-key.asc

name=Dropbox Repository

baseurl=http://linux.dropbox.com/fedora/\$releasever/

gpgkey=http://linux.dropbox.com/fedora/rpm-public-key.asc

It should be obvious what the issue here is: Both the key and the repository are fetched over HTTP, a network attacker can simply provide his own key and repository.

Discussion

The standard answer you often get when you point out security problems with PGP-based systems is: "It's not PGP/GnuPG, people are just using it wrong". But I believe these issues show some deeper problems with the PGP ecosystem. The key flooding issue is inherited from the systemically flawed concept of the append only key servers.

The other issue here is lack of deprecation. Short key ids are problematic, it's been known for a long time and there have been plenty of calls to get rid of them. This begs the question why no effort has been made to deprecate support for them. One could have said at some point: Future versions of GnuPG will show a warning for short key ids and in three years we will stop supporting them.

This reminds of other issues like unauthenticated encryption, where people have been arguing that this was fixed back in 1999 by the introduction of the MDC. Yet in 2018 it was still exploitable, because the unauthenticated version was never properly deprecated.

Fix

For all people having installation instructions for external repositories my recommendation would be to avoid any use of public key servers. Host the keys on your own infrastructure and provide them via HTTPS. Furthermore any reference to 32 bit or 64 bit key ids should be avoided.

Update: Some people have pointed out to me that the Debian Wiki contains guidelines for third party repositories that avoid the issues mentioned here.

Posted by Hanno Böck

in Code, English, Linux, Security

at

14:17

| Comments (5)

| Trackback (1)

Defined tags for this entry: apt, cryptography, deb, debian, fedora, gnupg, gpg, linux, openpgp, packagemanagement, pgp, rpm, security, signatures, ubuntu

Thursday, June 15. 2017

Don't leave Coredumps on Web Servers

Coredumps are a feature of Linux and other Unix systems to analyze crashing software. If a software crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called

Coredumps are a feature of Linux and other Unix systems to analyze crashing software. If a software crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called core.While this is useful for debugging purposes it can produce a security risk. If a web application crashes the coredump may simply end up in the web server's root folder. Given that its file name is known an attacker can simply download it via an URL of the form

https://example.org/core. As coredumps contain an application's memory they may expose secret information. A very typical example would be passwords.PHP used to crash relatively often. Recently a lot of these crash bugs have been fixed, in part because PHP now has a bug bounty program. But there are still situations in which PHP crashes. Some of them likely won't be fixed.

How to disclose?

With a scan of the Alexa Top 1 Million domains for exposed core dumps I found around 1.000 vulnerable hosts. I was faced with a challenge: How can I properly disclose this? It is obvious that I wouldn't write hundreds of manual mails. So I needed an automated way to contact the site owners.

Abusix runs a service where you can query the abuse contacts of IP addresses via a DNS query. This turned out to be very useful for this purpose. One could also imagine contacting domain owners directly, but that's not very practical. The domain whois databases have rate limits and don't always expose contact mail addresses in a machine readable way.

Using the abuse contacts doesn't reach all of the affected host operators. Some abuse contacts were nonexistent mail addresses, others didn't have abuse contacts at all. I also got all kinds of automated replies, some of them asking me to fill out forms or do other things, otherwise my message wouldn't be read. Due to the scale I ignored those. I feel that if people make it hard for me to inform them about security problems that's not my responsibility.

I took away two things that I changed in a second batch of disclosures. Some abuse contacts seem to automatically search for IP addresses in the abuse mails. I originally only included affected URLs. So I changed that to include the affected IPs as well.

In many cases I was informed that the affected hosts are not owned by the company I contacted, but by a customer. Some of them asked me if they're allowed to forward the message to them. I thought that would be obvious, but I made it explicit now. Some of them asked me that I contact their customers, which again, of course, is impractical at scale. And sorry: They are your customers, not mine.

How to fix and prevent it?

If you have a coredump on your web host, the obvious fix is to remove it from there. However you obviously also want to prevent this from happening again.

There are two settings that impact coredump creation: A limits setting, configurable via

/etc/security/limits.conf and ulimit and a sysctl interface that can be found under /proc/sys/kernel/core_pattern.The limits setting is a size limit for coredumps. If it is set to zero then no core dumps are created. To set this as the default you can add something like this to your

limits.conf:* soft core 0The sysctl interface sets a pattern for the file name and can also contain a path. You can set it to something like this:

/var/log/core/core.%e.%p.%h.%tThis would store all coredumps under

/var/log/core/ and add the executable name, process id, host name and timestamp to the filename. The directory needs to be writable by all users, you should use a directory with the sticky bit (chmod +t).If you set this via the proc file interface it will only be temporary until the next reboot. To set this permanently you can add it to

/etc/sysctl.conf:kernel.core_pattern = /var/log/core/core.%e.%p.%h.%tSome Linux distributions directly forward core dumps to crash analysis tools. This can be done by prefixing the pattern with a pipe (|). These tools like apport from Ubuntu or abrt from Fedora have also been the source of security vulnerabilities in the past. However that's a separate issue.

Look out for coredumps

My scans showed that this is a relatively common issue. Among popular web pages around one in a thousand were affected before my disclosure attempts. I recommend that pentesters and developers of security scan tools consider checking for this. It's simple: Just try download the

/core file and check if it looks like an executable. In most cases it will be an ELF file, however sometimes it may be a Mach-O (OS X) or an a.out file (very old Linux and Unix systems).Image credit: NASA/JPL-Université Paris Diderot

Posted by Hanno Böck

in English, Gentoo, Linux, Security

at

11:20

| Comments (0)

| Trackback (1)

Defined tags for this entry: core, coredump, crash, linux, php, segfault, vulnerability, webroot, websecurity, webserver

Tuesday, January 26. 2016

Safer use of C code - running Gentoo with Address Sanitizer

Update: When I wrote this blog post it was an open question for me whether using Address Sanitizer in production is a good idea. A recent analysis posted on the oss-security mailing list explains in detail why using Asan in its current form is almost certainly not a good idea. Having any suid binary built with Asan enables a local root exploit - and there are various other issues. Therefore using Gentoo with Address Sanitizer is only recommended for developing and debugging purposes.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.

Address Sanitizer is mainly intended to be a debugging tool. It is usually used to test single applications, often in combination with fuzzing. But as Address Sanitizer can prevent many typical C security bugs - why not use it in production? It doesn't come for free. Address Sanitizer takes significantly more memory and slows down applications by 50 - 100 %. But for some security sensitive applications this may be a reasonable trade-off. The Tor project is already experimenting with this with its Hardened Tor Browser.

One project I've been working on in the past months is to allow a Gentoo system to be compiled with Address Sanitizer. Today I'm publishing this and want to allow others to test it. I have created a page in the Gentoo Wiki that should become the central documentation hub for this project. I published an overlay with several fixes and quirks on Github.

I see this work as part of my Fuzzing Project. (I'm posting it here because the Gentoo category of my personal blog gets indexed by Planet Gentoo.)

I am not sure if using Gentoo with Address Sanitizer is reasonable for a production system. One thing that makes me uneasy in suggesting this for high security requirements is that it's currently incompatible with Grsecurity. But just creating this project already caused me to find a whole number of bugs in several applications. Some notable examples include Coreutils/shred, Bash ([2], [3]), man-db, Pidgin-OTR, Courier, Syslog-NG, Screen, Claws-Mail ([2], [3]), ProFTPD ([2], [3]) ICU, TCL ([2]), Dovecot. I think it was worth the effort.

I will present this work in a talk at FOSDEM in Brussels this Saturday, 14:00, in the Security Devroom.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.Address Sanitizer is mainly intended to be a debugging tool. It is usually used to test single applications, often in combination with fuzzing. But as Address Sanitizer can prevent many typical C security bugs - why not use it in production? It doesn't come for free. Address Sanitizer takes significantly more memory and slows down applications by 50 - 100 %. But for some security sensitive applications this may be a reasonable trade-off. The Tor project is already experimenting with this with its Hardened Tor Browser.

One project I've been working on in the past months is to allow a Gentoo system to be compiled with Address Sanitizer. Today I'm publishing this and want to allow others to test it. I have created a page in the Gentoo Wiki that should become the central documentation hub for this project. I published an overlay with several fixes and quirks on Github.

I see this work as part of my Fuzzing Project. (I'm posting it here because the Gentoo category of my personal blog gets indexed by Planet Gentoo.)

I am not sure if using Gentoo with Address Sanitizer is reasonable for a production system. One thing that makes me uneasy in suggesting this for high security requirements is that it's currently incompatible with Grsecurity. But just creating this project already caused me to find a whole number of bugs in several applications. Some notable examples include Coreutils/shred, Bash ([2], [3]), man-db, Pidgin-OTR, Courier, Syslog-NG, Screen, Claws-Mail ([2], [3]), ProFTPD ([2], [3]) ICU, TCL ([2]), Dovecot. I think it was worth the effort.

I will present this work in a talk at FOSDEM in Brussels this Saturday, 14:00, in the Security Devroom.

Posted by Hanno Böck

in Code, English, Gentoo, Linux, Security

at

01:40

| Comments (5)

| Trackbacks (0)

Defined tags for this entry: addresssanitizer, asan, bufferoverflow, c, clang, gcc, gentoo, linux, memorysafety, security, useafterfree

Tuesday, June 23. 2015

The tricky security issue with FollowSymLinks and Apache

tl;dr Most servers running a multi-user webhosting setup with Apache HTTPD probably have a security problem. Unless you're using Grsecurity there is no easy fix.

I am part of a small webhosting business that I run as a side project since quite a while. We offer customers user accounts on our servers running Gentoo Linux and webspace with the typical Apache/PHP/MySQL combination. We recently became aware of a security problem regarding Symlinks. I wanted to share this, because I was appalled by the fact that there was no obvious solution.

Apache has an option FollowSymLinks which basically does what it says. If a symlink in a webroot is accessed the webserver will follow it. In a multi-user setup this is a security problem. Here's why: If I know that another user on the same system is running a typical web application - let's say Wordpress - I can create a symlink to his config file (for Wordpress that's wp-config.php). I can't see this file with my own user account. But the webserver can see it, so I can access it with the browser over my own webpage. As I'm usually allowed to disable PHP I'm able to prevent the server from interpreting the file, so I can read the other user's database credentials. The webserver needs to be able to see all files, therefore this works. While PHP and CGI scripts usually run with user's rights (at least if the server is properly configured) the files are still read by the webserver. For this to work I need to guess the path and name of the file I want to read, but that's often trivial. In our case we have default paths in the form /home/[username]/websites/[hostname]/htdocs where webpages are located.

So the obvious solution one might think about is to disable the FollowSymLinks option and forbid users to set it themselves. However symlinks in web applications are pretty common and many will break if you do that. It's not feasible for a common webhosting server.

Apache supports another Option called SymLinksIfOwnerMatch. It's also pretty self-explanatory, it will only follow symlinks if they belong to the same user. That sounds like it solves our problem. However there are two catches: First of all the Apache documentation itself says that "this option should not be considered a security restriction". It is still vulnerable to race conditions.

But even leaving the race condition aside it doesn't really work. Web applications using symlinks will usually try to set FollowSymLinks in their .htaccess file. An example is Drupal which by default comes with such an .htaccess file. If you forbid users to set FollowSymLinks then the option won't be just ignored, the whole webpage won't run and will just return an error 500. What you could do is changing the FollowSymLinks option in the .htaccess manually to SymlinksIfOwnerMatch. While this may be feasible in some cases, if you consider that you have a lot of users you don't want to explain to all of them that in case they want to install some common web application they have to manually edit some file they don't understand. (There's a bug report for Drupal asking to change FollowSymLinks to SymlinksIfOwnerMatch, but it's been ignored since several years.)

So using SymLinksIfOwnerMatch is neither secure nor really feasible. The documentation for Cpanel discusses several possible solutions. The recommended solutions require proprietary modules. None of the proposed fixes work with a plain Apache setup, which I think is a pretty dismal situation. The most common web server has a severe security weakness in a very common situation and no usable solution for it.

The one solution that we chose is a feature of Grsecurity. Grsecurity is a Linux kernel patch that greatly enhances security and we've been very happy with it in the past. There are a lot of reasons to use this patch, I'm often impressed that local root exploits very often don't work on a Grsecurity system.

Grsecurity has an option like SymlinksIfOwnerMatch (CONFIG_GRKERNSEC_SYMLINKOWN) that operates on the kernel level. You can define a certain user group (which in our case is the "apache" group) for which this option will be enabled. For us this was the best solution, as it required very little change.

I haven't checked this, but I'm pretty sure that we were not alone with this problem. I'd guess that a lot of shared web hosting companies are vulnerable to this problem.

Here's the German blog post on our webpage and here's the original blogpost from an administrator at Uberspace (also German) which made us aware of this issue.

I am part of a small webhosting business that I run as a side project since quite a while. We offer customers user accounts on our servers running Gentoo Linux and webspace with the typical Apache/PHP/MySQL combination. We recently became aware of a security problem regarding Symlinks. I wanted to share this, because I was appalled by the fact that there was no obvious solution.

Apache has an option FollowSymLinks which basically does what it says. If a symlink in a webroot is accessed the webserver will follow it. In a multi-user setup this is a security problem. Here's why: If I know that another user on the same system is running a typical web application - let's say Wordpress - I can create a symlink to his config file (for Wordpress that's wp-config.php). I can't see this file with my own user account. But the webserver can see it, so I can access it with the browser over my own webpage. As I'm usually allowed to disable PHP I'm able to prevent the server from interpreting the file, so I can read the other user's database credentials. The webserver needs to be able to see all files, therefore this works. While PHP and CGI scripts usually run with user's rights (at least if the server is properly configured) the files are still read by the webserver. For this to work I need to guess the path and name of the file I want to read, but that's often trivial. In our case we have default paths in the form /home/[username]/websites/[hostname]/htdocs where webpages are located.

So the obvious solution one might think about is to disable the FollowSymLinks option and forbid users to set it themselves. However symlinks in web applications are pretty common and many will break if you do that. It's not feasible for a common webhosting server.

Apache supports another Option called SymLinksIfOwnerMatch. It's also pretty self-explanatory, it will only follow symlinks if they belong to the same user. That sounds like it solves our problem. However there are two catches: First of all the Apache documentation itself says that "this option should not be considered a security restriction". It is still vulnerable to race conditions.

But even leaving the race condition aside it doesn't really work. Web applications using symlinks will usually try to set FollowSymLinks in their .htaccess file. An example is Drupal which by default comes with such an .htaccess file. If you forbid users to set FollowSymLinks then the option won't be just ignored, the whole webpage won't run and will just return an error 500. What you could do is changing the FollowSymLinks option in the .htaccess manually to SymlinksIfOwnerMatch. While this may be feasible in some cases, if you consider that you have a lot of users you don't want to explain to all of them that in case they want to install some common web application they have to manually edit some file they don't understand. (There's a bug report for Drupal asking to change FollowSymLinks to SymlinksIfOwnerMatch, but it's been ignored since several years.)

So using SymLinksIfOwnerMatch is neither secure nor really feasible. The documentation for Cpanel discusses several possible solutions. The recommended solutions require proprietary modules. None of the proposed fixes work with a plain Apache setup, which I think is a pretty dismal situation. The most common web server has a severe security weakness in a very common situation and no usable solution for it.

The one solution that we chose is a feature of Grsecurity. Grsecurity is a Linux kernel patch that greatly enhances security and we've been very happy with it in the past. There are a lot of reasons to use this patch, I'm often impressed that local root exploits very often don't work on a Grsecurity system.

Grsecurity has an option like SymlinksIfOwnerMatch (CONFIG_GRKERNSEC_SYMLINKOWN) that operates on the kernel level. You can define a certain user group (which in our case is the "apache" group) for which this option will be enabled. For us this was the best solution, as it required very little change.

I haven't checked this, but I'm pretty sure that we were not alone with this problem. I'd guess that a lot of shared web hosting companies are vulnerable to this problem.

Here's the German blog post on our webpage and here's the original blogpost from an administrator at Uberspace (also German) which made us aware of this issue.

Friday, January 30. 2015

What the GHOST tells us about free software vulnerability management

GHOST itself is a Heap Overflow in the name resolution function of the Glibc. The Glibc is the standard C library on Linux systems, almost every software that runs on a Linux system uses it. It is somewhat unclear right now how serious GHOST really is. A lot of software uses the affected function gethostbyname(), but a lot of conditions have to be met to make this vulnerability exploitable. Right now the most relevant attack is against the mail server exim where Qualys has developed a working exploit which they plan to release soon. There have been speculations whether GHOST might be exploitable through Wordpress, which would make it much more serious.

Technically GHOST is a heap overflow, which is a very common bug in C programming. C is inherently prone to these kinds of memory corruption errors and there are essentially two things here to move forwards: Improve the use of exploit mitigation techniques like ASLR and create new ones (levee is an interesting project, watch this 31C3 talk). And if possible move away from C altogether and develop core components in memory safe languages (I have high hopes for the Mozilla Servo project, watch this linux.conf.au talk).

GHOST was discovered three times

But the thing I want to elaborate here is something different about GHOST: It turns out that it has been discovered independently three times. It was already fixed in 2013 in the Glibc Code itself. The commit message didn't indicate that it was a security vulnerability. Then in early 2014 developers at Google found it again using Address Sanitizer (which – by the way – tells you that all software developers should use Address Sanitizer more often to test their software). Google fixed it in Chrome OS and explicitly called it an overflow and a vulnerability. And then recently Qualys found it again and made it public.

Now you may wonder why a vulnerability fixed in 2013 made headlines in 2015. The reason is that it widely wasn't fixed because it wasn't publicly known that it was serious. I don't think there was any malicious intent. The original Glibc fix was probably done without anyone noticing that it is serious and the Google devs may have thought that the fix is already public, so they don't need to make any noise about it. But we can clearly see that something doesn't work here. Which brings us to a discussion how the Linux and free software world in general and vulnerability management in particular work.

The “Never touch a running system” principle

Quite early when I came in contact with computers I heard the phrase “Never touch a running system”. This may have been a reasonable approach to IT systems back then when computers usually weren't connected to any networks and when remote exploits weren't a thing, but it certainly isn't a good idea today in a world where almost every computer is part of the Internet. Because once new security vulnerabilities become public you should change your system and fix them. However that doesn't change the fact that many people still operate like that.

A number of Linux distributions provide “stable” or “Long Time Support” versions. Basically the idea is this: At some point they take the current state of their systems and further updates will only contain important fixes and security updates. They guarantee to fix security vulnerabilities for a certain time frame. This is kind of a compromise between the “Never touch a running system” approach and reasonable security. It tries to give you a system that will basically stay the same, but you get fixes for security issues. Popular examples for this approach are the stable branch of Debian, Ubuntu LTS versions and the Enterprise versions of Red Hat and SUSE.

To give you an idea about time frames, Debian currently supports the stable trees Squeeze (6.0) which was released 2011 and Wheezy (7.0) which was released 2013. Red Hat Enterprise Linux has currently 4 supported version (4, 5, 6, 7), the oldest one was originally released in 2005. So we're talking about pretty long time frames that these systems get supported. Ubuntu and Suse have similar long time supported Systems.

These systems are delivered with an implicit promise: We will take care of security and if you update regularly you'll have a system that doesn't change much, but that will be secure against know threats. Now the interesting question is: How well do these systems deliver on that promise and how hard is that?

Vulnerability management is chaotic and fragile

I'm not sure how many people are aware how vulnerability management works in the free software world. It is a pretty fragile and chaotic process. There is no standard way things work. The information is scattered around many different places. Different people look for vulnerabilities for different reasons. Some are developers of the respective projects themselves, some are companies like Google that make use of free software projects, some are just curious people interested in IT security or researchers. They report a bug through the channels of the respective project. That may be a mailing list, a bug tracker or just a direct mail to the developer. Hopefully the developers fix the issue. It does happen that the person finding the vulnerability first has to explain to the developer why it actually is a vulnerability. Sometimes the fix will happen in a public code repository, sometimes not. Sometimes the developer will mention that it is a vulnerability in the commit message or the release notes of the new version, sometimes not. There are notorious projects that refuse to handle security vulnerabilities in a transparent way. Sometimes whoever found the vulnerability will post more information on his/her blog or on a mailing list like full disclosure or oss-security. Sometimes not. Sometimes vulnerabilities get a CVE id assigned, sometimes not.

Add to that the fact that in many cases it's far from clear what is a security vulnerability. It is absolutely common that if you ask the people involved whether this is serious the best and most honest answer they can give is “we don't know”. And very often bugs get fixed without anyone noticing that it even could be a security vulnerability.

Then there are projects where the number of security vulnerabilities found and fixed is really huge. The latest Chrome 40 release had 62 security fixes, version 39 had 42. Chrome releases a new version every two months. Browser vulnerabilities are found and fixed on a daily basis. Not that extreme but still high is the vulnerability count in PHP, which is especially worrying if you know that many webhosting providers run PHP versions not supported any more.

So you probably see my point: There is a very chaotic stream of information in various different places about bugs and vulnerabilities in free software projects. The number of vulnerabilities is huge. Making a promise that you will scan all this information for security vulnerabilities and backport the patches to your operating system is a big promise. And I doubt anyone can fulfill that.

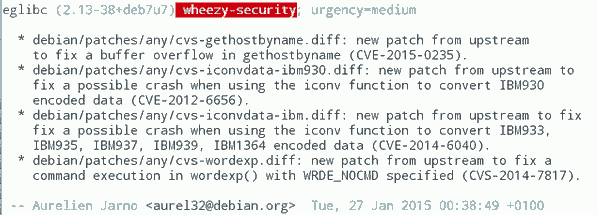

GHOST is a single example, so you might ask how often these things happen. At some point right after GHOST became public this excerpt from the Debian Glibc changelog caught my attention (excuse the bad quality, had to take the image from Twitter because I was unable to find that changelog on Debian's webpages):

What you can see here: While Debian fixed GHOST (which is CVE-2015-0235) they also fixed CVE-2012-6656 – a security issue from 2012. Admittedly this is a minor issue, but it's a vulnerability nevertheless. A quick look at the Debian changelog of Chromium both in squeeze and wheezy will tell you that they aren't fixing all the recent security issues in it. (Debian already had discussions about removing Chromium and in Wheezy they don't stick to a single version.)

It would be an interesting (and time consuming) project to take a package like PHP and check for all the security vulnerabilities whether they are fixed in the latest packages in Debian Squeeze/Wheezy, all Red Hat Enterprise versions and other long term support systems. PHP is probably more interesting than browsers, because the high profile targets for these vulnerabilities are servers. What worries me: I'm pretty sure some people already do that. They just won't tell you and me, instead they'll write their exploits and sell them to repressive governments or botnet operators.

Then there are also stories like this: Tavis Ormandy reported a security issue in Glibc in 2012 and the people from Google's Project Zero went to great lengths to show that it is actually exploitable. Reading the Glibc bug report you can learn that this was already reported in 2005(!), just nobody noticed back then that it was a security issue and it was minor enough that nobody cared to fix it.

There are also bugs that require changes so big that backporting them is essentially impossible. In the TLS world a lot of protocol bugs have been highlighted in recent years. Take Lucky Thirteen for example. It is a timing sidechannel in the way the TLS protocol combines the CBC encryption, padding and authentication. I like to mention this bug because I like to quote it as the TLS bug that was already mentioned in the specification (RFC 5246, page 23: "This leaves a small timing channel"). The real fix for Lucky Thirteen is not to use the erratic CBC mode any more and switch to authenticated encryption modes which are part of TLS 1.2. (There's another possible fix which is using Encrypt-then-MAC, but it is hardly deployed.) Up until recently most encryption libraries didn't support TLS 1.2. Debian Squeeze and Red Hat Enterprise 5 ship OpenSSL versions that only support TLS 1.0. There is no trivial patch that could be backported, because this is a huge change. What they likely backported are workarounds that avoid the timing channel. This will stop the attack, but it is not a very good fix, because it keeps the problematic old protocol and will force others to stay compatible with it.

LTS and stable distributions are there for a reason

The big question is of course what to do about it. OpenBSD developer Ted Unangst wrote a blog post yesterday titled Long term support considered harmful, I suggest you read it. He argues that we should get rid of long term support completely and urge users to upgrade more often. OpenBSD has a 6 month release cycle and supports two releases, so one version gets supported for one year.

Given what I wrote before you may think that I agree with him, but I don't. While I personally always avoided to use too old systems – I 'm usually using Gentoo which doesn't have any snapshot releases at all and does rolling releases – I can see the value in long term support releases. There are a lot of systems out there – connected to the Internet – that are never updated. Taking away the option to install systems and let them run with relatively little maintenance overhead over several years will probably result in more systems never receiving any security updates. With all its imperfectness running a Debian Squeeze with the latest updates is certainly better than running an operating system from 2011 that stopped getting security fixes in 2012.

Improving the information flow

I don't think there is a silver bullet solution, but I think there are things we can do to improve the situation. What could be done is to coordinate and share the work. Debian, Red Hat and other distributions with stable/LTS versions could agree that their next versions are based on a specific Glibc version and they collaboratively work on providing patch sets to fix all the vulnerabilities in it. This already somehow happens with upstream projects providing long term support versions, the Linux kernel does that for example. Doing that at scale would require vast organizational changes in the Linux distributions. They would have to agree on a roughly common timescale to start their stable versions.

What I'd consider the most crucial thing is to improve and streamline the information flow about vulnerabilities. When Google fixes a vulnerability in Chrome OS they should make sure this information is shared with other Linux distributions and the public. And they should know where and how they should share this information.

One mechanism that tries to organize the vulnerability process is the system of CVE ids. The idea is actually simple: Publicly known vulnerabilities get a fixed id and they are in a public database. GHOST is CVE-2015-0235 (the scheme will soon change because four digits aren't enough for all the vulnerabilities we find every year). I got my first CVEs assigned in 2007, so I have some experiences with the CVE system and they are rather mixed. Sometimes I briefly mention rather minor issues in a mailing list thread and a CVE gets assigned right away. Sometimes I explicitly ask for CVE assignments and never get an answer.

I would like to see that we just assign CVEs for everything that even remotely looks like a security vulnerability. However right now I think the process is to unreliable to deliver that. There are other public vulnerability databases like OSVDB, I have limited experience with them, so I can't judge if they'd be better suited. Unfortunately sometimes people hesitate to request CVE ids because others abuse the CVE system to count assigned CVEs and use this as a metric how secure a product is. Such bad statistics are outright dangerous, because it gives people an incentive to downplay vulnerabilities or withhold information about them.

This post was partly inspired by some discussions on oss-security

Posted by Hanno Böck

in Code, English, Gentoo, Linux, Security

at

00:52

| Comments (5)

| Trackbacks (0)

Monday, October 6. 2014

How to stop Bleeding Hearts and Shocking Shells

The free software community was recently shattered by two security bugs called Heartbleed and Shellshock. While technically these bugs where quite different I think they still share a lot.

The free software community was recently shattered by two security bugs called Heartbleed and Shellshock. While technically these bugs where quite different I think they still share a lot.Heartbleed hit the news in April this year. A bug in OpenSSL that allowed to extract privat keys of encrypted connections. When a bug in Bash called Shellshock hit the news I was first hesistant to call it bigger than Heartbleed. But now I am pretty sure it is. While Heartbleed was big there were some things that alleviated the impact. It took some days till people found out how to practically extract private keys - and it still wasn't fast. And the most likely attack scenario - stealing a private key and pulling off a Man-in-the-Middle-attack - seemed something that'd still pose some difficulties to an attacker. It seemed that people who update their systems quickly (like me) weren't in any real danger.

Shellshock was different. It's astonishingly simple to use and real attacks started hours after it became public. If circumstances had been unfortunate there would've been a very real chance that my own servers could've been hit by it. I usually feel the IT stuff under my responsibility is pretty safe, so things like this scare me.

What OpenSSL and Bash have in common

Shortly after Heartbleed something became very obvious: The OpenSSL project wasn't in good shape. The software that pretty much everyone in the Internet uses to do encryption was run by a small number of underpaid people. People trying to contribute and submit patches were often ignored (I know that, I tried it). The truth about Bash looks even grimmer: It's a project mostly run by a single volunteer. And yet almost every large Internet company out there uses it. Apple installs it on every laptop. OpenSSL and Bash are crucial pieces of software and run on the majority of the servers that run the Internet. Yet they are very small projects backed by few people. Besides they are both quite old, you'll find tons of legacy code in them written more than a decade ago.

People like to rant about the code quality of software like OpenSSL and Bash. However I am not that concerned about these two projects. This is the upside of events like these: OpenSSL is probably much securer than it ever was and after the dust settles Bash will be a better piece of software. If you want to ask yourself where the next Heartbleed/Shellshock-alike bug will happen, ask this: What projects are there that are installed on almost every Linux system out there? And how many of them have a healthy community and received a good security audit lately?

Software installed on almost any Linux system

Let me propose a little experiment: Take your favorite Linux distribution, make a minimal installation without anything and look what's installed. These are the software projects you should worry about. To make things easier I did this for you. I took my own system of choice, Gentoo Linux, but the results wouldn't be very different on other distributions. The results are at at the bottom of this text. (I removed everything Gentoo-specific.) I admit this is oversimplifying things. Some of these provide more attack surface than others, we should probably worry more about the ones that are directly involved in providing network services.

After Heartbleed some people already asked questions like these. How could it happen that a project so essential to IT security is so underfunded? Some large companies acted and the result is the Core Infrastructure Initiative by the Linux Foundation, which already helped improving OpenSSL development. This is a great start and an example for an initiative of which we should have more. We should ask the large IT companies who are not part of that initiative what they are doing to improve overall Internet security.

Just to put this into perspective: A thorough security audit of a project like Bash would probably require a five figure number of dollars. For a small, volunteer driven project this is huge. For a company like Apple - the one that installed Bash on all their laptops - it's nearly nothing.

There's another recent development I find noteworthy. Google started Project Zero where they hired some of the brightest minds in IT security and gave them a single job: Search for security bugs. Not in Google's own software. In every piece of software out there. This is not merely an altruistic project. It makes sense for Google. They want the web to be a safer place - because the web is where they earn their money. I like that approach a lot and I have only one question to ask about it: Why doesn't every large IT company have a Project Zero?

Sparking interest

There's another aspect I want to talk about. After Heartbleed people started having a closer look at OpenSSL and found a number of small and one other quite severe issue. After Bash people instantly found more issues in the function parser and we now have six CVEs for Shellshock and friends. When a piece of software is affected by a severe security bug people start to look for more. I wonder what it'd take to have people looking at the projects that aren't in the spotlight.

I was brainstorming if we could have something like a "free software audit action day". A regular call where an important but neglected project is chosen and the security community is asked to have a look at it. This is just a vague idea for now, if you like it please leave a comment.

That's it. I refrain from having discussions whether bugs like Heartbleed or Shellshock disprove the "many eyes"-principle that free software advocates like to cite, because I think these discussions are a pointless waste of time. I'd like to discuss how to improve things. Let's start.

Here's the promised list of Gentoo packages in the standard installation:

bzip2

gzip

tar

unzip

xz-utils

nano

ca-certificates

mime-types

pax-utils

bash

build-docbook-catalog

docbook-xml-dtd

docbook-xsl-stylesheets

openjade

opensp

po4a

sgml-common

perl

python

elfutils

expat

glib

gmp

libffi

libgcrypt

libgpg-error

libpcre

libpipeline

libxml2

libxslt

mpc

mpfr

openssl

popt

Locale-gettext

SGMLSpm

TermReadKey

Text-CharWidth

Text-WrapI18N

XML-Parser

gperf

gtk-doc-am

intltool

pkgconfig

iputils

netifrc

openssh

rsync

wget

acl

attr

baselayout

busybox

coreutils

debianutils

diffutils

file

findutils

gawk

grep

groff

help2man

hwids

kbd

kmod

less

man-db

man-pages

man-pages-posix

net-tools

sed

shadow

sysvinit

tcp-wrappers

texinfo

util-linux

which

pambase

autoconf

automake

binutils

bison

flex

gcc

gettext

gnuconfig

libtool

m4

make

patch

e2fsprogs

udev

linux-headers

cracklib

db

e2fsprogs-libs

gdbm

glibc

libcap

ncurses

pam

readline

timezone-data

zlib

procps

psmisc

shared-mime-info

Posted by Hanno Böck

in English, Gentoo, Linux, Security

at

23:35

| Comments (10)

| Trackback (1)

Defined tags for this entry: bash, freesoftware, heartbleed, linux, openssl, security, shellshock, vulnerability

Friday, October 3. 2014

New laptop Lenovo Thinkpad X1 Carbon 20A7

While I got along well with my Thinkpad T61 laptop, for quite some time I had the plan to get a new one soon. It wasn't an easy decision and I looked in detail at the models available in recent months. I finally decided to buy one of Lenovo's Thinkpad X1 Carbon laptops in its 2014 edition. The X1 Carbon was introduced in 2012, however a completely new variant which is very different from the first one was released early 2014. To distinguish it from other models it is the 20A7 model.

While I got along well with my Thinkpad T61 laptop, for quite some time I had the plan to get a new one soon. It wasn't an easy decision and I looked in detail at the models available in recent months. I finally decided to buy one of Lenovo's Thinkpad X1 Carbon laptops in its 2014 edition. The X1 Carbon was introduced in 2012, however a completely new variant which is very different from the first one was released early 2014. To distinguish it from other models it is the 20A7 model.Judging from the first days of use I think I made the right decision. I hadn't seen the device before I bought it because it seems rarely shops keep this device in stock. I assume this is due to the relatively high price.

I was a bit worried because Lenovo made some unusual decisions for the keyboard, however having used it for a few days I don't feel that it has any severe downsides. The most unusual thing about it is that it doesn't have normal F1-F12 keys, instead it has what Lenovo calls an adaptive keyboard: A touch sensitive line which can display different kinds of keys. The idea is that different applications can have their own set of special keys there. However, just letting them display the normal F-keys works well and not having "real" keys there doesn't feel like a big disadvantage. Beside that Lenovo removed the Caps lock and placed Pos1/End there, which is a bit unusual but also nothing I worried about. I also hadn't seen any pictures of the German keyboard before I bought the device. The ^/°-key is not where it's used to be (small downside), but the </>/| key is where it belongs(big plus, many laptop vendors get that wrong).

Good things:

* Lightweight, Ultrabook, no unnecessary stuff like CD/DVD drive

* High resolution (2560x1440)

* Hardware is up-to-date (Haswell chipset)

Downsides:

* Due to ultrabook / integrated design easy changing battery, ram or HD

* No SD card reader

* Have some trouble getting used to the touchpad (however there are lots of possibilities to configure it, I assume by playing with it that'll get better)

It used to be the case that people wrote docs how to get all the hardware in a laptop running on Linux which I did my previous laptops. These days this usually boils down to "run a recent Linux distribution with the latest kernels and xorg packages and most things will be fine". However I thought having a central place where I collect relevant information would be nice so I created one again. As usual I'm running Gentoo Linux.

For people who plan to run Linux without a dual boot it may be worth mentioning that there seem to be troublesome errors in earlier versions of the BIOS and the SSD firmware. You may want to update them before removing Windows. On my device they were already up-to-date.

Posted by Hanno Böck

in Computer culture, English, Gentoo, Life, Linux

at

23:05

| Comments (3)

| Trackbacks (0)

Saturday, July 12. 2014

LibreSSL on Gentoo

Yesterday the LibreSSL project released the first portable version that works on Linux. LibreSSL is a fork of OpenSSL and was created by the OpenBSD team in the aftermath of the Heartbleed bug.

Yesterday the LibreSSL project released the first portable version that works on Linux. LibreSSL is a fork of OpenSSL and was created by the OpenBSD team in the aftermath of the Heartbleed bug.Yesterday and today I played around with it on Gentoo Linux. I was able to replace my system's OpenSSL completely with LibreSSL and with few exceptions was able to successfully rebuild all packages using OpenSSL.

After getting this running on my own system I installed it on a test server. The Webpage tlsfun.de runs on that server. The functionality changes are limited, the only thing visible from the outside is the support for the experimental, not yet standardized ChaCha20-Poly1305 cipher suites, which is a nice thing.

A warning ahead: This is experimental, in no way stable or supported and if you try any of this you do it at your own risk. Please report any bugs you have with my overlay to me or leave a comment and don't disturb anyone else (from Gentoo or LibreSSL) with it. If you want to try it, you can get a portage overlay in a subversion repository. You can check it out with this command:

svn co https://svn.hboeck.de/libressl-overlay/

git clone https://github.com/gentoo/libressl.git

This is what I had to do to get things running:

LibreSSL itself

First of all the Gentoo tree contains a lot of packages that directly depend on openssl, so I couldn't just replace that. The correct solution to handle such issues would be to create a virtual package and change all packages depending directly on openssl to depend on the virtual. This is already discussed in the appropriate Gentoo bug, but this would mean patching hundreds of packages so I skipped it and worked around it by leaving a fake openssl package in place that itself depends on libressl.

LibreSSL deprecates some APIs from OpenSSL. The first thing that stopped me was that various programs use the functions RAND_egd() and RAND_egd_bytes(). I didn't know until yesterday what egd is. It stands for Entropy Gathering Daemon and is a tool written in perl meant to replace the functionality of /dev/(u)random on non-Linux-systems. The LibreSSL-developers consider it insecure and after having read what it is I have to agree. However, the removal of those functions causes many packages not to build, upon them wget, python and ruby. My workaround was to add two dummy functions that just return -1, which is the error code if the Entropy Gathering Daemon is not available. So the API still behaves like expected. I also posted the patch upstream, but the LibreSSL devs don't like it. So on the long term it's probably better to fix applications to stop trying to use egd, but for now these dummy functions make it easier for me to build my system.

The second issue popping up was that the libcrypto.so from libressl contains an undefined main() function symbol which causes linking problems with a couple of applications (subversion, xorg-server, hexchat). According to upstream this undefined symbol is intended and most likely these are bugs in the applications having linking problems. However, for now it was easier for me to patch the symbol out instead of fixing all the apps. Like the egd issue on the long term fixing the applications is better.

The third issue was that LibreSSL doesn't ship pkg-config (.pc) files, some apps use them to get the correct compilation flags. I grabbed the ones from openssl and adjusted them accordingly.

OpenSSH

This was the most interesting issue from all of them.

To understand this you have to understand how both LibreSSL and OpenSSH are developed. They are both from OpenBSD and they use some functions that are only available there. To allow them to be built on other systems they release portable versions which ship the missing OpenBSD-only-functions. One of them is arc4random().

Both LibreSSL and OpenSSH ship their compatibility version of arc4random(). The one from OpenSSH calls RAND_bytes(), which is a function from OpenSSL. The RAND_bytes() function from LibreSSL however calls arc4random(). Due to the linking order OpenSSH uses its own arc4random(). So what we have here is a nice recursion. arc4random() and RAND_bytes() try to call each other. The result is a segfault.

I fixed it by using the LibreSSL arc4random.c file for OpenSSH. I had to copy another function called arc4random_stir() from OpenSSH's arc4random.c and the header file thread_private.h. Surprisingly, this seems to work flawlessly.

Net-SSLeay

This package contains the perl bindings for openssl. The problem is a check for the openssl version string that expected the name OpenSSL and a version number with three numbers and a letter (like 1.0.1h). LibreSSL prints the version 2.0. I just hardcoded the OpenSSL version numer, which is not a real fix, but it works for now.

SpamAssassin

SpamAssassin's code for spamc requires SSLv2 functions to be available. SSLv2 is heavily insecure and should not be used at all and therefore the LibreSSL devs have removed all SSLv2 function calls. Luckily, Debian had a patch to remove SSLv2 that I could use.

libesmtp / gwenhywfar

Some DES-related functions (DES is the old Data Encryption Standard) in OpenSSL are available in two forms: With uppercase DES_ and with lowercase des_. I can only guess that the des_ variants are for backwards compatibliity with some very old versions of OpenSSL. According to the docs the DES_ variants should be used. LibreSSL has removed the des_ variants.

For gwenhywfar I wrote a small patch and sent it upstream. For libesmtp all the code was in ntlm. After reading that ntlm is an ancient, proprietary Microsoft authentication protocol I decided that I don't need that anyway so I just added --disable-ntlm to the ebuild.

Dovecot

In Dovecot two issues popped up. LibreSSL removed the SSL Compression functionality (which is good, because since the CRIME attack we know it's not secure). Dovecot's configure script checks for it, but the check doesn't work. It checks for a function that LibreSSL keeps as a stub. For now I just disabled the check in the configure script. The solution is probably to remove all remaining stub functions. The configure script could probably also be changed to work in any case.

The second issue was that the Dovecot code has some #ifdef clauses that check the openssl version number for the ECDH auto functionality that has been added in OpenSSL 1.0.2 beta versions. As the LibreSSL version number 2.0 is higher than 1.0.2 it thinks it is newer and tries to enable it, but the code is not present in LibreSSL. I changed the #ifdefs to check for the actual functionality by checking a constant defined by the ECDH auto code.

Apache httpd

The Apache http compilation complained about a missing ENGINE_CTRL_CHIL_SET_FORKCHECK. I have no idea what it does, but I found a patch to fix the issue, so I didn't investigate it further.

Further reading:

Someone else tried to get things running on Sabotage Linux.

Update: I've abandoned my own libressl overlay, a LibreSSL overlay by various Gentoo developers is now maintained at GitHub.

Posted by Hanno Böck

in Code, Cryptography, English, Gentoo, Linux, Security

at

20:31

| Comments (8)

| Trackbacks (5)

Wednesday, September 4. 2013

My AC100 travel laptop

I recently noted that I have never blogged about this nice little device I now own for a couple of years. I originally bought the Toshiba AC100 before a two-month-long trip through Russia and China.

I recently noted that I have never blogged about this nice little device I now own for a couple of years. I originally bought the Toshiba AC100 before a two-month-long trip through Russia and China.I was looking for a possibility to have a basic laptop, but without much weight. The AC100 is an ARM-based laptop which originally ships with the Android operating system. It weights less than 800 gramms and thus is lighter than the usual subnotebooks. According to my knowledge, it's not produced any more, but it can still be bought on ebay.

The nice thing is: You can install Linux on it and thus it will give you the possibility to run an almost full desktop-system. Though a warning ahead: While basic things work, it is quite a hacky business and you should expect to see problems. If you aren't prepared to solve them, this is probably not the solution for you.

Originally I was running Gentoo Linux on it (and it did well on my two-month trip), but now I'm running Ubuntu. The reason is that it was just too hard to get anything fixed if it didn't work. I rarely could find help anywhere, I assume there are only a handful of people that ever tried installing Gentoo on this Device. Ubuntu up until version 12.10 has reasonable support.

The great thing is: This is probably one of the lightest solutions to have a desktop/laptop-like machine with a real keyboard. Perfect for travelling. As it's running Linux, you can have access to a large number of standard applications. With lightweight apps like Abiword or Claws-Mail you can use basic applications.