Entries tagged as php

Thursday, June 15. 2017

Don't leave Coredumps on Web Servers

Coredumps are a feature of Linux and other Unix systems for analyzing crashing software. If a program crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called core.

While this is useful for debugging purposes, it can create a security risk. If a web application crashes, the coredump may simply end up in the web server's root folder. Given that its file name is known, an attacker can simply download it via a URL of the form https://example.org/core. As coredumps contain an application's memory, they may expose secret information. A very typical example would be passwords.

PHP used to crash relatively often. Recently a lot of these crash bugs have been fixed, in part because PHP now has a bug bounty program. But there are still situations in which PHP crashes. Some of them likely won't be fixed.

How to disclose?

With a scan of the Alexa Top 1 Million domains for exposed core dumps I found around 1,000 vulnerable hosts. I was faced with a challenge: How can I properly disclose this? It is obvious that I wouldn't write hundreds of mails by hand. So I needed an automated way to contact the site owners.

Abusix runs a service where you can query the abuse contacts of IP addresses via a DNS query. This turned out to be very useful for this purpose. One could also imagine contacting domain owners directly, but that's not very practical. The domain whois databases have rate limits and don't always expose contact mail addresses in a machine readable way.
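As a rough illustration of such a DNS-based lookup, here is a minimal sketch that only builds the name one would query. Services of this kind usually expect the IPv4 octets in reverse order followed by a lookup zone. The zone name below and the function name are assumptions for illustration, not taken from this post, so check the Abusix documentation for the current zone before relying on it.

```python
import ipaddress

# Assumption: the lookup zone. Verify against the provider's documentation.
ASSUMED_ZONE = "abuse-contacts.abusix.zone"


def abuse_query_name(ip: str, zone: str = ASSUMED_ZONE) -> str:
    """Build the DNS name to query (typically as a TXT record) for an IPv4 address."""
    # IPv4Address raises a ValueError subclass on malformed input.
    addr = ipaddress.IPv4Address(ip)
    reversed_octets = ".".join(reversed(str(addr).split(".")))
    return f"{reversed_octets}.{zone}"
```

The resulting name can then be handed to any DNS client to fetch the TXT record; the sketch deliberately leaves out the network part.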

Using the abuse contacts doesn't reach all of the affected host operators. Some abuse contacts were nonexistent mail addresses, and some hosts didn't have abuse contacts at all. I also got all kinds of automated replies, some of them asking me to fill out forms or do other things, otherwise my message wouldn't be read. Due to the scale I ignored those. I feel that if people make it hard for me to inform them about security problems that's not my responsibility.

I took away two things that I changed in a second batch of disclosures. Some abuse contacts seem to automatically search for IP addresses in the abuse mails. I originally only included affected URLs. So I changed that to include the affected IPs as well.

In many cases I was informed that the affected hosts are not owned by the company I contacted, but by a customer. Some of them asked me if they're allowed to forward the message to them. I thought that would be obvious, but I made it explicit now. Some of them asked me to contact their customers, which again, of course, is impractical at scale. And sorry: They are your customers, not mine.

How to fix and prevent it?

If you have a coredump on your web host, the obvious fix is to remove it from there. However you obviously also want to prevent this from happening again.

There are two settings that impact coredump creation: a limits setting, configurable via /etc/security/limits.conf and ulimit, and a sysctl interface that can be found under /proc/sys/kernel/core_pattern.

The limits setting is a size limit for coredumps. If it is set to zero then no core dumps are created. To set this as the default you can add something like this to your limits.conf:

* soft core 0

The sysctl interface sets a pattern for the file name and can also contain a path. You can set it to something like this:

/var/log/core/core.%e.%p.%h.%t

This would store all coredumps under /var/log/core/ and add the executable name, process id, host name and timestamp to the filename. The directory needs to be writable by all users, so you should use a directory with the sticky bit (chmod +t).

If you set this via the proc file interface it will only be temporary until the next reboot. To set this permanently you can add it to /etc/sysctl.conf:

kernel.core_pattern = /var/log/core/core.%e.%p.%h.%t

Some Linux distributions directly forward core dumps to crash analysis tools. This can be done by prefixing the pattern with a pipe (|). These tools, like apport from Ubuntu or abrt from Fedora, have also been the source of security vulnerabilities in the past. However that's a separate issue.
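To make the pattern above more concrete, here is a small sketch that expands the four specifiers used in this post the way the kernel would when naming a coredump. The function name and the sample values are made up for illustration. It only knows %e, %p, %h, %t and %%, while the kernel supports more specifiers.

```python
def expand_core_pattern(pattern, exe, pid, host, timestamp):
    """Expand %e, %p, %h, %t and %% in a core_pattern-style template."""
    values = {"e": exe, "p": str(pid), "h": host, "t": str(timestamp), "%": "%"}
    out = []
    i = 0
    while i < len(pattern):
        ch = pattern[i]
        if ch != "%":
            out.append(ch)
            i += 1
        elif i + 1 < len(pattern):
            # Specifiers this sketch does not know are dropped. The real
            # kernel supports additional ones (see the core(5) man page).
            out.append(values.get(pattern[i + 1], ""))
            i += 2
        else:
            # A single trailing % is dropped.
            i += 1
    return "".join(out)
```

For a crashing php-fpm process with PID 1234 on a host called web1, the pattern from this post would expand to a file name like /var/log/core/core.php-fpm.1234.web1.1497518400.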

Look out for coredumps

My scans showed that this is a relatively common issue. Among popular web pages around one in a thousand were affected before my disclosure attempts. I recommend that pentesters and developers of security scan tools consider checking for this. It's simple: Just try to download the /core file and check if it looks like an executable. In most cases it will be an ELF file, however sometimes it may be a Mach-O (OS X) or an a.out file (very old Linux and Unix systems).

Image credit: NASA/JPL-Université Paris Diderot
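The file-type check suggested above could be sketched as follows. This is only an illustration under some assumptions: the function name is made up, it looks at the first bytes of an already downloaded response, and it ignores the a.out case. For ELF files it also reads the e_type field, where the value 4 (ET_CORE) marks a core file.

```python
import struct

ELF_MAGIC = b"\x7fELF"
ET_CORE = 4  # e_type value for core files in the ELF format
MACHO_MAGICS = {
    b"\xfe\xed\xfa\xce", b"\xce\xfa\xed\xfe",  # 32-bit, both byte orders
    b"\xfe\xed\xfa\xcf", b"\xcf\xfa\xed\xfe",  # 64-bit, both byte orders
}


def classify_core_candidate(header: bytes) -> str:
    """Classify the first bytes of a downloaded /core file."""
    if header[:4] == ELF_MAGIC and len(header) >= 18:
        # Byte 5 (EI_DATA) gives the byte order: 1 = little, 2 = big endian.
        fmt = "<H" if header[5] == 1 else ">H"
        (e_type,) = struct.unpack_from(fmt, header, 16)
        return "elf-core" if e_type == ET_CORE else "elf-other"
    if header[:4] in MACHO_MAGICS:
        return "mach-o"
    return "unknown"
```

Checking the header instead of only the HTTP status code avoids false positives from servers that answer every request with an HTML page.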

Posted by Hanno Böck in English, Gentoo, Linux, Security at 11:20

Defined tags for this entry: core, coredump, crash, linux, php, segfault, vulnerability, webroot, websecurity, webserver

Saturday, April 8. 2017

And then I saw the Password in the Stack Trace

I want to tell a little story here. I am usually relatively savvy in IT security issues. Yet I was made aware of a quite severe mistake today that caused a security issue in my web page. I want to learn from mistakes, but maybe also others can learn something as well.

I have a private web page. Its primary purpose is to provide a list of links to articles I wrote elsewhere. It's probably not a high value target, but well, being an IT security person I wanted to get security right.

Of course the page uses TLS-encryption via HTTPS. It also uses HTTP Strict Transport Security (HSTS), TLS 1.2 with an AEAD and forward secrecy, has a CAA record and even HPKP (although I tend to tell people that they shouldn't use HPKP, because it's too easy to get wrong). Obviously it has an A+ rating on SSL Labs.

Surely I thought about Cross Site Scripting (XSS). While an XSS on the page wouldn't be very interesting - it doesn't have any kind of login or backend and doesn't use cookies - and also quite unlikely – no user supplied input – I've done everything to prevent XSS. I set a strict Content Security Policy header and other security headers. I have an A-rating on securityheaders.io (no A+, because after several HPKP missteps I decided to use a short timeout).

I also thought about SQL injection. While an SQL injection would be quite unlikely – you remember, no user supplied input – I'm using prepared statements, so SQL injections should be practically impossible.

All in all I felt that I have a pretty secure web page. So what could possibly go wrong?

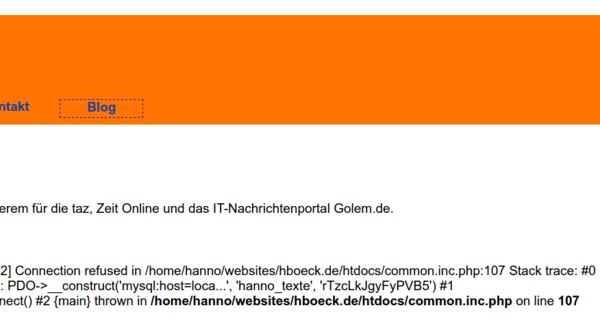

Well, this morning someone send me this screenshot:

And before you ask: Yes, this was the real database password. (I changed it now.)

So what happened? The MySQL server was down for a moment. It had crashed for reasons unrelated to this web page. I had already taken care of that and hadn't noticed the password leak. The crashed MySQL server subsequently led to an error message by PDO (PDO stands for PHP Data Objects and is the modern way of doing database operations in PHP).

The PDO error message contains a stack trace of the function call including function parameters. And this led to the password leak: The password is passed to the PDO constructor as a parameter.

There are a few things to note here. First of all, for this to happen the PHP option display_errors needs to be enabled. It is recommended to disable this option in production systems, however it is enabled by default. (Interestingly enough the PHP documentation about display_errors doesn't even tell you what the default is.)

display_errors wasn't enabled by accident. It was actually disabled in the past. I made a conscious decision to enable it. Back when we had display_errors disabled on the server I once tested a new PHP version where our custom config wasn't enabled yet. I noticed several bugs in PHP pages. So my rationale was that disabling display_errors hides bugs, thus I'd better enable it. In hindsight it was a bad idea. But well... hindsight is 20/20.

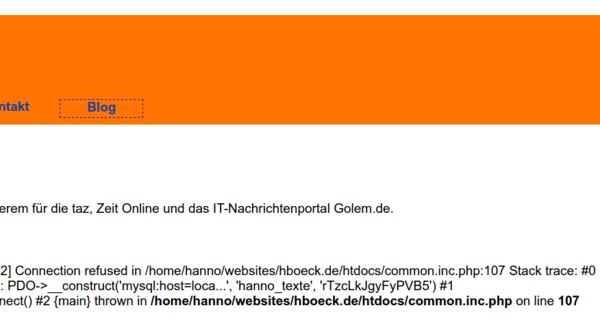

The second thing to note is that this only happens because PDO throws an exception that is unhandled. To be fair, the PDO documentation mentions this risk. Other kinds of PHP bugs don't show stack traces. If I had used mysqli – the second supported API to access MySQL databases in PHP – the error message would've looked like this:

PHP Warning: mysqli::__construct(): (HY000/1045): Access denied for user 'test'@'localhost' (using password: YES) in /home/[...]/mysqli.php on line 3

While this still leaks the username, it's much less dangerous. This is a subtlety that is far from obvious. PHP functions have different modes of error reporting. Object oriented functions – like PDO – throw exceptions. Unhandled exceptions will lead to stack traces. Other functions will just report error messages without stack traces.

If you wonder about the impact: It's probably minor. People could've seen the password, but I haven't noticed any changes in the database. I obviously changed it immediately after being notified. I'm pretty certain that there is no way that a database compromise could be used to execute code within the web page code. It's far too simple for that.

Of course there are a number of ways this could've been prevented, and I've implemented several of them. I'm now properly handling exceptions from PDO. I also set a general exception handler that will inform me (and not the web page visitor) if any other unhandled exceptions occur. And finally I've changed the server's default to display_errors being disabled.

While I don't want to shift too much blame here, I think PHP is making this far too easy to happen. There exists a bug report about the leaking of passwords in stack traces from 2014, but nothing happened. I think there are a variety of unfortunate decisions made by PHP. If display_errors is dangerous and discouraged for production systems then it shouldn't be enabled by default.

PHP could avoid sending stack traces by default and make this a separate option from display_errors. It could also introduce a way to make exceptions fatal for functions so that calling those functions is prevented outside of a try/catch block that handles them. (However that obviously would introduce compatibility problems with existing applications, as Craig Young pointed out to me.)

So finally maybe a couple of takeaways:

- display_errors is far more dangerous than I was aware of.

- Unhandled exceptions introduce unexpected risks that I wasn't aware of.

- In the past I was recommending that people should use PDO with prepared statements if they use MySQL with PHP. I wonder if I should reconsider that. Given the circumstances, mysqli seems safer (it also supports prepared statements).

- I'm not sure if there's a general takeaway, but at least for me it was quite surprising that I could have such a severe security failure in a project and code base where I thought I had everything covered.
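The fix described above, handling the connection exception so that neither the visitor nor a stack trace ever sees the credentials, follows a pattern that is not specific to PHP. The following is a language-neutral illustration written in Python, not the code used on the web page. All names in it (connect_safely, DatabaseUnavailable, the logger name) are made up for this sketch.

```python
import logging

logger = logging.getLogger("webpage")


class DatabaseUnavailable(Exception):
    """Generic error shown to the caller instead of the driver's own error."""


def connect_safely(connect, dsn, user, password):
    """Call connect(dsn, user, password) without letting secrets escape on failure."""
    try:
        return connect(dsn, user, password)
    except Exception as exc:
        # Log only the exception type for the operator. The message and the
        # call arguments may contain the password, so they are not logged.
        logger.error("database connection failed: %s", type(exc).__name__)
        # "from None" hides the original exception, so the re-raised error
        # carries no trace of the credentials.
        raise DatabaseUnavailable("The database is currently unavailable.") from None
```

The design choice is to decide explicitly what leaves the function on failure, instead of relying on a global setting such as display_errors to hide whatever the driver happens to report.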

Tuesday, June 23. 2015

The tricky security issue with FollowSymLinks and Apache

tl;dr Most servers running a multi-user webhosting setup with Apache HTTPD probably have a security problem. Unless you're using Grsecurity there is no easy fix.

I am part of a small webhosting business that I have been running as a side project for quite a while. We offer customers user accounts on our servers running Gentoo Linux and webspace with the typical Apache/PHP/MySQL combination. We recently became aware of a security problem regarding symlinks. I wanted to share this, because I was appalled by the fact that there was no obvious solution.

Apache has an option FollowSymLinks which basically does what it says. If a symlink in a webroot is accessed the webserver will follow it. In a multi-user setup this is a security problem. Here's why: If I know that another user on the same system is running a typical web application - let's say Wordpress - I can create a symlink to his config file (for Wordpress that's wp-config.php). I can't see this file with my own user account. But the webserver can see it, so I can access it with the browser over my own webpage. As I'm usually allowed to disable PHP I'm able to prevent the server from interpreting the file, so I can read the other user's database credentials. The webserver needs to be able to see all files, therefore this works. While PHP and CGI scripts usually run with user's rights (at least if the server is properly configured) the files are still read by the webserver. For this to work I need to guess the path and name of the file I want to read, but that's often trivial. In our case we have default paths in the form /home/[username]/websites/[hostname]/htdocs where webpages are located.

So the obvious solution one might think about is to disable the FollowSymLinks option and forbid users to set it themselves. However symlinks in web applications are pretty common and many will break if you do that. It's not feasible for a common webhosting server.

Apache supports another option called SymLinksIfOwnerMatch. It's also pretty self-explanatory: it will only follow a symlink if the target is owned by the same user as the link. That sounds like it solves our problem. However there are two catches: First of all the Apache documentation itself says that "this option should not be considered a security restriction". It is still vulnerable to race conditions.
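To illustrate both the idea and the race condition, here is a minimal sketch of an owner-match check. It is not Apache's implementation, and the function name is made up for this example. It compares the owner of the link itself with the owner of whatever the link points to.

```python
import os


def symlink_owner_matches(path: str) -> bool:
    """Return True if the link at path and its target have the same owner."""
    link_info = os.lstat(path)   # information about the symlink itself
    target_info = os.stat(path)  # follows the link to its target
    return link_info.st_uid == target_info.st_uid
```

The weakness is visible in the structure: the check and the later opening of the file are separate steps. A user who swaps the link between those two steps can pass the check with a harmless target and still have a different file served.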

But even leaving the race condition aside it doesn't really work. Web applications using symlinks will usually try to set FollowSymLinks in their .htaccess file. An example is Drupal, which by default comes with such an .htaccess file. If you forbid users to set FollowSymLinks then the option won't just be ignored; the whole webpage won't run and will just return an error 500. What you could do is manually change the FollowSymLinks option in the .htaccess to SymLinksIfOwnerMatch. While this may be feasible in some cases, if you have a lot of users you don't want to explain to all of them that they have to manually edit some file they don't understand whenever they want to install some common web application. (There's a bug report for Drupal asking to change FollowSymLinks to SymLinksIfOwnerMatch, but it has been ignored for several years.)

So using SymLinksIfOwnerMatch is neither secure nor really feasible. The documentation for Cpanel discusses several possible solutions. The recommended solutions require proprietary modules. None of the proposed fixes work with a plain Apache setup, which I think is a pretty dismal situation. The most common web server has a severe security weakness in a very common situation and no usable solution for it.

The solution that we chose is a feature of Grsecurity. Grsecurity is a Linux kernel patch that greatly enhances security and we've been very happy with it in the past. There are a lot of reasons to use this patch. I'm often impressed that local root exploits very often don't work on a Grsecurity system.

Grsecurity has an option like SymLinksIfOwnerMatch (CONFIG_GRKERNSEC_SYMLINKOWN) that operates on the kernel level. You can define a certain user group (which in our case is the "apache" group) for which this option will be enabled. For us this was the best solution, as it required very little change.

I haven't checked this, but I'm pretty sure that we were not alone with this problem. I'd guess that a lot of shared web hosting companies are vulnerable to this problem.

Here's the German blog post on our webpage and here's the original blogpost from an administrator at Uberspace (also German) which made us aware of this issue.

Friday, January 30. 2015

What the GHOST tells us about free software vulnerability management

GHOST itself is a Heap Overflow in the name resolution function of the Glibc. The Glibc is the standard C library on Linux systems, almost every software that runs on a Linux system uses it. It is somewhat unclear right now how serious GHOST really is. A lot of software uses the affected function gethostbyname(), but a lot of conditions have to be met to make this vulnerability exploitable. Right now the most relevant attack is against the mail server exim where Qualys has developed a working exploit which they plan to release soon. There have been speculations whether GHOST might be exploitable through Wordpress, which would make it much more serious.

Technically GHOST is a heap overflow, which is a very common bug in C programming. C is inherently prone to these kinds of memory corruption errors and there are essentially two things here to move forwards: Improve the use of exploit mitigation techniques like ASLR and create new ones (levee is an interesting project, watch this 31C3 talk). And if possible move away from C altogether and develop core components in memory safe languages (I have high hopes for the Mozilla Servo project, watch this linux.conf.au talk).

GHOST was discovered three times

But the thing I want to elaborate here is something different about GHOST: It turns out that it has been discovered independently three times. It was already fixed in 2013 in the Glibc Code itself. The commit message didn't indicate that it was a security vulnerability. Then in early 2014 developers at Google found it again using Address Sanitizer (which – by the way – tells you that all software developers should use Address Sanitizer more often to test their software). Google fixed it in Chrome OS and explicitly called it an overflow and a vulnerability. And then recently Qualys found it again and made it public.

Now you may wonder why a vulnerability fixed in 2013 made headlines in 2015. The reason is that it wasn't widely fixed because it wasn't publicly known that it was serious. I don't think there was any malicious intent. The original Glibc fix was probably done without anyone noticing that it is serious and the Google devs may have thought that the fix is already public, so they don't need to make any noise about it. But we can clearly see that something doesn't work here. Which brings us to a discussion of how the Linux and free software world in general and vulnerability management in particular work.

The “Never touch a running system” principle

Quite early when I came in contact with computers I heard the phrase “Never touch a running system”. This may have been a reasonable approach to IT systems back then when computers usually weren't connected to any networks and when remote exploits weren't a thing, but it certainly isn't a good idea today in a world where almost every computer is part of the Internet. Because once new security vulnerabilities become public you should change your system and fix them. However that doesn't change the fact that many people still operate like that.

A number of Linux distributions provide “stable” or “Long Term Support” versions. Basically the idea is this: At some point they take the current state of their systems and further updates will only contain important fixes and security updates. They guarantee to fix security vulnerabilities for a certain time frame. This is kind of a compromise between the “Never touch a running system” approach and reasonable security. It tries to give you a system that will basically stay the same, but you get fixes for security issues. Popular examples for this approach are the stable branch of Debian, Ubuntu LTS versions and the Enterprise versions of Red Hat and SUSE.

To give you an idea about time frames, Debian currently supports the stable trees Squeeze (6.0) which was released in 2011 and Wheezy (7.0) which was released in 2013. Red Hat Enterprise Linux currently has 4 supported versions (4, 5, 6, 7), the oldest one was originally released in 2005. So we're talking about pretty long time frames that these systems get supported. Ubuntu and SUSE have similar long term supported systems.

These systems are delivered with an implicit promise: We will take care of security and if you update regularly you'll have a system that doesn't change much, but that will be secure against known threats. Now the interesting question is: How well do these systems deliver on that promise and how hard is that?

Vulnerability management is chaotic and fragile

I'm not sure how many people are aware how vulnerability management works in the free software world. It is a pretty fragile and chaotic process. There is no standard way things work. The information is scattered around many different places. Different people look for vulnerabilities for different reasons. Some are developers of the respective projects themselves, some are companies like Google that make use of free software projects, some are just curious people interested in IT security or researchers. They report a bug through the channels of the respective project. That may be a mailing list, a bug tracker or just a direct mail to the developer. Hopefully the developers fix the issue. It does happen that the person finding the vulnerability first has to explain to the developer why it actually is a vulnerability. Sometimes the fix will happen in a public code repository, sometimes not. Sometimes the developer will mention that it is a vulnerability in the commit message or the release notes of the new version, sometimes not. There are notorious projects that refuse to handle security vulnerabilities in a transparent way. Sometimes whoever found the vulnerability will post more information on his/her blog or on a mailing list like full disclosure or oss-security. Sometimes not. Sometimes vulnerabilities get a CVE id assigned, sometimes not.

Add to that the fact that in many cases it's far from clear what is a security vulnerability. It is absolutely common that if you ask the people involved whether this is serious the best and most honest answer they can give is “we don't know”. And very often bugs get fixed without anyone noticing that it even could be a security vulnerability.

Then there are projects where the number of security vulnerabilities found and fixed is really huge. The latest Chrome 40 release had 62 security fixes, version 39 had 42. Chrome releases a new version every two months. Browser vulnerabilities are found and fixed on a daily basis. Not that extreme but still high is the vulnerability count in PHP, which is especially worrying if you know that many webhosting providers run PHP versions not supported any more.

So you probably see my point: There is a very chaotic stream of information in various different places about bugs and vulnerabilities in free software projects. The number of vulnerabilities is huge. Making a promise that you will scan all this information for security vulnerabilities and backport the patches to your operating system is a big promise. And I doubt anyone can fulfill that.

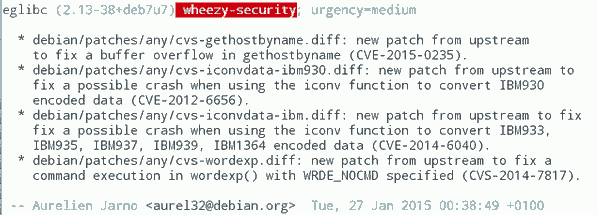

GHOST is a single example, so you might ask how often these things happen. At some point right after GHOST became public this excerpt from the Debian Glibc changelog caught my attention (excuse the bad quality, had to take the image from Twitter because I was unable to find that changelog on Debian's webpages):

What you can see here: While Debian fixed GHOST (which is CVE-2015-0235) they also fixed CVE-2012-6656 – a security issue from 2012. Admittedly this is a minor issue, but it's a vulnerability nevertheless. A quick look at the Debian changelog of Chromium both in squeeze and wheezy will tell you that they aren't fixing all the recent security issues in it. (Debian already had discussions about removing Chromium and in Wheezy they don't stick to a single version.)

It would be an interesting (and time consuming) project to take a package like PHP and check for all the security vulnerabilities whether they are fixed in the latest packages in Debian Squeeze/Wheezy, all Red Hat Enterprise versions and other long term support systems. PHP is probably more interesting than browsers, because the high profile targets for these vulnerabilities are servers. What worries me: I'm pretty sure some people already do that. They just won't tell you and me, instead they'll write their exploits and sell them to repressive governments or botnet operators.

Then there are also stories like this: Tavis Ormandy reported a security issue in Glibc in 2012 and the people from Google's Project Zero went to great lengths to show that it is actually exploitable. Reading the Glibc bug report you can learn that this was already reported in 2005(!), just nobody noticed back then that it was a security issue and it was minor enough that nobody cared to fix it.

There are also bugs that require changes so big that backporting them is essentially impossible. In the TLS world a lot of protocol bugs have been highlighted in recent years. Take Lucky Thirteen for example. It is a timing sidechannel in the way the TLS protocol combines the CBC encryption, padding and authentication. I like to mention this bug because I like to quote it as the TLS bug that was already mentioned in the specification (RFC 5246, page 23: "This leaves a small timing channel"). The real fix for Lucky Thirteen is not to use the erratic CBC mode any more and switch to authenticated encryption modes which are part of TLS 1.2. (There's another possible fix which is using Encrypt-then-MAC, but it is hardly deployed.) Up until recently most encryption libraries didn't support TLS 1.2. Debian Squeeze and Red Hat Enterprise 5 ship OpenSSL versions that only support TLS 1.0. There is no trivial patch that could be backported, because this is a huge change. What they likely backported are workarounds that avoid the timing channel. This will stop the attack, but it is not a very good fix, because it keeps the problematic old protocol and will force others to stay compatible with it.

LTS and stable distributions are there for a reason

The big question is of course what to do about it. OpenBSD developer Ted Unangst wrote a blog post yesterday titled Long term support considered harmful, I suggest you read it. He argues that we should get rid of long term support completely and urge users to upgrade more often. OpenBSD has a 6 month release cycle and supports two releases, so one version gets supported for one year.

Given what I wrote before you may think that I agree with him, but I don't. While I personally always avoided using systems that are too old – I'm usually using Gentoo which doesn't have any snapshot releases at all and does rolling releases – I can see the value in long term support releases. There are a lot of systems out there – connected to the Internet – that are never updated. Taking away the option to install systems and let them run with relatively little maintenance overhead over several years will probably result in more systems never receiving any security updates. With all its imperfections, running a Debian Squeeze with the latest updates is certainly better than running an operating system from 2011 that stopped getting security fixes in 2012.

Improving the information flow

I don't think there is a silver bullet solution, but I think there are things we can do to improve the situation. What could be done is to coordinate and share the work. Debian, Red Hat and other distributions with stable/LTS versions could agree that their next versions are based on a specific Glibc version and they collaboratively work on providing patch sets to fix all the vulnerabilities in it. This already somehow happens with upstream projects providing long term support versions, the Linux kernel does that for example. Doing that at scale would require vast organizational changes in the Linux distributions. They would have to agree on a roughly common timescale to start their stable versions.

What I'd consider the most crucial thing is to improve and streamline the information flow about vulnerabilities. When Google fixes a vulnerability in Chrome OS they should make sure this information is shared with other Linux distributions and the public. And they should know where and how they should share this information.

One mechanism that tries to organize the vulnerability process is the system of CVE ids. The idea is actually simple: Publicly known vulnerabilities get a fixed id and they are in a public database. GHOST is CVE-2015-0235 (the scheme will soon change because four digits aren't enough for all the vulnerabilities we find every year). I got my first CVEs assigned in 2007, so I have some experiences with the CVE system and they are rather mixed. Sometimes I briefly mention rather minor issues in a mailing list thread and a CVE gets assigned right away. Sometimes I explicitly ask for CVE assignments and never get an answer.

I would like to see that we just assign CVEs for everything that even remotely looks like a security vulnerability. However right now I think the process is too unreliable to deliver that. There are other public vulnerability databases like OSVDB, I have limited experience with them, so I can't judge if they'd be better suited. Unfortunately sometimes people hesitate to request CVE ids because others abuse the CVE system to count assigned CVEs and use this as a metric for how secure a product is. Such bad statistics are outright dangerous, because they give people an incentive to downplay vulnerabilities or withhold information about them.

This post was partly inspired by some discussions on oss-security

Posted by Hanno Böck in Code, English, Gentoo, Linux, Security at 00:52

Friday, September 19. 2014

Some experience with Content Security Policy

I recently started playing around with Content Security Policy (CSP). CSP is a very neat feature and a good example how to get IT security right.

The main reason CSP exists is cross site scripting vulnerabilities (XSS). Every time a malicious attacker is able to somehow inject JavaScript or other executable code into your webpage this is called an XSS. XSS vulnerabilities are amongst the most common vulnerabilities in web applications.

CSP fixes XSS for good

The approach to fix XSS in the past was to educate web developers that they need to filter or properly escape their input. The problem with this approach is that it doesn't work. Even large websites like Amazon or Ebay don't get this right. The problem, simply stated, is that there are just too many places in a complex web application to create XSS vulnerabilities. Fixing them one at a time doesn't scale.

CSP tries to fix this in a much more generic way: How can we prevent XSS from happening at all? The way to do this is that the web server sends a header which defines where JavaScript and other content (images, objects etc.) is allowed to come from. If used correctly CSP can prevent XSS completely. The problem with CSP is that it's hard to add to an already existing project, because if you want CSP to be really secure you have to forbid inline JavaScript. That often requires large re-engineering of existing code. Preferably CSP should be part of the development process right from the beginning. If you start a web project keep that in mind and educate your developers to use restrictive CSP before they write any code. Starting a new web page without CSP these days is irresponsible.

To play around with it I added a CSP header to my personal webpage. This was a simple target, because it's a very simple webpage. I'm essentially sure that my webpage is XSS free because it doesn't use any untrusted input, I mainly wanted to have an easy target to do some testing. I also tried to add CSP to this blog, but this turned out to be much more complicated.

For my personal webpage this is what I did (PHP code):

header("Content-Security-Policy:default-src 'none';img-src 'self';style-src 'self';report-uri /c/");

The default policy is to accept nothing. The only things I use on my webpage are images and stylesheets and they all are located on the same webspace as the webpage itself, so I allow these two things.
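For longer policies it can be easier to assemble the header value from an array instead of writing one long string. The following is only a minimal sketch, not the code running on my webpage: the helper name build_csp() and the $policy array are made up for illustration, the directive values are the ones from the header line above.

```php
<?php
// Hypothetical helper: turns a directive => sources map into a CSP header value.
function build_csp(array $policy): string
{
    $parts = [];
    foreach ($policy as $directive => $sources) {
        // Each directive is its name followed by a space-separated source list.
        $parts[] = $directive . ' ' . implode(' ', (array) $sources);
    }
    return implode('; ', $parts);
}

// Same policy as above: deny everything, then allow same-origin images and styles.
$policy = [
    'default-src' => ["'none'"],
    'img-src'     => ["'self'"],
    'style-src'   => ["'self'"],
    'report-uri'  => ['/c/'],
];

$csp = build_csp($policy);

// Only send the header in a web context where output has not started yet.
if (PHP_SAPI !== 'cli' && !headers_sent()) {
    header('Content-Security-Policy: ' . $csp);
}
```

Keeping the policy as data makes it easy to add a single source to one directive later without touching the rest of the string.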

This is an extremely simple CSP policy. To give you an idea what a more realistic policy looks like, this is the one from Github:

Content-Security-Policy: default-src *; script-src assets-cdn.github.com www.google-analytics.com collector-cdn.github.com; object-src assets-cdn.github.com; style-src 'self' 'unsafe-inline' 'unsafe-eval' assets-cdn.github.com; img-src 'self' data: assets-cdn.github.com identicons.github.com www.google-analytics.com collector.githubapp.com *.githubusercontent.com *.gravatar.com *.wp.com; media-src 'none'; frame-src 'self' render.githubusercontent.com gist.github.com www.youtube.com player.vimeo.com checkout.paypal.com; font-src assets-cdn.github.com; connect-src 'self' ghconduit.com:25035 live.github.com uploads.github.com s3.amazonaws.com

Reporting feature

You may have noticed in my CSP header line that there's a "report-uri" directive at the end. The idea is that whenever a browser blocks something by CSP it is able to report this to the webpage owner. Why should we do this? Because we still want to fix XSS issues (there are browsers with little or no CSP support, I'm looking at you, Internet Explorer) and we want to know if our policy breaks anything that is supposed to work. The way this works is that a JSON document with details is sent via a POST request to the URL given.

While this sounds really neat in theory, in practice I found it to be quite disappointing. As I said above I'm almost certain my webpage has no XSS issues, so I shouldn't get any reports at all. However I get lots of them and they are all false positives. The problem is browser extensions that execute things inside a webpage's context. Sometimes you can spot them (when source-file starts with "chrome-extension" or "safari-extension"), sometimes you can't (source-file will only say "data"). Sometimes this is triggered not by single extensions but by combinations of different ones (I found out that a combination of HTTPS Everywhere and Adblock for Chrome triggered a CSP warning). I'm not sure how to handle this and whether this is something that should be reported as a bug either to the browser vendors or the extension developers.
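To sort out at least the reports that can be spotted, a report endpoint could check the source-file field before logging anything. This is a rough sketch under some assumptions: the function name is_extension_noise() is made up, the sample reports are invented, and I assume the browser wraps the report in a "csp-report" object. It does not catch the cases where source-file only says "data".

```php
<?php
// Hypothetical helper: decides whether a CSP report looks like browser-extension noise.
function is_extension_noise(string $json): bool
{
    $data = json_decode($json, true);
    // Assumption: the report is wrapped in a "csp-report" object.
    if (!is_array($data) || !isset($data['csp-report']) || !is_array($data['csp-report'])) {
        return false;
    }
    $source = (string) ($data['csp-report']['source-file'] ?? '');
    foreach (['chrome-extension', 'safari-extension'] as $prefix) {
        // True when source-file starts with one of the known extension prefixes.
        if (strncmp($source, $prefix, strlen($prefix)) === 0) {
            return true;
        }
    }
    return false;
}

// Invented sample reports; a real endpoint would read file_get_contents('php://input').
$fromExtension = json_encode(['csp-report' => [
    'document-uri' => 'https://example.org/',
    'source-file'  => 'chrome-extension://abcdef',
]]);
$fromPage = json_encode(['csp-report' => [
    'document-uri' => 'https://example.org/',
    'source-file'  => 'https://example.org/index.html',
]]);

$noise = is_extension_noise($fromExtension);
$real  = is_extension_noise($fromPage);
```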

Conclusion

If you start a web project use CSP. If you have a web page that needs extra security use CSP (my bank doesn't - does yours?). CSP reporting is neat, but its usefulness is limited due to too many false positives.

Then there's the bigger picture of IT security in general. Fixing single security bugs doesn't work. Why? XSS is as old as JavaScript (1995) and it's still a huge problem. An example of a similar technology is prepared statements for SQL. If you use them you won't have SQL injections. SQL injections are the second most prevalent web security problem after XSS. By using CSP and prepared statements you eliminate the two biggest issues in web security. Sounds like a good idea to me.
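To illustrate the prepared statement idea, here is a small sketch using PDO with an in-memory SQLite database. The table, the names and the hostile input are made up, and it assumes the pdo_sqlite extension is available.

```php
<?php
// In-memory SQLite database, assuming the pdo_sqlite extension is available.
$db = new PDO('sqlite::memory:');
$db->setAttribute(PDO::ATTR_ERRMODE, PDO::ERRMODE_EXCEPTION);
$db->exec('CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)');
$db->exec("INSERT INTO users (name) VALUES ('alice')");
$db->exec("INSERT INTO users (name) VALUES ('bob')");

// Hostile input that would alter a query built by string concatenation.
$input = "alice' OR '1'='1";

// The placeholder keeps the input as data; it is never parsed as SQL.
$stmt = $db->prepare('SELECT name FROM users WHERE name = ?');
$stmt->execute([$input]);
$injected = $stmt->fetchAll(PDO::FETCH_COLUMN);

// The same statement with harmless input still finds the row.
$stmt->execute(['alice']);
$legit = $stmt->fetchAll(PDO::FETCH_COLUMN);
```

With string concatenation the hostile input would have matched every row; with the placeholder it is compared as a literal name and matches nothing.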

Buffer overflows were first documented in 1972 and they are still the source of many security issues. Fixing them for good is trickier but it is also possible.

Thursday, September 25. 2008

SSL Session hijacking

Recently, two publications raised awareness of a problem with ssl secured websites.

If a website is configured to always forward traffic to SSL, one would assume that all traffic is safe and nothing can be sniffed. However, an attacker who is able to sniff network traffic and can also lure the victim to a crafted site (which can be as simple as sending him a »hey, read this, interesting text« message) can then force the victim to open an HTTP connection. If the cookie does not have the secure flag set, the attacker can sniff the cookie and take over the session of the user (assuming it's using some kind of cookie-based session, which is pretty standard on today's webapps).

The solution to this is that a webapp should always check if the connection is SSL and set the secure flag accordingly. For PHP, this could be something like this:

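// Mark the session cookie as secure (and HttpOnly) only if the request came in via HTTPS.
if (!empty($_SERVER['HTTPS']) && $_SERVER['HTTPS'] !== 'off') session_set_cookie_params(0, '/', '', true, true);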

I've recently investigated all web applications I'm using myself and reported the issue (Mantis / CVE-2008-3102, Drupal / CVE-2008-3661, Gallery / CVE-2008-3662, Squirrelmail / CVE-2008-3663). I have some more pending I want to investigate further.

I call on security researchers to add this issue to their list of common things to look out for. I find the Firefox extension CookieMonster very useful for this.

The result of my reports was quite mixed. While the Gallery team took the issue very seriously (and even paid me a bounty for my report, many thanks for that), the Drupal team thinks there is no issue and did nothing. The others have not released updates yet, but fixed the issue in their code.

And for a final word, I want to share a mail with you I got after posting the gallery issue to full disclosure:

for fuck's sake dude! half of the planet, military, government, financial sites suffer from this and the best you could come up with is a fucking photo album no one uses! do everybody a favor and die you lame fuck!

Saturday, December 15. 2007

Security and »mature applications«

Recently I had a discussion with someone about the security of various Linux distributions where he claimed Debian stable to be very secure and that they use old versions to which they backport fixes for all security issues. This is a common assumption, too bad that it's wrong.

I want to document this to demonstrate how dangerous opinions like »stay with the old software«, »never touch a running system« and the like are. They are not limited to the Debian community, but are often found there.

I had a look at the security policy for a package that has recently become notorious for its vast number of issues, namely PHP. Their last update of php5 was in July. They have a heavily patched version of PHP 5.2.0 and according to their changelog the last thing they patched was CVE-2007-1864.

Now that probably means that CVE-2007-3996, CVE-2007-3378, CVE-2007-3997, CVE-2007-4652, CVE-2007-4658, CVE-2007-4659, CVE-2007-4670, CVE-2007-4657, CVE-2007-4662, CVE-2007-3998, CVE-2007-5898, CVE-2007-5899, CVE-2007-5900 are unfixed. Oh, and before you ask: This list certainly is incomplete, it's just what I found without much hassle.
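A check like this can be scripted. The following sketch compares a list of CVE ids against the ids mentioned in a changelog text. The function name unfixed_cves() is made up, and the changelog excerpt is an invented placeholder, not the real Debian changelog; the CVE ids are taken from the list above.

```php
<?php
// Hypothetical helper: returns the ids from $known that the changelog never mentions.
function unfixed_cves(array $known, string $changelog): array
{
    // Collect everything in the text that looks like a CVE id.
    preg_match_all('/CVE-\d{4}-\d{4,}/', $changelog, $matches);
    // array_values() renumbers the result so it is a plain list.
    return array_values(array_diff($known, $matches[0]));
}

// A few ids from the list above.
$known = ['CVE-2007-3996', 'CVE-2007-3378', 'CVE-2007-3997'];

// Invented changelog excerpt for illustration only; a real check would read
// the changelog of the installed package.
$changelog = "* Fix CVE-2007-1864\n* Fix CVE-2007-3996 (made-up entry)\n";

$missing = unfixed_cves($known, $changelog);
```

An id missing from the changelog is only a hint that a fix was not backported, so every hit still needs a manual look.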

And it's not only php5: php4 is still officially distributed by many vendors and some hosters still use it. You can't really blame them, as php.net announced it to be supported until 2007-12-31. But that's theory. In fact, nobody checks whether the various recent issues also apply to PHP 4, and most recent advisories don't mention it. Fact is, at least CVE-2007-3378, CVE-2007-3997, CVE-2007-4657, CVE-2007-3998 also apply to PHP 4 and are unfixed in their latest release 4.4.7 (not necessary to mention that they're not fixed in the Debian stable php4 package).

If someone prefers to stay with old software, it's up to them. But be serious. It's probably a hell of a job to get all the recent security issues in an app like PHP backported to some ancient version. A quick check showed me that Debian isn't able to do that. If you can't do that, don't claim it's in any way »supported«. Because doing that puts other people in dangerous situations.

Sunday, August 19. 2007

Welcome a new Gentoo Dev: Christian Hoffmann

I'm happy to announce that I mentored Christian Hoffmann to become a new Gentoo Developer.

Christian did some PHP security work for Gentoo recently, which is very important due to the high number of security issues PHP had recently. Welcome on board and continue your good work.

Friday, September 29. 2006

PHP braindamage

Okay, I knew PHP isn't the most well-designed language in the world. I knew it grew up from a hobbyist language and that it is used today for things it was never meant for. I knew that its function names sometimes aren't very intuitive.

But today I started to hate it. Now, besides various other strange things, this one makes me wonder which drugs the person that implemented this was consuming:

PHP is, by design, syntactically similar to C, meaning it has curly brackets, the same comment syntax, the same syntax for if, for and other basic commands. Now, one would probably think that a function named the same in C and PHP would do the same. Or at least something similar.

Due to the fact that C is very low-level, it has an operator called sizeof that gives you the number of bytes a variable type uses in memory. E. g., a long is not always the same size (4 bytes on i386 Linux, 8 bytes on amd64 Linux). With sizeof you can get the size of the type.

Now, I was looking for a function to get the number of elements in an array. I searched and found, well, sizeof(). Which I couldn't really believe. Due to the abstract structure of PHP, it seemed quite impossible to me to have something like C's sizeof in PHP. And indeed it does something else entirely: it gives you the number of elements in an array.
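A short sketch of the behaviour, with made-up example data: in PHP, sizeof() is an alias of count(), so it counts elements and says nothing about memory.

```php
<?php
$fruits = ['apple', 'banana', 'cherry'];

// sizeof() is an alias of count(): it returns the number of elements.
$elements = sizeof($fruits);

// Memory use does not matter: one very long string is still a single element.
$one = sizeof([str_repeat('x', 100000)]);
```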
