Entries tagged as ssl

Related tags

algorithm certificate cryptography eff observatory pss rsa security android ac100 cloudflare facebook gentoo hash https laptop linux notebook sha1 sha256 smartbook subnotebook tls toshiba ubuntu updates windowsxp antivirus bundestrojaner freak kaspersky mcaffee mitm onlinedurchsuchung virus vulnerability apache certificates datenschutz datensparsamkeit encryption grsecurity itsecurity javascript karlsruhe letsencrypt mod_rewrite nginx ocsp ocspstapling openssl php revocation serendipity sni symlink userdir vortrag web web20 webhosting webmontag websecurity berserk bleichenbacher chrome firefox nss poodle browser ajax clickjacking content-security-policy csp dell edellroot html khtml konqueror maninthemiddle microsoft rss superfish webcore xss ca cacert ccc certificateauthority md5 privatekey sha2 symantec transvalid x509 aiglx blog calendar come2linux compiz email english essen games ipv6 kubik ludwigsburg lug lugbk openstreetmap planet rc2 schokokeks simplesharingextensions smime stadtmitte webinale wine xgl cbc gnutls luckythirteen padding 23c3 24c3 4k assembler berlin bewußtsein braunschweig bsideshn c4 cctv copyright darmstadt drm dvb easterhegg entropia freesoftware gpn gpn5 gpn6 gpn7 hacker hannover jugendumweltbewegung jukss kongress königswusterhausen licenses mrmcd mrmcd100b mrmcd101b mysmartgrid ökologie papierlos passwörter pgp philosophie privacy programmieren programming publicdomain querfunk radio rsaoaep rsapss science server slides stuttgart surveillance talk theory tpm überwachung überwachungskameras unicode utf-8 wahl wahlcomputer wahlmaschinen wiesbaden wiki cccamp cccamp15 cccamp11 openleaks taz chromium crash diffiehellman forwardsecrecy keyexchange cve debian ghost glibc redhat cookie bigbluebutton drupal fileexfiltration gallery jodconverter libreoffice mantis session sniffing squirrelmail core coredump segfault webroot webserver crypto http apt badkeys cmi deb deolalikar diploma diplomarbeit enigma fedora fortigate fortinet gnupg gpg gsoc key keyserver leak libressl math milleniumproblems modulobias nist openbsd openid openidconnect openpgp packagemanagement password pnp provablesecurity random revoke rpm schlüssel sha512 signatures sso thesis university verschlüsselung wordpress abuse aok boranet botnet ddos etymologie lg ncable spam sprache aead aes cfb cms gajim jabber joomla otr owncloud update xmpp babelfish camera canon china chinese csrf gadgets gammu gnokii googletranslate gphoto josm journalist language mandarin media merkaartor mobile moodle nokia ptp russia russian s9y time translation travel universaltranslator writing ffmpeg flash flv ftp gstreamer mozilla mplayer multimedia video vlc xine xsa youtube zzuf 3d addresssanitizer asan beryl bufferoverflow c censorship clang compizfusion composite developer france freedomofspeech gcc gebabbel gps gpsbabel idn iputils kde lenovo memorysafety metacity mobiletrailexplorer nancy ping politics rmll thinkpad useafterfree x1carbon xorg zensur administration howto iptables network proxy rfc squid adguard breach cookies crime heist komodia netfiltersdk privdog protocolfilters samesite ip rootserver agenda21 barcamp bnn bodensee bundesverfassungsgericht co2 computerspiele demonstration ejc enbw energie freeculture fricard gamer garmin geo geocaching geodaten informationdisclosure jonglieren killerspiele klimaschutz kohlekraftwerk mysql openexpo opensourceexpo press presse rfid rhein steinkohle umweltschutz uni vorratsdatenspeicherung zkm 1und1 artikel ati augsburg augsburgerallgemeine backnang bahn bash bios bonn cardreader ccwn chemnitz cinelerra clt compression console cpu cpufreq creativecommons delilinux demoscene desktop developingworld distribution dmidecode driver eltorito esslingen exe fma86t frankreich freedesktop freifunk frequencies froscon froscon2007 gaia gatos gnome google googleearth graphics grub gtk harddisk hardware hddtemp heartbleed homebrew hp ibm ico icons icoutils inkscape installparty iso itu kgtk kubuntu lessig license lit07 lm_sensors lpi lpic lspci lsusb luga macos mandriva memdisk memorystick messe metisse motherboard movie nouveau nvidia olpc omnibook opengl overheatd overheating pciids pcmagazin pcmcia qt r300 radeon randr12 rar reverseengineering ricoh samsung sd sdricohcs shellshock siegburg smart smartmontools sncf softwarefreedomday standards support syslinux t61 thesource theunarchiver tv tvout unar usability usb usbids vc-1 videoediting vista waiblingen wii wiibrew win32codecs windows windowsrefund wlan wmv wos wos4 wrestool zeitung afl americanfuzzylop fuzzing code distributions infoleak pdo sizeof stacktrace blogdieb earth fdl feed hackergotchi harvester lawblog mars python recht rechtsanwalt udovetter factoring diplomathesis simple upgoerfive words 0days 27c3 adobe afra altushost antivir auskunftsanspruch axfr azure bias botnetz bsi bugbounty bundesdatenschutzgesetz busby bypass cellular chcounter clamav conflictofinterest dingens dns domain eplus firewall freewvs frequency fsfe gimp git gobi gsm hackerone hacking helma internetscan ircbot malware mephisto mobilephones mpaa napster nessus newspaper ntp ntpd openbsc openbts openvas osmocombb panda passwordalert passwort phishing rand salinecourier shellbot sicherheit snallygaster sqlinjection staatsanwaltschaft study subdomain sunras tlsdate toendacms unicef vulnerabilities webapps wiretapping zerodays zugangsdaten klima klimacamp strom stromsparen wachstum wirtschaftswachstum absturz atari blinkenlights brownbox cccs db demokratie environment fsf k21 lightwerk mappus painstation patents plebiszit police policeviolence politik pong pongmechanik pongmythos protest publictransport rech retrogames s21 schuster softwarepatents stallman stuttgart21 traffic umwelt verkehr volksabstimmung wien wkv informationsfreiheit unserwasser volksbegehren wasser bugtracker github nextcloud acid3 base64 css midori script webdesign webkit webstandards topberlinThursday, July 20. 2017

How I tricked Symantec with a Fake Private Key

Lately, some attention was drawn to a widespread problem with TLS certificates. Many people are accidentally publishing their private keys. Sometimes they are released as part of applications, in Github repositories or with common filenames on web servers.

Lately, some attention was drawn to a widespread problem with TLS certificates. Many people are accidentally publishing their private keys. Sometimes they are released as part of applications, in Github repositories or with common filenames on web servers.If a private key is compromised, a certificate authority is obliged to revoke it. The Baseline Requirements – a set of rules that browsers and certificate authorities agreed upon – regulate this and say that in such a case a certificate authority shall revoke the key within 24 hours (Section 4.9.1.1 in the current Baseline Requirements 1.4.8). These rules exist despite the fact that revocation has various problems and doesn’t work very well, but that’s another topic.

I reported various key compromises to certificate authorities recently and while not all of them reacted in time, they eventually revoked all certificates belonging to the private keys. I wondered however how thorough they actually check the key compromises. Obviously one would expect that they cryptographically verify that an exposed private key really is the private key belonging to a certificate.

I registered two test domains at a provider that would allow me to hide my identity and not show up in the whois information. I then ordered test certificates from Symantec (via their brand RapidSSL) and Comodo. These are the biggest certificate authorities and they both offer short term test certificates for free. I then tried to trick them into revoking those certificates with a fake private key.

Forging a private key

To understand this we need to get a bit into the details of RSA keys. In essence a cryptographic key is just a set of numbers. For RSA a public key consists of a modulus (usually named N) and a public exponent (usually called e). You don’t have to understand their mathematical meaning, just keep in mind: They’re nothing more than numbers.

An RSA private key is also just numbers, but more of them. If you have heard any introductory RSA descriptions you may know that a private key consists of a private exponent (called d), but in practice it’s a bit more. Private keys usually contain the full public key (N, e), the private exponent (d) and several other values that are redundant, but they are useful to speed up certain things. But just keep in mind that a public key consists of two numbers and a private key is a public key plus some additional numbers. A certificate ultimately is just a public key with some additional information (like the host name that says for which web page it’s valid) signed by a certificate authority.

A naive check whether a private key belongs to a certificate could be done by extracting the public key parts of both the certificate and the private key for comparison. However it is quite obvious that this isn’t secure. An attacker could construct a private key that contains the public key of an existing certificate and the private key parts of some other, bogus key. Obviously such a fake key couldn’t be used and would only produce errors, but it would survive such a naive check.

I created such fake keys for both domains and uploaded them to Pastebin. If you want to create such fake keys on your own here’s a script. To make my report less suspicious I searched Pastebin for real, compromised private keys belonging to certificates. This again shows how problematic the leakage of private keys is: I easily found seven private keys for Comodo certificates and three for Symantec certificates, plus several more for other certificate authorities, which I also reported. These additional keys allowed me to make my report to Symantec and Comodo less suspicious: I could hide my fake key report within other legitimate reports about a key compromise.

Symantec revoked a certificate based on a forged private key

Comodo didn’t fall for it. They answered me that there is something wrong with this key. Symantec however answered me that they revoked all certificates – including the one with the fake private key.

Comodo didn’t fall for it. They answered me that there is something wrong with this key. Symantec however answered me that they revoked all certificates – including the one with the fake private key.No harm was done here, because the certificate was only issued for my own test domain. But I could’ve also fake private keys of other peoples' certificates. Very likely Symantec would have revoked them as well, causing downtimes for those sites. I even could’ve easily created a fake key belonging to Symantec’s own certificate.

The communication by Symantec with the domain owner was far from ideal. I first got a mail that they were unable to process my order. Then I got another mail about a “cancellation request”. They didn’t explain what really happened and that the revocation happened due to a key uploaded on Pastebin.

I then informed Symantec about the invalid key (from my “real” identity), claiming that I just noted there’s something wrong with it. At that point they should’ve been aware that they revoked the certificate in error. Then I contacted the support with my “domain owner” identity and asked why the certificate was revoked. The answer: “I wanted to inform you that your FreeSSL certificate was cancelled as during a log check it was determined that the private key was compromised.”

To summarize: Symantec never told the domain owner that the certificate was revoked due to a key leaked on Pastebin. I assume in all the other cases they also didn’t inform their customers. Thus they may have experienced a certificate revocation, but don’t know why. So they can’t learn and can’t improve their processes to make sure this doesn’t happen again. Also, Symantec still insisted to the domain owner that the key was compromised even after I already had informed them that the key was faulty.

How to check if a private key belongs to a certificate?

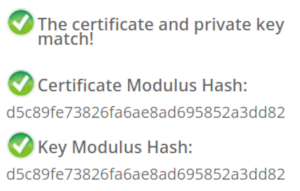

In case you wonder how you properly check whether a private key belongs to a certificate you may of course resort to a Google search. And this was fascinating – and scary – to me: I searched Google for “check if private key matches certificate”. I got plenty of instructions. Almost all of them were wrong. The first result is a page from SSLShopper. They recommend to compare the MD5 hash of the modulus. That they use MD5 is not the problem here, the problem is that this is a naive check only comparing parts of the public key. They even provide a form to check this. (That they ask you to put your private key into a form is a different issue on its own, but at least they have a warning about this and recommend to check locally.)

In case you wonder how you properly check whether a private key belongs to a certificate you may of course resort to a Google search. And this was fascinating – and scary – to me: I searched Google for “check if private key matches certificate”. I got plenty of instructions. Almost all of them were wrong. The first result is a page from SSLShopper. They recommend to compare the MD5 hash of the modulus. That they use MD5 is not the problem here, the problem is that this is a naive check only comparing parts of the public key. They even provide a form to check this. (That they ask you to put your private key into a form is a different issue on its own, but at least they have a warning about this and recommend to check locally.)Furthermore we get the same wrong instructions from the University of Wisconsin, Comodo (good that their engineers were smart enough not to rely on their own documentation), tbs internet (“SSL expert since 1996”), ShellHacks, IBM and RapidSSL (aka Symantec). A post on Stackexchange is the only result that actually mentions a proper check for RSA keys. Two more Stackexchange posts are not related to RSA, I haven’t checked their solutions in detail.

Going to Google results page two among some unrelated links we find more wrong instructions and tools from Symantec (Update 2020: Link offline), SSL247 (“Symantec Specialist Partner Website Security” - they learned from the best) and some private blog. A documentation by Aspera (belonging to IBM) at least mentions that you can check the private key, but in an unrelated section of the document. Also we get more tools that ask you to upload your private key and then not properly check it from SSLChecker.com, the SSL Store (Symantec “Website Security Platinum Partner”), GlobeSSL (“in SSL we trust”) and - well - RapidSSL.

Documented Security Vulnerability in OpenSSL

So if people google for instructions they’ll almost inevitably end up with non-working instructions or tools. But what about other options? Let’s say we want to automate this and have a tool that verifies whether a certificate matches a private key using OpenSSL. We may end up finding that OpenSSL has a function

x509_check_private_key() that can be used to “check the consistency of a private key with the public key in an X509 certificate or certificate request”. Sounds like exactly what we need, right?Well, until you read the full docs and find out that it has a BUGS section: “The check_private_key functions don't check if k itself is indeed a private key or not. It merely compares the public materials (e.g. exponent and modulus of an RSA key) and/or key parameters (e.g. EC params of an EC key) of a key pair.”

I think this is a security vulnerability in OpenSSL (discussion with OpenSSL here). And that doesn’t change just because it’s a documented security vulnerability. Notably there are downstream consumers of this function that failed to copy that part of the documentation, see for example the corresponding PHP function (the limitation is however mentioned in a comment by a user).

So how do you really check whether a private key matches a certificate?

Ultimately there are two reliable ways to check whether a private key belongs to a certificate. One way is to check whether the various values of the private key are consistent and then check whether the public key matches. For example a private key contains values p and q that are the prime factors of the public modulus N. If you multiply them and compare them to N you can be sure that you have a legitimate private key. It’s one of the core properties of RSA that it’s secure based on the assumption that it’s not feasible to calculate p and q from N.

You can use OpenSSL to check the consistency of a private key:

openssl rsa -in [privatekey] -checkFor my forged keys it will tell you:

RSA key error: n does not equal p qYou can then compare the public key, for example by calculating the so-called SPKI SHA256 hash:

openssl pkey -in [privatekey] -pubout -outform der | sha256sumopenssl x509 -in [certificate] -pubkey |openssl pkey -pubin -pubout -outform der | sha256sumAnother way is to sign a message with the private key and then verify it with the public key. You could do it like this:

openssl x509 -in [certificate] -noout -pubkey > pubkey.pemdd if=/dev/urandom of=rnd bs=32 count=1openssl rsautl -sign -pkcs -inkey [privatekey] -in rnd -out sigopenssl rsautl -verify -pkcs -pubin -inkey pubkey.pem -in sig -out checkcmp rnd checkrm rnd check sig pubkey.pemIf cmp produces no output then the signature matches.

As this is all quite complex due to OpenSSLs arcane command line interface I have put this all together in a script. You can pass a certificate and a private key, both in ASCII/PEM format, and it will do both checks.

Summary

Symantec did a major blunder by revoking a certificate based on completely forged evidence. There’s hardly any excuse for this and it indicates that they operate a certificate authority without a proper understanding of the cryptographic background.

Apart from that the problem of checking whether a private key and certificate match seems to be largely documented wrong. Plenty of erroneous guides and tools may cause others to fall for the same trap.

Update: Symantec answered with a blog post.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

16:58

| Comments (12)

| Trackback (1)

Defined tags for this entry: ca, certificate, certificateauthority, openssl, privatekey, rsa, ssl, symantec, tls, x509

Friday, May 19. 2017

The Problem with OCSP Stapling and Must Staple and why Certificate Revocation is still broken

Update (2020-09-16): While three years old, people still find this blog post when looking for information about Stapling problems. For Apache the situation has improved considerably in the meantime: mod_md, which is part of recent apache releases, comes with a new stapling implementation which you can enable with the setting MDStapling on.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken

Well, here come the implementations. While a lot of protocols use TLS, the most common use case is the web and HTTPS. According to Netcraft statistics by far the biggest share of active sites on the Internet run on Apache (about 46%), followed by Nginx (about 20 %). It’s reasonable to say that if these technologies should provide a solution for revocation they should be usable with the major products in that area. On the server side this is only OCSP Stapling, as OCSP Must Staple only needs to be checked by the client.

What would you expect from a working OCSP Stapling implementation? It should try to avoid a situation where it’s unable to send out a valid OCSP response. Thus roughly what it should do is to fetch a valid OCSP response as soon as possible and cache it until it gets a new one or it expires. It should furthermore try to fetch a new OCSP response long before the old one expires (ideally several days). And it should never throw away a valid response unless it has a newer one. Google developer Ryan Sleevi wrote a detailed description of what a proper OCSP Stapling implementation could look like.

Apache does none of this.

If Apache tries to renew the OCSP response and gets an error from the OCSP server – e. g. because it’s currently malfunctioning – it will throw away the existing, still valid OCSP response and replace it with the error. It will then send out stapled OCSP errors. Which makes zero sense. Firefox will show an error if it sees this. This has been reported in 2014 and is still unfixed.

Now there’s an option in Apache to avoid this behavior: SSLStaplingReturnResponderErrors. It’s defaulting to on. If you switch it off you won’t get sane behavior (that is – use the still valid, cached response), instead Apache will disable Stapling for the time it gets errors from the OCSP server. That’s better than sending out errors, but it obviously makes using Must Staple a no go.

It gets even crazier. I have set this option, but this morning I still got complaints that Firefox users were seeing errors. That’s because in this case the OCSP server wasn’t sending out errors, it was completely unavailable. For that situation Apache has a feature that will fake a tryLater error to send out to the client. If you’re wondering how that makes any sense: It doesn’t. The “tryLater” error of OCSP isn’t useful at all in TLS, because you can’t try later during a handshake which only lasts seconds.

This is controlled by another option: SSLStaplingFakeTryLater. However if we read the documentation it says “Only effective if SSLStaplingReturnResponderErrors is also enabled.” So if we disabled SSLStapingReturnResponderErrors this shouldn’t matter, right? Well: The documentation is wrong.

There are more problems: Apache doesn’t get the OCSP responses on startup, it only fetches them during the handshake. This causes extra latency on the first connection and increases the risk of hitting a situation where you don’t have a valid OCSP response. Also cached OCSP responses don’t survive server restarts, they’re kept in an in-memory cache.

There’s currently no way to configure Apache to handle OCSP stapling in a reasonable way. Here’s the configuration I use, which will at least make sure that it won’t send out errors and cache the responses a bit longer than it does by default:

I’m less familiar with Nginx, but from what I hear it isn’t much better either. According to this blogpost it doesn’t fetch OCSP responses on startup and will send out the first TLS connections without stapling even if it’s enabled. Here’s a blog post that recommends to work around this by connecting to all configured hosts after the server has started.

To summarize: This is all a big mess. Both Apache and Nginx have OCSP Stapling implementations that are essentially broken. As long as you’re using either of those then enabling Must-Staple is a reliable way to shoot yourself in the foot and get into trouble. Don’t enable it if you plan to use Apache or Nginx.

Certificate revocation is broken. It has been broken since the invention of SSL and it’s still broken. OCSP Stapling and OCSP Must-Staple could fix it in theory. But that would require working and stable implementations in the most widely used server products.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken

Well, here come the implementations. While a lot of protocols use TLS, the most common use case is the web and HTTPS. According to Netcraft statistics by far the biggest share of active sites on the Internet run on Apache (about 46%), followed by Nginx (about 20 %). It’s reasonable to say that if these technologies should provide a solution for revocation they should be usable with the major products in that area. On the server side this is only OCSP Stapling, as OCSP Must Staple only needs to be checked by the client.

What would you expect from a working OCSP Stapling implementation? It should try to avoid a situation where it’s unable to send out a valid OCSP response. Thus roughly what it should do is to fetch a valid OCSP response as soon as possible and cache it until it gets a new one or it expires. It should furthermore try to fetch a new OCSP response long before the old one expires (ideally several days). And it should never throw away a valid response unless it has a newer one. Google developer Ryan Sleevi wrote a detailed description of what a proper OCSP Stapling implementation could look like.

Apache does none of this.

If Apache tries to renew the OCSP response and gets an error from the OCSP server – e. g. because it’s currently malfunctioning – it will throw away the existing, still valid OCSP response and replace it with the error. It will then send out stapled OCSP errors. Which makes zero sense. Firefox will show an error if it sees this. This has been reported in 2014 and is still unfixed.

Now there’s an option in Apache to avoid this behavior: SSLStaplingReturnResponderErrors. It’s defaulting to on. If you switch it off you won’t get sane behavior (that is – use the still valid, cached response), instead Apache will disable Stapling for the time it gets errors from the OCSP server. That’s better than sending out errors, but it obviously makes using Must Staple a no go.

It gets even crazier. I have set this option, but this morning I still got complaints that Firefox users were seeing errors. That’s because in this case the OCSP server wasn’t sending out errors, it was completely unavailable. For that situation Apache has a feature that will fake a tryLater error to send out to the client. If you’re wondering how that makes any sense: It doesn’t. The “tryLater” error of OCSP isn’t useful at all in TLS, because you can’t try later during a handshake which only lasts seconds.

This is controlled by another option: SSLStaplingFakeTryLater. However if we read the documentation it says “Only effective if SSLStaplingReturnResponderErrors is also enabled.” So if we disabled SSLStapingReturnResponderErrors this shouldn’t matter, right? Well: The documentation is wrong.

There are more problems: Apache doesn’t get the OCSP responses on startup, it only fetches them during the handshake. This causes extra latency on the first connection and increases the risk of hitting a situation where you don’t have a valid OCSP response. Also cached OCSP responses don’t survive server restarts, they’re kept in an in-memory cache.

There’s currently no way to configure Apache to handle OCSP stapling in a reasonable way. Here’s the configuration I use, which will at least make sure that it won’t send out errors and cache the responses a bit longer than it does by default:

SSLStaplingCache shmcb:/var/tmp/ocsp-stapling-cache/cache(128000000)

SSLUseStapling on

SSLStaplingResponderTimeout 2

SSLStaplingReturnResponderErrors off

SSLStaplingFakeTryLater off

SSLStaplingStandardCacheTimeout 86400I’m less familiar with Nginx, but from what I hear it isn’t much better either. According to this blogpost it doesn’t fetch OCSP responses on startup and will send out the first TLS connections without stapling even if it’s enabled. Here’s a blog post that recommends to work around this by connecting to all configured hosts after the server has started.

To summarize: This is all a big mess. Both Apache and Nginx have OCSP Stapling implementations that are essentially broken. As long as you’re using either of those then enabling Must-Staple is a reliable way to shoot yourself in the foot and get into trouble. Don’t enable it if you plan to use Apache or Nginx.

Certificate revocation is broken. It has been broken since the invention of SSL and it’s still broken. OCSP Stapling and OCSP Must-Staple could fix it in theory. But that would require working and stable implementations in the most widely used server products.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

23:25

| Comments (5)

| Trackback (1)

Defined tags for this entry: apache, certificates, cryptography, encryption, https, letsencrypt, nginx, ocsp, ocspstapling, revocation, ssl, tls

Friday, December 11. 2015

What got us into the SHA1 deprecation mess?

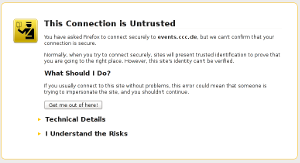

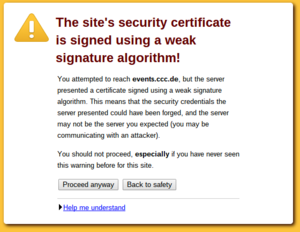

Important notice: After I published this text Adam Langley pointed out that a major assumption is wrong: Android 2.2 actually has no problems with SHA256-signed certificates. I checked this myself and in an emulated Android 2.2 instance I was able to connect to a site with a SHA256-signed certificate. I apologize for that error, I trusted the Cloudflare blog post on that. This whole text was written with that assumption in mind, so it's hard to change without rewriting it from scratch. I have marked the parts that are likely to be questioned. Most of it is still true and Android 2 has a problematic TLS stack (no SNI), but the specific claim regarding SHA256-certificates seems wrong.

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.

SHA1 broken since 2005

The SHA1 algorithm is a cryptographic hash algorithm and it has been know for quite some time that its security isn't great. In 2005 the Chinese researcher Wang Xiaoyun published an attack that would allow to create a collision for SHA1. The attack wasn't practically tested, because it is quite expensive to do so, but it was clear that a financially powerful adversary would be able to perform such an attack. A year before the even older hash function MD5 was broken practically, in 2008 this led to a practical attack against the issuance of TLS certificates. In the past years browsers pushed for the deprecation of SHA1 certificates and it was agreed that starting January 2016 no more certificates signed with SHA1 must be issued, instead the stronger algorithm SHA256 should be used. Many felt this was already far too late, given that it's been ten years since we knew that SHA1 is broken.

A few weeks before the SHA1 deadline Cloudflare and Facebook now question this deprecation plan. They have some strong arguments. According to Cloudflare's numbers there is still a significant number of users that use browsers without support for SHA256-certificates. And those users are primarily in relatively poor, repressive or war-ridden countries. The top three on the list are China, Cameroon and Yemen. Their argument, which is hard to argue with, is that cutting of SHA1 support will primarily affect the poorest users.

Cloudflare and Facebook propose a new mechanism to get legacy validated certificates. These certificates should only be issued to site operators that will use a technology to separate users based on their TLS handshake and only show the SHA1 certificate to those that use an older browser. Facebook already published the code to do that, Cloudflare also announced that they will release the code of their implementation. Right now it's still possible to get SHA1 certificates, therefore those companies could just register them now and use them for three years to come. Asking for this legacy validation process indicates that Cloudflare and Facebook don't see this as a short-term workaround, instead they seem to expect that this will be a solution they use for years to come, without any decided end date.

It's a tough question whether or not this is a good idea. But I want to ask a different question: Why do we have this problem in the first place, why is it hard to fix and what can we do to prevent similar things from happening in the future? One thing is remarkable about this problem: It's a software problem. In theory software can be patched and the solution to a software problem is to update the software. So why can't we just provide updates and get rid of these legacy problems?

Windows XP and Android Froyo

According to Cloudflare there are two main reason why so many users can't use sites with SHA256 certificates: Windows XP and old versions of Android (SHA256 support was added in Android 2.3, so this affects mostly Android 2.2 aka Froyo). We all know that Windows XP shouldn't be used any more, that its support has ended in 2014. But that clearly clashes with realities. People continue using old systems and Windows XP is still alive in many countries, especially in China.

But I'm inclined to say that Windows XP is probably the smaller problem here. With Service Pack 3 Windows XP introduced support for SHA256 certificates. By using an alternative browser (Firefox is still supported on Windows XP if you install SP3) it is even possible to have a relatively safe browsing experience. I'm not saying that I recommend it, but given the circumstances advising people how to update their machines and to install an alternative browser can party provide a way to reduce the reliance on broken algorithms.

The Updatability Gap

But the problem with Android is much, much worse, and I think this brings us to probably the biggest problem in IT security we have today. For years one of the most important messages to users in IT security was: Keep your software up to date. But at the same time the industry has created new software ecosystems where very often that just isn't an option.

In the Android case Google says that it's the responsibility of device vendors and carriers to deliver security updates. The dismal reality is that in most cases they just don't do that. But even if device vendors are willing to provide updates it usually only happens for a very short time frame. Google only supports the latest two Android major versions. For them Android 2.2 is ancient history, but for a significant portion of users it is still the operating system they use.

What we have here is a huge gap between the time frame devices get security updates and the time frame users use these devices. And pretty much everything tells us that the vendors in the Internet of Things ignore these problems even more and the updatability gap will become larger. Many became accustomed to the idea that phones get only used for a year, but it's hard to imagine how that's going to work for a fridge. What's worse: Whether you look at phones or other devices, they often actively try to prevent users from replacing the software on their own.

This is a hard problem to tackle, but it's probably the biggest problem IT security is facing in the upcoming years. We need to get a working concept for updates – a concept that matches the real world use of devices.

Substandard TLS implementations

But there's another part of the SHA1 deprecation story. As I wrote above since 2005 it was clear that SHA1 needs to go away. That was three years before Android was even published. But in 2010 Android still wasn't capable of supporting SHA256 certificates. Google has to take a large part of the blame here. While these days they are at the forefront of deploying high quality and up to date TLS stacks, they shipped a substandard and outdated TLS implementation in Android 2. (Another problem is that all Android 2 versions don't support Server Name Indication, a technology that allows to use different certificates for different hosts on one IP address.)

This is not the first such problem we are facing. With the POODLE vulnerability it became clear that the old SSL version 3 is broken beyond repair and it's impossible to use it safely. The only option was to deprecate it. However doing so was painful, because a lot of devices out there didn't support better protocols. The successor protocol TLS 1.0 (SSL/TLS versions are confusing, I know) was released in 1999. But the problem wasn't that people were using devices older than 1999. The problem was that many vendors shipped devices and software that only supported SSLv3 in recent years.

One example was Windows Phone 7. In 2011 this was the operating system on Microsoft's and Nokia's flagship product, the Lumia 800. Its mail client is unable to connect to servers not supporting SSLv3. It is just inexcusable that in 2011 Microsoft shipped a product which only supported a protocol that was deprecated 12 years earlier. It's even more inexcusable that they refused to fix it later, because it only came to light when Windows Phone 7 was already out of support.

The takeaway from this is that sloppiness from the past can bite you many years later. And this is what we're seeing with Android 2.2 now.

But you might think given these experiences this has stopped today. It hasn't. The largest deployer of substandard TLS implementations these days is Apple. Up until recently (before El Capitan) Safari on OS X didn't support any authenticated encryption cipher suites with AES-GCM and relied purely on the CBC block mode. The CBC cipher suites are a hot candidate for the next deprecation plan, because 2013 the Lucky 13 attack has shown that they are really hard to implement safely. The situation for applications other than the browser (Mail etc.) is even worse on Apple devices. They only support the long deprecated TLS 1.0 protocol – and that's still the case on their latest systems.

There is widespread agreement in the TLS and cryptography community that the only really safe way to use TLS these days is TLS 1.2 with a cipher suite using forward secrecy and authenticated encryption (AES-GCM is the only standardized option for that right now, however ChaCha20/Poly1304 will come soon).

Conclusions

For the specific case of the SHA1 deprecation I would propose the following: Cloudflare and Facebook should go ahead with their handshake workaround for the next years, as long as their current certificates are valid. But this time should be used to find solutions. Reach out to those users visiting your sites and try to understand what could be done to fix the situation. For the Windows XP users this is relatively easy – help them updating their machines and preferably install another browser, most likely that'd be Firefox. For Android there is probably no easy solution, but we have some of the largest Internet companies involved here. Please seriously ask the question: Is it possible to retrofit Android 2.2 with a reasonable TLS stack? What ways are there to get that onto the devices? Is it possible to install a browser app with its own TLS stack on at least some of those devices? This probably doesn't work in most cases, because on many cheap phones there just isn't enough space to install large apps. In the long term I hope that the tech community will start tackling the updatability problem.

In the TLS space I think we need to make sure that no more substandard TLS deployments get shipped today. Point out the vendors that do so and pressure them to stop. It wasn't acceptable in 2010 to ship TLS with long-known problems and it isn't acceptable today.

Image source: Wikimedia Commons

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.SHA1 broken since 2005

The SHA1 algorithm is a cryptographic hash algorithm and it has been know for quite some time that its security isn't great. In 2005 the Chinese researcher Wang Xiaoyun published an attack that would allow to create a collision for SHA1. The attack wasn't practically tested, because it is quite expensive to do so, but it was clear that a financially powerful adversary would be able to perform such an attack. A year before the even older hash function MD5 was broken practically, in 2008 this led to a practical attack against the issuance of TLS certificates. In the past years browsers pushed for the deprecation of SHA1 certificates and it was agreed that starting January 2016 no more certificates signed with SHA1 must be issued, instead the stronger algorithm SHA256 should be used. Many felt this was already far too late, given that it's been ten years since we knew that SHA1 is broken.

A few weeks before the SHA1 deadline Cloudflare and Facebook now question this deprecation plan. They have some strong arguments. According to Cloudflare's numbers there is still a significant number of users that use browsers without support for SHA256-certificates. And those users are primarily in relatively poor, repressive or war-ridden countries. The top three on the list are China, Cameroon and Yemen. Their argument, which is hard to argue with, is that cutting of SHA1 support will primarily affect the poorest users.

Cloudflare and Facebook propose a new mechanism to get legacy validated certificates. These certificates should only be issued to site operators that will use a technology to separate users based on their TLS handshake and only show the SHA1 certificate to those that use an older browser. Facebook already published the code to do that, Cloudflare also announced that they will release the code of their implementation. Right now it's still possible to get SHA1 certificates, therefore those companies could just register them now and use them for three years to come. Asking for this legacy validation process indicates that Cloudflare and Facebook don't see this as a short-term workaround, instead they seem to expect that this will be a solution they use for years to come, without any decided end date.

It's a tough question whether or not this is a good idea. But I want to ask a different question: Why do we have this problem in the first place, why is it hard to fix and what can we do to prevent similar things from happening in the future? One thing is remarkable about this problem: It's a software problem. In theory software can be patched and the solution to a software problem is to update the software. So why can't we just provide updates and get rid of these legacy problems?

Windows XP and Android Froyo

According to Cloudflare there are two main reason why so many users can't use sites with SHA256 certificates: Windows XP and old versions of Android (SHA256 support was added in Android 2.3, so this affects mostly Android 2.2 aka Froyo). We all know that Windows XP shouldn't be used any more, that its support has ended in 2014. But that clearly clashes with realities. People continue using old systems and Windows XP is still alive in many countries, especially in China.

But I'm inclined to say that Windows XP is probably the smaller problem here. With Service Pack 3 Windows XP introduced support for SHA256 certificates. By using an alternative browser (Firefox is still supported on Windows XP if you install SP3) it is even possible to have a relatively safe browsing experience. I'm not saying that I recommend it, but given the circumstances advising people how to update their machines and to install an alternative browser can party provide a way to reduce the reliance on broken algorithms.

The Updatability Gap

But the problem with Android is much, much worse, and I think this brings us to probably the biggest problem in IT security we have today. For years one of the most important messages to users in IT security was: Keep your software up to date. But at the same time the industry has created new software ecosystems where very often that just isn't an option.

In the Android case Google says that it's the responsibility of device vendors and carriers to deliver security updates. The dismal reality is that in most cases they just don't do that. But even if device vendors are willing to provide updates it usually only happens for a very short time frame. Google only supports the latest two Android major versions. For them Android 2.2 is ancient history, but for a significant portion of users it is still the operating system they use.

What we have here is a huge gap between the time frame devices get security updates and the time frame users use these devices. And pretty much everything tells us that the vendors in the Internet of Things ignore these problems even more and the updatability gap will become larger. Many became accustomed to the idea that phones get only used for a year, but it's hard to imagine how that's going to work for a fridge. What's worse: Whether you look at phones or other devices, they often actively try to prevent users from replacing the software on their own.

This is a hard problem to tackle, but it's probably the biggest problem IT security is facing in the upcoming years. We need to get a working concept for updates – a concept that matches the real world use of devices.

Substandard TLS implementations

But there's another part of the SHA1 deprecation story. As I wrote above since 2005 it was clear that SHA1 needs to go away. That was three years before Android was even published. But in 2010 Android still wasn't capable of supporting SHA256 certificates. Google has to take a large part of the blame here. While these days they are at the forefront of deploying high quality and up to date TLS stacks, they shipped a substandard and outdated TLS implementation in Android 2. (Another problem is that all Android 2 versions don't support Server Name Indication, a technology that allows to use different certificates for different hosts on one IP address.)

This is not the first such problem we are facing. With the POODLE vulnerability it became clear that the old SSL version 3 is broken beyond repair and it's impossible to use it safely. The only option was to deprecate it. However doing so was painful, because a lot of devices out there didn't support better protocols. The successor protocol TLS 1.0 (SSL/TLS versions are confusing, I know) was released in 1999. But the problem wasn't that people were using devices older than 1999. The problem was that many vendors shipped devices and software that only supported SSLv3 in recent years.

One example was Windows Phone 7. In 2011 this was the operating system on Microsoft's and Nokia's flagship product, the Lumia 800. Its mail client is unable to connect to servers not supporting SSLv3. It is just inexcusable that in 2011 Microsoft shipped a product which only supported a protocol that was deprecated 12 years earlier. It's even more inexcusable that they refused to fix it later, because it only came to light when Windows Phone 7 was already out of support.

The takeaway from this is that sloppiness from the past can bite you many years later. And this is what we're seeing with Android 2.2 now.

But you might think given these experiences this has stopped today. It hasn't. The largest deployer of substandard TLS implementations these days is Apple. Up until recently (before El Capitan) Safari on OS X didn't support any authenticated encryption cipher suites with AES-GCM and relied purely on the CBC block mode. The CBC cipher suites are a hot candidate for the next deprecation plan, because 2013 the Lucky 13 attack has shown that they are really hard to implement safely. The situation for applications other than the browser (Mail etc.) is even worse on Apple devices. They only support the long deprecated TLS 1.0 protocol – and that's still the case on their latest systems.

There is widespread agreement in the TLS and cryptography community that the only really safe way to use TLS these days is TLS 1.2 with a cipher suite using forward secrecy and authenticated encryption (AES-GCM is the only standardized option for that right now, however ChaCha20/Poly1304 will come soon).

Conclusions

For the specific case of the SHA1 deprecation I would propose the following: Cloudflare and Facebook should go ahead with their handshake workaround for the next years, as long as their current certificates are valid. But this time should be used to find solutions. Reach out to those users visiting your sites and try to understand what could be done to fix the situation. For the Windows XP users this is relatively easy – help them updating their machines and preferably install another browser, most likely that'd be Firefox. For Android there is probably no easy solution, but we have some of the largest Internet companies involved here. Please seriously ask the question: Is it possible to retrofit Android 2.2 with a reasonable TLS stack? What ways are there to get that onto the devices? Is it possible to install a browser app with its own TLS stack on at least some of those devices? This probably doesn't work in most cases, because on many cheap phones there just isn't enough space to install large apps. In the long term I hope that the tech community will start tackling the updatability problem.

In the TLS space I think we need to make sure that no more substandard TLS deployments get shipped today. Point out the vendors that do so and pressure them to stop. It wasn't acceptable in 2010 to ship TLS with long-known problems and it isn't acceptable today.

Image source: Wikimedia Commons

Posted by Hanno Böck

in Cryptography, English, Security

at

15:24

| Comment (1)

| Trackbacks (0)

Defined tags for this entry: android, certificate, cloudflare, cryptography, facebook, hash, https, security, sha1, sha256, ssl, tls, updates, windowsxp

Monday, November 30. 2015

A little POODLE left in GnuTLS (old versions)

tl;dr Older GnuTLS versions (2.x) fail to check the first byte of the padding in CBC modes. Various stable Linux distributions, including Ubuntu LTS and Debian wheezy (oldstable) use this version. Current GnuTLS versions are not affected.

tl;dr Older GnuTLS versions (2.x) fail to check the first byte of the padding in CBC modes. Various stable Linux distributions, including Ubuntu LTS and Debian wheezy (oldstable) use this version. Current GnuTLS versions are not affected.A few days ago an email on the ssllabs mailing list catched my attention. A Canonical developer had observed that the SSL Labs test would report the GnuTLS version used in Ubuntu 14.04 (the current long time support version) as vulnerable to the POODLE TLS vulnerability, while other tests for the same vulnerability showed no such issue.

A little background: The original POODLE vulnerability is a weakness of the old SSLv3 protocol that's now officially deprecated. POODLE is based on the fact that SSLv3 does not specify the padding of the CBC modes and the padding bytes can contain arbitrary bytes. A while after POODLE Adam Langley reported that there is a variant of POODLE in TLS, however while the original POODLE is a protocol issue the POODLE TLS vulnerability is an implementation issue. TLS specifies the values of the padding bytes, but some implementations don't check them. Recently Yngve Pettersen reported that there are different variants of this POODLE TLS vulnerability: Some implementations only check parts of the padding. This is the reason why sometimes different tests lead to different results. A test that only changes one byte of the padding will lead to different results than one that changes all padding bytes. Yngve Pettersen uncovered POODLE variants in devices from Cisco (Cavium chip) and Citrix.

I looked at the Ubuntu issue and found that this was exactly such a case of an incomplete padding check: The first byte wasn't checked. I believe this might explain some of the vulnerable hosts Yngve Pettersen found. This is the code:

for (i = 2; i <= pad; i++)

{

if (ciphertext.data[ciphertext.size - i] != pad)

pad_failed = GNUTLS_E_DECRYPTION_FAILED;

}The padding in TLS is defined that the rightmost byte of the last block contains the length of the padding. This value is also used in all padding bytes. However the length field itself is not part of the padding. Therefore if we have e. g. a padding length of three this would result in four bytes with the value 3. The above code misses one byte. i goes from 2 (setting block length minus 2) to pad (block length minus pad length), which sets pad length minus one bytes. To correct it we need to change the loop to end with pad+1. The code is completely reworked in current GnuTLS versions, therefore they are not affected. Upstream has officially announced the end of life for GnuTLS 2, but some stable Linux distributions still use it.

The story doesn't end here: After I found this bug I talked about it with Juraj Somorovsky. He mentioned that he already read about this before: In the paper of the Lucky Thirteen attack. That was published in 2013 by Nadhem AlFardan and Kenny Paterson. Here's what the Lucky Thirteen paper has to say about this issue on page 13:

for (i = 2; i < pad; i++)

{

if (ciphertext->data[ciphertext->size - i] != ciphertext->data[ciphertext->size - 1])

pad_failed = GNUTLS_E_DECRYPTION_FAILED;

}It is not hard to see that this loop should also cover the edge case i=pad in order to carry out a full padding check. This means that one byte of what should be padding actually has a free format.

If you look closely you will see that this code is actually different from the one I quoted above. The reason is that the GnuTLS version in question already contained a fix that was applied in response to the Lucky Thirteen paper. However what the Lucky Thirteen paper missed is that the original check was off by two bytes, not just one byte. Therefore it only got an incomplete fix reducing the attack surface from two bytes to one.

In a later commit this whole code was reworked in response to the Lucky Thirteen attack and there the problem got fixed for good. However that change never made it into version 2 of GnuTLS. Red Hat / CentOS packages contain a backport patch of those changes, therefore they are not affected.

You might wonder what the impact of this bug is. I'm not totally familiar with the details of all the possible attacks, but the POODLE attack gets increasingly harder if fewer bytes of the padding can be freely set. It most likely is impossible if there is only one byte. The Lucky Thirteen paper says: "This would enable, for example, a variant of the short MAC attack of [28] even if variable length padding was not supported.". People that know more about crypto than I do should be left with the judgement whether this might be practically exploitabe.

Fixing this bug is a simple one-line patch I have attached here. This will silence all POODLE checks, however this doesn't apply all the changes that were made in response to the Lucky Thirteen attack. I'm not sure if the code is practically vulnerable, but Lucky Thirteen is a tricky issue, recently a variant of that attack was shown against Amazon's s2n library.

The missing padding check for the first byte got CVE-2015-8313 assigned. Currently I'm aware of Ubuntu LTS (now fixed) and Debian oldstable (Wheezy) being affected.

Posted by Hanno Böck

in Code, Cryptography, English, Linux, Security

at

20:32

| Comments (0)

| Trackbacks (0)

Defined tags for this entry: cbc, gnutls, luckythirteen, padding, poodle, security, ssl, tls, vulnerability

Monday, November 23. 2015

Superfish 2.0: Dangerous Certificate on Dell Laptops breaks encrypted HTTPS Connections

tl;dr Dell laptops come preinstalled with a root certificate and a corresponding private key. That completely compromises the security of encrypted HTTPS connections. I've provided an online check, affected users should delete the certificate.

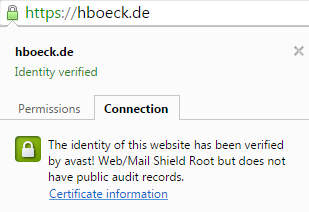

tl;dr Dell laptops come preinstalled with a root certificate and a corresponding private key. That completely compromises the security of encrypted HTTPS connections. I've provided an online check, affected users should delete the certificate.It seems that Dell hasn't learned anything from the Superfish-scandal earlier this year: Laptops from the company come with a preinstalled root certificate that will be accepted by browsers. The private key is also installed on the system and has been published now. Therefore attackers can use Man in the Middle attacks against Dell users to show them manipulated HTTPS webpages or read their encrypted data.

The certificate, which is installed in the system's certificate store under the name "eDellRoot", gets installed by a software called Dell Foundation Services. This software is still available on Dell's webpage. According to the somewhat unclear description from Dell it is used to provide "foundational services facilitating customer serviceability, messaging and support functions".

The private key of this certificate is marked as non-exportable in the Windows certificate store. However this provides no real protection, there are Tools to export such non-exportable certificate keys. A user of the plattform Reddit has posted the Key there.

For users of the affected Laptops this is a severe security risk. Every attacker can use this root certificate to create valid certificates for arbitrary web pages. Even HTTP Public Key Pinning (HPKP) does not protect against such attacks, because browser vendors allow locally installed certificates to override the key pinning protection. This is a compromise in the implementation that allows the operation of so-called TLS interception proxies.

I was made aware of this issue a while ago by Kristof Mattei. We asked Dell for a statement three weeks ago and didn't get any answer.

It is currently unclear which purpose this certificate served. However it seems unliklely that it was placed there deliberately for surveillance purposes. In that case Dell wouldn't have installed the private key on the system.

Affected are only users that use browsers or other applications that use the system's certificate store. Among the common Windows browsers this affects the Internet Explorer, Edge and Chrome. Not affected are Firefox-users, Mozilla's browser has its own certificate store.

Users of Dell laptops can check if they are affected with an online check tool. Affected users should immediately remove the certificate in the Windows certificate manager. The certificate manager can be started by clicking "Start" and typing in "certmgr.msc". The "eDellRoot" certificate can be found under "Trusted Root Certificate Authorities". You also need to remove the file Dell.Foundation.Agent.Plugins.eDell.dll, Dell has now posted an instruction and a removal tool.

This incident is almost identical with the Superfish-incident. Earlier this year it became public that Lenovo had preinstalled a software called Superfish on its Laptops. Superfish intercepts HTTPS-connections to inject ads. It used a root certificate for that and the corresponding private key was part of the software. After that incident several other programs with the same vulnerability were identified, they all used a software module called Komodia. Similar vulnerabilities were found in other software products, for example in Privdog and in the ad blocker Adguard.

This article is mostly a translation of a German article I wrote for Golem.de.

Image source and license: Wistula / Wikimedia Commons, Creative Commons by 3.0

Update (2015-11-24): Second Dell root certificate DSDTestProvider

I just found out that there is a second root certificate installed with some Dell software that causes exactly the same issue. It is named DSDTestProvider and comes with a software called Dell System Detect. Unlike the Dell Foundations Services this one does not need a Dell computer to be installed, therefore it was trivial to extract the certificate and the private key. My online test now checks both certificates. This new certificate is not covered by Dell's removal instructions yet.

Dell has issued an official statement on their blog and in the comment section a user mentioned this DSDTestProvider certificate. After googling what DSD might be I quickly found it. There have been concerns about the security of Dell System Detect before, Malwarebytes has an article about it from April mentioning that it was vulnerable to a remote code execution vulnerability.

Update (2015-11-26): Service tag information disclosure

Another unrelated issue on Dell PCs was discovered in a tool called Dell Foundation Services. It allows webpages to read an unique service tag. There's also an online check.

Posted by Hanno Böck

in Cryptography, English, Security

at

17:39

| Comments (7)

| Trackbacks (0)

Defined tags for this entry: browser, certificate, cryptography, dell, edellroot, encryption, https, maninthemiddle, security, ssl, superfish, tls, vulnerability

Saturday, September 5. 2015

TLS interception considered harmful - video and slides

At the recent Chaos Communication Camp I held a talk summarizing the problems with TLS interception or Man-in-the-Middle proxies. This was initially motivated by the occurence of Superfish and my own investigations on Privdog, but I learned in the past month that this is a far bigger problem. I was surprised and somewhat shocked to learn that it seems to be almost a default feature of various security products, especially in the so-called "Enterprise" sector. I hope I have contributed to a discussion about the dangers of these devices and software products.

There is a video recording of the talk avaliable and I'm also sharing the slides (also on Slideshare).

I noticed after the talk that I had a mistake on the slides: When describing Filippo's generic attack on Komodia software I said and wrote SNI (Server Name Indication) on the slides. However the feature that is used here is called SAN (Subject Alt Name). SNI is a feature to have different certificates on one IP, SAN is a feature to have different domain names on one certificate, so they're related and I got confused, sorry for that.

I got a noteworthy comment in the discussion after the talk I also would like to share: These TLS interception proxies by design break client certificate authentication. Client certificates are rarely used, however that's unfortunate, because they are a very useful feature of TLS. This is one more reason to avoid any software that is trying to mess with your TLS connections.

There is a video recording of the talk avaliable and I'm also sharing the slides (also on Slideshare).

I noticed after the talk that I had a mistake on the slides: When describing Filippo's generic attack on Komodia software I said and wrote SNI (Server Name Indication) on the slides. However the feature that is used here is called SAN (Subject Alt Name). SNI is a feature to have different certificates on one IP, SAN is a feature to have different domain names on one certificate, so they're related and I got confused, sorry for that.

I got a noteworthy comment in the discussion after the talk I also would like to share: These TLS interception proxies by design break client certificate authentication. Client certificates are rarely used, however that's unfortunate, because they are a very useful feature of TLS. This is one more reason to avoid any software that is trying to mess with your TLS connections.

Posted by Hanno Böck

in Computer culture, English, Security

at

20:54

| Comments (0)

| Trackbacks (0)

Sunday, April 26. 2015

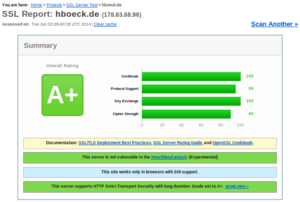

How Kaspersky makes you vulnerable to the FREAK attack and other ways Antivirus software lowers your HTTPS security

Lately a lot of attention has been payed to software like Superfish and Privdog that intercepts TLS connections to be able to manipulate HTTPS traffic. These programs had severe (technically different) vulnerabilities that allowed attacks on HTTPS connections.

Lately a lot of attention has been payed to software like Superfish and Privdog that intercepts TLS connections to be able to manipulate HTTPS traffic. These programs had severe (technically different) vulnerabilities that allowed attacks on HTTPS connections.What these tools do is a widespread method. They install a root certificate into the user's browser and then they perform a so-called Man in the Middle attack. They present the user a certificate generated on the fly and manage the connection to HTTPS servers themselves. Superfish and Privdog did this in an obviously wrong way, Superfish by using the same root certificate on all installations and Privdog by just accepting every invalid certificate from web pages. What about other software that also does MitM interception of HTTPS traffic?

Antivirus software intercepts your HTTPS traffic

Many Antivirus applications and other security products use similar techniques to intercept HTTPS traffic. I had a closer look at three of them: Avast, Kaspersky and ESET. Avast enables TLS interception by default. By default Kaspersky intercepts connections to certain web pages (e. g. banking), there is an option to enable interception by default. In ESET TLS interception is generally disabled by default and can be enabled with an option.

When a security product intercepts HTTPS traffic it is itself responsible to create a TLS connection and check the certificate of a web page. It has to do what otherwise a browser would do. There has been a lot of debate and progress in the way TLS is done in the past years. A number of vulnerabilities in TLS (upon them BEAST, CRIME, Lucky Thirteen, FREAK and others) allowed to learn much more how to do TLS in a secure way. Also, problems with certificate authorities that issued malicious certificates (Diginotar, Comodo, Türktrust and others) led to the development of mitigation technologies like HTTP Public Key Pinning (HPKP) and Certificate Transparency to strengthen the security of Certificate Authorities. Modern browsers protect users much better from various threats than browsers used several years ago.

You may think: "Of course security products like Antivirus applications are fully aware of these developments and do TLS and certificate validation in the best way possible. After all security is their business, so they have to get it right." Unfortunately that's only what's happening in some fantasy IT security world that only exists in the minds of people that listened to industry PR too much. The real world is a bit different: All Antivirus applications I checked lower the security of TLS connections in one way or another.

Disabling of HTTP Public Key Pinning

Each and every TLS intercepting application I tested breaks HTTP Public Key Pinning (HPKP). It is a technology that a lot of people in the IT security community are pretty excited about: It allows a web page to pin public keys of certificates in a browser. On subsequent visits the browser will only accept certificates with these keys. It is a very effective protection against malicious or hacked certificate authorities issuing rogue certificates.

Browsers made a compromise when introducing HPKP. They won't enable the feature for manually installed certificates. The reason for that is simple (although I don't like it): If they hadn't done that they would've broken all TLS interception software like these Antivirus applications. But the applications could do the HPKP checking themselves. They just don't do it.

Kaspersky vulnerable to FREAK and CRIME

Kaspersky vulnerable to FREAK and CRIMEHaving a look at Kaspersky, I saw that it is vulnerable to the FREAK attack, a vulnerability in several TLS libraries that was found recently. Even worse: It seems this issue has been reported publicly in the Kaspersky Forums more than a month ago and it is not fixed yet. Please remember: Kaspersky enables the HTTPS interception by default for sites it considers as especially sensitive, for example banking web pages. Doing that with a known security issue is extremely irresponsible.

I also found a number of other issues. ESET doesn't support TLS 1.2 and therefore uses a less secure encryption algorithm. Avast and ESET don't support OCSP stapling. Kaspersky enables the insecure TLS compression feature that will make a user vulnerable to the CRIME attack. Both Avast and Kaspersky accept nonsensical parameters for Diffie Hellman key exchanges with a size of 8 bit. Avast is especially interesting because it bundles the Google Chrome browser. It installs a browser with advanced HTTPS features and lowers its security right away.