Entries tagged as https

Related tags

adguard komodia maninthemiddle netfiltersdk privdog protocolfilters security superfish tls vulnerability android ac100 certificate cloudflare cryptography facebook gentoo hash laptop linux notebook sha1 sha256 smartbook ssl subnotebook toshiba ubuntu updates windowsxp antivirus bundestrojaner freak kaspersky mcaffee mitm onlinedurchsuchung virus apache certificates datenschutz datensparsamkeit encryption grsecurity itsecurity javascript karlsruhe letsencrypt mod_rewrite nginx ocsp ocspstapling openssl php revocation serendipity sni symlink userdir vortrag web web20 webhosting webmontag websecurity breach cookies crime csrf heist samesite time browser ajax clickjacking content-security-policy csp dell edellroot firefox html khtml konqueror microsoft rss webcore xss ca cacert ccc certificateauthority md5 privatekey rsa sha2 symantec transvalid x509 aiglx blog calendar come2linux compiz eff email english essen games ipv6 kubik ludwigsburg lug lugbk observatory openstreetmap planet rc2 schokokeks simplesharingextensions smime stadtmitte webinale wine xgl 23c3 24c3 4k assembler berlin bewußtsein braunschweig bsideshn c4 cctv copyright darmstadt drm dvb easterhegg entropia freesoftware gpn gpn5 gpn6 gpn7 hacker hannover jugendumweltbewegung jukss kongress königswusterhausen licenses mrmcd mrmcd100b mrmcd101b mysmartgrid ökologie papierlos passwörter pgp philosophie privacy programmieren programming publicdomain querfunk radio rsaoaep rsapss science server slides stuttgart surveillance talk theory tpm überwachung überwachungskameras unicode utf-8 wahl wahlcomputer wahlmaschinen wiesbaden wiki algorithm cccamp11 openleaks pss taz cms joomla update crypto http apt badkeys cccamp cccamp15 chrome chromium cmi crash deb debian deolalikar diffiehellman diploma diplomarbeit enigma fedora fortigate fortinet forwardsecrecy gnupg gpg gsoc key keyexchange keyserver leak libressl math milleniumproblems modulobias nist nss openbsd openid openidconnect openpgp packagemanagement password pnp provablesecurity random revoke rpm schlüssel sha512 signatures sso thesis university verschlüsselung wordpress code freewvs moodle s9y aead aes aok berserk bleichenbacher cfb gajim jabber otr owncloud poodle xmpp administration howto iptables network proxy rfc squid addresssanitizer afl americanfuzzylop bash bufferoverflow fuzzing heartbleed shellshock feed harvester 0days 27c3 adobe afra altushost antivir asan auskunftsanspruch axfr azure barcamp bias bigbluebutton bodensee botnetz bsi bugbounty bundesdatenschutzgesetz bundesverfassungsgericht busby bypass c cbc cellular chcounter clamav clang conflictofinterest cookie cve dingens distributions dns domain drupal eplus fileexfiltration firewall frequency fsfe ftp gallery gcc ghost gimp git glibc gnutls gobi google gsm gstreamer hackerone hacking helma infoleak informationdisclosure internetscan ircbot jodconverter lessig libreoffice luckythirteen malware mantis memorysafety mephisto mobilephones mozilla mpaa mplayer multimedia mysql napster nessus newspaper ntp ntpd openbsc openbts openvas osmocombb padding panda passwordalert passwort pdo phishing python rand redhat rhein salinecourier session shellbot sicherheit snallygaster sniffing spam sqlinjection squirrelmail staatsanwaltschaft stacktrace study subdomain sunras support tlsdate toendacms unicef useafterfree vlc vulnerabilities webapps windows wiretapping xine xsa zerodays zugangsdaten zzuf camera canon date gadgets gammu gnokii gphoto mobile nokia ntimed ntpsec openntpd picture ptp roughtime securetime bugtracker core coredump github nextcloud segfault webroot webserver acid3 base64 css midori script webdesign webkit webstandards demonstration topberlin vorratsdatenspeicherungMonday, April 13. 2020

Generating CRIME safe CSRF Tokens

For a small web project I recently had to consider how to generate secure tokens to prevent Cross Site Request Forgery (CSRF). I wanted to share how I think this should be done, primarily to get some feedback whether other people agree or see room for improvement.

For a small web project I recently had to consider how to generate secure tokens to prevent Cross Site Request Forgery (CSRF). I wanted to share how I think this should be done, primarily to get some feedback whether other people agree or see room for improvement.I am not going to discuss CSRF in general here, I will generally assume that you are aware of how this attack class works. The standard method to protect against CSRF is to add a token to every form that performs an action that is sufficiently random and unique for the session.

Some web applications use the same token for every request or at least the same token for every request of the same kind. However this is problematic due to some TLS attacks.

There are several attacks against TLS and HTTPS that work by generating a large number of requests and then slowly learning about a secret. A common target of such attacks are CSRF tokens. The list of these attacks is long: BEAST, all Padding Oracle attacks (Lucky Thirteen, POODLE, Zombie POODLE, GOLDENDOODLE), RC4 bias attacks and probably a few more that I have forgotten about. The good news is that none of these attacks should be a concern, because they all affect fragile cryptography that is no longer present in modern TLS stacks.

However there is a class of TLS attacks that is still a concern, because there is no good general fix, and these are compression based attacks. The first such attack that has been shown was called CRIME, which targeted TLS compression. TLS compression is no longer used, but a later attack called BREACH used HTTP compression, which is still widely in use and which nobody wants to disable, because HTML code compresses so well. Further improvements of these attacks are known as TIME and HEIST.

I am not going to discuss these attacks in detail, it is sufficient to know that they all rely on a secret being transmitted again and again over a connection. So CSRF tokens are vulnerable to this if they are the same over multiple connections. If we have an always changing CSRF token this attack does not apply to it.

An obvious fix for this is to always generate new CSRF tokens. However this requires quite a bit of state management on the server or other trade-offs, therefore I don’t think it’s desirable. Rather a good concept would be to keep a single server-side secret, but put some randomness in so the token changes on every request.

The BREACH authors have the following brief recommendation (thanks to Ivan Ristic for pointing this out): “Masking secrets (effectively randomizing by XORing with a random secret per request)”.

I read this as having a real token and a random value and the CSRF token would look like random_value + XOR(random_value, real_token). The server could verify this by splitting up the token, XORing the first half with the second and then comparing that to the real token.

However I would like to add something: I would prefer if a token used for one form and action cannot be used for another action. In case there is any form of token exfiltration it seems reasonable to limit the utility of the token as much as possible.

My idea is therefore to use a cryptographic hash function instead of XOR and add a scope string. This could be something like “adduser”, “addblogpost” etc., anything that identifies the action.

The server would keep a secret token per session on the server side and the CSRF token would look like this: random_value + hash(random_value + secret_token + scope). The random value changes each time the token is sent.

I have created some simple PHP code to implement this (if there is sufficient interest I will learn how to turn this into a composer package). The usage is very simple, there is one function to create a token that takes a scope string as the only parameter and another to check a token that takes the public token and the scope and returns true or false.

As for the implementation details I am using 256 bit random values and secret tokens, which is excessively too much and should avoid any discussion about them being too short. For the hash I am using sha364, which is widely supported and not vulnerable to length extension attacks. I do not see any reason why length extension attacks would be relevant here, but still this feels safer. I believe the order of the hash inputs should not matter, but I have seen constructions where having

The CSRF token is Base64-encoded, which should work fine in HTML forms.

My question would be if people think this is a sane design or if they see room for improvement. Also as this is all relatively straightforward and obvious, I am almost sure I am not the first person to invent this, pointers welcome.

Now there is an elephant in the room I also need to discuss. Form tokens are the traditional way to prevent CSRF attacks, but in recent years browsers have introduced a new and completely different way of preventing CSRF attacks called SameSite Cookies. The long term plan is to enable them by default, which would likely make CSRF almost impossible. (These plans have been delayed due to Covid-19 and there will probably be some unfortunate compatibility trade-offs that are reason enough to still set the flag manually in a SameSite-by-default world.)

Now there is an elephant in the room I also need to discuss. Form tokens are the traditional way to prevent CSRF attacks, but in recent years browsers have introduced a new and completely different way of preventing CSRF attacks called SameSite Cookies. The long term plan is to enable them by default, which would likely make CSRF almost impossible. (These plans have been delayed due to Covid-19 and there will probably be some unfortunate compatibility trade-offs that are reason enough to still set the flag manually in a SameSite-by-default world.)SameSite Cookies have two flavors: Lax and Strict. When set to Lax, which is what I would recommend that every web application should do, POST requests sent from another host are sent without the Cookie. With Strict all requests, including GET requests, sent from another host are sent without the Cookie. I do not think this is a desirable setting in most cases, as this breaks many workflows and GET requests should not perform any actions anyway.

Now here is a question I have: Are SameSite cookies enough? Do we even need to worry about CSRF tokens any more or can we just skip them? Are there any scenarios where one can bypass SameSite Cookies, but not CSRF tokens?

One could of course say “Why not both?” and see this as a kind of defense in depth. It is a popular mode of thinking to always see more security mechanisms as better, but I do not agree with that reasoning. Security mechanisms introduce complexity and if you can do with less complexity you usually should. CSRF tokens always felt like an ugly solution to me, and I feel SameSite Cookies are a much cleaner way to solve this problem.

So are there situations where SameSite Cookies do not protect and we need tokens? The obvious one is old browsers that do not support SameSite Cookies, however they have been around for a while and if you are willing to not support really old and obscure browsers that should not matter.

A remaining problem I could think of is software that accepts action requests both as GET and POST variables (e. g. in PHP if one uses the $_REQUESTS variable instead of $_POST). These need to be avoided, but using GET for anything that performs actions in the application should not be done anyway. (SameSite=Strict does not really fix his, as GET requests can still plausibly come from links, e. g. on applications that support internal messaging.)

Also an edge case problem may be a transition period: If a web application removes CSRF tokens and starts using SameSite Cookies at the same time Users may still have old Cookies around without the flag. So a transition period at least as long as the Cookie lifetime should be used.

Furthermore there are bypasses for the SameSite-by-default Cookies as planned by browser vendors, but these do not apply when the web application sets the SameSite flag itself. (Essentially the SameSite-by-default Cookies are only SameSite after two minutes, so there is a small window for an attack after setting the Cookie.)

Considering all this if one carefully makes sure that actions can only be performed by POST requests, sets SameSite=Lax on all Cookies and plans a transition period one should be able to safely remove CSRF tokens. Anyone disagrees?

Image sources: Piqsels, Wikimedia Commons

Posted by Hanno Böck

in Cryptography, English, Security, Webdesign

at

21:54

| Comments (8)

| Trackbacks (0)

Friday, May 19. 2017

The Problem with OCSP Stapling and Must Staple and why Certificate Revocation is still broken

Update (2020-09-16): While three years old, people still find this blog post when looking for information about Stapling problems. For Apache the situation has improved considerably in the meantime: mod_md, which is part of recent apache releases, comes with a new stapling implementation which you can enable with the setting MDStapling on.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken

Well, here come the implementations. While a lot of protocols use TLS, the most common use case is the web and HTTPS. According to Netcraft statistics by far the biggest share of active sites on the Internet run on Apache (about 46%), followed by Nginx (about 20 %). It’s reasonable to say that if these technologies should provide a solution for revocation they should be usable with the major products in that area. On the server side this is only OCSP Stapling, as OCSP Must Staple only needs to be checked by the client.

What would you expect from a working OCSP Stapling implementation? It should try to avoid a situation where it’s unable to send out a valid OCSP response. Thus roughly what it should do is to fetch a valid OCSP response as soon as possible and cache it until it gets a new one or it expires. It should furthermore try to fetch a new OCSP response long before the old one expires (ideally several days). And it should never throw away a valid response unless it has a newer one. Google developer Ryan Sleevi wrote a detailed description of what a proper OCSP Stapling implementation could look like.

Apache does none of this.

If Apache tries to renew the OCSP response and gets an error from the OCSP server – e. g. because it’s currently malfunctioning – it will throw away the existing, still valid OCSP response and replace it with the error. It will then send out stapled OCSP errors. Which makes zero sense. Firefox will show an error if it sees this. This has been reported in 2014 and is still unfixed.

Now there’s an option in Apache to avoid this behavior: SSLStaplingReturnResponderErrors. It’s defaulting to on. If you switch it off you won’t get sane behavior (that is – use the still valid, cached response), instead Apache will disable Stapling for the time it gets errors from the OCSP server. That’s better than sending out errors, but it obviously makes using Must Staple a no go.

It gets even crazier. I have set this option, but this morning I still got complaints that Firefox users were seeing errors. That’s because in this case the OCSP server wasn’t sending out errors, it was completely unavailable. For that situation Apache has a feature that will fake a tryLater error to send out to the client. If you’re wondering how that makes any sense: It doesn’t. The “tryLater” error of OCSP isn’t useful at all in TLS, because you can’t try later during a handshake which only lasts seconds.

This is controlled by another option: SSLStaplingFakeTryLater. However if we read the documentation it says “Only effective if SSLStaplingReturnResponderErrors is also enabled.” So if we disabled SSLStapingReturnResponderErrors this shouldn’t matter, right? Well: The documentation is wrong.

There are more problems: Apache doesn’t get the OCSP responses on startup, it only fetches them during the handshake. This causes extra latency on the first connection and increases the risk of hitting a situation where you don’t have a valid OCSP response. Also cached OCSP responses don’t survive server restarts, they’re kept in an in-memory cache.

There’s currently no way to configure Apache to handle OCSP stapling in a reasonable way. Here’s the configuration I use, which will at least make sure that it won’t send out errors and cache the responses a bit longer than it does by default:

I’m less familiar with Nginx, but from what I hear it isn’t much better either. According to this blogpost it doesn’t fetch OCSP responses on startup and will send out the first TLS connections without stapling even if it’s enabled. Here’s a blog post that recommends to work around this by connecting to all configured hosts after the server has started.

To summarize: This is all a big mess. Both Apache and Nginx have OCSP Stapling implementations that are essentially broken. As long as you’re using either of those then enabling Must-Staple is a reliable way to shoot yourself in the foot and get into trouble. Don’t enable it if you plan to use Apache or Nginx.

Certificate revocation is broken. It has been broken since the invention of SSL and it’s still broken. OCSP Stapling and OCSP Must-Staple could fix it in theory. But that would require working and stable implementations in the most widely used server products.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken

Well, here come the implementations. While a lot of protocols use TLS, the most common use case is the web and HTTPS. According to Netcraft statistics by far the biggest share of active sites on the Internet run on Apache (about 46%), followed by Nginx (about 20 %). It’s reasonable to say that if these technologies should provide a solution for revocation they should be usable with the major products in that area. On the server side this is only OCSP Stapling, as OCSP Must Staple only needs to be checked by the client.

What would you expect from a working OCSP Stapling implementation? It should try to avoid a situation where it’s unable to send out a valid OCSP response. Thus roughly what it should do is to fetch a valid OCSP response as soon as possible and cache it until it gets a new one or it expires. It should furthermore try to fetch a new OCSP response long before the old one expires (ideally several days). And it should never throw away a valid response unless it has a newer one. Google developer Ryan Sleevi wrote a detailed description of what a proper OCSP Stapling implementation could look like.

Apache does none of this.

If Apache tries to renew the OCSP response and gets an error from the OCSP server – e. g. because it’s currently malfunctioning – it will throw away the existing, still valid OCSP response and replace it with the error. It will then send out stapled OCSP errors. Which makes zero sense. Firefox will show an error if it sees this. This has been reported in 2014 and is still unfixed.

Now there’s an option in Apache to avoid this behavior: SSLStaplingReturnResponderErrors. It’s defaulting to on. If you switch it off you won’t get sane behavior (that is – use the still valid, cached response), instead Apache will disable Stapling for the time it gets errors from the OCSP server. That’s better than sending out errors, but it obviously makes using Must Staple a no go.

It gets even crazier. I have set this option, but this morning I still got complaints that Firefox users were seeing errors. That’s because in this case the OCSP server wasn’t sending out errors, it was completely unavailable. For that situation Apache has a feature that will fake a tryLater error to send out to the client. If you’re wondering how that makes any sense: It doesn’t. The “tryLater” error of OCSP isn’t useful at all in TLS, because you can’t try later during a handshake which only lasts seconds.

This is controlled by another option: SSLStaplingFakeTryLater. However if we read the documentation it says “Only effective if SSLStaplingReturnResponderErrors is also enabled.” So if we disabled SSLStapingReturnResponderErrors this shouldn’t matter, right? Well: The documentation is wrong.

There are more problems: Apache doesn’t get the OCSP responses on startup, it only fetches them during the handshake. This causes extra latency on the first connection and increases the risk of hitting a situation where you don’t have a valid OCSP response. Also cached OCSP responses don’t survive server restarts, they’re kept in an in-memory cache.

There’s currently no way to configure Apache to handle OCSP stapling in a reasonable way. Here’s the configuration I use, which will at least make sure that it won’t send out errors and cache the responses a bit longer than it does by default:

SSLStaplingCache shmcb:/var/tmp/ocsp-stapling-cache/cache(128000000)

SSLUseStapling on

SSLStaplingResponderTimeout 2

SSLStaplingReturnResponderErrors off

SSLStaplingFakeTryLater off

SSLStaplingStandardCacheTimeout 86400I’m less familiar with Nginx, but from what I hear it isn’t much better either. According to this blogpost it doesn’t fetch OCSP responses on startup and will send out the first TLS connections without stapling even if it’s enabled. Here’s a blog post that recommends to work around this by connecting to all configured hosts after the server has started.

To summarize: This is all a big mess. Both Apache and Nginx have OCSP Stapling implementations that are essentially broken. As long as you’re using either of those then enabling Must-Staple is a reliable way to shoot yourself in the foot and get into trouble. Don’t enable it if you plan to use Apache or Nginx.

Certificate revocation is broken. It has been broken since the invention of SSL and it’s still broken. OCSP Stapling and OCSP Must-Staple could fix it in theory. But that would require working and stable implementations in the most widely used server products.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

23:25

| Comments (5)

| Trackback (1)

Defined tags for this entry: apache, certificates, cryptography, encryption, https, letsencrypt, nginx, ocsp, ocspstapling, revocation, ssl, tls

Friday, July 15. 2016

Insecure updates in Joomla before 3.6

In early April I reported security problems with the update process to the security contact of Joomla. While the issue has been fixed in Joomla 3.6, the communication process was far from ideal.

The issue itself is pretty simple: Up until recently Joomla fetched information about its updates over unencrypted and unauthenticated HTTP without any security measures.

The update process works in three steps. First of all the Joomla backend fetches a file list.xml from update.joomla.org that contains information about current versions. If a newer version than the one installed is found then the user gets a button that allows him to update Joomla. The file list.xml references an URL for each version with further information about the update called extension_sts.xml. Interestingly this file is fetched over HTTPS, while - in version 3.5 - the file list.xml is not. However this does not help, as the attacker can already intervene at the first step and serve a malicious list.xml that references whatever he wants. In extension_sts.xml there is a download URL for a zip file that contains the update.

Exploiting this for a Man-in-the-Middle-attacker is trivial: Requests to update.joomla.org need to be redirected to an attacker-controlled host. Then the attacker can place his own list.xml, which will reference his own extension_sts.xml, which will contain a link to a backdoored update. I have created a trivial proof of concept for this (just place that on the HTTP host that update.joomla.org gets redirected to).

I think it should be obvious that software updates are a security sensitive area and need to be protected. Using HTTPS is one way of doing that. Using any kind of cryptographic signature system is another way. Unfortunately it seems common web applications are only slowly learning that. Drupal only switched to HTTPS updates earlier this year. It's probably worth checking other web applications that have integrated update processes if they are secure (Wordpress is secure fwiw).

Now here's how the Joomla developers handled this issue: I contacted Joomla via their webpage on April 6th. Their webpage form didn't have a way to attach files, so I offered them to contact me via email so I could send them the proof of concept. I got a reply to that shortly after asking for it. This was the only communication from their side. Around two months later, on June 14th, I asked about the status of this issue and warned that I would soon publish it if I don't get a reaction. I never got any reply.

In the meantime Joomla had published beta versions of the then upcoming version 3.6. I checked that and noted that they have changed the update url from http://update.joomla.org/ to https://update.joomla.org/. So while they weren't communicating with me it seemed a fix was on its way. I then found that there was a pull request and a Github discussion that started even before I first contacted them. Joomla 3.6 was released recently, therefore the issue is fixed. However the release announcement doesn't mention it.

So all in all I contacted them about a security issue they were already in the process of fixing. The problem itself is therefore solved. But the lack of communication about the issue certainly doesn't cast a good light on Joomla's security process.

The issue itself is pretty simple: Up until recently Joomla fetched information about its updates over unencrypted and unauthenticated HTTP without any security measures.

The update process works in three steps. First of all the Joomla backend fetches a file list.xml from update.joomla.org that contains information about current versions. If a newer version than the one installed is found then the user gets a button that allows him to update Joomla. The file list.xml references an URL for each version with further information about the update called extension_sts.xml. Interestingly this file is fetched over HTTPS, while - in version 3.5 - the file list.xml is not. However this does not help, as the attacker can already intervene at the first step and serve a malicious list.xml that references whatever he wants. In extension_sts.xml there is a download URL for a zip file that contains the update.

Exploiting this for a Man-in-the-Middle-attacker is trivial: Requests to update.joomla.org need to be redirected to an attacker-controlled host. Then the attacker can place his own list.xml, which will reference his own extension_sts.xml, which will contain a link to a backdoored update. I have created a trivial proof of concept for this (just place that on the HTTP host that update.joomla.org gets redirected to).

I think it should be obvious that software updates are a security sensitive area and need to be protected. Using HTTPS is one way of doing that. Using any kind of cryptographic signature system is another way. Unfortunately it seems common web applications are only slowly learning that. Drupal only switched to HTTPS updates earlier this year. It's probably worth checking other web applications that have integrated update processes if they are secure (Wordpress is secure fwiw).

Now here's how the Joomla developers handled this issue: I contacted Joomla via their webpage on April 6th. Their webpage form didn't have a way to attach files, so I offered them to contact me via email so I could send them the proof of concept. I got a reply to that shortly after asking for it. This was the only communication from their side. Around two months later, on June 14th, I asked about the status of this issue and warned that I would soon publish it if I don't get a reaction. I never got any reply.

In the meantime Joomla had published beta versions of the then upcoming version 3.6. I checked that and noted that they have changed the update url from http://update.joomla.org/ to https://update.joomla.org/. So while they weren't communicating with me it seemed a fix was on its way. I then found that there was a pull request and a Github discussion that started even before I first contacted them. Joomla 3.6 was released recently, therefore the issue is fixed. However the release announcement doesn't mention it.

So all in all I contacted them about a security issue they were already in the process of fixing. The problem itself is therefore solved. But the lack of communication about the issue certainly doesn't cast a good light on Joomla's security process.

Friday, December 11. 2015

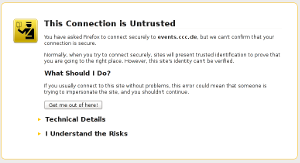

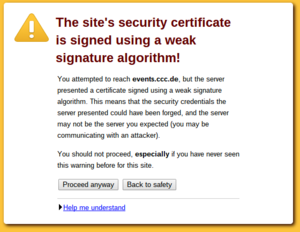

What got us into the SHA1 deprecation mess?

Important notice: After I published this text Adam Langley pointed out that a major assumption is wrong: Android 2.2 actually has no problems with SHA256-signed certificates. I checked this myself and in an emulated Android 2.2 instance I was able to connect to a site with a SHA256-signed certificate. I apologize for that error, I trusted the Cloudflare blog post on that. This whole text was written with that assumption in mind, so it's hard to change without rewriting it from scratch. I have marked the parts that are likely to be questioned. Most of it is still true and Android 2 has a problematic TLS stack (no SNI), but the specific claim regarding SHA256-certificates seems wrong.

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.

SHA1 broken since 2005

The SHA1 algorithm is a cryptographic hash algorithm and it has been know for quite some time that its security isn't great. In 2005 the Chinese researcher Wang Xiaoyun published an attack that would allow to create a collision for SHA1. The attack wasn't practically tested, because it is quite expensive to do so, but it was clear that a financially powerful adversary would be able to perform such an attack. A year before the even older hash function MD5 was broken practically, in 2008 this led to a practical attack against the issuance of TLS certificates. In the past years browsers pushed for the deprecation of SHA1 certificates and it was agreed that starting January 2016 no more certificates signed with SHA1 must be issued, instead the stronger algorithm SHA256 should be used. Many felt this was already far too late, given that it's been ten years since we knew that SHA1 is broken.

A few weeks before the SHA1 deadline Cloudflare and Facebook now question this deprecation plan. They have some strong arguments. According to Cloudflare's numbers there is still a significant number of users that use browsers without support for SHA256-certificates. And those users are primarily in relatively poor, repressive or war-ridden countries. The top three on the list are China, Cameroon and Yemen. Their argument, which is hard to argue with, is that cutting of SHA1 support will primarily affect the poorest users.

Cloudflare and Facebook propose a new mechanism to get legacy validated certificates. These certificates should only be issued to site operators that will use a technology to separate users based on their TLS handshake and only show the SHA1 certificate to those that use an older browser. Facebook already published the code to do that, Cloudflare also announced that they will release the code of their implementation. Right now it's still possible to get SHA1 certificates, therefore those companies could just register them now and use them for three years to come. Asking for this legacy validation process indicates that Cloudflare and Facebook don't see this as a short-term workaround, instead they seem to expect that this will be a solution they use for years to come, without any decided end date.

It's a tough question whether or not this is a good idea. But I want to ask a different question: Why do we have this problem in the first place, why is it hard to fix and what can we do to prevent similar things from happening in the future? One thing is remarkable about this problem: It's a software problem. In theory software can be patched and the solution to a software problem is to update the software. So why can't we just provide updates and get rid of these legacy problems?

Windows XP and Android Froyo

According to Cloudflare there are two main reason why so many users can't use sites with SHA256 certificates: Windows XP and old versions of Android (SHA256 support was added in Android 2.3, so this affects mostly Android 2.2 aka Froyo). We all know that Windows XP shouldn't be used any more, that its support has ended in 2014. But that clearly clashes with realities. People continue using old systems and Windows XP is still alive in many countries, especially in China.

But I'm inclined to say that Windows XP is probably the smaller problem here. With Service Pack 3 Windows XP introduced support for SHA256 certificates. By using an alternative browser (Firefox is still supported on Windows XP if you install SP3) it is even possible to have a relatively safe browsing experience. I'm not saying that I recommend it, but given the circumstances advising people how to update their machines and to install an alternative browser can party provide a way to reduce the reliance on broken algorithms.

The Updatability Gap

But the problem with Android is much, much worse, and I think this brings us to probably the biggest problem in IT security we have today. For years one of the most important messages to users in IT security was: Keep your software up to date. But at the same time the industry has created new software ecosystems where very often that just isn't an option.

In the Android case Google says that it's the responsibility of device vendors and carriers to deliver security updates. The dismal reality is that in most cases they just don't do that. But even if device vendors are willing to provide updates it usually only happens for a very short time frame. Google only supports the latest two Android major versions. For them Android 2.2 is ancient history, but for a significant portion of users it is still the operating system they use.

What we have here is a huge gap between the time frame devices get security updates and the time frame users use these devices. And pretty much everything tells us that the vendors in the Internet of Things ignore these problems even more and the updatability gap will become larger. Many became accustomed to the idea that phones get only used for a year, but it's hard to imagine how that's going to work for a fridge. What's worse: Whether you look at phones or other devices, they often actively try to prevent users from replacing the software on their own.

This is a hard problem to tackle, but it's probably the biggest problem IT security is facing in the upcoming years. We need to get a working concept for updates – a concept that matches the real world use of devices.

Substandard TLS implementations

But there's another part of the SHA1 deprecation story. As I wrote above since 2005 it was clear that SHA1 needs to go away. That was three years before Android was even published. But in 2010 Android still wasn't capable of supporting SHA256 certificates. Google has to take a large part of the blame here. While these days they are at the forefront of deploying high quality and up to date TLS stacks, they shipped a substandard and outdated TLS implementation in Android 2. (Another problem is that all Android 2 versions don't support Server Name Indication, a technology that allows to use different certificates for different hosts on one IP address.)

This is not the first such problem we are facing. With the POODLE vulnerability it became clear that the old SSL version 3 is broken beyond repair and it's impossible to use it safely. The only option was to deprecate it. However doing so was painful, because a lot of devices out there didn't support better protocols. The successor protocol TLS 1.0 (SSL/TLS versions are confusing, I know) was released in 1999. But the problem wasn't that people were using devices older than 1999. The problem was that many vendors shipped devices and software that only supported SSLv3 in recent years.

One example was Windows Phone 7. In 2011 this was the operating system on Microsoft's and Nokia's flagship product, the Lumia 800. Its mail client is unable to connect to servers not supporting SSLv3. It is just inexcusable that in 2011 Microsoft shipped a product which only supported a protocol that was deprecated 12 years earlier. It's even more inexcusable that they refused to fix it later, because it only came to light when Windows Phone 7 was already out of support.

The takeaway from this is that sloppiness from the past can bite you many years later. And this is what we're seeing with Android 2.2 now.

But you might think given these experiences this has stopped today. It hasn't. The largest deployer of substandard TLS implementations these days is Apple. Up until recently (before El Capitan) Safari on OS X didn't support any authenticated encryption cipher suites with AES-GCM and relied purely on the CBC block mode. The CBC cipher suites are a hot candidate for the next deprecation plan, because 2013 the Lucky 13 attack has shown that they are really hard to implement safely. The situation for applications other than the browser (Mail etc.) is even worse on Apple devices. They only support the long deprecated TLS 1.0 protocol – and that's still the case on their latest systems.

There is widespread agreement in the TLS and cryptography community that the only really safe way to use TLS these days is TLS 1.2 with a cipher suite using forward secrecy and authenticated encryption (AES-GCM is the only standardized option for that right now, however ChaCha20/Poly1304 will come soon).

Conclusions

For the specific case of the SHA1 deprecation I would propose the following: Cloudflare and Facebook should go ahead with their handshake workaround for the next years, as long as their current certificates are valid. But this time should be used to find solutions. Reach out to those users visiting your sites and try to understand what could be done to fix the situation. For the Windows XP users this is relatively easy – help them updating their machines and preferably install another browser, most likely that'd be Firefox. For Android there is probably no easy solution, but we have some of the largest Internet companies involved here. Please seriously ask the question: Is it possible to retrofit Android 2.2 with a reasonable TLS stack? What ways are there to get that onto the devices? Is it possible to install a browser app with its own TLS stack on at least some of those devices? This probably doesn't work in most cases, because on many cheap phones there just isn't enough space to install large apps. In the long term I hope that the tech community will start tackling the updatability problem.

In the TLS space I think we need to make sure that no more substandard TLS deployments get shipped today. Point out the vendors that do so and pressure them to stop. It wasn't acceptable in 2010 to ship TLS with long-known problems and it isn't acceptable today.

Image source: Wikimedia Commons

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.

This week both Cloudflare and Facebook announced that they want to delay the deprecation of certificates signed with the SHA1 algorithm. This spurred some hot debates whether or not this is a good idea – with two seemingly good causes: On the one side people want to improve security, on the other side access to webpages should remain possible for users of old devices, many of them living in poor countries. I want to give some background on the issue and ask why that unfortunate situation happened in the first place, because I think it highlights some of the most important challenges in the TLS space and more generally in IT security.SHA1 broken since 2005

The SHA1 algorithm is a cryptographic hash algorithm and it has been know for quite some time that its security isn't great. In 2005 the Chinese researcher Wang Xiaoyun published an attack that would allow to create a collision for SHA1. The attack wasn't practically tested, because it is quite expensive to do so, but it was clear that a financially powerful adversary would be able to perform such an attack. A year before the even older hash function MD5 was broken practically, in 2008 this led to a practical attack against the issuance of TLS certificates. In the past years browsers pushed for the deprecation of SHA1 certificates and it was agreed that starting January 2016 no more certificates signed with SHA1 must be issued, instead the stronger algorithm SHA256 should be used. Many felt this was already far too late, given that it's been ten years since we knew that SHA1 is broken.

A few weeks before the SHA1 deadline Cloudflare and Facebook now question this deprecation plan. They have some strong arguments. According to Cloudflare's numbers there is still a significant number of users that use browsers without support for SHA256-certificates. And those users are primarily in relatively poor, repressive or war-ridden countries. The top three on the list are China, Cameroon and Yemen. Their argument, which is hard to argue with, is that cutting of SHA1 support will primarily affect the poorest users.

Cloudflare and Facebook propose a new mechanism to get legacy validated certificates. These certificates should only be issued to site operators that will use a technology to separate users based on their TLS handshake and only show the SHA1 certificate to those that use an older browser. Facebook already published the code to do that, Cloudflare also announced that they will release the code of their implementation. Right now it's still possible to get SHA1 certificates, therefore those companies could just register them now and use them for three years to come. Asking for this legacy validation process indicates that Cloudflare and Facebook don't see this as a short-term workaround, instead they seem to expect that this will be a solution they use for years to come, without any decided end date.

It's a tough question whether or not this is a good idea. But I want to ask a different question: Why do we have this problem in the first place, why is it hard to fix and what can we do to prevent similar things from happening in the future? One thing is remarkable about this problem: It's a software problem. In theory software can be patched and the solution to a software problem is to update the software. So why can't we just provide updates and get rid of these legacy problems?

Windows XP and Android Froyo

According to Cloudflare there are two main reason why so many users can't use sites with SHA256 certificates: Windows XP and old versions of Android (SHA256 support was added in Android 2.3, so this affects mostly Android 2.2 aka Froyo). We all know that Windows XP shouldn't be used any more, that its support has ended in 2014. But that clearly clashes with realities. People continue using old systems and Windows XP is still alive in many countries, especially in China.

But I'm inclined to say that Windows XP is probably the smaller problem here. With Service Pack 3 Windows XP introduced support for SHA256 certificates. By using an alternative browser (Firefox is still supported on Windows XP if you install SP3) it is even possible to have a relatively safe browsing experience. I'm not saying that I recommend it, but given the circumstances advising people how to update their machines and to install an alternative browser can party provide a way to reduce the reliance on broken algorithms.

The Updatability Gap

But the problem with Android is much, much worse, and I think this brings us to probably the biggest problem in IT security we have today. For years one of the most important messages to users in IT security was: Keep your software up to date. But at the same time the industry has created new software ecosystems where very often that just isn't an option.

In the Android case Google says that it's the responsibility of device vendors and carriers to deliver security updates. The dismal reality is that in most cases they just don't do that. But even if device vendors are willing to provide updates it usually only happens for a very short time frame. Google only supports the latest two Android major versions. For them Android 2.2 is ancient history, but for a significant portion of users it is still the operating system they use.

What we have here is a huge gap between the time frame devices get security updates and the time frame users use these devices. And pretty much everything tells us that the vendors in the Internet of Things ignore these problems even more and the updatability gap will become larger. Many became accustomed to the idea that phones get only used for a year, but it's hard to imagine how that's going to work for a fridge. What's worse: Whether you look at phones or other devices, they often actively try to prevent users from replacing the software on their own.

This is a hard problem to tackle, but it's probably the biggest problem IT security is facing in the upcoming years. We need to get a working concept for updates – a concept that matches the real world use of devices.

Substandard TLS implementations

But there's another part of the SHA1 deprecation story. As I wrote above since 2005 it was clear that SHA1 needs to go away. That was three years before Android was even published. But in 2010 Android still wasn't capable of supporting SHA256 certificates. Google has to take a large part of the blame here. While these days they are at the forefront of deploying high quality and up to date TLS stacks, they shipped a substandard and outdated TLS implementation in Android 2. (Another problem is that all Android 2 versions don't support Server Name Indication, a technology that allows to use different certificates for different hosts on one IP address.)

This is not the first such problem we are facing. With the POODLE vulnerability it became clear that the old SSL version 3 is broken beyond repair and it's impossible to use it safely. The only option was to deprecate it. However doing so was painful, because a lot of devices out there didn't support better protocols. The successor protocol TLS 1.0 (SSL/TLS versions are confusing, I know) was released in 1999. But the problem wasn't that people were using devices older than 1999. The problem was that many vendors shipped devices and software that only supported SSLv3 in recent years.

One example was Windows Phone 7. In 2011 this was the operating system on Microsoft's and Nokia's flagship product, the Lumia 800. Its mail client is unable to connect to servers not supporting SSLv3. It is just inexcusable that in 2011 Microsoft shipped a product which only supported a protocol that was deprecated 12 years earlier. It's even more inexcusable that they refused to fix it later, because it only came to light when Windows Phone 7 was already out of support.

The takeaway from this is that sloppiness from the past can bite you many years later. And this is what we're seeing with Android 2.2 now.

But you might think given these experiences this has stopped today. It hasn't. The largest deployer of substandard TLS implementations these days is Apple. Up until recently (before El Capitan) Safari on OS X didn't support any authenticated encryption cipher suites with AES-GCM and relied purely on the CBC block mode. The CBC cipher suites are a hot candidate for the next deprecation plan, because 2013 the Lucky 13 attack has shown that they are really hard to implement safely. The situation for applications other than the browser (Mail etc.) is even worse on Apple devices. They only support the long deprecated TLS 1.0 protocol – and that's still the case on their latest systems.

There is widespread agreement in the TLS and cryptography community that the only really safe way to use TLS these days is TLS 1.2 with a cipher suite using forward secrecy and authenticated encryption (AES-GCM is the only standardized option for that right now, however ChaCha20/Poly1304 will come soon).

Conclusions

For the specific case of the SHA1 deprecation I would propose the following: Cloudflare and Facebook should go ahead with their handshake workaround for the next years, as long as their current certificates are valid. But this time should be used to find solutions. Reach out to those users visiting your sites and try to understand what could be done to fix the situation. For the Windows XP users this is relatively easy – help them updating their machines and preferably install another browser, most likely that'd be Firefox. For Android there is probably no easy solution, but we have some of the largest Internet companies involved here. Please seriously ask the question: Is it possible to retrofit Android 2.2 with a reasonable TLS stack? What ways are there to get that onto the devices? Is it possible to install a browser app with its own TLS stack on at least some of those devices? This probably doesn't work in most cases, because on many cheap phones there just isn't enough space to install large apps. In the long term I hope that the tech community will start tackling the updatability problem.

In the TLS space I think we need to make sure that no more substandard TLS deployments get shipped today. Point out the vendors that do so and pressure them to stop. It wasn't acceptable in 2010 to ship TLS with long-known problems and it isn't acceptable today.

Image source: Wikimedia Commons

Posted by Hanno Böck

in Cryptography, English, Security

at

15:24

| Comment (1)

| Trackbacks (0)

Defined tags for this entry: android, certificate, cloudflare, cryptography, facebook, hash, https, security, sha1, sha256, ssl, tls, updates, windowsxp

Monday, November 23. 2015

Superfish 2.0: Dangerous Certificate on Dell Laptops breaks encrypted HTTPS Connections

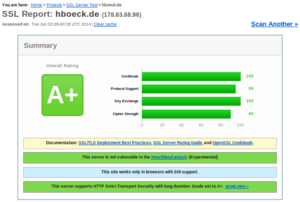

tl;dr Dell laptops come preinstalled with a root certificate and a corresponding private key. That completely compromises the security of encrypted HTTPS connections. I've provided an online check, affected users should delete the certificate.

tl;dr Dell laptops come preinstalled with a root certificate and a corresponding private key. That completely compromises the security of encrypted HTTPS connections. I've provided an online check, affected users should delete the certificate.It seems that Dell hasn't learned anything from the Superfish-scandal earlier this year: Laptops from the company come with a preinstalled root certificate that will be accepted by browsers. The private key is also installed on the system and has been published now. Therefore attackers can use Man in the Middle attacks against Dell users to show them manipulated HTTPS webpages or read their encrypted data.

The certificate, which is installed in the system's certificate store under the name "eDellRoot", gets installed by a software called Dell Foundation Services. This software is still available on Dell's webpage. According to the somewhat unclear description from Dell it is used to provide "foundational services facilitating customer serviceability, messaging and support functions".

The private key of this certificate is marked as non-exportable in the Windows certificate store. However this provides no real protection, there are Tools to export such non-exportable certificate keys. A user of the plattform Reddit has posted the Key there.

For users of the affected Laptops this is a severe security risk. Every attacker can use this root certificate to create valid certificates for arbitrary web pages. Even HTTP Public Key Pinning (HPKP) does not protect against such attacks, because browser vendors allow locally installed certificates to override the key pinning protection. This is a compromise in the implementation that allows the operation of so-called TLS interception proxies.

I was made aware of this issue a while ago by Kristof Mattei. We asked Dell for a statement three weeks ago and didn't get any answer.

It is currently unclear which purpose this certificate served. However it seems unliklely that it was placed there deliberately for surveillance purposes. In that case Dell wouldn't have installed the private key on the system.

Affected are only users that use browsers or other applications that use the system's certificate store. Among the common Windows browsers this affects the Internet Explorer, Edge and Chrome. Not affected are Firefox-users, Mozilla's browser has its own certificate store.

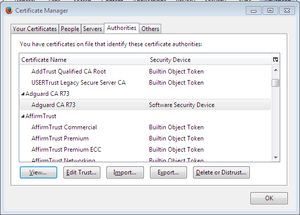

Users of Dell laptops can check if they are affected with an online check tool. Affected users should immediately remove the certificate in the Windows certificate manager. The certificate manager can be started by clicking "Start" and typing in "certmgr.msc". The "eDellRoot" certificate can be found under "Trusted Root Certificate Authorities". You also need to remove the file Dell.Foundation.Agent.Plugins.eDell.dll, Dell has now posted an instruction and a removal tool.

This incident is almost identical with the Superfish-incident. Earlier this year it became public that Lenovo had preinstalled a software called Superfish on its Laptops. Superfish intercepts HTTPS-connections to inject ads. It used a root certificate for that and the corresponding private key was part of the software. After that incident several other programs with the same vulnerability were identified, they all used a software module called Komodia. Similar vulnerabilities were found in other software products, for example in Privdog and in the ad blocker Adguard.

This article is mostly a translation of a German article I wrote for Golem.de.

Image source and license: Wistula / Wikimedia Commons, Creative Commons by 3.0

Update (2015-11-24): Second Dell root certificate DSDTestProvider

I just found out that there is a second root certificate installed with some Dell software that causes exactly the same issue. It is named DSDTestProvider and comes with a software called Dell System Detect. Unlike the Dell Foundations Services this one does not need a Dell computer to be installed, therefore it was trivial to extract the certificate and the private key. My online test now checks both certificates. This new certificate is not covered by Dell's removal instructions yet.

Dell has issued an official statement on their blog and in the comment section a user mentioned this DSDTestProvider certificate. After googling what DSD might be I quickly found it. There have been concerns about the security of Dell System Detect before, Malwarebytes has an article about it from April mentioning that it was vulnerable to a remote code execution vulnerability.

Update (2015-11-26): Service tag information disclosure

Another unrelated issue on Dell PCs was discovered in a tool called Dell Foundation Services. It allows webpages to read an unique service tag. There's also an online check.

Posted by Hanno Böck

in Cryptography, English, Security

at

17:39

| Comments (7)

| Trackbacks (0)

Defined tags for this entry: browser, certificate, cryptography, dell, edellroot, encryption, https, maninthemiddle, security, ssl, superfish, tls, vulnerability

Thursday, August 13. 2015

More TLS Man-in-the-Middle failures - Adguard, Privdog again and ProtocolFilters.dll

In February the discovery of a software called Superfish caused widespread attention. Superfish caused a severe security vulnerability by intercepting HTTPS connections with a Man-in-the-Middle-certificate. The certificate and the corresponding private key was shared amongst all installations.

In February the discovery of a software called Superfish caused widespread attention. Superfish caused a severe security vulnerability by intercepting HTTPS connections with a Man-in-the-Middle-certificate. The certificate and the corresponding private key was shared amongst all installations.The use of Man-in-the-Middle-proxies for traffic interception is a widespread method, an application installs a root certificate into the browser and later intercepts connections by creating signed certificates for webpages on the fly. It quickly became clear that Superfish was only the tip of the iceberg. The underlying software module Komodia was used in a whole range of applications all suffering from the same bug. Later another software named Privdog was found that also intercepted HTTPS traffic and I published a blog post explaining that it was broken in a different way: It completely failed to do any certificate verification on its connections.

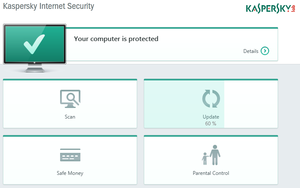

In a later blogpost I analyzed several Antivirus applications that also intercept HTTPS traffic. They were not as broken as Superfish or Privdog, but all of them decreased the security of the TLS encryption in one way or another. The most severe issue was that Kaspersky was at that point still vulnerable to the FREAK bug, more than a month after it was discovered. In a comment to that blogpost I was asked about a software called Adguard. I have to apologize that it took me so long to write this up.

Different certificate, same key

The first thing I did was to install Adguard two times in different VMs and look at the root certificate that got installed into the browser. The fingerprint of the certificates was different. However a closer look revealed something interesting: The RSA modulus was the same. It turned out that Adguard created a new root certificate with a changing serial number for every installation, but it didn't generate a new key. Therefore it is vulnerable to the same attacks as Superfish.

I reported this issue to Adguard. Adguard has fixed this issue, however they still intercept HTTPS traffic.

I learned that Adguard did not always use the same key, instead it chose one out of ten different keys based on the CPU. All ten keys could easily be extracted from a file called ProtocolFilters.dll that was shipped with Adguard. Older versions of Adguard only used one key shared amongst all installations. There also was a very outdated copy of the nss library. It suffers from various vulnerabilities, however it seems they are not exploitable. The library is not used for TLS connections, its only job is to install certificates into the Firefox root store.

Meet Privdog again

The outdated nss version gave me a hint, because I had seen this before: In Privdog. I had spend some time trying to find out if Privdog would be vulnerable to known nss issues (which had the positive side effect that Filippo created proof of concept code for the BERserk vulnerability). What I didn't notice back then was the shared key issue. Privdog also used the same key amongst different installations. So it turns out Privdog was completely broken in two different ways: By sharing the private key amongst installations and by not verifying certificates.

The latest version of Privdog no longer intercepts HTTPS traffic, it works as a browser plugin now. I don't know whether this vulnerability was still present after the initial fix caused by my original blog post.

Now what is this ProtocolFilters.dll? It is a commercial software module that is supposed to be used along with a product called Netfilter SDK. I wondered where else this would be found and if we would have another widely used software module like Komodia.

ProtocolFilters.dll is mentioned a lot in the web, mostly in the context of Potentially Unwanted Applications, also called Crapware. That means software that is either preinstalled or that gets bundled with installers from other software and is often installed without users consent or by tricking the user into clicking some "ok" button without knowing that he or she agrees to install another software. Unfortunately I was unable to get my hands on any other software using it.

Lots of "Potentially Unwanted Applications" use ProtocolFilters.dll

Software names that I found that supposedly include ProtocolFilters.dll: Coupoon, CashReminder, SavingsDownloader, Scorpion Saver, SavingsbullFilter, BRApp, NCupons, Nurjax, Couponarific, delshark, rrsavings, triosir, screentk. If anyone has any of them or any other piece of software bundling ProtocolFilters.dll I'd be interested in receiving a copy.

I'm publishing all Adguard keys and the Privdog key together with example certificates here. I also created a trivial script that can be used to extract keys from ProtocolFilters.dll (or other binary files that include TLS private keys in their binary form). It looks for anything that could be a private key by its initial bytes and then calls OpenSSL to try to decode it. If OpenSSL succeeds it will dump the key.

Finally an announcement for visitors of the Chaos Communication Camp: I will give a talk about TLS interception issues and the whole story of Superfish, Privdog and friends on Sunday.

Update: Due to the storm the talk was delayed. It will happen on Monday at 12:30 in Track South.

Posted by Hanno Böck

in Cryptography, English, Security

at

00:44

| Comments (4)

| Trackback (1)

Defined tags for this entry: adguard, https, komodia, maninthemiddle, netfiltersdk, privdog, protocolfilters, security, superfish, tls, vulnerability

Sunday, April 26. 2015

How Kaspersky makes you vulnerable to the FREAK attack and other ways Antivirus software lowers your HTTPS security

Lately a lot of attention has been payed to software like Superfish and Privdog that intercepts TLS connections to be able to manipulate HTTPS traffic. These programs had severe (technically different) vulnerabilities that allowed attacks on HTTPS connections.

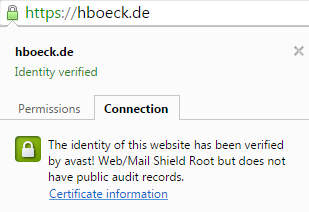

Lately a lot of attention has been payed to software like Superfish and Privdog that intercepts TLS connections to be able to manipulate HTTPS traffic. These programs had severe (technically different) vulnerabilities that allowed attacks on HTTPS connections.What these tools do is a widespread method. They install a root certificate into the user's browser and then they perform a so-called Man in the Middle attack. They present the user a certificate generated on the fly and manage the connection to HTTPS servers themselves. Superfish and Privdog did this in an obviously wrong way, Superfish by using the same root certificate on all installations and Privdog by just accepting every invalid certificate from web pages. What about other software that also does MitM interception of HTTPS traffic?

Antivirus software intercepts your HTTPS traffic

Many Antivirus applications and other security products use similar techniques to intercept HTTPS traffic. I had a closer look at three of them: Avast, Kaspersky and ESET. Avast enables TLS interception by default. By default Kaspersky intercepts connections to certain web pages (e. g. banking), there is an option to enable interception by default. In ESET TLS interception is generally disabled by default and can be enabled with an option.

When a security product intercepts HTTPS traffic it is itself responsible to create a TLS connection and check the certificate of a web page. It has to do what otherwise a browser would do. There has been a lot of debate and progress in the way TLS is done in the past years. A number of vulnerabilities in TLS (upon them BEAST, CRIME, Lucky Thirteen, FREAK and others) allowed to learn much more how to do TLS in a secure way. Also, problems with certificate authorities that issued malicious certificates (Diginotar, Comodo, Türktrust and others) led to the development of mitigation technologies like HTTP Public Key Pinning (HPKP) and Certificate Transparency to strengthen the security of Certificate Authorities. Modern browsers protect users much better from various threats than browsers used several years ago.

You may think: "Of course security products like Antivirus applications are fully aware of these developments and do TLS and certificate validation in the best way possible. After all security is their business, so they have to get it right." Unfortunately that's only what's happening in some fantasy IT security world that only exists in the minds of people that listened to industry PR too much. The real world is a bit different: All Antivirus applications I checked lower the security of TLS connections in one way or another.

Disabling of HTTP Public Key Pinning

Each and every TLS intercepting application I tested breaks HTTP Public Key Pinning (HPKP). It is a technology that a lot of people in the IT security community are pretty excited about: It allows a web page to pin public keys of certificates in a browser. On subsequent visits the browser will only accept certificates with these keys. It is a very effective protection against malicious or hacked certificate authorities issuing rogue certificates.

Browsers made a compromise when introducing HPKP. They won't enable the feature for manually installed certificates. The reason for that is simple (although I don't like it): If they hadn't done that they would've broken all TLS interception software like these Antivirus applications. But the applications could do the HPKP checking themselves. They just don't do it.

Kaspersky vulnerable to FREAK and CRIME

Kaspersky vulnerable to FREAK and CRIMEHaving a look at Kaspersky, I saw that it is vulnerable to the FREAK attack, a vulnerability in several TLS libraries that was found recently. Even worse: It seems this issue has been reported publicly in the Kaspersky Forums more than a month ago and it is not fixed yet. Please remember: Kaspersky enables the HTTPS interception by default for sites it considers as especially sensitive, for example banking web pages. Doing that with a known security issue is extremely irresponsible.

I also found a number of other issues. ESET doesn't support TLS 1.2 and therefore uses a less secure encryption algorithm. Avast and ESET don't support OCSP stapling. Kaspersky enables the insecure TLS compression feature that will make a user vulnerable to the CRIME attack. Both Avast and Kaspersky accept nonsensical parameters for Diffie Hellman key exchanges with a size of 8 bit. Avast is especially interesting because it bundles the Google Chrome browser. It installs a browser with advanced HTTPS features and lowers its security right away.

These TLS features are all things that current versions of Chrome and Firefox get right. If you use them in combination with one of these Antivirus applications you lower the security of HTTPS connections.

There's one more interesting thing: Both Kaspersky and Avast don't intercept traffic when Extended Validation (EV) certificates are used. Extended Validation certificates are the ones that show you a green bar in the address line of the browser with the company name. The reason why they do so is obvious: Using the interception certificate would remove the green bar which users might notice and find worrying. The message the Antivirus companies are sending seems clear: If you want to deliver malware from a web page you should buy an Extended Validation certificate.

Everyone gets HTTPS interception wrong - just don't do it

So what do we make out of this? A lot of software products intercept HTTPS traffic (antiviruses, adware, youth protection filters, ...), many of them promise more security and everyone gets it wrong.

I think these technologies are a misguided approach. The problem is not that they make mistakes in implementing these technologies, I think the idea is wrong from the start. Man in the Middle used to be a description of an attack technique. It seems strange that it turned into something people consider a legitimate security technology. Filtering should happen on the endpoint or not at all. Browsers do a lot these days to make your HTTPS connections more secure. Please don't mess with that.