Friday, January 17. 2025

Private Keys in the Fortigate Leak

What has not been widely recognized is that this leak also contains TLS and SSH private keys. As I am developing badkeys, a tool to identify insecure and compromised keys, this caught my attention. (The following analysis is based on an incomplete subset of the leak. I may update the post if I get access to more complete information.)

The leaked configurations contain keys looking like this (not an actual key from the leak):

set private-key "-----BEGIN ENCRYPTED PRIVATE KEY----- MIGjMF8GCSqGSIb3DQEFDTBSMDEGCSqGSIb3DQEFDDAkBBC5oRB/b9iViG5YoFmw 03T1AgIIADAMBggqhkiG9w0CCQUAMB0GCWCGSAFlAwQBKgQQPsMiXQoINAe2uBZX cbB1MARAXz1LwSJhElDvazEtfywe9pLSdCG9+A0G6CMk2Lp5eR954OjScEQY6Zqe 9J3V/fCQeDanrAE+/dpCefjAD4LhGg== -----END ENCRYPTED PRIVATE KEY-----"They also include corresponding certificates and keys in OpenSSH format. As you can see, these private keys are encrypted. However, above those keys, we can find the encryption password. The password is also encrypted, looking like this:

set password ENC SGFja1RoZVBsYW5ldCF[...]UA==A quick search for the encryption method turned up a script containing code to decrypt these passwords. The encryption key is static and publicly known.

The password line contains a Base64 string that decodes to 148 bytes. The first four bytes, padded with 12 zero bytes, are the initialization vector. The remaining bytes are the encrypted payload. The encryption uses AES-128 in CBC mode. The decrypted passwords appear to be mostly hex numbers and are padded with zero bytes - and sometimes other characters. (I am unaware of their meaning.)

In case I lost you here with technical details, the important takeaway is that in almost all cases, it is possible to decrypt the private key. (I may share a tool to extract the keys at a later point in time.)

The use of a static encryption key is a known vulnerability, tracked as CVE-2019-6693. According to Fortinet's advisory from 2020, this was "fixed" by introducing a setting that allows to configure a custom password.

"Fixing" default passwords by providing and documenting an option to change the password is something I have strong opinions about. It does not work (see also).

One result of this incident is that it gives us some real-world data on how many people will actually change their password if the option is given to them and documented in a security advisory. 99,5% of the keys could be decrypted with the default password. (Consider this as a lower bound and not an exact number. The password decryption may be flaky due to the unclear padding mechanism.)

Overall, there were around 100,000 private keys in PKCS format and 60,000 in OpenSSH format. Most of the corresponding certificates were self-signed, but a few thousand were issued by publicly trusted web certificate authorities, most of them expired. However, the data included keys for 84 WebPKI certificates that were neither expired nor revoked as of this morning. Only 10 unexpired certificates were already revoked.

I have reported the still valid certificates to the responsible certificate authorities for revocation. CAs are obliged to revoke certificates with known-compromised keys within 24 hours. Therefore, these certificates will all be revoked soon. (I also filed two reports due to difficulties with the reporting process of some CAs.)

As mentioned above, it was already known in 2022 that this vulnerabiltiy was actively exploited. Yet, it appears that, overwhelmingly, this did not lead to people replacing their likely compromised private keys and revoking their certificates. While we cannot tell from this observation whether the same is true for passwords and other private keys not used in publicly trusted certificates, it appears likely.

Rant

It is an unfortunate reality that these days, security products often are themselves the source of security vulnerabilities. While I have no empirical evidence for this (and nobody else has the data, have I complained about this before?), I believe that we have entered a situation in recent years where security products turned from "mostly useless, sometimes harmful" to "almost certainly causing more security issues than they prevent". I have more thoughts on this that I may share at another time.

If you are wondering what to do, the solution is neither to patch and fix your Fortinet device nor to buy additional attack surface from one of its equally bad competitors. It is to stop believing that adding more attack surface will increase security.

Detecting affected keys

Detection for those keys has been added to badkeys. It is an open source tool, installable via Python's package management. You can use it to scan SSH host keys, certificates, or private keys. Make sure to update the badkeys blocklist (badkeys --update-bl) before doing so.

Please note that this detection is currently based on incomplete data and does not cover all keys in the leak. More updates with more complete coverage may come. Please also note that I have not made the private keys public. Unlike in other cases, this means badkeys cannot give you the private key. (I may decide to publish the keys at some point in the future.)

The badkeys blocklist uses a yet poorly documented format (it is on my todo list to improve this). I am sharing a list of SPKI SHA256 hashes of the affected keys to make it easier for others to write their own detection.

Update (2025-01-23): As mentioned, the original analysis was done with an incomplete set of the data. I later was able to access more complete data, increasing the number of keys to around 98,000 SSH keys and 167,000 PKCS keys. This also led to additional certificates reported for revocation. I have not kept track of the exact numbers. Therefore, the numbers above all refer to the incomplete dataset.

You can find a script to extract and decrypt passwords and private keys from Fortigate configuration files here.

Update (2025-01-24): During further analysis, I discovered that the dataset contained 314 private keys belonging to Let's Encrypt ACME accounts. I have now deactivated those accounts. Given that it has been over a week since the initial report of the incident, this adds to the impression that neither Fortinet itself nor their affected customers appear to have put any effort into cleaning up after this incident.

Update (2025-04-16): The keys.

Image source: paomedia / Public Domain

Posted by Hanno Böck

in Code, Cryptography, English, Security

at

17:04

| Comments (0)

| Trackbacks (0)

Monday, February 5. 2024

How to create a Secure, Random Password with JavaScript

Let's look at a few examples and learn how to create an actually secure password generation function. Our goal is to create a password from a defined set of characters with a fixed length. The password should be generated from a secure source of randomness, and it should have a uniform distribution, meaning every character should appear with the same likelihood. While the examples are JavaScript code, the principles can be used in any programming language.

One of the first examples to show up in Google is a blog post on the webpage dev.to. Here is the relevant part of the code:

/* Example with weak random number generator, DO NOT USE */

var chars = "0123456789abcdefghijklmnopqrstuvwxyz!@#$%^&*()ABCDEFGHIJKLMNOPQRSTUVWXYZ";

var passwordLength = 12;

var password = "";

for (var i = 0; i <= passwordLength; i++) {

var randomNumber = Math.floor(Math.random() * chars.length);

password += chars.substring(randomNumber, randomNumber +1);

}Math.random() does not provide cryptographically secure random numbers. Do not use them for anything related to security. Use the Web Crypto API instead, and more precisely, the window.crypto.getRandomValues() method.

This is pretty clear: We should not use Math.random() for security purposes, as it gives us no guarantees about the security of its output. This is not a merely theoretical concern: here is an example where someone used Math.random() to generate tokens and ended up seeing duplicate tokens in real-world use.

MDN tells us to use the getRandomValues() function of the Web Crypto API, which generates cryptographically strong random numbers.

We can make a more general statement here: Whenever we need randomness for security purposes, we should use a cryptographically secure random number generator. Even in non-security contexts, using secure random sources usually has no downsides. Theoretically, cryptographically strong random number generators can be slower, but unless you generate Gigabytes of random numbers, this is irrelevant. (I am not going to expand on how exactly cryptographically strong random number generators work, as this is something that should be done by the operating system. You can find a good introduction here.)

All modern operating systems have built-in functionality for this. Unfortunately, for historical reasons, in many programming languages, there are simple and more widely used random number generation functions that many people use, and APIs for secure random numbers often come with extra obstacles and may not always be available. However, in the case of Javascript, crypto.getRandomValues() has been available in all major browsers for over a decade.

After establishing that we should not use Math.random(), we may check whether searching specifically for that gives us a better answer. When we search for "Javascript random password without Math.Random()", the first result that shows up is titled "Never use Math.random() to create passwords in JavaScript". That sounds like a good start. Unfortunately, it makes another mistake. Here is the code it recommends:

/* Example with floating point rounding bias, DO NOT USE */

function generatePassword(length = 16)

{

let generatedPassword = "";

const validChars = "0123456789" +

"abcdefghijklmnopqrstuvwxyz" +

"ABCDEFGHIJKLMNOPQRSTUVWXYZ" +

",.-{}+!\"#$%/()=?";

for (let i = 0; i < length; i++) {

let randomNumber = crypto.getRandomValues(new Uint32Array(1))[0];

randomNumber = randomNumber / 0x100000000;

randomNumber = Math.floor(randomNumber * validChars.length);

generatedPassword += validChars[randomNumber];

}

return generatedPassword;

}The problem with this approach is that it uses floating-point numbers. It is generally a good idea to avoid floats in security and particularly cryptographic applications whenever possible. Floats introduce rounding errors, and due to the way they are stored, it is practically almost impossible to generate a uniform distribution. (See also this explanation in a StackExchange comment.)

Therefore, while this implementation is better than the first and probably "good enough" for random passwords, it is not ideal. It does not give us the best security we can have with a certain length and character choice of a password.

Another way of mapping a random integer number to an index for our list of characters is to use a random value modulo the size of our character class. Here is an example from a StackOverflow comment:

/* Example with modulo bias, DO NOT USE */

var generatePassword = (

length = 20,

characters = '0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz~!@-#$'

) =>

Array.from(crypto.getRandomValues(new Uint32Array(length)))

.map((x) => characters[x % characters.length])

.join('')The modulo bias in this example is quite small, so let's look at a different example. Assume we use letters and numbers (a-z, A-Z, 0-9, 62 characters total) and take a single byte (256 different values, 0-255) r from the random number generator. If we use the modulus r % 62, some characters are more likely to appear than others. The reason is that 256 is not a multiple of 62, so it is impossible to map our byte to this list of characters with a uniform distribution.

In our example, the lowercase "a" would be mapped to five values (0, 62, 124, 186, 248). The uppercase "A" would be mapped to only four values (26, 88, 150, 212). Some values have a higher probability than others.

(For more detailed explanations of a modulo bias, check this post by Yolan Romailler from Kudelski Security and this post from Sebastian Pipping.)

One way to avoid a modulo bias is to use rejection sampling. The idea is that you throw away the values that cause higher probabilities. In our example above, 248 and higher values cause the modulo bias, so if we generate such a value, we repeat the process. A piece of code to generate a single random character could look like this:

const pwchars = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";

const limit = 256 - (256 % pwchars.length);

do {

randval = window.crypto.getRandomValues(new Uint8Array(1))[0];

} while (randval >= limit);An alternative to rejection sampling is to make the modulo bias so small that it does not matter (by using a very large random value).

However, I find rejection sampling to be a much cleaner solution. If you argue that the modulo bias is so small that it does not matter, you must carefully analyze whether this is true. For a password, a small modulo bias may be okay. For cryptographic applications, things can be different. Rejection sampling avoids the modulo bias completely. Therefore, it is always a safe choice.

There are two things you might wonder about this approach. One is that it introduces a timing difference. In cases where the random number generator turns up multiple numbers in a row that are thrown away, the code runs a bit longer.

Timing differences can be a problem in security code, but this one is not. It does not reveal any information about the password because it is only influenced by values we throw away. Even if an attacker were able to measure the exact timing of our password generation, it would not give him any useful information. (This argument is however only true for a cryptographically secure random number generator. It assumes that the ignored random values do not reveal any information about the random number generator's internal state.)

Another issue with this approach is that it is not guaranteed to finish in any given time. Theoretically, the random number generator could produce numbers above our limit so often that the function stalls. However, the probability of that happening quickly becomes so low that it is irrelevant. Such code is generally so fast that even multiple rounds of rejection would not cause a noticeable delay.

To summarize: If we want to write a secure, random password generation function, we should consider three things: We should use a secure random number generation function. We should avoid floating point numbers. And we should avoid a modulo bias.

Taking this all together, here is a Javascript function that generates a 15-character password, composed of ASCII letters and numbers:

function simplesecpw() {

const pwlen = 15;

const pwchars = "abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789";

const limit = 256 - (256 % pwchars.length);

let passwd = "";

let randval;

for (let i = 0; i < pwlen; i++) {

do {

randval = window.crypto.getRandomValues(new Uint8Array(1))[0];

} while (randval >= limit);

passwd += pwchars[randval % pwchars.length];

}

return passwd;

}If you want to use that code, feel free to do so. I have published it - and a slightly more configurable version that allows optionally setting the length and the set of characters - under a very permissive license (0BSD license).

An online demo generating a password with this code can be found at https://password.hboeck.de/. All code is available on GitHub.

Image Source: SVG Repo, CC0

Posted by Hanno Böck

in Code, Cryptography, English, Security, Webdesign

at

15:23

| Comments (0)

| Trackbacks (0)

Wednesday, October 21. 2020

File Exfiltration via Libreoffice in BigBlueButton and JODConverter

BigBlueButton is a free web-based video conferencing software that lately got quite popular, largely due to Covid-19. Earlier this year I did a brief check on its security which led to an article on Golem.de (German). I want to share the most significant findings here.

BigBlueButton is a free web-based video conferencing software that lately got quite popular, largely due to Covid-19. Earlier this year I did a brief check on its security which led to an article on Golem.de (German). I want to share the most significant findings here.BigBlueButton has a feature that lets a presenter upload a presentation in a wide variety of file formats that gets then displayed in the web application. This looked like a huge attack surface. The conversion for many file formats is done with Libreoffice on the server. Looking for ways to exploit server-side Libreoffice rendering I found a blog post by Bret Buerhaus that discussed a number of ways of exploiting such setups.

One of the methods described there is a feature in Opendocument Text (ODT) files that allows embedding a file from an external URL in a text section. This can be a web URL like https or a file url and include a local file.

This directly worked in BigBlueButton. An ODT file that referenced a local file would display that local file. This allows displaying any file that the user running the BigBlueButton service could access on the server. A possible way to exploit this is to exfiltrate the configuration file that contains the API secret key, which then allows basically controlling the BigBlueButton instance. I have a video showing the exploit here. (I will publish the exploit later.)

I reported this to the developers of BigBlueButton in May. Unfortunately my experience with their security process was not very good. At first I did not get an answer at all. After another mail they told me they plan to sandbox the Libreoffice process either via a chroot or a docker container. However that still has not happened yet. It is planned for the upcoming version 2.3 and independent of this bug this is a good idea, as Libreoffice just creates a lot of attack surface.

Recently I looked a bit more into this. The functionality to include external files only happens after a manual user confirmation and if one uses Libreoffice on the command line it does not work at all by default. So in theory this exploit should not have worked, but it did.

It turned out the reason for this was another piece of software that BigBlueButton uses called JODConverter. It provides a wrapper around the conversion functionality of Libreoffice. After contacting both the Libreoffice security team and the developer of JODConverter we figured out that it enables including external URLs by default.

I forwarded this information to the BigBlueButton developers and it finally let to a fix, they now change the default settings of JODConverter manually. The JODConverter developer considers changing the default as well, but this has not happened yet. Other software or web pages using JODConverter for serverside file conversion may thus still be vulnerable.

The fix was included in version 2.2.27. Today I learned that the company RedTeam Pentesting has later independently found the same vulnerability. They also requested a CVE: It is now filed as CVE-2020-25820.

While this issue is fixed, the handling of security issues by BigBlueButton was not exactly stellar. It took around five months from my initial report to a fix. The release notes do not mention that this is an important security update (the change has the note “speed up the conversion”).

I found a bunch of other security issues in BigBlueButton and proposed some hardening changes. This took a lot of back and forth, but all significant issues are resolved now.

Another issue with the presentation upload was that it allowed cross site scripting, because it did not set a proper content type for downloads. This was independently discovered by another person and was fixed a while ago. (If you are interested in details about this class of vulnerabilities: I have given a talk about it at last year’s Security Fest.)

The session Cookies both from BigBlueButton itself and from its default web frontend Greenlight were not set with a secure flag, so the cookies could be transmitted in clear text over the network. This has also been changed now.

By default the BigBlueButton installation script starts several services that open ports that do not need to be publicly accessible. This is now also changed. A freeswitch service run locally was installed with a default password (“ClueCon”), this is now also changed to a random password by the installation script.

What also looks quite problematic is the use of outdated software. BigBlueButton only works on Ubuntu 16.04, which is a long term support version, so it still receives updates. But it also uses several external repositories, including one that installs NodeJS version 8 and shows a warning that this repository no longer receives security updates. There is an open bug in the bug tracker.

If you are using BigBlueButton I strongly recommend you update to at least version 2.2.27. This should fix all the issues I found. I would wish that the BigBlueButton developers improve their security process, react more timely to external reports and more transparent when issues are fixed.

Image Source: Wikimedia Commons / NOAA / Public Domain

Update: Proof of concept published.

Posted by Hanno Böck

in Code, English, Linux, Security

at

14:14

| Comments (0)

| Trackback (1)

Defined tags for this entry: bigbluebutton, cookie, fileexfiltration, itsecurity, jodconverter, libreoffice, security, websecurity, xss

Monday, December 16. 2019

#include </etc/shadow>

Recently I saw a tweet where someone mentioned that you can include /dev/stdin in C code compiled with gcc. This is, to say the very least, surprising.

When you see something like this with an IT security background you start to wonder if this can be abused for an attack. While I couldn't come up with anything, I started to wonder what else you could include. As you can basically include arbitrary paths on a system this may be used to exfiltrate data - if you can convince someone else to compile your code.

There are plenty of webpages that offer online services where you can type in C code and run it. It is obvious that such systems are insecure if the code running is not sandboxed in some way. But is it equally obvious that the compiler also needs to be sandboxed?

How would you attack something like this? Exfiltrating data directly through the code is relatively difficult, because you need to include data that ends up being valid C code. Maybe there's a trick to make something like /etc/shadow valid C code (you can put code before and after the include), but I haven't found it. But it's not needed either: The error messages you get from the compiler are all you need. All online tools I tested will show you the errors if your code doesn't compile.

I even found one service that allowed me to add

#include </etc/shadow>

and showed me the hash of the root password. This effectively means this service is running compile tasks as root.

Including various files in /etc allows to learn something about the system. For example /etc/lsb-release often gives information about the distribution in use. Interestingly, including pseudo-files from /proc does not work. It seems gcc treats them like empty files. This limits possibilities to learn about the system. /sys and /dev work, but they contain less human readable information.

In summary I think services letting other people compile code should consider sandboxing the compile process and thus make sure no interesting information can be exfiltrated with these attack vectors.

When you see something like this with an IT security background you start to wonder if this can be abused for an attack. While I couldn't come up with anything, I started to wonder what else you could include. As you can basically include arbitrary paths on a system this may be used to exfiltrate data - if you can convince someone else to compile your code.

There are plenty of webpages that offer online services where you can type in C code and run it. It is obvious that such systems are insecure if the code running is not sandboxed in some way. But is it equally obvious that the compiler also needs to be sandboxed?

How would you attack something like this? Exfiltrating data directly through the code is relatively difficult, because you need to include data that ends up being valid C code. Maybe there's a trick to make something like /etc/shadow valid C code (you can put code before and after the include), but I haven't found it. But it's not needed either: The error messages you get from the compiler are all you need. All online tools I tested will show you the errors if your code doesn't compile.

I even found one service that allowed me to add

#include </etc/shadow>

and showed me the hash of the root password. This effectively means this service is running compile tasks as root.

Including various files in /etc allows to learn something about the system. For example /etc/lsb-release often gives information about the distribution in use. Interestingly, including pseudo-files from /proc does not work. It seems gcc treats them like empty files. This limits possibilities to learn about the system. /sys and /dev work, but they contain less human readable information.

In summary I think services letting other people compile code should consider sandboxing the compile process and thus make sure no interesting information can be exfiltrated with these attack vectors.

Friday, September 13. 2019

Security Issues with PGP Signatures and Linux Package Management

In discussions around the PGP ecosystem one thing I often hear is that while PGP has its problems, it's an important tool for package signatures in Linux distributions. I therefore want to highlight a few issues I came across in this context that are rooted in problems in the larger PGP ecosystem.

Let's look at an example of the use of PGP signatures for deb packages, the Ubuntu Linux installation instructions for HHVM. HHVM is an implementation of the HACK programming language and developed by Facebook. I'm just using HHVM as an example here, as it nicely illustrates two attacks I want to talk about, but you'll find plenty of similar installation instructions for other software packages. I have reported these issues to Facebook, but they decided not to change anything.

The instructions for Ubuntu (and very similarly for Debian) recommend that users execute these commands in order to install HHVM from the repository of its developers:

The crucial part here is the line starting with apt-key. It fetches the key that is used to sign the repository from the Ubuntu key server, which itself is part of the PGP keyserver network.

Attack 1: Flooding Key with Signatures

Attack 1: Flooding Key with Signatures

The first possible attack is actually quite simple: One can make the signature key offered here unusable by appending many signatures.

A key concept of the PGP keyservers is that they operate append-only. New data gets added, but never removed. PGP keys can sign other keys and these signatures can also be uploaded to the keyservers and get added to a key. Crucially the owner of a key has no influence on this.

This means everyone can grow the size of a key by simply adding many signatures to it. Lately this has happened to a number of keys, see the blog posts by Daniel Kahn Gillmor and Robert Hansen, two members of the PGP community who have personally been affected by this. The effect of this is that when GnuPG tries to import such a key it becomes excessively slow and at some point will simply not work any more.

For the above installation instructions this means anyone can make them unusable by attacking the referenced release key. In my tests I was still able to import one of the attacked keys with apt-key after several minutes, but these keys "only" have a few ten thousand signatures, growing them to a few megabytes size. There's no reason an attacker couldn't use millions of signatures and grow single keys to gigabytes.

Attack 2: Rogue packages with a colliding Key Id

The installation instructions reference the key as 0xB4112585D386EB94, which is a 64 bit hexadecimal key id.

Key ids are a central concept in the PGP ecosystem. The key id is a truncated SHA1 hash of the public key. It's possible to either use the last 32 bit, 64 bit or the full 160 bit of the hash.

It's been pointed out in the past that short key ids allow colliding key ids. This means an attacker can generate a different key with the same key id where he owns the private key simply by bruteforcing the id. In 2014 Richard Klafter and Eric Swanson showed with the Evil32 attack how to create colliding key ids for all keys in the so-called strong set (meaning all keys that are connected with most other keys in the web of trust). Later someone unknown uploaded these keys to the key servers causing quite some confusion.

It should be noted that the issue of colliding key ids was known and discussed in the community way earlier, see for example this discussion from 2002.

The practical attacks targeted 32 bit key ids, but the same attack works against 64 bit key ids, too, it just costs more. I contacted the authors of the Evil32 attack and Eric Swanson estimated in a back of the envelope calculation that it would cost roughly $ 120.000 to perform such an attack with GPUs on cloud providers. This is expensive, but within the possibilities of powerful attackers. Though one can also find similar installation instructions using a 32 bit key id, where the attack is really cheap.

Going back to the installation instructions from above we can imagine the following attack: A man in the middle network attacker can intercept the connection to the keyserver - it's not encrypted or authenticated - and provide the victim a colliding key. Afterwards the key is imported by the victim, so the attacker can provide repositories with packages signed by his key, ultimately leading to code execution.

You may notice that there's a problem with this attack: The repository provided by HHVM is using HTTPS. Thus the attacker can not simply provide a rogue HHVM repository. However the attack still works.

The imported PGP key is not bound to any specific repository. Thus if the victim has any non-HTTPS repository configured in his system the attacker can provide a rogue repository on the next call of "apt update". Notably by default both Debian and Ubuntu use HTTP for their repositories (a Debian developer even runs a dedicated web page explaining why this is no big deal).

Attack 3: Key over HTTP

Issues with package keys aren't confined to Debian/APT-based distributions. I found these installation instructions at Dropbox (Link to Wayback Machine, as Dropbox has changed them after I reported this):

It should be obvious what the issue here is: Both the key and the repository are fetched over HTTP, a network attacker can simply provide his own key and repository.

Discussion

The standard answer you often get when you point out security problems with PGP-based systems is: "It's not PGP/GnuPG, people are just using it wrong". But I believe these issues show some deeper problems with the PGP ecosystem. The key flooding issue is inherited from the systemically flawed concept of the append only key servers.

The other issue here is lack of deprecation. Short key ids are problematic, it's been known for a long time and there have been plenty of calls to get rid of them. This begs the question why no effort has been made to deprecate support for them. One could have said at some point: Future versions of GnuPG will show a warning for short key ids and in three years we will stop supporting them.

This reminds of other issues like unauthenticated encryption, where people have been arguing that this was fixed back in 1999 by the introduction of the MDC. Yet in 2018 it was still exploitable, because the unauthenticated version was never properly deprecated.

Fix

For all people having installation instructions for external repositories my recommendation would be to avoid any use of public key servers. Host the keys on your own infrastructure and provide them via HTTPS. Furthermore any reference to 32 bit or 64 bit key ids should be avoided.

Update: Some people have pointed out to me that the Debian Wiki contains guidelines for third party repositories that avoid the issues mentioned here.

Let's look at an example of the use of PGP signatures for deb packages, the Ubuntu Linux installation instructions for HHVM. HHVM is an implementation of the HACK programming language and developed by Facebook. I'm just using HHVM as an example here, as it nicely illustrates two attacks I want to talk about, but you'll find plenty of similar installation instructions for other software packages. I have reported these issues to Facebook, but they decided not to change anything.

The instructions for Ubuntu (and very similarly for Debian) recommend that users execute these commands in order to install HHVM from the repository of its developers:

apt-get update

apt-get install software-properties-common apt-transport-https

apt-key adv --recv-keys --keyserver hkp://keyserver.ubuntu.com:80 0xB4112585D386EB94

add-apt-repository https://dl.hhvm.com/ubuntu

apt-get update

apt-get install hhvm

apt-get install software-properties-common apt-transport-https

apt-key adv --recv-keys --keyserver hkp://keyserver.ubuntu.com:80 0xB4112585D386EB94

add-apt-repository https://dl.hhvm.com/ubuntu

apt-get update

apt-get install hhvm

The crucial part here is the line starting with apt-key. It fetches the key that is used to sign the repository from the Ubuntu key server, which itself is part of the PGP keyserver network.

Attack 1: Flooding Key with Signatures

Attack 1: Flooding Key with SignaturesThe first possible attack is actually quite simple: One can make the signature key offered here unusable by appending many signatures.

A key concept of the PGP keyservers is that they operate append-only. New data gets added, but never removed. PGP keys can sign other keys and these signatures can also be uploaded to the keyservers and get added to a key. Crucially the owner of a key has no influence on this.

This means everyone can grow the size of a key by simply adding many signatures to it. Lately this has happened to a number of keys, see the blog posts by Daniel Kahn Gillmor and Robert Hansen, two members of the PGP community who have personally been affected by this. The effect of this is that when GnuPG tries to import such a key it becomes excessively slow and at some point will simply not work any more.

For the above installation instructions this means anyone can make them unusable by attacking the referenced release key. In my tests I was still able to import one of the attacked keys with apt-key after several minutes, but these keys "only" have a few ten thousand signatures, growing them to a few megabytes size. There's no reason an attacker couldn't use millions of signatures and grow single keys to gigabytes.

Attack 2: Rogue packages with a colliding Key Id

The installation instructions reference the key as 0xB4112585D386EB94, which is a 64 bit hexadecimal key id.

Key ids are a central concept in the PGP ecosystem. The key id is a truncated SHA1 hash of the public key. It's possible to either use the last 32 bit, 64 bit or the full 160 bit of the hash.

It's been pointed out in the past that short key ids allow colliding key ids. This means an attacker can generate a different key with the same key id where he owns the private key simply by bruteforcing the id. In 2014 Richard Klafter and Eric Swanson showed with the Evil32 attack how to create colliding key ids for all keys in the so-called strong set (meaning all keys that are connected with most other keys in the web of trust). Later someone unknown uploaded these keys to the key servers causing quite some confusion.

It should be noted that the issue of colliding key ids was known and discussed in the community way earlier, see for example this discussion from 2002.

The practical attacks targeted 32 bit key ids, but the same attack works against 64 bit key ids, too, it just costs more. I contacted the authors of the Evil32 attack and Eric Swanson estimated in a back of the envelope calculation that it would cost roughly $ 120.000 to perform such an attack with GPUs on cloud providers. This is expensive, but within the possibilities of powerful attackers. Though one can also find similar installation instructions using a 32 bit key id, where the attack is really cheap.

Going back to the installation instructions from above we can imagine the following attack: A man in the middle network attacker can intercept the connection to the keyserver - it's not encrypted or authenticated - and provide the victim a colliding key. Afterwards the key is imported by the victim, so the attacker can provide repositories with packages signed by his key, ultimately leading to code execution.

You may notice that there's a problem with this attack: The repository provided by HHVM is using HTTPS. Thus the attacker can not simply provide a rogue HHVM repository. However the attack still works.

The imported PGP key is not bound to any specific repository. Thus if the victim has any non-HTTPS repository configured in his system the attacker can provide a rogue repository on the next call of "apt update". Notably by default both Debian and Ubuntu use HTTP for their repositories (a Debian developer even runs a dedicated web page explaining why this is no big deal).

Attack 3: Key over HTTP

Issues with package keys aren't confined to Debian/APT-based distributions. I found these installation instructions at Dropbox (Link to Wayback Machine, as Dropbox has changed them after I reported this):

Add the following to /etc/yum.conf.

name=Dropbox Repository

baseurl=http://linux.dropbox.com/fedora/\$releasever/

gpgkey=http://linux.dropbox.com/fedora/rpm-public-key.asc

name=Dropbox Repository

baseurl=http://linux.dropbox.com/fedora/\$releasever/

gpgkey=http://linux.dropbox.com/fedora/rpm-public-key.asc

It should be obvious what the issue here is: Both the key and the repository are fetched over HTTP, a network attacker can simply provide his own key and repository.

Discussion

The standard answer you often get when you point out security problems with PGP-based systems is: "It's not PGP/GnuPG, people are just using it wrong". But I believe these issues show some deeper problems with the PGP ecosystem. The key flooding issue is inherited from the systemically flawed concept of the append only key servers.

The other issue here is lack of deprecation. Short key ids are problematic, it's been known for a long time and there have been plenty of calls to get rid of them. This begs the question why no effort has been made to deprecate support for them. One could have said at some point: Future versions of GnuPG will show a warning for short key ids and in three years we will stop supporting them.

This reminds of other issues like unauthenticated encryption, where people have been arguing that this was fixed back in 1999 by the introduction of the MDC. Yet in 2018 it was still exploitable, because the unauthenticated version was never properly deprecated.

Fix

For all people having installation instructions for external repositories my recommendation would be to avoid any use of public key servers. Host the keys on your own infrastructure and provide them via HTTPS. Furthermore any reference to 32 bit or 64 bit key ids should be avoided.

Update: Some people have pointed out to me that the Debian Wiki contains guidelines for third party repositories that avoid the issues mentioned here.

Posted by Hanno Böck

in Code, English, Linux, Security

at

14:17

| Comments (5)

| Trackback (1)

Defined tags for this entry: apt, cryptography, deb, debian, fedora, gnupg, gpg, linux, openpgp, packagemanagement, pgp, rpm, security, signatures, ubuntu

Wednesday, April 11. 2018

Introducing Snallygaster - a Tool to Scan for Secrets on Web Servers

A few days ago I figured out that several blogs operated by T-Mobile Austria had a Git repository exposed which included their wordpress configuration file. Due to the fact that a phpMyAdmin installation was also accessible this would have allowed me to change or delete their database and subsequently take over their blogs.

A few days ago I figured out that several blogs operated by T-Mobile Austria had a Git repository exposed which included their wordpress configuration file. Due to the fact that a phpMyAdmin installation was also accessible this would have allowed me to change or delete their database and subsequently take over their blogs.Git Repositories, Private Keys, Core Dumps

Last year I discovered that the German postal service exposed a database with 200.000 addresses on their webpage, because it was simply named dump.sql (which is the default filename for database exports in the documentation example of mysql). An Australian online pharmacy exposed a database under the filename xaa, which is the output of the "split" tool on Unix systems.

It also turns out that plenty of people store their private keys for TLS certificates on their servers - or their SSH keys. Crashing web applications can leave behind coredumps that may expose application memory.

For a while now I became interested in this class of surprisingly trivial vulnerabilities: People leave files accessible on their web servers that shouldn't be public. I've given talks at a couple of conferences (recordings available from Bornhack, SEC-T, Driving IT). I scanned for these issues with a python script that extended with more and more such checks.

Scan your Web Pages with snallygaster

It's taken a bit longer than intended, but I finally released it: It's called Snallygaster and is available on Github and PyPi.

Apart from many checks for secret files it also contains some checks for related issues like checking invalid src references which can lead to Domain takeover vulnerabilities, for the Optionsleed vulnerability which I discovered during this work and for a couple of other vulnerabilities I found interesting and easily testable.

Some may ask why I wrote my own tool instead of extending an existing project. I thought about it, but I didn't really find any existing free software vulnerability scanner that I found suitable. The tool that comes closest is probably Nikto, but testing it I felt it comes with a lot of checks - thus it's slow - and few results. I wanted a tool with a relatively high impact that doesn't take forever to run. Another commonly mentioned free vulnerability scanner is OpenVAS - a fork from Nessus back when that was free software - but I found that always very annoying to use and overengineered. It's not a tool you can "just run". So I ended up creating my own tool.

A Dragon Legend in US Maryland

Finally you may wonder what the name means. The Snallygaster is a dragon that according to some legends was seen in Maryland and other parts of the US. Why that name? There's no particular reason, I just searched for a suitable name, I thought a mythical creature may make a good name. So I searched Wikipedia for potential names and checked for name collisions. This one had none and also sounded funny and interesting enough.

I hope snallygaster turns out to be useful for administrators and pentesters and helps exposing this class of trivial, but often powerful, vulnerabilities. Obviously I welcome new ideas of further tests that could be added to snallygaster.

Thursday, September 7. 2017

In Search of a Secure Time Source

Update: This blogpost was written before NTS was available, and the information is outdated. If you are looking for a modern solution, I recommend using software and a time server with Network Time Security, as specified in RFC 8915.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

I'd like my systems to have a secure time. So I'm looking for a timesetting tool that fullfils two requirements:

Although these seem like trivial requirements to my knowledge such a tool doesn't exist. These are relatively loose requirements. One might want to add:

Some people need a very accurate time source, for example for certain scientific use cases. But that's outside of my scope. For the vast majority of use cases a clock that is off by a few seconds doesn't matter. While it's certainly a good idea to consider rogue servers given the current state of things I'd be happy to have a solution where I simply trust a server from Google or any other major Internet entity.

So let's look at what we have:

NTP

The common way of setting the clock is the NTP protocol. NTP itself has no transport security built in. It's a plaintext protocol open to manipulation and man in the middle attacks.

There are two variants of "secure" NTP. "Autokey", an authenticated variant of NTP, is broken. There's also a symmetric authentication, but that is impractical for widespread use, as it would require to negotiate a pre-shared key with the time server in advance.

NTPsec and Ntimed

In response to some vulnerabilities in the reference implementation of NTP two projects started developing "more secure" variants of NTP. Ntimed - a rewrite by Poul-Henning Kamp - and NTPsec, a fork of the original NTP software. Ntimed hasn't seen any development for several years, NTPsec seems active. NTPsec had some controversies with the developers of the original NTP reference implementation and its main developer is - to put it mildly - a controversial character.

But none of that matters. Both projects don't implement a "secure" NTP. The "sec" in NTPsec refers to the security of the code, not to the security of the protocol itself. It's still just an implementation of the old, insecure NTP.

Network Time Security

There's a draft for a new secure variant of NTP - called Network Time Security. It adds authentication to NTP.

However it's just a draft and it seems stalled. It hasn't been updated for over a year. In any case: It's not widely implemented and thus it's currently not usable. If that changes it may be an option.

tlsdate

tlsdate is a hack abusing the timestamp of the TLS protocol. The TLS timestamp of a server can be used to set the system time. This doesn't provide high accuracy, as the timestamp is only given in seconds, but it's good enough.

I've used and advocated tlsdate for a while, but it has some problems. The timestamp in the TLS handshake doesn't really have any meaning within the protocol, so several implementers decided to replace it with a random value. Unfortunately that is also true for the default server hardcoded into tlsdate.

Some Linux distributions still ship a package with a default server that will send random timestamps. The result is that your system time is set to a random value. I reported this to Ubuntu a while ago. It never got fixed, however the latest Ubuntu version Zesty Zapis (17.04) doesn't ship tlsdate any more.

Given that Google has shipped tlsdate for some in ChromeOS time it seems unlikely that Google will send randomized timestamps any time soon. Thus if you use tlsdate with www.google.com it should work for now. But it's no future-proof solution.

TLS 1.3 removes the TLS timestamp, so this whole concept isn't future-proof. Alternatively it supports using an HTTPS timestamp. The development of tlsdate has stalled, it hasn't seen any updates lately. It doesn't build with the latest version of OpenSSL (1.1) So it likely will become unusable soon.

OpenNTPD

OpenNTPD

The developers of OpenNTPD, the NTP daemon from OpenBSD, came up with a nice idea. NTP provides high accuracy, yet no security. Via HTTPS you can get a timestamp with low accuracy. So they combined the two: They use NTP to set the time, but they check whether the given time deviates significantly from an HTTPS host. So the HTTPS host provides safety boundaries for the NTP time.

This would be really nice, if there wasn't a catch: This feature depends on an API only provided by LibreSSL, the OpenBSD fork of OpenSSL. So it's not available on most common Linux systems. (Also why doesn't the OpenNTPD web page support HTTPS?)

Roughtime

Roughtime is a Google project. It fetches the time from multiple servers and uses some fancy cryptography to make sure that malicious servers get detected. If a roughtime server sends a bad time then the client gets a cryptographic proof of the malicious behavior, making it possible to blame and shame rogue servers. Roughtime doesn't provide the high accuracy that NTP provides.

From a security perspective it's the nicest of all solutions. However it fails the availability test. Google provides two reference implementations in C++ and in Go, but it's not packaged for any major Linux distribution. Google has an unfortunate tendency to use unusual dependencies and arcane build systems nobody else uses, so packaging it comes with some challenges.

One line bash script beats all existing solutions

As you can see none of the currently available solutions is really feasible and none fulfils the two mild requirements of authenticity and availability.

This is frustrating given that it's a really simple problem. In fact, it's so simple that you can solve it with a single line bash script:

This line sends an HTTPS request to Google, fetches the date header from the response and passes that to the date command line utility.

It provides authenticity via TLS. If the current system time is far off then this fails, as the TLS connection relies on the validity period of the current certificate. Google currently uses certificates with a validity of around three months. The accuracy is only in seconds, so it doesn't qualify for high accuracy requirements. There's no protection against a rogue Google server providing a wrong time.

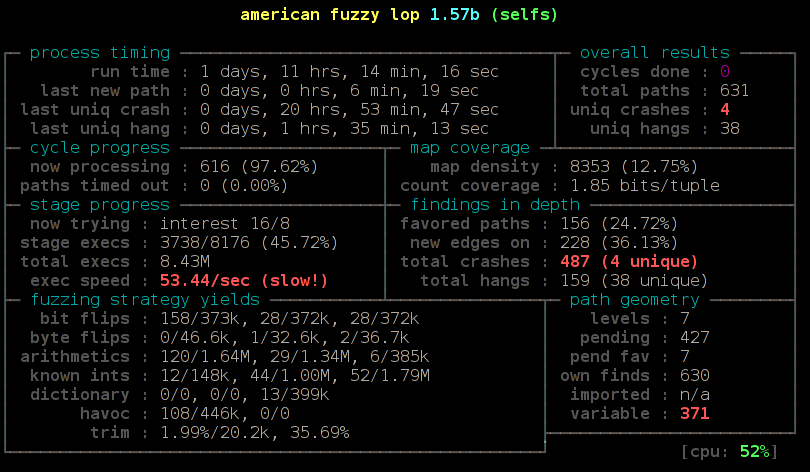

Another potential security concern may be that Google might attack the parser of the date setting tool by serving a malformed date string. However I ran american fuzzy lop against it and it looks robust.

While this certainly isn't as accurate as NTP or as secure as roughtime, it's better than everything else that's available. I put this together in a slightly more advanced bash script called httpstime.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.I'd like my systems to have a secure time. So I'm looking for a timesetting tool that fullfils two requirements:

- It provides authenticity of the time and is not vulnerable to man in the middle attacks.

- It is widely available on common Linux systems.

Although these seem like trivial requirements to my knowledge such a tool doesn't exist. These are relatively loose requirements. One might want to add:

- The timesetting needs to provide a good accuracy.

- The timesetting needs to be protected against malicious time servers.

Some people need a very accurate time source, for example for certain scientific use cases. But that's outside of my scope. For the vast majority of use cases a clock that is off by a few seconds doesn't matter. While it's certainly a good idea to consider rogue servers given the current state of things I'd be happy to have a solution where I simply trust a server from Google or any other major Internet entity.

So let's look at what we have:

NTP

The common way of setting the clock is the NTP protocol. NTP itself has no transport security built in. It's a plaintext protocol open to manipulation and man in the middle attacks.

There are two variants of "secure" NTP. "Autokey", an authenticated variant of NTP, is broken. There's also a symmetric authentication, but that is impractical for widespread use, as it would require to negotiate a pre-shared key with the time server in advance.

NTPsec and Ntimed

In response to some vulnerabilities in the reference implementation of NTP two projects started developing "more secure" variants of NTP. Ntimed - a rewrite by Poul-Henning Kamp - and NTPsec, a fork of the original NTP software. Ntimed hasn't seen any development for several years, NTPsec seems active. NTPsec had some controversies with the developers of the original NTP reference implementation and its main developer is - to put it mildly - a controversial character.

But none of that matters. Both projects don't implement a "secure" NTP. The "sec" in NTPsec refers to the security of the code, not to the security of the protocol itself. It's still just an implementation of the old, insecure NTP.

Network Time Security

There's a draft for a new secure variant of NTP - called Network Time Security. It adds authentication to NTP.

However it's just a draft and it seems stalled. It hasn't been updated for over a year. In any case: It's not widely implemented and thus it's currently not usable. If that changes it may be an option.

tlsdate

tlsdate is a hack abusing the timestamp of the TLS protocol. The TLS timestamp of a server can be used to set the system time. This doesn't provide high accuracy, as the timestamp is only given in seconds, but it's good enough.

I've used and advocated tlsdate for a while, but it has some problems. The timestamp in the TLS handshake doesn't really have any meaning within the protocol, so several implementers decided to replace it with a random value. Unfortunately that is also true for the default server hardcoded into tlsdate.

Some Linux distributions still ship a package with a default server that will send random timestamps. The result is that your system time is set to a random value. I reported this to Ubuntu a while ago. It never got fixed, however the latest Ubuntu version Zesty Zapis (17.04) doesn't ship tlsdate any more.

Given that Google has shipped tlsdate for some in ChromeOS time it seems unlikely that Google will send randomized timestamps any time soon. Thus if you use tlsdate with www.google.com it should work for now. But it's no future-proof solution.

TLS 1.3 removes the TLS timestamp, so this whole concept isn't future-proof. Alternatively it supports using an HTTPS timestamp. The development of tlsdate has stalled, it hasn't seen any updates lately. It doesn't build with the latest version of OpenSSL (1.1) So it likely will become unusable soon.

OpenNTPD

OpenNTPDThe developers of OpenNTPD, the NTP daemon from OpenBSD, came up with a nice idea. NTP provides high accuracy, yet no security. Via HTTPS you can get a timestamp with low accuracy. So they combined the two: They use NTP to set the time, but they check whether the given time deviates significantly from an HTTPS host. So the HTTPS host provides safety boundaries for the NTP time.

This would be really nice, if there wasn't a catch: This feature depends on an API only provided by LibreSSL, the OpenBSD fork of OpenSSL. So it's not available on most common Linux systems. (Also why doesn't the OpenNTPD web page support HTTPS?)

Roughtime

Roughtime is a Google project. It fetches the time from multiple servers and uses some fancy cryptography to make sure that malicious servers get detected. If a roughtime server sends a bad time then the client gets a cryptographic proof of the malicious behavior, making it possible to blame and shame rogue servers. Roughtime doesn't provide the high accuracy that NTP provides.

From a security perspective it's the nicest of all solutions. However it fails the availability test. Google provides two reference implementations in C++ and in Go, but it's not packaged for any major Linux distribution. Google has an unfortunate tendency to use unusual dependencies and arcane build systems nobody else uses, so packaging it comes with some challenges.

One line bash script beats all existing solutions

As you can see none of the currently available solutions is really feasible and none fulfils the two mild requirements of authenticity and availability.

This is frustrating given that it's a really simple problem. In fact, it's so simple that you can solve it with a single line bash script:

date -s "$(curl -sI https://www.google.com/|grep -i 'date:'|sed -e 's/^.ate: //g')"This line sends an HTTPS request to Google, fetches the date header from the response and passes that to the date command line utility.

It provides authenticity via TLS. If the current system time is far off then this fails, as the TLS connection relies on the validity period of the current certificate. Google currently uses certificates with a validity of around three months. The accuracy is only in seconds, so it doesn't qualify for high accuracy requirements. There's no protection against a rogue Google server providing a wrong time.

Another potential security concern may be that Google might attack the parser of the date setting tool by serving a malformed date string. However I ran american fuzzy lop against it and it looks robust.

While this certainly isn't as accurate as NTP or as secure as roughtime, it's better than everything else that's available. I put this together in a slightly more advanced bash script called httpstime.

Posted by Hanno Böck

in Code, Cryptography, English, Linux, Security

at

17:07

| Comments (6)

| Trackbacks (0)

Tuesday, September 5. 2017

Abandoned Domain Takeover as a Web Security Risk

In the modern web it's extremely common to include thirdparty content on web pages. Youtube videos, social media buttons, ads, statistic tools, CDNs for fonts and common javascript files - there are plenty of good and many not so good reasons for this. What is often forgotten is that including other peoples content means giving other people control over your webpage. This is obviously particularly risky if it involves javascript, as this gives a third party full code execution rights in the context of your webpage.

I recently helped a person whose Wordpress blog had a problem: The layout looked broken. The cause was that the theme used a font from a web host - and that host was down. This was easy to fix. I was able to extract the font file from the Internet Archive and store a copy locally. But it made me thinking: What happens if you include third party content on your webpage and the service from which you're including it disappears?

I put together a simple script that would check webpages for HTML tags with the src attribute. If the src attribute points to an external host it checks if the host name actually can be resolved to an IP address. I ran that check on the Alexa Top 1 Million list. It gave me some interesting results. (This methodology has some limits, as it won't discover indirect src references or includes within javascript code, but it should be good enough to get a rough picture.)

Yahoo! Web Analytics was shut down in 2012, yet in 2017 Flickr still tried to use it

The webpage of Flickr included a script from Yahoo! Web Analytics. If you don't know Yahoo Analytics - that may be because it's been shut down in 2012. Although Flickr is a Yahoo! company it seems they haven't noted for quite a while. (The code is gone now, likely because I mentioned it on Twitter.) This example has no security impact as the domain still belongs to Yahoo. But it likely caused an unnecessary slowdown of page loads over many years.

Going through the list of domains I saw plenty of the things you'd expect: Typos, broken URLs, references to localhost and subdomains no longer in use. Sometimes I saw weird stuff, like references to javascript from browser extensions. My best explanation is that someone had a plugin installed that would inject those into pages and then created a copy of the page with the browser which later endet up being used as the real webpage.

I looked for abandoned domain names that might be worth registering. There weren't many. In most cases the invalid domains were hosts that didn't resolve, but that still belonged to someone. I found a few, but they were only used by one or two hosts.

Takeover of unregistered Azure subdomain

But then I saw a couple of domains referencing a javascript from a non-resolving host called piwiklionshare.azurewebsites.net. This is a subdomain from Microsoft's cloud service Azure. Conveniently Azure allows creating test accounts for free, so I was able to grab this subdomain without any costs.

Doing so allowed me to look at the HTTP log files and see what web pages included code from that subdomain. All of them were local newspapers from the US. 20 of them belonged to two adjacent IP addresses, indicating that they were all managed by the same company. I was able to contact them. While I never received any answer, shortly afterwards the code was gone from all those pages.

However the page with most hits was not so easy to contact. It was also a newspaper, the Saline Courier. I tried contacting them directly, their chief editor and their second chief editor. No answer.

After a while I wondered what I could do. Ultimately at some point Microsoft wouldn't let me host that subdomain any longer for free. I didn't want to risk that others could grab that subdomain, but at the same time I obviously also didn't want to pay in order to keep some web page safe whose owners didn't even bother to read my e-mails.

But of course I had another way of contacting them: I could execute Javascript on their web page and use that for some friendly defacement. After some contemplating whether that would be a legitimate thing to do I decided to go for it. I changed the background color to some flashy pink and send them a message. The page remained usable, but it was a message hard to ignore.

With some trouble on the way - first they broke their CSS, then they showed a PHP error message, then they reverted to the page with the defacement. But in the end they managed to remove the code.

There are still a couple of other pages that include that Javascript. Most of them however look like broken test webpages. The only legitimately looking webpage that still embeds that code is the Columbia Missourian. However they don't embed it on the start page, only on the error reporting form they have for every article. It's been several weeks now, they don't seem to care.

What happens to abandoned domains?

There are reasons to believe that what I showed here is only the tip of the iceberg. In many cases when services discontinue their domains don't simply disappear. If the domain name is valuable then almost certainly someone will try to register it immediately after it becomes available.

Someone trying to abuse abandoned domains could watch out for services going ot of business or widely referenced domains becoming available. Just to name an example: I found a couple of hosts referencing subdomains of compete.com. If you go to their web page, you can learn that the company Compete has discontinued its service in 2016. How long will they keep their domain? And what will happen with it afterwards? Whoever gets the domain can hijack all the web pages that still include javascript from it.

Be sure to know what you include

There are some obvious takeaways from this. If you include other peoples code on your web page then you should know what that means: You give them permission to execute whatever they want on your web page. This means you need to wonder how much you can trust them.

At the very least you should be aware who is allowed to execute code on your web page. If they shut down their business or discontinue the service you have been using then you obviously should remove that code immediately. And if you include code from a web statistics service that you never look at anyway you may simply want to remove that as well.

I recently helped a person whose Wordpress blog had a problem: The layout looked broken. The cause was that the theme used a font from a web host - and that host was down. This was easy to fix. I was able to extract the font file from the Internet Archive and store a copy locally. But it made me thinking: What happens if you include third party content on your webpage and the service from which you're including it disappears?

I put together a simple script that would check webpages for HTML tags with the src attribute. If the src attribute points to an external host it checks if the host name actually can be resolved to an IP address. I ran that check on the Alexa Top 1 Million list. It gave me some interesting results. (This methodology has some limits, as it won't discover indirect src references or includes within javascript code, but it should be good enough to get a rough picture.)

Yahoo! Web Analytics was shut down in 2012, yet in 2017 Flickr still tried to use it

The webpage of Flickr included a script from Yahoo! Web Analytics. If you don't know Yahoo Analytics - that may be because it's been shut down in 2012. Although Flickr is a Yahoo! company it seems they haven't noted for quite a while. (The code is gone now, likely because I mentioned it on Twitter.) This example has no security impact as the domain still belongs to Yahoo. But it likely caused an unnecessary slowdown of page loads over many years.

Going through the list of domains I saw plenty of the things you'd expect: Typos, broken URLs, references to localhost and subdomains no longer in use. Sometimes I saw weird stuff, like references to javascript from browser extensions. My best explanation is that someone had a plugin installed that would inject those into pages and then created a copy of the page with the browser which later endet up being used as the real webpage.

I looked for abandoned domain names that might be worth registering. There weren't many. In most cases the invalid domains were hosts that didn't resolve, but that still belonged to someone. I found a few, but they were only used by one or two hosts.

Takeover of unregistered Azure subdomain

But then I saw a couple of domains referencing a javascript from a non-resolving host called piwiklionshare.azurewebsites.net. This is a subdomain from Microsoft's cloud service Azure. Conveniently Azure allows creating test accounts for free, so I was able to grab this subdomain without any costs.

Doing so allowed me to look at the HTTP log files and see what web pages included code from that subdomain. All of them were local newspapers from the US. 20 of them belonged to two adjacent IP addresses, indicating that they were all managed by the same company. I was able to contact them. While I never received any answer, shortly afterwards the code was gone from all those pages.

However the page with most hits was not so easy to contact. It was also a newspaper, the Saline Courier. I tried contacting them directly, their chief editor and their second chief editor. No answer.

After a while I wondered what I could do. Ultimately at some point Microsoft wouldn't let me host that subdomain any longer for free. I didn't want to risk that others could grab that subdomain, but at the same time I obviously also didn't want to pay in order to keep some web page safe whose owners didn't even bother to read my e-mails.

But of course I had another way of contacting them: I could execute Javascript on their web page and use that for some friendly defacement. After some contemplating whether that would be a legitimate thing to do I decided to go for it. I changed the background color to some flashy pink and send them a message. The page remained usable, but it was a message hard to ignore.

With some trouble on the way - first they broke their CSS, then they showed a PHP error message, then they reverted to the page with the defacement. But in the end they managed to remove the code.

There are still a couple of other pages that include that Javascript. Most of them however look like broken test webpages. The only legitimately looking webpage that still embeds that code is the Columbia Missourian. However they don't embed it on the start page, only on the error reporting form they have for every article. It's been several weeks now, they don't seem to care.

What happens to abandoned domains?

There are reasons to believe that what I showed here is only the tip of the iceberg. In many cases when services discontinue their domains don't simply disappear. If the domain name is valuable then almost certainly someone will try to register it immediately after it becomes available.

Someone trying to abuse abandoned domains could watch out for services going ot of business or widely referenced domains becoming available. Just to name an example: I found a couple of hosts referencing subdomains of compete.com. If you go to their web page, you can learn that the company Compete has discontinued its service in 2016. How long will they keep their domain? And what will happen with it afterwards? Whoever gets the domain can hijack all the web pages that still include javascript from it.

Be sure to know what you include

There are some obvious takeaways from this. If you include other peoples code on your web page then you should know what that means: You give them permission to execute whatever they want on your web page. This means you need to wonder how much you can trust them.

At the very least you should be aware who is allowed to execute code on your web page. If they shut down their business or discontinue the service you have been using then you obviously should remove that code immediately. And if you include code from a web statistics service that you never look at anyway you may simply want to remove that as well.

Posted by Hanno Böck

in Code, English, Security, Webdesign

at

19:11

| Comments (3)

| Trackback (1)

Defined tags for this entry: azure, domain, javascript, newspaper, salinecourier, security, subdomain, websecurity

Tuesday, January 26. 2016

Safer use of C code - running Gentoo with Address Sanitizer

Update: When I wrote this blog post it was an open question for me whether using Address Sanitizer in production is a good idea. A recent analysis posted on the oss-security mailing list explains in detail why using Asan in its current form is almost certainly not a good idea. Having any suid binary built with Asan enables a local root exploit - and there are various other issues. Therefore using Gentoo with Address Sanitizer is only recommended for developing and debugging purposes.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.

Address Sanitizer is mainly intended to be a debugging tool. It is usually used to test single applications, often in combination with fuzzing. But as Address Sanitizer can prevent many typical C security bugs - why not use it in production? It doesn't come for free. Address Sanitizer takes significantly more memory and slows down applications by 50 - 100 %. But for some security sensitive applications this may be a reasonable trade-off. The Tor project is already experimenting with this with its Hardened Tor Browser.

One project I've been working on in the past months is to allow a Gentoo system to be compiled with Address Sanitizer. Today I'm publishing this and want to allow others to test it. I have created a page in the Gentoo Wiki that should become the central documentation hub for this project. I published an overlay with several fixes and quirks on Github.

I see this work as part of my Fuzzing Project. (I'm posting it here because the Gentoo category of my personal blog gets indexed by Planet Gentoo.)

I am not sure if using Gentoo with Address Sanitizer is reasonable for a production system. One thing that makes me uneasy in suggesting this for high security requirements is that it's currently incompatible with Grsecurity. But just creating this project already caused me to find a whole number of bugs in several applications. Some notable examples include Coreutils/shred, Bash ([2], [3]), man-db, Pidgin-OTR, Courier, Syslog-NG, Screen, Claws-Mail ([2], [3]), ProFTPD ([2], [3]) ICU, TCL ([2]), Dovecot. I think it was worth the effort.

I will present this work in a talk at FOSDEM in Brussels this Saturday, 14:00, in the Security Devroom.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.

Address Sanitizer is a remarkable feature that is part of the gcc and clang compilers. It can be used to find many typical C bugs - invalid memory reads and writes, use after free errors etc. - while running applications. It has found countless bugs in many software packages. I'm often surprised that many people in the free software community seem to be unaware of this powerful tool.Address Sanitizer is mainly intended to be a debugging tool. It is usually used to test single applications, often in combination with fuzzing. But as Address Sanitizer can prevent many typical C security bugs - why not use it in production? It doesn't come for free. Address Sanitizer takes significantly more memory and slows down applications by 50 - 100 %. But for some security sensitive applications this may be a reasonable trade-off. The Tor project is already experimenting with this with its Hardened Tor Browser.

One project I've been working on in the past months is to allow a Gentoo system to be compiled with Address Sanitizer. Today I'm publishing this and want to allow others to test it. I have created a page in the Gentoo Wiki that should become the central documentation hub for this project. I published an overlay with several fixes and quirks on Github.

I see this work as part of my Fuzzing Project. (I'm posting it here because the Gentoo category of my personal blog gets indexed by Planet Gentoo.)

I am not sure if using Gentoo with Address Sanitizer is reasonable for a production system. One thing that makes me uneasy in suggesting this for high security requirements is that it's currently incompatible with Grsecurity. But just creating this project already caused me to find a whole number of bugs in several applications. Some notable examples include Coreutils/shred, Bash ([2], [3]), man-db, Pidgin-OTR, Courier, Syslog-NG, Screen, Claws-Mail ([2], [3]), ProFTPD ([2], [3]) ICU, TCL ([2]), Dovecot. I think it was worth the effort.

I will present this work in a talk at FOSDEM in Brussels this Saturday, 14:00, in the Security Devroom.

Posted by Hanno Böck

in Code, English, Gentoo, Linux, Security

at

01:40

| Comments (5)

| Trackbacks (0)

Defined tags for this entry: addresssanitizer, asan, bufferoverflow, c, clang, gcc, gentoo, linux, memorysafety, security, useafterfree

Monday, November 30. 2015

A little POODLE left in GnuTLS (old versions)

tl;dr Older GnuTLS versions (2.x) fail to check the first byte of the padding in CBC modes. Various stable Linux distributions, including Ubuntu LTS and Debian wheezy (oldstable) use this version. Current GnuTLS versions are not affected.