Entries tagged as itsecurity

Tuesday, February 25. 2025

Mixing up Public and Private Keys in OpenID Connect deployments

OpenID Connect is a single sign-on protocol that allows web pages to offer logins via other services. Whenever you see a web page that offers logins via, e.g., your Google or Facebook account, the technology behind it is usually OpenID Connect.

An OpenID Provider like Google can publish a configuration file in JSON format for the services interacting with it. The file is located at a well-defined URL of this form: https://example.com/.well-known/openid-configuration (Google's can be found here.)

Those configuration files contain a field "jwks_uri" pointing to a JSON Web Key Set (JWKS) containing cryptographic public keys used to verify authentication tokens. JSON Web Keys are a way to encode cryptographic keys in JSON format, and a JSON Web Key Set is a JSON structure containing multiple such keys. (You can find Google's here.)
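The discovery chain described above (well-known configuration, then "jwks_uri", then the key set) can be sketched with the Python standard library. This is a minimal sketch, not the scanner used for this research. It assumes the issuer is a bare hostname served over HTTPS, and it does no retries, caching or validation beyond checking that "jwks_uri" is present. The function names are illustrative.

```python
import json
import urllib.request

# Location defined by OpenID Connect Discovery, relative to the issuer.
WELL_KNOWN_PATH = "/.well-known/openid-configuration"


def discovery_url(host: str) -> str:
    """Configuration URL for an issuer that is a bare hostname (assumption)."""
    return "https://" + host + WELL_KNOWN_PATH


def jwks_uri_from_config(config_text: str) -> str:
    """Return the "jwks_uri" member of an OpenID configuration document."""
    config = json.loads(config_text)
    uri = config.get("jwks_uri") if isinstance(config, dict) else None
    if not isinstance(uri, str):
        raise ValueError("configuration has no jwks_uri")
    return uri


def fetch_text(url: str, timeout: float = 10.0) -> str:
    """Download a URL and return its body as text (no retries or caching)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return response.read().decode("utf-8")


def fetch_jwks(host: str) -> dict:
    """Follow the configuration of a host to its JSON Web Key Set."""
    config_text = fetch_text(discovery_url(host))
    return json.loads(fetch_text(jwks_uri_from_config(config_text)))
```

For example, `fetch_jwks("accounts.google.com")` should follow Google's published configuration to its key set. The parsing is kept separate from the network code so that it can be tested offline.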

Because the OpenID configuration file is at a known location and references the public keys, it gives us an easy way to scan for such keys. By scanning the Tranco Top 1 Million list and extending the scan with hostnames from SSO-Monitor (a research project providing extensive data about single sign-on services), I identified around 13,000 hosts with a valid OpenID Connect configuration and corresponding JSON Web Key Sets.

Confusing Public and Private Keys

JSON Web Keys have a very peculiar property. Cryptographic public and private keys are, in essence, just some large numbers. For most algorithms, all the numbers of the public key are also contained in the private key. For JSON Web Keys, those numbers are encoded with urlsafe Base64 encoding. (In case you don't know what urlsafe Base64 means, don't worry, it's not important here.)
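For readers who do want the detail, here is a small sketch of how a JWK value turns back into a number. The value is urlsafe Base64 (using "-" and "_" instead of "+" and "/") with the "=" padding removed, read as a big-endian unsigned integer. The helper names are illustrative.

```python
import base64


def b64url_decode(value: str) -> bytes:
    """Decode urlsafe Base64 without padding, as used in JSON Web Keys."""
    # JWK values omit the trailing "=" padding; restore it before decoding.
    return base64.urlsafe_b64decode(value + "=" * (-len(value) % 4))


def b64url_to_int(value: str) -> int:
    """Read a decoded JWK value as a big-endian unsigned integer."""
    return int.from_bytes(b64url_decode(value), "big")
```

As a check, `b64url_to_int("AQAB")` is 65537, the usual RSA public exponent. The "x" coordinate of the P-256 example key below decodes to 32 bytes, which is the size of a P-256 coordinate.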

Here is an example of an ECDSA public key in JSON Web Key format:

```json
{
  "kty": "EC",
  "crv": "P-256",
  "x": "MKBCTNIcKUSDii11ySs3526iDZ8AiTo7Tu6KPAqv7D4",
  "y": "4Etl6SRW2YiLUrN5vfvVHuhp7x8PxltmWWlbbM4IFyM"
}
```

Here is the corresponding private key:

```json
{
  "kty": "EC",
  "crv": "P-256",
  "x": "MKBCTNIcKUSDii11ySs3526iDZ8AiTo7Tu6KPAqv7D4",
  "y": "4Etl6SRW2YiLUrN5vfvVHuhp7x8PxltmWWlbbM4IFyM",
  "d": "870MB6gfuTJ4HtUnUvYMyJpr5eUZNP4Bk43bVdj3eAE"
}
```

You may notice that they look very similar. The only difference is that the private key contains one additional value called "d", which, in the case of ECDSA, is the private key value. In the case of RSA, the private key contains multiple additional values (one of them also called "d"), but the general idea is the same: the private key is just the public key plus some extra values.

What is very unusual, and something I have not seen in any other context, is that the serialization format for public and private keys is the same. The only way to distinguish public and private keys is to check whether private values are present. JSON is commonly interpreted in an extensible way, meaning that any additional fields in a JSON object are usually ignored if they have no meaning to the application reading the file.

These two facts combined lead to an interesting and dangerous property. Using a private key instead of a public key will usually work, because every private key in JSON Web Key format is also a valid public key.
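The following sketch shows how this plays out in code. It uses a hypothetical, deliberately lenient loader that reads only the members it needs. Given the two example keys above, it returns the same public key for both, so the private key passes as a public key without any error.

```python
import json

PUBLIC_JWK = """{"kty": "EC", "crv": "P-256",
  "x": "MKBCTNIcKUSDii11ySs3526iDZ8AiTo7Tu6KPAqv7D4",
  "y": "4Etl6SRW2YiLUrN5vfvVHuhp7x8PxltmWWlbbM4IFyM"}"""

PRIVATE_JWK = """{"kty": "EC", "crv": "P-256",
  "x": "MKBCTNIcKUSDii11ySs3526iDZ8AiTo7Tu6KPAqv7D4",
  "y": "4Etl6SRW2YiLUrN5vfvVHuhp7x8PxltmWWlbbM4IFyM",
  "d": "870MB6gfuTJ4HtUnUvYMyJpr5eUZNP4Bk43bVdj3eAE"}"""


def load_ec_public_key_naively(text: str) -> tuple:
    """Hypothetical lenient loader that picks out only the public members."""
    jwk = json.loads(text)
    if jwk["kty"] != "EC":
        raise ValueError("not an EC key")
    # Every other member, including the private value "d", is silently ignored.
    return (jwk["crv"], jwk["x"], jwk["y"])


same = load_ec_public_key_naively(PUBLIC_JWK) == load_ec_public_key_naively(PRIVATE_JWK)
extra = set(json.loads(PRIVATE_JWK)) - set(json.loads(PUBLIC_JWK))
```

Here `same` is true and `extra` is `{"d"}`. The extra private value is the only difference, and nothing in this loading path ever looks at it.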

You can guess by now where this is going. I checked whether any of the collected public keys from OpenID configurations were actually private keys.

This was the case for 9 hosts. Among them were host names belonging to some prominent companies: stackoverflowteams.com, stack.uberinternal.com, and ask.fisglobal.com. All three appear to use a service provided by Stack Overflow and have since been fixed. (A report to Uber's bug bounty program at HackerOne was closed as a duplicate of a report they said they could not show me. The report to FIS Global was closed by Bugcrowd's triagers as not applicable, with a generic response containing some explanations about OpenID Connect that appeared to be entirely unrelated to my report. After I asked for an explanation, I was asked to provide a proof of concept, although the issue had already been fixed by then. Stack Overflow has no bug bounty program, but fixed it after a report to their security contact.)

Short RSA Keys

7 hosts had RSA keys with a key length of 512 bits configured. Such keys have long been known to be breakable, and today breaking them takes relatively little effort. 45 hosts had RSA keys with a length of 1024 bits, which are considered breakable by powerful attackers, although no such attack has been publicly demonstrated yet.

The first successful public attack on 512-bit RSA was performed in 1999. Back then, it required months on a supercomputer. Today, breaking such keys is accessible to practically everyone. An implementation of the best-known attack on RSA is available as open source software called CADO-NFS. Ryan Castellucci recently ran such an attack on a 512-bit RSA key they found in the control software of a solar and battery storage system. They mentioned a price of $70 for cloud services to perform the attack in a few hours. Cracking a 512-bit RSA key is, therefore, not a significant hurdle for any determined attacker.

Using Example Keys in Production

Running badkeys, my tool for detecting known-vulnerable and compromised keys, on the collected keys uncovered another type of vulnerability. Before running the scan, I made sure that badkeys would detect the example private keys of common open source OpenID Connect implementations. I discovered 18 affected hosts with such "Public Private Keys", i.e., keys whose corresponding private key is part of an existing, publicly available software package.
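The text does not describe how badkeys matches keys internally. The sketch below uses a different, standardized technique: the RFC 7638 JWK thumbprint, which is a SHA-256 hash over the required public members in a canonical JSON form. A scanner could compare this hash against a list of known example keys. `KNOWN_EXAMPLE_THUMBPRINTS` is a hypothetical, initially empty set that someone would have to fill with the thumbprints of example keys from real software.

```python
import base64
import hashlib
import json

# Required public members per key type, as listed in RFC 7638.
REQUIRED_MEMBERS = {"EC": ("crv", "kty", "x", "y"), "RSA": ("e", "kty", "n")}

# Hypothetical: thumbprints of example keys shipped with OpenID Connect software.
KNOWN_EXAMPLE_THUMBPRINTS = set()


def jwk_thumbprint(jwk: dict) -> str:
    """RFC 7638 SHA-256 thumbprint; private members are ignored by design."""
    members = REQUIRED_MEMBERS[jwk["kty"]]
    # Canonical form: required members only, sorted, no whitespace.
    canonical = json.dumps({m: jwk[m] for m in members}, sort_keys=True, separators=(",", ":"))
    digest = hashlib.sha256(canonical.encode("utf-8")).digest()
    return base64.urlsafe_b64encode(digest).rstrip(b"=").decode("ascii")


def is_known_example_key(jwk: dict) -> bool:
    """True if the key matches one of the collected example keys."""
    return jwk_thumbprint(jwk) in KNOWN_EXAMPLE_THUMBPRINTS
```

Because the thumbprint covers only public members, a leaked private JWK and its public counterpart hash to the same value. A single list therefore catches both forms.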

I have reported all 512-bit RSA keys and uses of example keys to the affected parties. Most of them remain unfixed.

Impact

Overall, I discovered 33 vulnerable hosts. With 13,000 detected OpenID configurations total, 0.25% of those were vulnerable in a way that would allow an attacker to access the private key.

How severe is such a private key compromise? It depends. OpenID Connect supports different ways in which authentication tokens are exchanged between an OpenID Provider and a Consumer. The token can be exchanged via the browser. In this case the impact is most severe, as the key simply allows an attacker to sign arbitrary login tokens for any identity.

The token can also be exchanged directly between the OpenID Provider and the Consumer. In this case, an attack is much less likely, as it would require a man-in-the-middle attack and an additional attack on the TLS connection between the two servers. I have not made any attempts to figure out which methods the affected hosts were using.

How to do better

I would argue that two of these issues could have been entirely prevented by better specifications.

As mentioned, it is a curious and unusual fact that JSON Web Keys use the same serialization format for public and private keys. It is a design decision that makes confusing public and private keys likely.

In an ecosystem where public and private keys are entirely different — like TLS or SSH — any attempt to configure a private key instead of a public key would immediately be noticed, as it would simply not work.

One mitigation that can be implemented within the existing specification is for OpenID Connect implementations to check whether a JSON Web Key Set contains any private keys. For all currently supported values, this can easily be done by checking the presence of a value "d". (Not sure if this is a coincidence, but for both RSA and ECDSA, we tend to call the private key "d".)

The current OpenID Connect Discovery specification says: "The JWK Set MUST NOT contain private or symmetric key values." Therefore, checking it would merely mean that an implementation is enforcing compliance with the existing specification. I would suggest adding a requirement for such a check to future versions of the standard.
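Here is a minimal sketch of such a compliance check. The text notes that looking for "d" suffices for the currently supported key types. As an assumption beyond that, this version also rejects the other private members defined in RFC 7518 (the RSA CRT parameters) and "k", the value of a symmetric key, since the specification forbids symmetric key values as well. The function name is illustrative.

```python
import json

# Private members from RFC 7518: "d" for EC and RSA, the RSA CRT parameters,
# and "k", the key value of a symmetric ("oct") key.
PRIVATE_MEMBERS = {"d", "p", "q", "dp", "dq", "qi", "oth", "k"}


def check_public_jwks(jwks_text: str) -> list:
    """Parse a JWK Set and raise if any key carries private or symmetric values."""
    jwks = json.loads(jwks_text)
    keys = jwks.get("keys") if isinstance(jwks, dict) else None
    if not isinstance(keys, list):
        raise ValueError('JWK Set has no "keys" array')
    for index, jwk in enumerate(keys):
        if not isinstance(jwk, dict):
            raise ValueError(f"key {index} is not a JSON object")
        found = PRIVATE_MEMBERS & set(jwk)
        if found:
            raise ValueError(f"key {index} contains private values: {sorted(found)}")
    return keys
```

Failing loudly is the point. A deployment that accidentally publishes its private key would then break immediately, as it would with TLS or SSH, instead of working silently.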

Similarly, I recommend such a check for any other protocol or software utilizing JSON Web Keys or JSON Web Key Sets in places where only public keys are expected.

It is probably too late to change the JSON Web Key standard itself for existing algorithms. However, in the future, such scenarios could easily be avoided by slightly different specifications. Currently, the algorithm of a JSON Web Key is configured in the "kty" field and can have values like "RSA" or "EC".

Let's take one of the future post-quantum signature algorithms as an example. If we specify ML-DSA-65 (I know, not a name easy to remember), instead of defining a "kty" value (or, as they seem to do it these days, the "alg" value) of "ML-DSA-65", we could assign two values: "ML-DSA-65-Private" and "ML-DSA-65-Public".
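To illustrate the proposal, here is a sketch of a loader that accepts only types explicitly marked as public. The "-Public" and "-Private" type values come from the proposal above and are hypothetical; they are not registered values. The "pub" member in the usage example is made up as well.

```python
from dataclasses import dataclass

# Hypothetical type suffixes taken from the proposal, not from any registry.
PUBLIC_SUFFIX = "-Public"
PRIVATE_SUFFIX = "-Private"


@dataclass(frozen=True)
class PublicJwk:
    algorithm: str  # e.g. "ML-DSA-65"
    members: dict   # the raw JWK members


def load_public_only(jwk: dict) -> PublicJwk:
    """Accept only keys whose type value explicitly marks them as public."""
    kty = str(jwk.get("kty", ""))
    if kty.endswith(PRIVATE_SUFFIX):
        # A private key fails loudly instead of passing as a public key.
        raise ValueError("private key supplied where a public key is expected")
    if not kty.endswith(PUBLIC_SUFFIX):
        raise ValueError(f"unsupported key type: {kty!r}")
    return PublicJwk(algorithm=kty[: -len(PUBLIC_SUFFIX)], members=dict(jwk))
```

With separate type values, confusing the two kinds of key becomes a type error rather than something every implementation has to remember to check.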

When it comes to short RSA keys, it is surprising that 512-bit keys are even possible in these protocols. OpenID Connect and everything it is based on is relatively new. The first draft version of the JSON Web Key standard dates back to 2012, thirteen years after the first successful attack on RSA-512. Warnings that RSA-1024 was potentially insecure date back to the early 2000s, and at the time all of this was specified, there were widely accepted recommendations for a minimum key size of 2048 bits.

All of this is to say that those protocols should never have supported such short RSA keys. Ideally, they should never have supported RSA with arbitrary key sizes, and should have just defined a few standard key sizes. (The RSA specification itself comes from a time when it was common to allow a lot of flexibility in cryptographic algorithms. While pretty much everyone uses RSA keys in standard sizes that are multiples of 1024 bits, it is even possible to use key sizes that are not aligned on bytes, like 2049 bits. The same could be said about the RSA exponent, which everyone sets to 65537 these days. Making these things configurable provides no advantage and adds needless complexity.)
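The policy suggested here, a few fixed key sizes and a fixed exponent, could be enforced on RSA JSON Web Keys roughly as follows. The allowed set {2048, 3072, 4096} is an illustrative assumption and does not come from any standard. The helper names are hypothetical.

```python
import base64

ALLOWED_RSA_BITS = {2048, 3072, 4096}  # illustrative policy, not from a standard
ALLOWED_EXPONENT = 65537


def b64url_to_int(value: str) -> int:
    """Decode an unpadded urlsafe Base64 JWK value to an integer."""
    raw = base64.urlsafe_b64decode(value + "=" * (-len(value) % 4))
    return int.from_bytes(raw, "big")


def check_rsa_jwk(jwk: dict) -> None:
    """Raise ValueError unless the key uses a standard size and e = 65537."""
    if jwk.get("kty") != "RSA":
        raise ValueError("not an RSA key")
    bits = b64url_to_int(jwk["n"]).bit_length()
    if bits not in ALLOWED_RSA_BITS:
        raise ValueError(f"non-standard RSA key size: {bits} bits")
    exponent = b64url_to_int(jwk["e"])
    if exponent != ALLOWED_EXPONENT:
        raise ValueError(f"unexpected RSA exponent: {exponent}")
```

The same check also rejects the 512-bit and 1024-bit keys found in the scan, because they are not in the allowed set. It likewise rejects odd sizes such as 2049 bits.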

Preventing the use of known example keys (I like to call them Public Private Keys) is more difficult. We could reduce these problems if people agreed to use a standardized set of test keys. RFC 9500 contains some test keys for this exact use case. My recommendation is to treat any key used as an example in an RFC document as a good test key. While that would not prevent people from using those test keys in production, it would make it easier to detect such cases.

You can use badkeys to check existing deployments for several known vulnerabilities and known-compromised keys. I added a parameter --jwk that allows directly scanning files with JSON Web Keys or JSON Web Key Sets in the latest version of badkeys.

Thanks to Daniel Fett for the idea to scan OpenID Connect keys and for feedback on this issue. Thanks to Sebastian Pipping for valuable feedback on this blog post.

Image source: SVG Repo/CC0

Posted by Hanno Böck in Cryptography, English, Security at 12:08


Defined tags for this entry: cryptography, itsecurity, openid, openidconnect, security, sso, websecurity

Wednesday, October 21. 2020

File Exfiltration via Libreoffice in BigBlueButton and JODConverter

BigBlueButton is a free, web-based video conferencing system that has recently become quite popular, largely due to Covid-19. Earlier this year I did a brief check on its security, which led to an article on Golem.de (German). I want to share the most significant findings here.

BigBlueButton has a feature that lets a presenter upload a presentation in a wide variety of file formats, which is then displayed in the web application. This looked like a huge attack surface. The conversion for many file formats is done with Libreoffice on the server. Looking for ways to exploit server-side Libreoffice rendering, I found a blog post by Brett Buerhaus that discussed a number of ways of exploiting such setups.

One of the methods described there is a feature in OpenDocument Text (ODT) files that allows embedding a file from an external URL in a text section. This can be a web URL (e.g. https) or a file URL pointing to a local file.

This directly worked in BigBlueButton. An ODT file that referenced a local file would display that local file. This allows displaying any file that the user running the BigBlueButton service could access on the server. A possible way to exploit this is to exfiltrate the configuration file that contains the API secret key, which then allows basically controlling the BigBlueButton instance. I have a video showing the exploit here. (I will publish the exploit later.)

I reported this to the developers of BigBlueButton in May. Unfortunately, my experience with their security process was not very good. At first I did not get an answer at all. After another mail, they told me they planned to sandbox the Libreoffice process, either via a chroot or a Docker container. However, that still has not happened. It is planned for the upcoming version 2.3, and independent of this bug it is a good idea, as Libreoffice simply creates a lot of attack surface.

Recently I looked a bit more into this. Including external files normally only happens after a manual user confirmation, and if one uses Libreoffice on the command line, it does not work at all by default. So in theory this exploit should not have worked, but it did.

It turned out the reason for this was another piece of software that BigBlueButton uses, called JODConverter. It provides a wrapper around the conversion functionality of Libreoffice. After contacting both the Libreoffice security team and the developer of JODConverter, we figured out that JODConverter enables including external URLs by default.

I forwarded this information to the BigBlueButton developers, and it finally led to a fix: they now change the default settings of JODConverter manually. The JODConverter developer is considering changing the default as well, but this has not happened yet. Other software or web pages using JODConverter for server-side file conversion may thus still be vulnerable.

The fix was included in version 2.2.27. Today I learned that the company RedTeam Pentesting has later independently found the same vulnerability. They also requested a CVE: It is now filed as CVE-2020-25820.

While this issue is fixed, the handling of security issues by BigBlueButton was not exactly stellar. It took around five months from my initial report to a fix. The release notes do not mention that this is an important security update (the change has the note “speed up the conversion”).

I found a bunch of other security issues in BigBlueButton and proposed some hardening changes. This took a lot of back and forth, but all significant issues are resolved now.

Another issue with the presentation upload was that it allowed cross site scripting, because it did not set a proper content type for downloads. This was independently discovered by another person and was fixed a while ago. (If you are interested in details about this class of vulnerabilities: I have given a talk about it at last year’s Security Fest.)
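This is a generic sketch of the headers commonly used to serve untrusted uploads so that browsers do not render them as HTML. It is not BigBlueButton's actual fix, which the text does not describe. The helper name is hypothetical. The filename handling is simplified: anything outside printable ASCII, quotes and backslashes are dropped, and non-ASCII names are not encoded.

```python
def download_headers(filename: str) -> dict:
    """Hypothetical helper: headers for serving an untrusted upload as a download."""
    # Keep printable ASCII only and drop quotes and backslashes so the name
    # cannot break out of the quoted header value.
    safe_name = "".join(c for c in filename if 32 <= ord(c) < 127 and c not in '"\\')
    return {
        # A generic type keeps browsers from treating the file as a web page.
        "Content-Type": "application/octet-stream",
        # Ask the browser to save the file instead of displaying it inline.
        "Content-Disposition": f'attachment; filename="{safe_name}"',
        # Disable MIME sniffing, which could otherwise bring back HTML rendering.
        "X-Content-Type-Options": "nosniff",
    }
```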

The session cookies of both BigBlueButton itself and its default web frontend Greenlight were not set with the Secure flag, so the cookies could be transmitted in clear text over the network. This has also been changed now.
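For illustration, here is how a session cookie with the Secure flag can be produced using Python's standard `http.cookies` module. The cookie name "session" is an assumption. HttpOnly is not mentioned in the text; it is added as common practice.

```python
from http.cookies import SimpleCookie


def session_cookie_header(session_id: str) -> str:
    """Build a Set-Cookie header for a session cookie (cookie name is assumed)."""
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["secure"] = True    # only sent over HTTPS connections
    cookie["session"]["httponly"] = True  # not readable from JavaScript
    cookie["session"]["path"] = "/"
    return cookie.output()
```

With the Secure attribute set, browsers send the cookie only over HTTPS, so it no longer travels in clear text.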

By default, the BigBlueButton installation script started several services that opened ports which do not need to be publicly accessible. This has also been changed. A locally running FreeSWITCH service was installed with a default password (“ClueCon”); the installation script now sets a random password instead.

What also looks quite problematic is the use of outdated software. BigBlueButton only works on Ubuntu 16.04, which is a long term support version, so it still receives updates. But it also uses several external repositories, including one that installs NodeJS version 8 and shows a warning that this repository no longer receives security updates. There is an open bug in the bug tracker.

If you are using BigBlueButton, I strongly recommend updating to at least version 2.2.27. This should fix all the issues I found. I wish the BigBlueButton developers would improve their security process, react to external reports in a more timely manner, and be more transparent when issues are fixed.

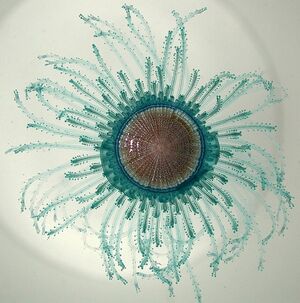

Image Source: Wikimedia Commons / NOAA / Public Domain

Update: Proof of concept published.

Posted by Hanno Böck in Code, English, Linux, Security at 14:14


Defined tags for this entry: bigbluebutton, cookie, fileexfiltration, itsecurity, jodconverter, libreoffice, security, websecurity, xss

Monday, April 6. 2020

Userdir URLs like https://example.org/~username/ are dangerous

I would like to point out a security problem with a classic variant of web space hosting. While this issue should be obvious to anyone knowing basic web security, I have never seen it being discussed publicly.

Some server operators allow every user on the system to have a personal web space: users place files in a directory (often ~/public_html), and the files appear on the host under a URL with a tilde and their username (e.g. https://example.org/~username/). The Apache web server provides such a function in the mod_userdir module. While this concept is rather old, it is still in use, particularly at universities and by Linux distributions.

From a web security perspective there is a very obvious problem with such setups that stems from the same origin policy, which is a core principle of Javascript security. While there are many subtleties about it, the key principle is that a piece of Javascript running on one web host is isolated from other web hosts.

To put this into a practical example: If you read your emails on a web interface on example.com then a script running on example.org should not be able to read your mails, change your password or mess in any other way with the application running on a different host. However if an attacker can place a script on example.com, which is called a Cross Site Scripting or XSS vulnerability, the attacker may be able to do all that.

The problem with userdir URLs should now become obvious: All userdir URLs on one server run on the same host and thus are in the same origin. It has XSS by design.
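The same origin policy compares scheme, host and port. A small sketch with the standard library shows why two userdir pages share an origin while two user subdomains do not. The helper names are illustrative, and only the http and https default ports are filled in.

```python
from urllib.parse import urlsplit

DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Scheme, host and port: the triple the same origin policy compares."""
    parts = urlsplit(url)
    port = parts.port or DEFAULT_PORTS.get(parts.scheme)
    return (parts.scheme, parts.hostname, port)


def same_origin(a: str, b: str) -> bool:
    return origin(a) == origin(b)
```

`same_origin("https://example.org/~alice/", "https://example.org/~bob/")` is true because the path plays no role. `same_origin("https://alice.example.org/", "https://bob.example.org/")` is false.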

What does that mean in practice? Let's assume Bob, who has the username "bob" on example.org, runs a blog at https://example.org/~bob/. Mallory, who has the username "mallory" on the same host, wants to attack Bob. If Bob is currently logged into his blog and Mallory manages to convince him to open her web page, hosted at https://example.org/~mallory/, she can place an attack script there that will attack Bob. The attack could be a variety of things, from adding another user to the blog to changing Bob's password or reading unpublished content.

This is only an issue if the users on example.org do not trust each other, so the operator of the host may decide this is no problem if there is only a small number of trusted users. However there is another issue: An XSS vulnerability on any of the userdir web pages on the same host may be used to attack any other web page on the same host.

So if, for example, Alice runs an outdated web application with a known XSS vulnerability on https://example.org/~alice/ and Bob runs his blog on https://example.org/~bob/, then Mallory can use the vulnerability in Alice's web application to attack Bob.

All of this is primarily an issue if people run non-trivial web applications that have accounts and logins. If the web pages are only used to host static content, the issues become much less problematic, though it is still possible, within some limits, for one user to display another user's web page in a manipulated way.

So what does that mean? You probably should not use userdir URLs for anything except hosting simple, static content, and probably not even for that if you can avoid it. Even in situations where all users are considered trusted there is an increased risk, as vulnerabilities can cross application boundaries. As for Apache's mod_userdir, I have contacted the Apache developers and they agreed to add a warning to the documentation.

If you want to provide something similar to your users you might want to give every user a subdomain, for example https://alice.example.org/, https://bob.example.org/ etc. There is however still a caveat with this: Unfortunately the same origin policy does not apply to all web technologies and particularly it does not apply to Cookies. However cross-hostname Cookie attacks are much less straightforward and there is often no practical attack scenario, thus using subdomains is still the more secure choice.

To avoid these Cookie issues for domains where user content is hosted regularly – a well-known example is github.io – there is the Public Suffix List for such domains. If you run a service with user subdomains you might want to consider adding your domain there, which can be done with a pull request.
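The cookie caveat and the role of the Public Suffix List can be sketched with a simplified version of cookie domain matching. This version does not handle IP addresses or the host-only exception that some browsers make when the Domain attribute equals the host. The `public_suffixes` parameter is a stand-in for the real list, and the helper names are hypothetical.

```python
def domain_matches(host: str, cookie_domain: str) -> bool:
    """Simplified RFC 6265 domain matching (IP addresses are not handled)."""
    host = host.lower()
    cookie_domain = cookie_domain.lower().lstrip(".")
    return host == cookie_domain or host.endswith("." + cookie_domain)


def accepts_domain_cookie(setting_host: str, cookie_domain: str, public_suffixes: set) -> bool:
    """Would a browser accept Domain=cookie_domain in a cookie from setting_host?"""
    cookie_domain = cookie_domain.lower().lstrip(".")
    if cookie_domain in public_suffixes:
        # Public suffixes are rejected, so one tenant cannot set a cookie
        # that every other subdomain under that suffix receives.
        return False
    return domain_matches(setting_host, cookie_domain)
```

Without an entry in the list, alice.example.org can set a cookie for example.org that bob.example.org also receives. Once the domain is listed as a public suffix, as github.io is, browsers refuse such cookies.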

