Saturday, October 8. 2011

Free rar unpacking code

One of the few pieces of non-free software I have always needed on my system is a rar unpacker. Although there are very good free high-compression alternatives such as 7-zip or tar.xz, many people still rely on the proprietary rar format, and it's in widespread use.

Years ago, someone came up with a GPLed rar unpacker, but sadly, that was never updated to support the rar version 3 format. Its development is stalled.

For that reason, some time ago I suggested that the Free Software Foundation add a free rar unpacking tool to their list of high priority projects, and they did. Happily, I recently read that they've removed it again: there's The Unarchiver now, based on an old Amiga library. It supports a whole bunch of formats, including rar v3. It's mainly a Mac OS X application, but it also provides a command line tool that can be compiled on Linux.

It needs Objective-C and the gnustep-base libraries, and it took me some time to get it to compile properly. For Gentoo users: I have already committed an ebuild, just run "emerge unar".

Update: Changed ebuild name to unar, as that's the name upstream uses for the command line version now.

Sunday, August 21. 2011

The sad state of the Linux Desktop

Some days ago it was reported that Microsoft no longer considers Linux on the desktop a threat to its business. Normally I wouldn't care much what Microsoft says, but in this case I think they're right, and therefore I wonder why this hasn't raised any discussion in the free software community (at least I haven't seen one; if it has and I missed it, please provide links in the comments). So I'd like to make a start.

A few years ago, I was pretty optimistic about a Linux-based desktop (and I think many shared my views). With advantages like a large number of high-quality applications available for free and a proven resilience against security threats, it seemed to be just a matter of time. I had the impression that development was often going in the right direction. To name just one example, freedesktop.org was just starting to try to unify the different Linux desktop environments and create standards so that KDE applications work better under GNOME and vice versa.

Today, my impression is that everything is in a pretty sad state. Don't get me wrong: Free software plays an important role on Desktops – and that's really good. Major web browsers are based on free software, applications like VLC are very successful. But the basis – the operating system – is usually a non-free one.

I recently was looking for netbooks. Some years ago, Asus came out with the Eee PC, a small and cheap laptop which ran Linux by default – one year later they provided a version with Windows as an alternative. Today, you won't find a single Netbook with Linux as the default OS. I read more often than not in recent years that public authorities trying to get along with Linux have failed.

I think I made my point; the Linux Desktop is in a sad state – I'd like to discuss why this is the case and how we (the free software community) can change it. I won't claim that I have the definite answer for the cause. I think it's a mix of things, I'd like to start with some points:

- Some people seem to see desktop environments more as a playground for creative ideas than as something other people want to use on a daily basis in a stable way. This is pretty much true for KDE 4 – the KDE team abandoned KDE 3.5, a well-working desktop environment, for something that isn't stable even today and suffers from a lot of regressions. They constantly invent new things like Akonadi and make them mandatory even for people who don't care about them – I seriously have no idea what it does, except throw strange error messages at me. I switched to GNOME, but what I've heard about GNOME 3 doesn't make me feel that it's much better there (I haven't tested it yet and I hope that, unlike the KDE team, GNOME learns from that and supports 2.x until version 3 works equally well). I think Ubuntu's playing with the Unity desktop goes in the same direction: we found something cool, we'll use it, we don't care that we'll piss off a bunch of our users. In contrast to that, I have the impression that what I mentioned above – the idea that we can integrate different desktop environments better through standards – isn't seen as being as important as it used to be. (I know this part may provoke flames; I hope this won't hide the other points I made.)

- The driver problem. I still find it to be one of the biggest obstacles, and it hasn't changed a bit for years. You just can't buy a piece of hardware and use it. It usually is “somehow possible”, but the default is that it requires a lot of extra geeky work that the average user will never manage. I think there's no easy solution to that, as it would require cooperation from hardware vendors (and with the diminishing importance of the Linux desktop this is likely getting harder). But a lot of the problems are also self-made. In 2006, Eric Raymond wrote an essay about how crappy CUPS is – I think it hasn't improved since then. How often have I read Ubuntu bug reports that go like this: “My printer worked in version [last version], but it doesn't work in [current version]” - “Me too.” - “Me too.” - “Me too” - no reply from any developer. One thing this point shares with the one above is the lack of care about regressions. Avoiding them should be a top priority, I think, but obviously many in the free software community don't seem to think so. (If you don't know the word: a regression is something that worked in an older version of a piece of software, but no longer works in the current version.)

- The market around us has changed. Back then, we were faced with a “Windows or nothing” situation that we wanted to change to a “Windows or Linux” situation. Today, we're faced with “Windows or MacOS X”. Sure, MacOS existed back then, but it only gained a relevant market share in recent years (and many current or former free software developers use MacOS X now). Competition makes products better, so Windows today is not the Windows of back then. Our competitors just got better.

- The desktop is losing share. This is a point often made, with mobile phones, tablets, gaming consoles and other devices taking over tasks that were done with desktop computers in the past. This is certainly true to some degree, but I think it's also often overestimated. Desktop computers still play an important role and I'm sure they will continue to do so for a long time. The discussion of how free software performs on other devices (and how free Android is) is an interesting one, too, but I won't go into it for now, as I want to talk about the desktop here.

Okay, I've started the discussion, I'd like others to join. Please remember: It's not my goal to flame or to blame anyone – my goal is to discuss how we can make the Linux desktop successful again.

Thursday, January 27. 2011

Energy efficiency of cable modems and routers

I have written a couple of times in the past that I'm worried about the insanely high energy consumption of DSL and WLAN hardware that's supposed to run all the time.

Recently, I switched my internet provider from O2 to Kabel Deutschland and got new hardware. I made some findings I found interesting:

It seems a great many power supplies today carry a label stating their energy efficiency. If you find something like "EFFICIENCY LEVEL: V", that's it. V is currently the best, I the worst. Higher values are reserved for the future (so this is much more intelligent than the stupid EU energy label, where A stands for "this was the best when we invented this label some years ago"). I haven't tried it yet, but from what I've read it seems worth replacing inefficient power supplies with better ones.

The cable modem I got eats 4 watts. Considering that it's the crucial part that cannot be switched off as long as I want to be able to receive phone calls, I consider this rather high. The power supply had efficiency level IV. If anyone knows of any energy-saving cable modems, I'm open to suggestions.

I was quite impressed by the router I got for free. It's a D-Link 615 and it uses 2.4 watts with wireless and 1.4 watts without. That's MUCH better than anything I've seen before. So at least we see some progress here. (And for people interested in free software: it seems at least DD-WRT claims to support it and the other *WRT projects are working on it.)
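To put these numbers into perspective, here is a small sketch that converts a constant power draw into energy per year. It assumes the devices really run around the clock at the measured wattage; the function name is only chosen for illustration.

```python
HOURS_PER_YEAR = 24 * 365


def annual_kwh(watts: float) -> float:
    """Energy used per year by a device drawing `watts` continuously."""
    return watts * HOURS_PER_YEAR / 1000.0


# The wattages are the measurements mentioned in the text above.
devices = [
    ("cable modem", 4.0),
    ("router, wireless on", 2.4),
    ("router, wireless off", 1.4),
]
for name, watts in devices:
    print(f"{name}: {annual_kwh(watts):.1f} kWh per year")
```

Multiplying the result by your local price per kWh gives a rough idea of the yearly cost of each device.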

Still, I fail to understand why there can't be a simple law stating that every electronic device must state its energy consumption on the package.

Posted by Hanno Böck in Ecology, English, Linux at 21:22

Defined tags for this entry: cablemodem, climate, d-link, ecology, efficiency, eletricity, environment, kabeldeutschland, o2, router, strom, stromverbrauch, umwelt

Wednesday, January 5. 2011

How to create a PGP/GPG-key free of SHA-1

If you've read my last blog entry, you saw that I was struggling a bit with the fact that I was unable to create a PGP key without SHA-1. This is a bit tricky, as there are various places where hash functions are used within a pgp key:

1. The key self-signatures and signatures on other keys. Every key has user IDs that are signed with the master key itself. This is to proofe that the names and mail adresses in the key belong to the keyholder itself and can't be replaced my a malicous attacker.

2. The signatures on messages, for example E-Mails.

3. The preference in side the key - this indicates to other people what sigature algorithms you would prefer if they send messages to you.

4. The fingerprint.

1 is controlled by the setting cert-digest-algo in the file gpg.conf (for both self-signatures and signatures to other keys). 2 is controlled by the setting personal-digest-preferences. So you should add these two lines to your gpg.conf, preferrably before you create your own key (if you intend to create one, don't bother if you want to stick with your current one):

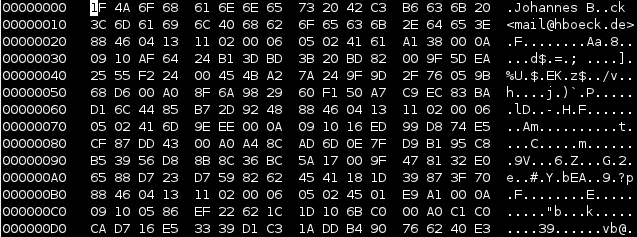

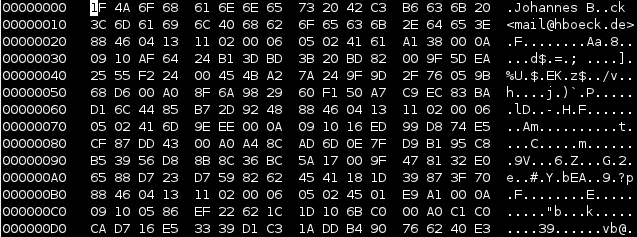

It is also not really trivial to check the used algorithms. For message signatures, if you verify them with gpg -v --verify [filename]. For key signatures, I found no option to do that - but a workaround: Export the key whose signatures you'd like to check gpg --export --armor [key ID] > filename.asc. Then parse the exported file with gpg -vv filename.asc. It'll show you blocks like this:

The big question remains: Why is this so complicated and why isn't gnupg just defaulting to SHA256? I don't know the answer.

(Please also have a look at this blog entry from Debian about the topic)

1. The key self-signatures and signatures on other keys. Every key has user IDs that are signed with the master key itself. This is to prove that the names and mail addresses in the key belong to the keyholder and can't be replaced by a malicious attacker.

2. The signatures on messages, for example E-Mails.

3. The preferences inside the key - these indicate to other people which signature algorithms you would prefer if they send messages to you.

4. The fingerprint.

1 is controlled by the setting cert-digest-algo in the file gpg.conf (for both self-signatures and signatures on other keys). 2 is controlled by the setting personal-digest-preferences. So you should add these two lines to your gpg.conf, preferably before you create your own key (if you intend to create one; don't bother if you want to stick with your current one):

personal-digest-preferences SHA256
cert-digest-algo SHA256

3 defaults to SHA256 if you generate your key with a recent GnuPG version. You can check it with gpg --edit-key [your key ID] and then showpref. For 4, I think it can't be changed at all (I don't think it poses a security threat with regard to collision attacks, but it should still be changed at some point).

It is also not really trivial to check which algorithms were used. For message signatures, they are shown if you verify them with gpg -v --verify [filename]. For key signatures, I found no option to do that, but there is a workaround: export the key whose signatures you'd like to check with gpg --export --armor [key ID] > filename.asc. Then parse the exported file with gpg -vv filename.asc. It'll show you blocks like this:

:signature packet: algo 1, keyid A5880072BBB51E42
version 4, created 1294258192, md5len 0, sigclass 0x13
digest algo 8, begin of digest 3e c3

The digest algo 8 is what you're looking for. 1 means MD5, 2 means SHA1, 8 means SHA256. Other values can be looked up in include/cipher.h in the source code. No, that's not user friendly. But I found no easier way.
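Looking up the numbers by hand gets tedious, so here is a minimal sketch that pulls the "digest algo" values out of such a listing and translates them. The numbers are the hash algorithm IDs from RFC 4880; the function name and the sample text are only for illustration, and the script is not part of GnuPG.

```python
import re

# OpenPGP hash algorithm IDs as listed in RFC 4880, section 9.4.
DIGEST_NAMES = {
    1: "MD5",
    2: "SHA1",
    3: "RIPEMD160",
    8: "SHA256",
    9: "SHA384",
    10: "SHA512",
    11: "SHA224",
}

DIGEST_LINE = re.compile(r"digest algo (\d+)")


def digest_algos(listing):
    """Return the digest name for every 'digest algo N' entry in the text."""
    names = []
    for match in DIGEST_LINE.finditer(listing):
        number = int(match.group(1))
        names.append(DIGEST_NAMES.get(number, f"unknown ({number})"))
    return names


SAMPLE = """\
:signature packet: algo 1, keyid A5880072BBB51E42
version 4, created 1294258192, md5len 0, sigclass 0x13
digest algo 8, begin of digest 3e c3
"""

print(digest_algos(SAMPLE))
```

To use it on a real key, the saved output of gpg -vv filename.asc can be passed to digest_algos() instead of SAMPLE. Any SHA1 or MD5 entry in the result points to a signature that still uses an old hash.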

The big question remains: Why is this so complicated, and why doesn't GnuPG just default to SHA256? I don't know the answer.

(Please also have a look at this blog entry from Debian about the topic)

Sunday, December 26. 2010

Goodbye 3DBD3B20, welcome BBB51E42

Having used my PGP key 3DBD3B20 for almost eight years, it's finally time for a new one: 4F9F43A9. The old primary key was a 1024 bit DSA key, which had two drawbacks:

1. 1024 bit keys for DLP or factoring based algorithms are considered insecure.

2. It's impossible to set the used hash algorithm to anything beyond SHA-1.

My new key has a key size of 4096 bits (2048 bits has been the GnuPG default since 2.0.13 and should be quite sufficient, but I wanted some extra security) and the default hash algorithm preference is SHA-256. I had to make a couple of decisions about my name in the key:

1. I'm usually called Hanno, but my real/official name is Johannes.

2. My surname has a special character (ö) in it, which can be represented as oe.

In my previous keys, I mixed these. I decided against that for the new key, because both my unofficial first name Hanno and my umlaut-converted surname Boeck are part of my mail address, so people should still be able to find my key if they search for them.

Another decision was the time I wanted my key to be valid. I've decided to give it an expiration date, but a fairly long one: 10 years from now.

I've signed my new key with my old key, so if you've signed my old one, you should be able to verify the new one. I leave it up to you whether you sign my new key right away or want to repeat the signing procedure. I'll start from scratch and won't automatically sign keys with the new key just because I signed them with the old one. If you want to key-sign with me, you may find me at the 27C3 within the next days.

My old key will be valid for a while, at some time in the future I'll probably revoke it.

Update: I just found out that having a key without SHA-1 is trickier than I thought. The self-signatures were still SHA-1. I could re-do the self-signatures and revoke the old ones, but that'd clutter the key with a lot of useless cruft and as the new key wasn't around long and didn't get any signatures I couldn't get easily again, I decided to start over again: The new key is BBB51E42 and the other one will be revoked.

I'll write another blog entry to document how you can create your own SHA-256 only key.

Posted by Hanno Böck in Cryptography, English, Gentoo, Linux, Security at 18:16

Defined tags for this entry: cryptography, datenschutz, encryption, gnupg, gpg, key, pgp, privacy, schlüssel, security, sha1, sha2, verschlüsselung

Tuesday, December 14. 2010

How I revoked my old PGP key

Prologue of this story: A very long time ago (2004 to be exact), I decided to create a new PGP / GnuPG key with 4096 bits (due to this talk). However, shortly after that, I had a hardware failure of my hard disc. The home was a dm-crypt partition with xfs. I was able to restore most data, but it seemed the key was lost. I continued to use my old key I had in a backup and the 4096 key was bitrotting on keyservers. And that always annoyed me. In the meantime, I found all private keys of old DOS (2.6.3i) and Windows (5.0) PGP keys I had created in the past and revoked them, but this 4096 key was still there.

I still have the hard disc in question and a couple of dumps I created during the data rescue back then. Today, I decided that I'll have to try restoring that key again. My strategy was not trying to do anything on the filesystem, but only operate within the image. Very likely the data must be there somewhere.

I found a place where I was rather sure that this must be the key. But exporting that piece with dd didn't succeed. Looking a bit more closely, it seemed that the beginning was intact, but at some point there were zeros. I don't know if this is due to the corruption or to the fact that the filesystem didn't store the data sequentially at that place, but it didn't matter. I had a look at the file format of PGP keys in RFC 4880. Public keys and private keys are stored in a very similar way. Only the beginning (the real "key" part) differs; the userid / signatures / rest part is identical. So I was able to extract the private key block (starting with 0x95) and combine it with the rest (I just used the place where the first cleartext userid started with my name "Johannes"). What should I say? It worked like a charm. I was able to import my old private key and revoke it. Key 147C5A9F is no longer valid. Great!
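The search itself can be sketched in a few lines. This is not the procedure I used back then (I did it by hand), just an illustration of the idea under the assumption that the packet headers are intact: in the old packet format of RFC 4880, a secret key packet with a two-byte length starts with 0x95, and a user ID packet with a one-byte length starts with 0xB4. The function name and the window size are made up for this example.

```python
SECRET_KEY_HEADER = 0x95  # old-format packet, tag 5 (secret key), two-byte length
USER_ID_HEADER = 0xB4     # old-format packet, tag 13 (user ID), one-byte length


def find_secret_key_packets(image, userid, window=8192):
    """Return (start, end) offsets of secret key packets that are directly
    followed by a user ID packet whose text starts with `userid`."""
    hits = []
    pos = image.find(userid)
    while pos != -1:
        uid_packet = pos - 2  # the user ID text follows a two-byte header
        if uid_packet >= 0 and image[uid_packet] == USER_ID_HEADER:
            # Look back for a 0x95 byte whose length field ends the packet
            # exactly where the user ID packet begins.
            for start in range(max(0, uid_packet - window), uid_packet - 2):
                if image[start] != SECRET_KEY_HEADER:
                    continue
                length = int.from_bytes(image[start + 1:start + 3], "big")
                if start + 3 + length == uid_packet:
                    hits.append((start, uid_packet))
        pos = image.find(userid, pos + 1)
    return hits
```

Called with the raw bytes of a disk image and b"Johannes", it returns candidate byte ranges that could then be cut out with dd. If the key material is damaged the way mine was, the length check may fail and manual inspection is still needed.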

P. S.: Next step will be finally creating a new 4096 bit RSA key and abandoning my still-in-use 1024 bit DSA key for good.

Posted by Hanno Böck in Code, Computer culture, Cryptography, English, Linux, Security at 15:47

Friday, December 10. 2010

Notes from talk about GSM and free software

Yesterday I was at a talk at the FSFE Berlin about free software and GSM. It was an interesting talk and discussion.

Probably most of you know that GSM is the protocol that keeps the large majority of mobile phones running. In the past, only a handful of companies worked with the protocol and according to the talk, even most mobile phone companies don't know much of the internal details, as they usually buy ready-made chips.

Three free software projects work on GSM: OpenBTS and OpenBSC on the server side and OsmocomBB on the client side. What I didn't know yet and think is really remarkable: the island state of Niue installed a GSM network based on OpenBTS. The island found no commercial operator, so they installed a free software based and community supported GSM network.

Afterwards, we had a longer discussion about the security and privacy implications of GSM. To sum it up, GSM is horribly broken on the security side. Cells do not authenticate themselves to phones, so a phone cannot tell a genuine cell from a fake one. Also, its encryption was broken in the early 90s. There has not been much progress in improving the protocol, although this has been known for a very long time. It's also well known that so-called IMSI catchers are sold illegally for a few thousand dollars. The only reason GSM is still working at all is basically that such attacks still cost a few thousand dollars. But cheaper hardware and improvements in free GSM software make it more likely that these attacks will have a greater impact in the future (this is only a brief summary and I'm not really into that topic, see Wikipedia for some starting points for more info).

There was a bit of discussion about how realistic it is that some "normal user" is threatened by this, given the price of a few thousand dollars for the equipment. I didn't bring this up in the discussion any more, but I remember having seen a talk by a guy from Intel saying that the tendency is to design generic chips for various protocols that can do GSM, Bluetooth or WLAN purely under software control. Thinking about that, this raises the question of protocol security even more, as it might already be possible to use mainstream computer hardware to do mobile phone wiretapping by just replacing the firmware of a wireless LAN card. It almost certainly will be possible within some years.

Another topic that was raised was frequency regulation. Even with free software you wouldn't be able to operate your own GSM network, because you couldn't afford to buy a frequency (although it seems to be possible to get a testing license for a limited space, e. g. for technical workshops - the 27C3 will have a GSM test network). I mentioned that there's a chapter on this in the book "Code" by Lawrence Lessig (available in an updated version here, chapter is "The Regulators of Speech: Distribution" and starts on page 270 in the PDF). Lessig's argument is that frequency regulation was necessary in the early days of radio technology, but today it would easily be possible to design protocols that don't need regulation - they could be self-regulating, e. g. with a prefix in front of every data packet (the way wireless LAN works). But the problem is that today, frequency usage generates a large income for the state - that's completely against the original idea, as its primary purpose was to keep the technology usable.

Posted by Hanno Böck in Computer culture, Cryptography, English, Linux, Security at 22:35

Defined tags for this entry: 27c3, berlin, cellular, freesoftware, frequency, fsfe, gsm, lessig, mobilephones, openbsc, openbts, osmocombb, privacy, security, wiretapping

Friday, October 22. 2010

overheatd - is your CPU too hot?

Update: I got some nice hints in the comments. cpufreqd also includes this functionality and is probably the much more advanced solution. Also, I got a hint to linux-PHC, which allows undervolting a CPU and thus also saves energy.

Recently, my system quite often shut down all of a sudden. The reason was that when my processor got hotter than 100 °C, my kernel decided it was better to do so. I don't really know what caused the overheating, but anyway, I needed a solution.

So I hacked together overheatd. A very effective way of cooling down a CPU is reducing its speed / frequency. Pretty much any modern CPU can do that, and on Linux this can be controlled via the cpufreq interface. I wrote a little daemon that simply checks every 5 seconds (adjustable) whether the temperature is over a certain threshold (90 °C by default, also adjustable), and if so, it sets cpufreq to the powersave governor (which means the lowest speed possible). When the temperature is at or below 90 °C again, it's set back to the (default) ondemand governor. It also works for more than one CPU (I have a dual core), though it's very likely that it has bugs as soon as one goes beyond 10 CPUs - but I have no way to test this. Feel free to report bugs.

This could be made more sophisticated (not going to the lowest frequency but step by step to lower frequencies), but it does its job quite well for now. It might be a good idea to support something like this directly in the kernel (I wonder why that isn't the case already - it's pretty obvious), but that would probably involve a skilled kernel-hacker.
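For illustration, the logic described above can be sketched like this. This is not the actual overheatd source, only a minimal sketch: the sysfs paths are assumptions (the thermal zone path in particular varies between machines and kernel versions), and all function names are made up for this example.

```python
import glob
import time

THRESHOLD_C = 90.0  # switch to powersave above this temperature
INTERVAL_S = 5      # seconds between checks

# Assumed sysfs locations; adjust them for your machine.
TEMP_FILE = "/sys/class/thermal/thermal_zone0/temp"
GOVERNOR_GLOB = "/sys/devices/system/cpu/cpu[0-9]*/cpufreq/scaling_governor"


def choose_governor(temp_c, threshold_c=THRESHOLD_C):
    """powersave above the threshold, ondemand at or below it."""
    return "powersave" if temp_c > threshold_c else "ondemand"


def read_temperature_c(path=TEMP_FILE):
    """The thermal zone file reports thousandths of a degree Celsius."""
    with open(path) as handle:
        return int(handle.read().strip()) / 1000.0


def set_governor(name):
    """Write the governor to every CPU that has a cpufreq directory."""
    for path in glob.glob(GOVERNOR_GLOB):
        with open(path, "w") as handle:
            handle.write(name)


def main():
    current = None
    while True:
        wanted = choose_governor(read_temperature_c())
        if wanted != current:  # only write when the state changes
            set_governor(wanted)
            current = wanted
        time.sleep(INTERVAL_S)
```

Calling main() as root starts the loop. Using a glob over all CPU directories avoids having to count the CPUs by hand.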

Friday, May 14. 2010

Secure RSA padding: RSA-PSS

I got selected for this year's Google Summer of Code with a project for the implementation of RSA-PSS in the nss library. RSA-PSS will also be the topic of my diploma thesis, so I thought I'd write some lines about it.

RSA is, as you probably know, the most widely used public key cryptography algorithm. It can be used for signing and encryption. RSA-PSS is about signing (something similar, RSA-OAEP, exists for encryption, but that's not my main topic).

The formula for the RSA algorithm is S = M^k mod N (S is the signature, M the input, k the private key and N the product of two big prime numbers). One important thing is that M is not the message itself, but some encoding of the message. A simple way of doing this encoding is using a hash function, for example SHA256. This is basically how old standards (like PKCS #1 1.5) worked. While no attacks exist against this scheme, it's believed that it can be improved. One reason is that while the RSA function accepts inputs up to the size of N (which has the same length as the key size, for example 2048 or 4096 bits), hash functions usually produce much smaller outputs (something like 160 or 256 bits).
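The formula can be tried out with a toy Python sketch. The parameters below are textbook-sized numbers chosen purely for illustration and are far too small for real use, and the function names are made up. Because this toy N is smaller than a SHA256 digest (the opposite of the real situation described above), the sketch reduces the digest mod N, which no real standard does.

```python
import hashlib

# Toy parameters, assumptions for illustration only.
P, Q = 61, 53
N = P * Q   # the modulus, product of two primes
E = 17      # public exponent, used for verification
K = 2753    # private key k, chosen so that (E * K) % ((P - 1) * (Q - 1)) == 1


def encode(message: bytes) -> int:
    """Turn the message into the RSA input M by hashing it."""
    digest = hashlib.sha256(message).digest()
    # Reduction mod N is only needed because the toy modulus is tiny.
    return int.from_bytes(digest, "big") % N


def sign(message: bytes) -> int:
    """S = M^k mod N"""
    return pow(encode(message), K, N)


def verify(message: bytes, signature: int) -> bool:
    """Undo the private-key operation with the public exponent and compare."""
    return pow(signature, E, N) == encode(message)
```

Calling `verify(b"hello", sign(b"hello"))` returns `True`. With a modulus this small, different messages can easily share a signature, which is one more reason not to read anything into the toy numbers.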

An improved scheme is the Probabilistic Signature Scheme (PSS) (Bellare/Rogaway 1996/1998). PSS is "provably secure". This does not mean that the resulting algorithm is unconditionally secure (proving that is impossible with today's math), but that the result is as secure as the underlying RSA algorithm and the hash function used (in the so-called "random oracle model"). PSS is standardized in PKCS #1 2.1 (republished as RFC 3447). So PSS in general is a good idea as a security measure, but as there is no real pressure to implement it, it's still not used very much. As an example, the new DNSSEC resource records published just last year still use the old PKCS #1 1.5 standard.

For SSL/TLS, standards to use PSS exist (RFC 4055, RFC 5756), but implementations are widely lacking. Just recently, OpenSSL got support for PSS verification. The only implementation of signature creation I'm aware of is the Java library Bouncy Castle (yes, this forced me to write some lines of Java code).

The nss library is used by the Mozilla products (Firefox, Thunderbird), so an implementation there is crucial for a more widespread use of PSS.

Posted by Hanno Böck in Code, Cryptography, English, Linux, Security at 23:22 | Comments (0) | Trackbacks (0)

Sunday, February 7. 2010

Free and open source developers meeting (FOSDEM)

After reading a lot about interesting stuff happening at this year's FOSDEM, I decided at very short notice to go there. The FOSDEM in Brussels is probably one of the biggest (if not the biggest) meetings of free software developers. Unlike similar events (like several Linuxtag events in Germany), its focus is mainly on developers, so the talks are more high level.

My impressions from FOSDEM so far: There are many more people than when I was here a few years ago, so it seems the number of free software developers is increasing (which is great). The focus of interest seems to be on extending free software to other areas: embedded devices, the BIOS, open hardware (lots of interest in 3D printers).

Yesterday morning, there was a quite interesting talk by Richard Clayton about phishing, scams etc., with lots of statistics and info about the supposed business models behind them. Afterwards I had a nice chat with some developers from OpenInkpot. There was so much interest in the Coreboot talk that I (and many others) didn't get in because the room was full.

Later, Gentoo developer Petteri Räty gave a talk about "How to be a good upstream", and I'd suggest every free software developer have a look at it (I'll put the link here later).

I've just attended a rather interesting talk about 3D-printers like RepRap and MakerBot.

Posted by Hanno Böck in Code, Computer culture, Copyright, English, Gentoo, Life, Linux at 10:34 | Comments (0) | Trackbacks (0)

Defined tags for this entry: fosdem fosdem2010 freesoftware linux reprap makerb

Monday, February 1. 2010

SSL-Certificates with SHA256 signature

At least since 2005 it has been well known that the cryptographic hash function SHA1 is seriously flawed and that it's only a matter of time until it is broken. However, it's still widely used, and it can be expected that it'll be used long enough to allow real world attacks (as happened with MD5 before). The NIST (the US National Institute of Standards and Technology) recommends not using SHA1 after 2010; the German BSI (Bundesamt für Sicherheit in der Informationstechnik) says it should have been phased out by the end of 2009.

Probably the most widely used encryption protocol is SSL. It is a protocol that can operate on top of many other internet protocols and is, for example, widely used for online banking.

As SSL is a pretty complex protocol, it needs hash functions in various places. Here I'm looking at just one of them: the signatures created by the certificate authorities. Every SSL certificate is signed by a CA; even certificates you generate yourself are self-signed, meaning that the certificate acts as its own CA. As far as I know, despite the recommendations mentioned above, no big CA will give you certificates signed with anything better than SHA1. You can check this with:

openssl x509 -text -in [your ssl certificate]

Look for "Signature Algorithm". It'll most likely say sha1WithRSAEncryption. If your CA is good, it'll show sha256WithRSAEncryption. If your CA is really bad, it may show md5WithRSAEncryption.
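If you want to check several certificates, the lookup can be scripted. The following Python sketch only parses text that has already been produced by the openssl command above; the function names and the wording of the verdicts are my own illustration, following the three cases just mentioned.

```python
import re

# Verdicts for the three cases named in the text, keyed by hash-name prefix.
RATINGS = {
    "md5": "really bad",
    "sha1": "most common, but flawed",
    "sha256": "good",
}


def signature_algorithms(openssl_text):
    """Return every value that follows 'Signature Algorithm:' in the text."""
    return re.findall(r"Signature Algorithm:\s*(\S+)", openssl_text)


def rate(algorithm):
    """Map a name like sha1WithRSAEncryption to one of the verdicts above."""
    name = algorithm.lower()
    for prefix, verdict in RATINGS.items():
        if name.startswith(prefix):
            return verdict
    return "unknown"
```

For example, `rate("sha1WithRSAEncryption")` returns `"most common, but flawed"`. To feed it real data, capture the output of `openssl x509 -text -in [your ssl certificate]`, for instance with `subprocess.run(..., capture_output=True, text=True)`, and pass the resulting text to `signature_algorithms`.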

When asking for SHA256 support, you often get the answer that the software still has problems and isn't ready yet. When I asked for more information, I never got answers. So I tried it myself. On an up-to-date Apache webserver with mod_ssl, it was no problem to install a SHA256 signed certificate based on a SHA256 signed test CA. All browsers I've tried (Firefox 3.6, Konqueror 4.3.5, Opera 10.10, IE8 and even IE6) had no problem with it. You can check it out at [removed]. You will get a certificate warning (obviously, as it's signed by my own test CA), but you'll be able to view the page. If you want to test it without warnings, you can also import the CA certificate.

I'd be interested if this causes any problems (on server or on client side), so please leave a comment if you are aware of any incompatibilities.

Update: By request in the comments, I've also created a SHA512 testcase.

Update 2: StartSSL wrote to me that they tried providing SHA256 certificates about a year ago and had too many problems. They weren't very specific, but they mentioned that earlier Windows XP and Windows 2003 Server versions may have problems.

Posted by Hanno Böck in Cryptography, English, Gentoo, Linux, Security at 23:23 | Comments (15) | Trackback (1)

Thursday, January 14. 2010

BIOS update by extracting HD image from ISO

Today I faced an interesting Linux problem that made me learn a couple of things I'd like to share. At first, we found an issue on a Thinkpad X301 notebook that was fixed in a newer BIOS version. So we wanted to do a BIOS update. Lenovo provides BIOS updates either for Windows or as bootable ISO CD-images. But the device had no CD-drive and only Linux installed. First we tried unetbootin, a tool to create bootable USB sticks out of ISO-Images. That didn't work.

So I had a deeper look at the ISO. What puzzled me was that when mounting it as a loopback device, there were no files on it. After some research I learned that there are different ways to create bootable CDs and one of them is the El Torito extension. It places an image of a hard disk on the CD. When booting, the image is loaded into memory and an OS can be executed (this probably only works for quite simple OSes like DOS; the Lenovo BIOS upgrade disk is based on PC-DOS). There's a small Perl script called geteltorito that is able to extract such images from ISO files.
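To show roughly where such an image sits inside the ISO, here is a Python sketch that reads the El Torito boot catalog. It is not geteltorito itself, and the function name is hypothetical. It relies on the layout from the El Torito specification as I understand it: a boot record volume descriptor in sector 17 and a 32-byte default entry following the validation entry in the boot catalog. It only reports where the image starts; finding its length is left out.

```python
import struct

SECTOR = 2048  # size of one CD sector in bytes

MEDIA_TYPES = {
    0: "no emulation",
    1: "1.2M floppy",
    2: "1.44M floppy",
    3: "2.88M floppy",
    4: "hard disk",
}


def el_torito_entry(iso: bytes) -> dict:
    """Describe the default boot entry of an El Torito ISO given as bytes."""
    record = iso[17 * SECTOR:18 * SECTOR]
    if len(record) < SECTOR or record[0] != 0 or record[1:6] != b"CD001":
        raise ValueError("no boot record volume descriptor in sector 17")
    if not record[7:39].startswith(b"EL TORITO SPECIFICATION"):
        raise ValueError("boot record is not El Torito")

    # Little-endian sector number of the boot catalog at offset 0x47.
    (catalog_sector,) = struct.unpack_from("<I", record, 0x47)

    # Skip the 32-byte validation entry; the default entry follows it.
    start = catalog_sector * SECTOR + 32
    entry = iso[start:start + 32]
    if len(entry) < 32:
        raise ValueError("boot catalog is truncated")

    # Fields: boot indicator, media type, load segment, system type,
    # unused byte, sector count, first sector of the boot image.
    indicator, media, _seg, _systype, _unused, _count, image_sector = \
        struct.unpack("<BBHBBHI", entry[:12])

    return {
        "bootable": indicator == 0x88,
        "media_type": MEDIA_TYPES.get(media & 0x0F, "unknown"),
        "image_offset": image_sector * SECTOR,
    }
```

An ISO suited to the method described here should report the media type `"hard disk"`; the image data then begins at `image_offset` bytes into the file.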

It's possible to boot such harddisk images with grub and memdisk (part of syslinux). Install syslinux, place the file memdisk into /boot (found in /usr/lib/syslinux/ or /usr/share/syslinux/) and add something like this to your grub config:

title HD Image
root (hd0,0)
kernel /boot/memdisk
initrd /boot/image.img

Or for grub2:

menuentry "HD Image" {
    set root=(hd0,2)
    linux16 /boot/memdisk
    initrd16 /boot/hdimage.img
}

Now you can select the new entry in your boot menu and it should boot the BIOS upgrade utility.

(Note that this does not work for all Lenovo BIOS updates, only for those using an El Torito hard disk image. To check, you can mount your ISO with mount -o loop [path_to_iso] [mount_path]. If there are any files on it, this method is not for you.)

Friday, January 8. 2010

Downloading videos from the ARD Mediathek

Today I faced the problem of wanting to download a video from the ARD Mediathek. Most of them are now only available as Flash and without a download link.

The videos are transmitted via RTMP, a video streaming protocol specific to Flash. I found instructions in the gulli forum. Based on them, I wrote a small script called ardget that makes this convenient. Simply call it with

ardget "[Mediathek URL]"

The quotes are necessary because the URLs contain & characters, which the shell would otherwise misinterpret. Since some of the videos are linked via JavaScript URLs, I filter for that as well, so you can pass the complete link beginning with javascript:. It requires either flvstreamer or rtmpdump and should otherwise work in any common Unix shell.

Friday, September 18. 2009

Curing the O2 DSL router of its quirks

I recently got an internet connection from O2. It comes with a router (DSL Router Classic; the thing apparently comes from Zyxel, but I haven't found an exact model designation so far) that also handles VoIP.

I ran into two problems with it that other (potentially heavier) internet users might hit as well, so I'm sharing here how to teach the thing to provide normal internet access.

First I noticed that when I tried to fetch many email accounts at the same time, some of them failed with connection errors. Some clicking through the web interface led me to the item "Firewall" ("firewall" being used as a buzzword for everything and nothing these days). Behind it are a few connection limits that are set rather low. What probably caused my problems is the limit of 10 incomplete TCP connections.

I thought for a while about whether I find the functionality of such a "firewall" useful for any reason and concluded that, as long as I trust my own machine, it only causes me problems, so I simply switched it off.

Another problem wasn't as easy to solve: SSH connections on which I hadn't done anything for a while, and on which no output arrived either, froze. My guess: TCP connections are cut when no data flows over them for a longer time. But there was no option anywhere to deal with that. After some searching on the net I found out that the device has a telnet interface (user name: admin, password identical to the web interface) through which you can log in and set some options the web interface doesn't offer (see solariz.de: Zyxel Router P-334 optimieren).

ip nat timeout tcp 3600

was the magic word that raises the TCP timeout (one hour seemed reasonable to me; the default was 300, i.e. 5 minutes).

Thursday, July 9. 2009

LPIC-1

After passing the second exam at the Linuxtag, I'm now officially allowed to call myself LPIC-1.
