Wednesday, October 21. 2020

File Exfiltration via Libreoffice in BigBlueButton and JODConverter

BigBlueButton is a free web-based video conferencing software that lately got quite popular, largely due to Covid-19. Earlier this year I did a brief check on its security which led to an article on Golem.de (German). I want to share the most significant findings here.

BigBlueButton is a free web-based video conferencing software that lately got quite popular, largely due to Covid-19. Earlier this year I did a brief check on its security which led to an article on Golem.de (German). I want to share the most significant findings here.BigBlueButton has a feature that lets a presenter upload a presentation in a wide variety of file formats that gets then displayed in the web application. This looked like a huge attack surface. The conversion for many file formats is done with Libreoffice on the server. Looking for ways to exploit server-side Libreoffice rendering I found a blog post by Bret Buerhaus that discussed a number of ways of exploiting such setups.

One of the methods described there is a feature in Opendocument Text (ODT) files that allows embedding a file from an external URL in a text section. This can be a web URL like https or a file url and include a local file.

This directly worked in BigBlueButton. An ODT file that referenced a local file would display that local file. This allows displaying any file that the user running the BigBlueButton service could access on the server. A possible way to exploit this is to exfiltrate the configuration file that contains the API secret key, which then allows basically controlling the BigBlueButton instance. I have a video showing the exploit here. (I will publish the exploit later.)

I reported this to the developers of BigBlueButton in May. Unfortunately my experience with their security process was not very good. At first I did not get an answer at all. After another mail they told me they plan to sandbox the Libreoffice process either via a chroot or a docker container. However that still has not happened yet. It is planned for the upcoming version 2.3 and independent of this bug this is a good idea, as Libreoffice just creates a lot of attack surface.

Recently I looked a bit more into this. The functionality to include external files only happens after a manual user confirmation and if one uses Libreoffice on the command line it does not work at all by default. So in theory this exploit should not have worked, but it did.

It turned out the reason for this was another piece of software that BigBlueButton uses called JODConverter. It provides a wrapper around the conversion functionality of Libreoffice. After contacting both the Libreoffice security team and the developer of JODConverter we figured out that it enables including external URLs by default.

I forwarded this information to the BigBlueButton developers and it finally let to a fix, they now change the default settings of JODConverter manually. The JODConverter developer considers changing the default as well, but this has not happened yet. Other software or web pages using JODConverter for serverside file conversion may thus still be vulnerable.

The fix was included in version 2.2.27. Today I learned that the company RedTeam Pentesting has later independently found the same vulnerability. They also requested a CVE: It is now filed as CVE-2020-25820.

While this issue is fixed, the handling of security issues by BigBlueButton was not exactly stellar. It took around five months from my initial report to a fix. The release notes do not mention that this is an important security update (the change has the note “speed up the conversion”).

I found a bunch of other security issues in BigBlueButton and proposed some hardening changes. This took a lot of back and forth, but all significant issues are resolved now.

Another issue with the presentation upload was that it allowed cross site scripting, because it did not set a proper content type for downloads. This was independently discovered by another person and was fixed a while ago. (If you are interested in details about this class of vulnerabilities: I have given a talk about it at last year’s Security Fest.)

The session Cookies both from BigBlueButton itself and from its default web frontend Greenlight were not set with a secure flag, so the cookies could be transmitted in clear text over the network. This has also been changed now.

By default the BigBlueButton installation script starts several services that open ports that do not need to be publicly accessible. This is now also changed. A freeswitch service run locally was installed with a default password (“ClueCon”), this is now also changed to a random password by the installation script.

What also looks quite problematic is the use of outdated software. BigBlueButton only works on Ubuntu 16.04, which is a long term support version, so it still receives updates. But it also uses several external repositories, including one that installs NodeJS version 8 and shows a warning that this repository no longer receives security updates. There is an open bug in the bug tracker.

If you are using BigBlueButton I strongly recommend you update to at least version 2.2.27. This should fix all the issues I found. I would wish that the BigBlueButton developers improve their security process, react more timely to external reports and more transparent when issues are fixed.

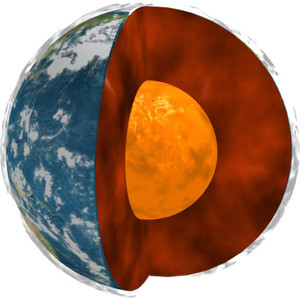

Image Source: Wikimedia Commons / NOAA / Public Domain

Update: Proof of concept published.

Posted by Hanno Böck

in Code, English, Linux, Security

at

14:14

| Comments (0)

| Trackback (1)

Defined tags for this entry: bigbluebutton, cookie, fileexfiltration, itsecurity, jodconverter, libreoffice, security, websecurity, xss

Monday, April 6. 2020

Userdir URLs like https://example.org/~username/ are dangerous

I would like to point out a security problem with a classic variant of web space hosting. While this issue should be obvious to anyone knowing basic web security, I have never seen it being discussed publicly.

Some server operators allow every user on the system to have a personal web space where they can place files in a directory (often ~/public_html) and they will appear on the host under a URL with a tilde and their username (e.g. https://example.org/~username/). The Apache web server provides such a function in the mod_userdir module. While this concept is rather old, it is still used by some and is often used by universities and Linux distributions.

From a web security perspective there is a very obvious problem with such setups that stems from the same origin policy, which is a core principle of Javascript security. While there are many subtleties about it, the key principle is that a piece of Javascript running on one web host is isolated from other web hosts.

To put this into a practical example: If you read your emails on a web interface on example.com then a script running on example.org should not be able to read your mails, change your password or mess in any other way with the application running on a different host. However if an attacker can place a script on example.com, which is called a Cross Site Scripting or XSS vulnerability, the attacker may be able to do all that.

The problem with userdir URLs should now become obvious: All userdir URLs on one server run on the same host and thus are in the same origin. It has XSS by design.

What does that mean in practice? Let‘s assume we have Bob, who has the username „bob“ on exampe.org, runs a blog on https://example.org/~bob/. User Mallory, who has the username „mallory“ on the same host, wants to attack Bob. If Bob is currently logged into his blog and Mallory manages to convince Bob to open her webpage – hosted at https://example.org/~mallory/ - at the same time she can place an attack script there that will attack Bob. The attack could be a variety of things from adding another user to the blog, changing Bob‘s password or reading unpublished content.

This is only an issue if the users on example.org do not trust each other, so the operator of the host may decide this is no problem if there is only a small number of trusted users. However there is another issue: An XSS vulnerability on any of the userdir web pages on the same host may be used to attack any other web page on the same host.

So if for example Alice runs an outdated web application with a known XSS vulnerability on https://example.org/~alice/ and Bob runs his blog on https://example.org/~bob/ then Mallory can use the vulnerability in Alice‘s web application to attack Bob.

All of this is primarily an issue if people run non-trivial web applications that have accounts and logins. If the web pages are only used to host static content the issues become much less problematic, though it is still with some limitations possible that one user could show the webpage of another user in a manipulated way.

So what does that mean? You probably should not use userdir URLs for anything except hosting of simple, static content - and probably not even there if you can avoid it. Even in situations where all users are considered trusted there is an increased risk, as vulnerabilities can cross application boundaries. As for Apache‘s mod_userdir I have contacted the Apache developers and they agreed to add a warning to the documentation.

If you want to provide something similar to your users you might want to give every user a subdomain, for example https://alice.example.org/, https://bob.example.org/ etc. There is however still a caveat with this: Unfortunately the same origin policy does not apply to all web technologies and particularly it does not apply to Cookies. However cross-hostname Cookie attacks are much less straightforward and there is often no practical attack scenario, thus using subdomains is still the more secure choice.

To avoid these Cookie issues for domains where user content is hosted regularly – a well-known example is github.io – there is the Public Suffix List for such domains. If you run a service with user subdomains you might want to consider adding your domain there, which can be done with a pull request.

Some server operators allow every user on the system to have a personal web space where they can place files in a directory (often ~/public_html) and they will appear on the host under a URL with a tilde and their username (e.g. https://example.org/~username/). The Apache web server provides such a function in the mod_userdir module. While this concept is rather old, it is still used by some and is often used by universities and Linux distributions.

From a web security perspective there is a very obvious problem with such setups that stems from the same origin policy, which is a core principle of Javascript security. While there are many subtleties about it, the key principle is that a piece of Javascript running on one web host is isolated from other web hosts.

To put this into a practical example: If you read your emails on a web interface on example.com then a script running on example.org should not be able to read your mails, change your password or mess in any other way with the application running on a different host. However if an attacker can place a script on example.com, which is called a Cross Site Scripting or XSS vulnerability, the attacker may be able to do all that.

The problem with userdir URLs should now become obvious: All userdir URLs on one server run on the same host and thus are in the same origin. It has XSS by design.

What does that mean in practice? Let‘s assume we have Bob, who has the username „bob“ on exampe.org, runs a blog on https://example.org/~bob/. User Mallory, who has the username „mallory“ on the same host, wants to attack Bob. If Bob is currently logged into his blog and Mallory manages to convince Bob to open her webpage – hosted at https://example.org/~mallory/ - at the same time she can place an attack script there that will attack Bob. The attack could be a variety of things from adding another user to the blog, changing Bob‘s password or reading unpublished content.

This is only an issue if the users on example.org do not trust each other, so the operator of the host may decide this is no problem if there is only a small number of trusted users. However there is another issue: An XSS vulnerability on any of the userdir web pages on the same host may be used to attack any other web page on the same host.

So if for example Alice runs an outdated web application with a known XSS vulnerability on https://example.org/~alice/ and Bob runs his blog on https://example.org/~bob/ then Mallory can use the vulnerability in Alice‘s web application to attack Bob.

All of this is primarily an issue if people run non-trivial web applications that have accounts and logins. If the web pages are only used to host static content the issues become much less problematic, though it is still with some limitations possible that one user could show the webpage of another user in a manipulated way.

So what does that mean? You probably should not use userdir URLs for anything except hosting of simple, static content - and probably not even there if you can avoid it. Even in situations where all users are considered trusted there is an increased risk, as vulnerabilities can cross application boundaries. As for Apache‘s mod_userdir I have contacted the Apache developers and they agreed to add a warning to the documentation.

If you want to provide something similar to your users you might want to give every user a subdomain, for example https://alice.example.org/, https://bob.example.org/ etc. There is however still a caveat with this: Unfortunately the same origin policy does not apply to all web technologies and particularly it does not apply to Cookies. However cross-hostname Cookie attacks are much less straightforward and there is often no practical attack scenario, thus using subdomains is still the more secure choice.

To avoid these Cookie issues for domains where user content is hosted regularly – a well-known example is github.io – there is the Public Suffix List for such domains. If you run a service with user subdomains you might want to consider adding your domain there, which can be done with a pull request.

Monday, December 16. 2019

#include </etc/shadow>

Recently I saw a tweet where someone mentioned that you can include /dev/stdin in C code compiled with gcc. This is, to say the very least, surprising.

When you see something like this with an IT security background you start to wonder if this can be abused for an attack. While I couldn't come up with anything, I started to wonder what else you could include. As you can basically include arbitrary paths on a system this may be used to exfiltrate data - if you can convince someone else to compile your code.

There are plenty of webpages that offer online services where you can type in C code and run it. It is obvious that such systems are insecure if the code running is not sandboxed in some way. But is it equally obvious that the compiler also needs to be sandboxed?

How would you attack something like this? Exfiltrating data directly through the code is relatively difficult, because you need to include data that ends up being valid C code. Maybe there's a trick to make something like /etc/shadow valid C code (you can put code before and after the include), but I haven't found it. But it's not needed either: The error messages you get from the compiler are all you need. All online tools I tested will show you the errors if your code doesn't compile.

I even found one service that allowed me to add

#include </etc/shadow>

and showed me the hash of the root password. This effectively means this service is running compile tasks as root.

Including various files in /etc allows to learn something about the system. For example /etc/lsb-release often gives information about the distribution in use. Interestingly, including pseudo-files from /proc does not work. It seems gcc treats them like empty files. This limits possibilities to learn about the system. /sys and /dev work, but they contain less human readable information.

In summary I think services letting other people compile code should consider sandboxing the compile process and thus make sure no interesting information can be exfiltrated with these attack vectors.

When you see something like this with an IT security background you start to wonder if this can be abused for an attack. While I couldn't come up with anything, I started to wonder what else you could include. As you can basically include arbitrary paths on a system this may be used to exfiltrate data - if you can convince someone else to compile your code.

There are plenty of webpages that offer online services where you can type in C code and run it. It is obvious that such systems are insecure if the code running is not sandboxed in some way. But is it equally obvious that the compiler also needs to be sandboxed?

How would you attack something like this? Exfiltrating data directly through the code is relatively difficult, because you need to include data that ends up being valid C code. Maybe there's a trick to make something like /etc/shadow valid C code (you can put code before and after the include), but I haven't found it. But it's not needed either: The error messages you get from the compiler are all you need. All online tools I tested will show you the errors if your code doesn't compile.

I even found one service that allowed me to add

#include </etc/shadow>

and showed me the hash of the root password. This effectively means this service is running compile tasks as root.

Including various files in /etc allows to learn something about the system. For example /etc/lsb-release often gives information about the distribution in use. Interestingly, including pseudo-files from /proc does not work. It seems gcc treats them like empty files. This limits possibilities to learn about the system. /sys and /dev work, but they contain less human readable information.

In summary I think services letting other people compile code should consider sandboxing the compile process and thus make sure no interesting information can be exfiltrated with these attack vectors.

Friday, September 13. 2019

Security Issues with PGP Signatures and Linux Package Management

In discussions around the PGP ecosystem one thing I often hear is that while PGP has its problems, it's an important tool for package signatures in Linux distributions. I therefore want to highlight a few issues I came across in this context that are rooted in problems in the larger PGP ecosystem.

Let's look at an example of the use of PGP signatures for deb packages, the Ubuntu Linux installation instructions for HHVM. HHVM is an implementation of the HACK programming language and developed by Facebook. I'm just using HHVM as an example here, as it nicely illustrates two attacks I want to talk about, but you'll find plenty of similar installation instructions for other software packages. I have reported these issues to Facebook, but they decided not to change anything.

The instructions for Ubuntu (and very similarly for Debian) recommend that users execute these commands in order to install HHVM from the repository of its developers:

The crucial part here is the line starting with apt-key. It fetches the key that is used to sign the repository from the Ubuntu key server, which itself is part of the PGP keyserver network.

Attack 1: Flooding Key with Signatures

Attack 1: Flooding Key with Signatures

The first possible attack is actually quite simple: One can make the signature key offered here unusable by appending many signatures.

A key concept of the PGP keyservers is that they operate append-only. New data gets added, but never removed. PGP keys can sign other keys and these signatures can also be uploaded to the keyservers and get added to a key. Crucially the owner of a key has no influence on this.

This means everyone can grow the size of a key by simply adding many signatures to it. Lately this has happened to a number of keys, see the blog posts by Daniel Kahn Gillmor and Robert Hansen, two members of the PGP community who have personally been affected by this. The effect of this is that when GnuPG tries to import such a key it becomes excessively slow and at some point will simply not work any more.

For the above installation instructions this means anyone can make them unusable by attacking the referenced release key. In my tests I was still able to import one of the attacked keys with apt-key after several minutes, but these keys "only" have a few ten thousand signatures, growing them to a few megabytes size. There's no reason an attacker couldn't use millions of signatures and grow single keys to gigabytes.

Attack 2: Rogue packages with a colliding Key Id

The installation instructions reference the key as 0xB4112585D386EB94, which is a 64 bit hexadecimal key id.

Key ids are a central concept in the PGP ecosystem. The key id is a truncated SHA1 hash of the public key. It's possible to either use the last 32 bit, 64 bit or the full 160 bit of the hash.

It's been pointed out in the past that short key ids allow colliding key ids. This means an attacker can generate a different key with the same key id where he owns the private key simply by bruteforcing the id. In 2014 Richard Klafter and Eric Swanson showed with the Evil32 attack how to create colliding key ids for all keys in the so-called strong set (meaning all keys that are connected with most other keys in the web of trust). Later someone unknown uploaded these keys to the key servers causing quite some confusion.

It should be noted that the issue of colliding key ids was known and discussed in the community way earlier, see for example this discussion from 2002.

The practical attacks targeted 32 bit key ids, but the same attack works against 64 bit key ids, too, it just costs more. I contacted the authors of the Evil32 attack and Eric Swanson estimated in a back of the envelope calculation that it would cost roughly $ 120.000 to perform such an attack with GPUs on cloud providers. This is expensive, but within the possibilities of powerful attackers. Though one can also find similar installation instructions using a 32 bit key id, where the attack is really cheap.

Going back to the installation instructions from above we can imagine the following attack: A man in the middle network attacker can intercept the connection to the keyserver - it's not encrypted or authenticated - and provide the victim a colliding key. Afterwards the key is imported by the victim, so the attacker can provide repositories with packages signed by his key, ultimately leading to code execution.

You may notice that there's a problem with this attack: The repository provided by HHVM is using HTTPS. Thus the attacker can not simply provide a rogue HHVM repository. However the attack still works.

The imported PGP key is not bound to any specific repository. Thus if the victim has any non-HTTPS repository configured in his system the attacker can provide a rogue repository on the next call of "apt update". Notably by default both Debian and Ubuntu use HTTP for their repositories (a Debian developer even runs a dedicated web page explaining why this is no big deal).

Attack 3: Key over HTTP

Issues with package keys aren't confined to Debian/APT-based distributions. I found these installation instructions at Dropbox (Link to Wayback Machine, as Dropbox has changed them after I reported this):

It should be obvious what the issue here is: Both the key and the repository are fetched over HTTP, a network attacker can simply provide his own key and repository.

Discussion

The standard answer you often get when you point out security problems with PGP-based systems is: "It's not PGP/GnuPG, people are just using it wrong". But I believe these issues show some deeper problems with the PGP ecosystem. The key flooding issue is inherited from the systemically flawed concept of the append only key servers.

The other issue here is lack of deprecation. Short key ids are problematic, it's been known for a long time and there have been plenty of calls to get rid of them. This begs the question why no effort has been made to deprecate support for them. One could have said at some point: Future versions of GnuPG will show a warning for short key ids and in three years we will stop supporting them.

This reminds of other issues like unauthenticated encryption, where people have been arguing that this was fixed back in 1999 by the introduction of the MDC. Yet in 2018 it was still exploitable, because the unauthenticated version was never properly deprecated.

Fix

For all people having installation instructions for external repositories my recommendation would be to avoid any use of public key servers. Host the keys on your own infrastructure and provide them via HTTPS. Furthermore any reference to 32 bit or 64 bit key ids should be avoided.

Update: Some people have pointed out to me that the Debian Wiki contains guidelines for third party repositories that avoid the issues mentioned here.

Let's look at an example of the use of PGP signatures for deb packages, the Ubuntu Linux installation instructions for HHVM. HHVM is an implementation of the HACK programming language and developed by Facebook. I'm just using HHVM as an example here, as it nicely illustrates two attacks I want to talk about, but you'll find plenty of similar installation instructions for other software packages. I have reported these issues to Facebook, but they decided not to change anything.

The instructions for Ubuntu (and very similarly for Debian) recommend that users execute these commands in order to install HHVM from the repository of its developers:

apt-get update

apt-get install software-properties-common apt-transport-https

apt-key adv --recv-keys --keyserver hkp://keyserver.ubuntu.com:80 0xB4112585D386EB94

add-apt-repository https://dl.hhvm.com/ubuntu

apt-get update

apt-get install hhvm

apt-get install software-properties-common apt-transport-https

apt-key adv --recv-keys --keyserver hkp://keyserver.ubuntu.com:80 0xB4112585D386EB94

add-apt-repository https://dl.hhvm.com/ubuntu

apt-get update

apt-get install hhvm

The crucial part here is the line starting with apt-key. It fetches the key that is used to sign the repository from the Ubuntu key server, which itself is part of the PGP keyserver network.

Attack 1: Flooding Key with Signatures

Attack 1: Flooding Key with SignaturesThe first possible attack is actually quite simple: One can make the signature key offered here unusable by appending many signatures.

A key concept of the PGP keyservers is that they operate append-only. New data gets added, but never removed. PGP keys can sign other keys and these signatures can also be uploaded to the keyservers and get added to a key. Crucially the owner of a key has no influence on this.

This means everyone can grow the size of a key by simply adding many signatures to it. Lately this has happened to a number of keys, see the blog posts by Daniel Kahn Gillmor and Robert Hansen, two members of the PGP community who have personally been affected by this. The effect of this is that when GnuPG tries to import such a key it becomes excessively slow and at some point will simply not work any more.

For the above installation instructions this means anyone can make them unusable by attacking the referenced release key. In my tests I was still able to import one of the attacked keys with apt-key after several minutes, but these keys "only" have a few ten thousand signatures, growing them to a few megabytes size. There's no reason an attacker couldn't use millions of signatures and grow single keys to gigabytes.

Attack 2: Rogue packages with a colliding Key Id

The installation instructions reference the key as 0xB4112585D386EB94, which is a 64 bit hexadecimal key id.

Key ids are a central concept in the PGP ecosystem. The key id is a truncated SHA1 hash of the public key. It's possible to either use the last 32 bit, 64 bit or the full 160 bit of the hash.

It's been pointed out in the past that short key ids allow colliding key ids. This means an attacker can generate a different key with the same key id where he owns the private key simply by bruteforcing the id. In 2014 Richard Klafter and Eric Swanson showed with the Evil32 attack how to create colliding key ids for all keys in the so-called strong set (meaning all keys that are connected with most other keys in the web of trust). Later someone unknown uploaded these keys to the key servers causing quite some confusion.

It should be noted that the issue of colliding key ids was known and discussed in the community way earlier, see for example this discussion from 2002.

The practical attacks targeted 32 bit key ids, but the same attack works against 64 bit key ids, too, it just costs more. I contacted the authors of the Evil32 attack and Eric Swanson estimated in a back of the envelope calculation that it would cost roughly $ 120.000 to perform such an attack with GPUs on cloud providers. This is expensive, but within the possibilities of powerful attackers. Though one can also find similar installation instructions using a 32 bit key id, where the attack is really cheap.

Going back to the installation instructions from above we can imagine the following attack: A man in the middle network attacker can intercept the connection to the keyserver - it's not encrypted or authenticated - and provide the victim a colliding key. Afterwards the key is imported by the victim, so the attacker can provide repositories with packages signed by his key, ultimately leading to code execution.

You may notice that there's a problem with this attack: The repository provided by HHVM is using HTTPS. Thus the attacker can not simply provide a rogue HHVM repository. However the attack still works.

The imported PGP key is not bound to any specific repository. Thus if the victim has any non-HTTPS repository configured in his system the attacker can provide a rogue repository on the next call of "apt update". Notably by default both Debian and Ubuntu use HTTP for their repositories (a Debian developer even runs a dedicated web page explaining why this is no big deal).

Attack 3: Key over HTTP

Issues with package keys aren't confined to Debian/APT-based distributions. I found these installation instructions at Dropbox (Link to Wayback Machine, as Dropbox has changed them after I reported this):

Add the following to /etc/yum.conf.

name=Dropbox Repository

baseurl=http://linux.dropbox.com/fedora/\$releasever/

gpgkey=http://linux.dropbox.com/fedora/rpm-public-key.asc

name=Dropbox Repository

baseurl=http://linux.dropbox.com/fedora/\$releasever/

gpgkey=http://linux.dropbox.com/fedora/rpm-public-key.asc

It should be obvious what the issue here is: Both the key and the repository are fetched over HTTP, a network attacker can simply provide his own key and repository.

Discussion

The standard answer you often get when you point out security problems with PGP-based systems is: "It's not PGP/GnuPG, people are just using it wrong". But I believe these issues show some deeper problems with the PGP ecosystem. The key flooding issue is inherited from the systemically flawed concept of the append only key servers.

The other issue here is lack of deprecation. Short key ids are problematic, it's been known for a long time and there have been plenty of calls to get rid of them. This begs the question why no effort has been made to deprecate support for them. One could have said at some point: Future versions of GnuPG will show a warning for short key ids and in three years we will stop supporting them.

This reminds of other issues like unauthenticated encryption, where people have been arguing that this was fixed back in 1999 by the introduction of the MDC. Yet in 2018 it was still exploitable, because the unauthenticated version was never properly deprecated.

Fix

For all people having installation instructions for external repositories my recommendation would be to avoid any use of public key servers. Host the keys on your own infrastructure and provide them via HTTPS. Furthermore any reference to 32 bit or 64 bit key ids should be avoided.

Update: Some people have pointed out to me that the Debian Wiki contains guidelines for third party repositories that avoid the issues mentioned here.

Posted by Hanno Böck

in Code, English, Linux, Security

at

14:17

| Comments (5)

| Trackback (1)

Defined tags for this entry: apt, cryptography, deb, debian, fedora, gnupg, gpg, linux, openpgp, packagemanagement, pgp, rpm, security, signatures, ubuntu

Thursday, November 16. 2017

Some minor Security Quirks in Firefox

I'd like to point out that Mozilla hasn't fixed most of those issues, despite all of them being reported several months ago.

Bypassing XSA warning via FTP

XSA or Cross-Site Authentication is an interesting and not very well known attack. It's been discovered by Joachim Breitner in 2005.

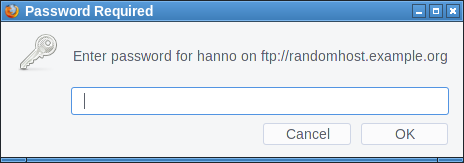

Some web pages, mostly forums, allow users to include third party images. This can be abused by an attacker to steal other user's credentials. An attacker first posts something with an image from a server he controls. He then switches on HTTP authentication for that image. All visitors of the page will now see a login dialog on that page. They may be tempted to type in their login credentials into the HTTP authentication dialog, which seems to come from the page they trust.

The original XSA attack is, as said, quite old. As a countermeasure Firefox implements a warning in HTTP authentication dialogs that were created by a subresource like an image. However it only does that for HTTP, not for FTP.

So an attacker can run an FTP server and include an image from there. By then requiring an FTP login and logging all login attempts to the server he can gather credentials. The password dialog will show the host name of the attacker's FTP server, but he could choose one that looks close enough to the targeted web page to not raise suspicion.

I haven't found any popular site that allows embedding images from non-HTTP-protocols. The most popular page that allows embedding external images at all is Stack Overflow, but it only allows HTTPS. Generally embedding third party images is less common these days, most pages keep local copies if they embed external images.

This bug is yet unfixed.

Obviously one could fix it by showing the same warning for FTP that is shown for HTTP authentication. But I'd rather recommend to completely block authentication dialogs on third party content. This is also what Chrome is doing. Mozilla has been discussing this for several years with no result.

Firefox also has an open bug about disallowing FTP on subresources. This would obviously also fix this scenario.

Window-modal popup via FTP

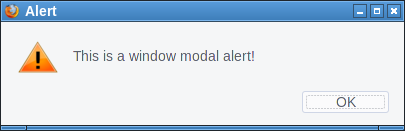

In the early days of JavaScript web pages could annoy users with popups. Browsers have since changed the behavior of JavaScript popups. They are now tab-modal, which means they're not blocking the interaction with the whole browser, they're just part of one tab and will only block the interaction with the web page that created them.

So it is a goal of modern browsers to not allow web pages to create window-modal alerts that block the interaction with the whole browser. However I figured out FTP gives us a bypass of this restriction.

If Firefox receives some random garbage over an FTP connection that it cannot interpret as FTP commands it will open an alert window showing that garbage.

First we open up our fake "FTP-Server" that will simply send a message to all clients. We can just use netcat for this:

while true; do echo "Hello" | nc -l -p 21; doneThen we try to open a connection, e. g. by typing ftp://localhost in the address bar on the same system. Firefox will not show the alert immediately. However if we then click on the URL bar and press enter again it will show the alert window. I tried to replicate that behavior with JavaScript, which worked sometimes. I'm relatively sure this can be made reliable.

There are two problems here. One is that server controlled content is showed to the user without any interpretation. This alert window seems to be intended as some kind of error message. However it doesn't make a lot of sense like that. If at all it should probably be prefixed by some message like "the server sent an invalid command". But ultimately if the browser receives random garbage instead of protocol messages it's probably not wise to display that at all. The second problem is that FTP error messages probably should be tab-modal as well.

This bug is also yet unfixed.

FTP considered dangerous

FTP is an old protocol with many problems. Some consider the fact that browsers still support it a problem. I tend to agree, ideally FTP should simply be removed from modern browsers.

FTP in browsers is insecure by design. While TLS-enabled FTP exists browsers have never supported it. The FTP code is probably not well audited, as it's rarely used. And the fact that another protocol exists that can be used similarly to HTTP has the potential of surprises. For example I found it quite surprising to learn that it's possible to have unencrypted and unauthenticated FTP connections to hosts that enabled HSTS. (The lack of cookie support on FTP seems to avoid causing security issues, but it's still unexpected and feels dangerous.)

Self-XSS in bookmark manager export

The Firefox Bookmark manager allows exporting bookmarks to an HTML document. Before the current Firefox 57 it was possible to inject JavaScript into this exported HTML via the tags field.

I tried to come up with a plausible scenario where this could matter, however this turned out to be difficult. This would be a problematic behavior if there's a way for a web page to create such a bookmark. While it is possible to create a bookmark dialog with JavaScript, this doesn't allow us to prefill the tags field. Thus there is no way a web page can insert any content here.

One could come up with implausible social engineering scenarios (web page asks user to create a bookmark and insert some specific string into the tags field), but that seems very far fetched. A remotely plausible scenario would be a situation where a browser can be used by multiple people who are allowed to create bookmarks and the bookmarks are regularly exported and uploaded to a web page. However that also seems quite far fetched.

This was fixed in the latest Firefox release as CVE-2017-7840 and considered as low severity.

Crashing Firefox on Linux via notification API

The notification API allows browsers to send notification alerts that the operating system will show in small notification windows. A notification can contain a small message and an icon.

When playing this one of the very first things that came to my mind was to check what happens if one simply sends a very large icon. A user has to approve that a web page is allowed to use the notification API, however if he does the result is an immediate crash of the browser. This only "works" on Linux. The proof of concept is quite simple, we just embed a large black PNG via a data URI:

<script>Notification.requestPermission(function(status){

new Notification("",{icon: "data:image/png;base64,iVBORw0KGgoAAAANSUhEUgAAE4gAABOIAQAAAAB147pmAAAL70lEQVR4Ae3BAQ0AAADCIPuntscHD" + "A".repeat(4043) + "yDjFUQABEK0vGQAAAABJRU5ErkJggg==",});

});</script>I haven't fully tracked down what's causing this, but it seems that Firefox tries to send a message to the system's notification daemon with libnotify and if that's too large for the message size limit of dbus it will not properly handle the resulting error.

What I found quite frustrating is that when I reported it I learned that this was a duplicate of a bug that has already been reported more than a year ago. I feel having such a simple browser crash bug open for such a long time is not appropriate. It is still unfixed.

Thursday, September 7. 2017

In Search of a Secure Time Source

Update: This blogpost was written before NTS was available, and the information is outdated. If you are looking for a modern solution, I recommend using software and a time server with Network Time Security, as specified in RFC 8915.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

I'd like my systems to have a secure time. So I'm looking for a timesetting tool that fullfils two requirements:

Although these seem like trivial requirements to my knowledge such a tool doesn't exist. These are relatively loose requirements. One might want to add:

Some people need a very accurate time source, for example for certain scientific use cases. But that's outside of my scope. For the vast majority of use cases a clock that is off by a few seconds doesn't matter. While it's certainly a good idea to consider rogue servers given the current state of things I'd be happy to have a solution where I simply trust a server from Google or any other major Internet entity.

So let's look at what we have:

NTP

The common way of setting the clock is the NTP protocol. NTP itself has no transport security built in. It's a plaintext protocol open to manipulation and man in the middle attacks.

There are two variants of "secure" NTP. "Autokey", an authenticated variant of NTP, is broken. There's also a symmetric authentication, but that is impractical for widespread use, as it would require to negotiate a pre-shared key with the time server in advance.

NTPsec and Ntimed

In response to some vulnerabilities in the reference implementation of NTP two projects started developing "more secure" variants of NTP. Ntimed - a rewrite by Poul-Henning Kamp - and NTPsec, a fork of the original NTP software. Ntimed hasn't seen any development for several years, NTPsec seems active. NTPsec had some controversies with the developers of the original NTP reference implementation and its main developer is - to put it mildly - a controversial character.

But none of that matters. Both projects don't implement a "secure" NTP. The "sec" in NTPsec refers to the security of the code, not to the security of the protocol itself. It's still just an implementation of the old, insecure NTP.

Network Time Security

There's a draft for a new secure variant of NTP - called Network Time Security. It adds authentication to NTP.

However it's just a draft and it seems stalled. It hasn't been updated for over a year. In any case: It's not widely implemented and thus it's currently not usable. If that changes it may be an option.

tlsdate

tlsdate is a hack abusing the timestamp of the TLS protocol. The TLS timestamp of a server can be used to set the system time. This doesn't provide high accuracy, as the timestamp is only given in seconds, but it's good enough.

I've used and advocated tlsdate for a while, but it has some problems. The timestamp in the TLS handshake doesn't really have any meaning within the protocol, so several implementers decided to replace it with a random value. Unfortunately that is also true for the default server hardcoded into tlsdate.

Some Linux distributions still ship a package with a default server that will send random timestamps. The result is that your system time is set to a random value. I reported this to Ubuntu a while ago. It never got fixed, however the latest Ubuntu version Zesty Zapis (17.04) doesn't ship tlsdate any more.

Given that Google has shipped tlsdate for some in ChromeOS time it seems unlikely that Google will send randomized timestamps any time soon. Thus if you use tlsdate with www.google.com it should work for now. But it's no future-proof solution.

TLS 1.3 removes the TLS timestamp, so this whole concept isn't future-proof. Alternatively it supports using an HTTPS timestamp. The development of tlsdate has stalled, it hasn't seen any updates lately. It doesn't build with the latest version of OpenSSL (1.1) So it likely will become unusable soon.

OpenNTPD

OpenNTPD

The developers of OpenNTPD, the NTP daemon from OpenBSD, came up with a nice idea. NTP provides high accuracy, yet no security. Via HTTPS you can get a timestamp with low accuracy. So they combined the two: They use NTP to set the time, but they check whether the given time deviates significantly from an HTTPS host. So the HTTPS host provides safety boundaries for the NTP time.

This would be really nice, if there wasn't a catch: This feature depends on an API only provided by LibreSSL, the OpenBSD fork of OpenSSL. So it's not available on most common Linux systems. (Also why doesn't the OpenNTPD web page support HTTPS?)

Roughtime

Roughtime is a Google project. It fetches the time from multiple servers and uses some fancy cryptography to make sure that malicious servers get detected. If a roughtime server sends a bad time then the client gets a cryptographic proof of the malicious behavior, making it possible to blame and shame rogue servers. Roughtime doesn't provide the high accuracy that NTP provides.

From a security perspective it's the nicest of all solutions. However it fails the availability test. Google provides two reference implementations in C++ and in Go, but it's not packaged for any major Linux distribution. Google has an unfortunate tendency to use unusual dependencies and arcane build systems nobody else uses, so packaging it comes with some challenges.

One line bash script beats all existing solutions

As you can see none of the currently available solutions is really feasible and none fulfils the two mild requirements of authenticity and availability.

This is frustrating given that it's a really simple problem. In fact, it's so simple that you can solve it with a single line bash script:

This line sends an HTTPS request to Google, fetches the date header from the response and passes that to the date command line utility.

It provides authenticity via TLS. If the current system time is far off then this fails, as the TLS connection relies on the validity period of the current certificate. Google currently uses certificates with a validity of around three months. The accuracy is only in seconds, so it doesn't qualify for high accuracy requirements. There's no protection against a rogue Google server providing a wrong time.

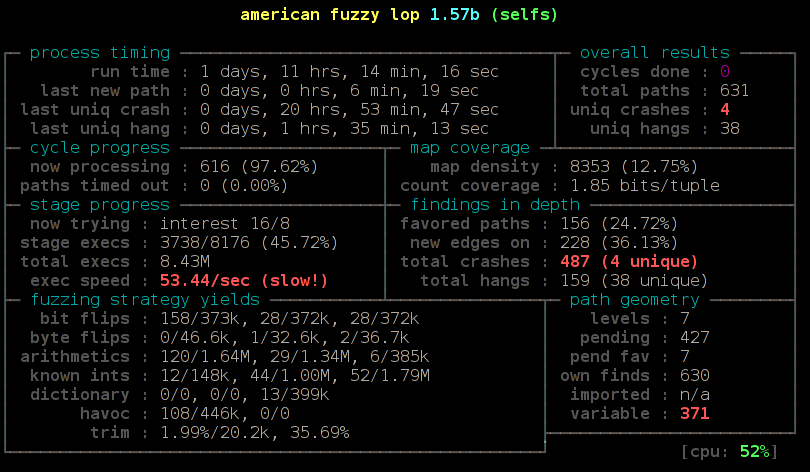

Another potential security concern may be that Google might attack the parser of the date setting tool by serving a malformed date string. However I ran american fuzzy lop against it and it looks robust.

While this certainly isn't as accurate as NTP or as secure as roughtime, it's better than everything else that's available. I put this together in a slightly more advanced bash script called httpstime.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.I'd like my systems to have a secure time. So I'm looking for a timesetting tool that fullfils two requirements:

- It provides authenticity of the time and is not vulnerable to man in the middle attacks.

- It is widely available on common Linux systems.

Although these seem like trivial requirements to my knowledge such a tool doesn't exist. These are relatively loose requirements. One might want to add:

- The timesetting needs to provide a good accuracy.

- The timesetting needs to be protected against malicious time servers.

Some people need a very accurate time source, for example for certain scientific use cases. But that's outside of my scope. For the vast majority of use cases a clock that is off by a few seconds doesn't matter. While it's certainly a good idea to consider rogue servers given the current state of things I'd be happy to have a solution where I simply trust a server from Google or any other major Internet entity.

So let's look at what we have:

NTP

The common way of setting the clock is the NTP protocol. NTP itself has no transport security built in. It's a plaintext protocol open to manipulation and man in the middle attacks.

There are two variants of "secure" NTP. "Autokey", an authenticated variant of NTP, is broken. There's also a symmetric authentication, but that is impractical for widespread use, as it would require to negotiate a pre-shared key with the time server in advance.

NTPsec and Ntimed

In response to some vulnerabilities in the reference implementation of NTP two projects started developing "more secure" variants of NTP. Ntimed - a rewrite by Poul-Henning Kamp - and NTPsec, a fork of the original NTP software. Ntimed hasn't seen any development for several years, NTPsec seems active. NTPsec had some controversies with the developers of the original NTP reference implementation and its main developer is - to put it mildly - a controversial character.

But none of that matters. Both projects don't implement a "secure" NTP. The "sec" in NTPsec refers to the security of the code, not to the security of the protocol itself. It's still just an implementation of the old, insecure NTP.

Network Time Security

There's a draft for a new secure variant of NTP - called Network Time Security. It adds authentication to NTP.

However it's just a draft and it seems stalled. It hasn't been updated for over a year. In any case: It's not widely implemented and thus it's currently not usable. If that changes it may be an option.

tlsdate

tlsdate is a hack abusing the timestamp of the TLS protocol. The TLS timestamp of a server can be used to set the system time. This doesn't provide high accuracy, as the timestamp is only given in seconds, but it's good enough.

I've used and advocated tlsdate for a while, but it has some problems. The timestamp in the TLS handshake doesn't really have any meaning within the protocol, so several implementers decided to replace it with a random value. Unfortunately that is also true for the default server hardcoded into tlsdate.

Some Linux distributions still ship a package with a default server that will send random timestamps. The result is that your system time is set to a random value. I reported this to Ubuntu a while ago. It never got fixed, however the latest Ubuntu version Zesty Zapis (17.04) doesn't ship tlsdate any more.

Given that Google has shipped tlsdate for some in ChromeOS time it seems unlikely that Google will send randomized timestamps any time soon. Thus if you use tlsdate with www.google.com it should work for now. But it's no future-proof solution.

TLS 1.3 removes the TLS timestamp, so this whole concept isn't future-proof. Alternatively it supports using an HTTPS timestamp. The development of tlsdate has stalled, it hasn't seen any updates lately. It doesn't build with the latest version of OpenSSL (1.1) So it likely will become unusable soon.

OpenNTPD

OpenNTPDThe developers of OpenNTPD, the NTP daemon from OpenBSD, came up with a nice idea. NTP provides high accuracy, yet no security. Via HTTPS you can get a timestamp with low accuracy. So they combined the two: They use NTP to set the time, but they check whether the given time deviates significantly from an HTTPS host. So the HTTPS host provides safety boundaries for the NTP time.

This would be really nice, if there wasn't a catch: This feature depends on an API only provided by LibreSSL, the OpenBSD fork of OpenSSL. So it's not available on most common Linux systems. (Also why doesn't the OpenNTPD web page support HTTPS?)

Roughtime

Roughtime is a Google project. It fetches the time from multiple servers and uses some fancy cryptography to make sure that malicious servers get detected. If a roughtime server sends a bad time then the client gets a cryptographic proof of the malicious behavior, making it possible to blame and shame rogue servers. Roughtime doesn't provide the high accuracy that NTP provides.

From a security perspective it's the nicest of all solutions. However it fails the availability test. Google provides two reference implementations in C++ and in Go, but it's not packaged for any major Linux distribution. Google has an unfortunate tendency to use unusual dependencies and arcane build systems nobody else uses, so packaging it comes with some challenges.

One line bash script beats all existing solutions

As you can see none of the currently available solutions is really feasible and none fulfils the two mild requirements of authenticity and availability.

This is frustrating given that it's a really simple problem. In fact, it's so simple that you can solve it with a single line bash script:

date -s "$(curl -sI https://www.google.com/|grep -i 'date:'|sed -e 's/^.ate: //g')"This line sends an HTTPS request to Google, fetches the date header from the response and passes that to the date command line utility.

It provides authenticity via TLS. If the current system time is far off then this fails, as the TLS connection relies on the validity period of the current certificate. Google currently uses certificates with a validity of around three months. The accuracy is only in seconds, so it doesn't qualify for high accuracy requirements. There's no protection against a rogue Google server providing a wrong time.

Another potential security concern may be that Google might attack the parser of the date setting tool by serving a malformed date string. However I ran american fuzzy lop against it and it looks robust.

While this certainly isn't as accurate as NTP or as secure as roughtime, it's better than everything else that's available. I put this together in a slightly more advanced bash script called httpstime.

Posted by Hanno Böck

in Code, Cryptography, English, Linux, Security

at

17:07

| Comments (6)

| Trackbacks (0)

Thursday, July 20. 2017

How I tricked Symantec with a Fake Private Key

Lately, some attention was drawn to a widespread problem with TLS certificates. Many people are accidentally publishing their private keys. Sometimes they are released as part of applications, in Github repositories or with common filenames on web servers.

Lately, some attention was drawn to a widespread problem with TLS certificates. Many people are accidentally publishing their private keys. Sometimes they are released as part of applications, in Github repositories or with common filenames on web servers.If a private key is compromised, a certificate authority is obliged to revoke it. The Baseline Requirements – a set of rules that browsers and certificate authorities agreed upon – regulate this and say that in such a case a certificate authority shall revoke the key within 24 hours (Section 4.9.1.1 in the current Baseline Requirements 1.4.8). These rules exist despite the fact that revocation has various problems and doesn’t work very well, but that’s another topic.

I reported various key compromises to certificate authorities recently and while not all of them reacted in time, they eventually revoked all certificates belonging to the private keys. I wondered however how thorough they actually check the key compromises. Obviously one would expect that they cryptographically verify that an exposed private key really is the private key belonging to a certificate.

I registered two test domains at a provider that would allow me to hide my identity and not show up in the whois information. I then ordered test certificates from Symantec (via their brand RapidSSL) and Comodo. These are the biggest certificate authorities and they both offer short term test certificates for free. I then tried to trick them into revoking those certificates with a fake private key.

Forging a private key

To understand this we need to get a bit into the details of RSA keys. In essence a cryptographic key is just a set of numbers. For RSA a public key consists of a modulus (usually named N) and a public exponent (usually called e). You don’t have to understand their mathematical meaning, just keep in mind: They’re nothing more than numbers.

An RSA private key is also just numbers, but more of them. If you have heard any introductory RSA descriptions you may know that a private key consists of a private exponent (called d), but in practice it’s a bit more. Private keys usually contain the full public key (N, e), the private exponent (d) and several other values that are redundant, but they are useful to speed up certain things. But just keep in mind that a public key consists of two numbers and a private key is a public key plus some additional numbers. A certificate ultimately is just a public key with some additional information (like the host name that says for which web page it’s valid) signed by a certificate authority.

A naive check whether a private key belongs to a certificate could be done by extracting the public key parts of both the certificate and the private key for comparison. However it is quite obvious that this isn’t secure. An attacker could construct a private key that contains the public key of an existing certificate and the private key parts of some other, bogus key. Obviously such a fake key couldn’t be used and would only produce errors, but it would survive such a naive check.

I created such fake keys for both domains and uploaded them to Pastebin. If you want to create such fake keys on your own here’s a script. To make my report less suspicious I searched Pastebin for real, compromised private keys belonging to certificates. This again shows how problematic the leakage of private keys is: I easily found seven private keys for Comodo certificates and three for Symantec certificates, plus several more for other certificate authorities, which I also reported. These additional keys allowed me to make my report to Symantec and Comodo less suspicious: I could hide my fake key report within other legitimate reports about a key compromise.

Symantec revoked a certificate based on a forged private key

Comodo didn’t fall for it. They answered me that there is something wrong with this key. Symantec however answered me that they revoked all certificates – including the one with the fake private key.

Comodo didn’t fall for it. They answered me that there is something wrong with this key. Symantec however answered me that they revoked all certificates – including the one with the fake private key.No harm was done here, because the certificate was only issued for my own test domain. But I could’ve also fake private keys of other peoples' certificates. Very likely Symantec would have revoked them as well, causing downtimes for those sites. I even could’ve easily created a fake key belonging to Symantec’s own certificate.

The communication by Symantec with the domain owner was far from ideal. I first got a mail that they were unable to process my order. Then I got another mail about a “cancellation request”. They didn’t explain what really happened and that the revocation happened due to a key uploaded on Pastebin.

I then informed Symantec about the invalid key (from my “real” identity), claiming that I just noted there’s something wrong with it. At that point they should’ve been aware that they revoked the certificate in error. Then I contacted the support with my “domain owner” identity and asked why the certificate was revoked. The answer: “I wanted to inform you that your FreeSSL certificate was cancelled as during a log check it was determined that the private key was compromised.”

To summarize: Symantec never told the domain owner that the certificate was revoked due to a key leaked on Pastebin. I assume in all the other cases they also didn’t inform their customers. Thus they may have experienced a certificate revocation, but don’t know why. So they can’t learn and can’t improve their processes to make sure this doesn’t happen again. Also, Symantec still insisted to the domain owner that the key was compromised even after I already had informed them that the key was faulty.

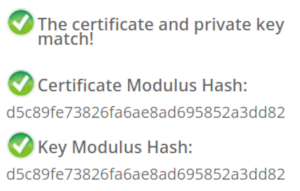

How to check if a private key belongs to a certificate?

In case you wonder how you properly check whether a private key belongs to a certificate you may of course resort to a Google search. And this was fascinating – and scary – to me: I searched Google for “check if private key matches certificate”. I got plenty of instructions. Almost all of them were wrong. The first result is a page from SSLShopper. They recommend to compare the MD5 hash of the modulus. That they use MD5 is not the problem here, the problem is that this is a naive check only comparing parts of the public key. They even provide a form to check this. (That they ask you to put your private key into a form is a different issue on its own, but at least they have a warning about this and recommend to check locally.)

In case you wonder how you properly check whether a private key belongs to a certificate you may of course resort to a Google search. And this was fascinating – and scary – to me: I searched Google for “check if private key matches certificate”. I got plenty of instructions. Almost all of them were wrong. The first result is a page from SSLShopper. They recommend to compare the MD5 hash of the modulus. That they use MD5 is not the problem here, the problem is that this is a naive check only comparing parts of the public key. They even provide a form to check this. (That they ask you to put your private key into a form is a different issue on its own, but at least they have a warning about this and recommend to check locally.)Furthermore we get the same wrong instructions from the University of Wisconsin, Comodo (good that their engineers were smart enough not to rely on their own documentation), tbs internet (“SSL expert since 1996”), ShellHacks, IBM and RapidSSL (aka Symantec). A post on Stackexchange is the only result that actually mentions a proper check for RSA keys. Two more Stackexchange posts are not related to RSA, I haven’t checked their solutions in detail.

Going to Google results page two among some unrelated links we find more wrong instructions and tools from Symantec (Update 2020: Link offline), SSL247 (“Symantec Specialist Partner Website Security” - they learned from the best) and some private blog. A documentation by Aspera (belonging to IBM) at least mentions that you can check the private key, but in an unrelated section of the document. Also we get more tools that ask you to upload your private key and then not properly check it from SSLChecker.com, the SSL Store (Symantec “Website Security Platinum Partner”), GlobeSSL (“in SSL we trust”) and - well - RapidSSL.

Documented Security Vulnerability in OpenSSL

So if people google for instructions they’ll almost inevitably end up with non-working instructions or tools. But what about other options? Let’s say we want to automate this and have a tool that verifies whether a certificate matches a private key using OpenSSL. We may end up finding that OpenSSL has a function

x509_check_private_key() that can be used to “check the consistency of a private key with the public key in an X509 certificate or certificate request”. Sounds like exactly what we need, right?Well, until you read the full docs and find out that it has a BUGS section: “The check_private_key functions don't check if k itself is indeed a private key or not. It merely compares the public materials (e.g. exponent and modulus of an RSA key) and/or key parameters (e.g. EC params of an EC key) of a key pair.”

I think this is a security vulnerability in OpenSSL (discussion with OpenSSL here). And that doesn’t change just because it’s a documented security vulnerability. Notably there are downstream consumers of this function that failed to copy that part of the documentation, see for example the corresponding PHP function (the limitation is however mentioned in a comment by a user).

So how do you really check whether a private key matches a certificate?

Ultimately there are two reliable ways to check whether a private key belongs to a certificate. One way is to check whether the various values of the private key are consistent and then check whether the public key matches. For example a private key contains values p and q that are the prime factors of the public modulus N. If you multiply them and compare them to N you can be sure that you have a legitimate private key. It’s one of the core properties of RSA that it’s secure based on the assumption that it’s not feasible to calculate p and q from N.

You can use OpenSSL to check the consistency of a private key:

openssl rsa -in [privatekey] -checkFor my forged keys it will tell you:

RSA key error: n does not equal p qYou can then compare the public key, for example by calculating the so-called SPKI SHA256 hash:

openssl pkey -in [privatekey] -pubout -outform der | sha256sumopenssl x509 -in [certificate] -pubkey |openssl pkey -pubin -pubout -outform der | sha256sumAnother way is to sign a message with the private key and then verify it with the public key. You could do it like this:

openssl x509 -in [certificate] -noout -pubkey > pubkey.pemdd if=/dev/urandom of=rnd bs=32 count=1openssl rsautl -sign -pkcs -inkey [privatekey] -in rnd -out sigopenssl rsautl -verify -pkcs -pubin -inkey pubkey.pem -in sig -out checkcmp rnd checkrm rnd check sig pubkey.pemIf cmp produces no output then the signature matches.

As this is all quite complex due to OpenSSLs arcane command line interface I have put this all together in a script. You can pass a certificate and a private key, both in ASCII/PEM format, and it will do both checks.

Summary

Symantec did a major blunder by revoking a certificate based on completely forged evidence. There’s hardly any excuse for this and it indicates that they operate a certificate authority without a proper understanding of the cryptographic background.

Apart from that the problem of checking whether a private key and certificate match seems to be largely documented wrong. Plenty of erroneous guides and tools may cause others to fall for the same trap.

Update: Symantec answered with a blog post.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

16:58

| Comments (12)

| Trackback (1)

Defined tags for this entry: ca, certificate, certificateauthority, openssl, privatekey, rsa, ssl, symantec, tls, x509

Thursday, June 15. 2017

Don't leave Coredumps on Web Servers

Coredumps are a feature of Linux and other Unix systems to analyze crashing software. If a software crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called

Coredumps are a feature of Linux and other Unix systems to analyze crashing software. If a software crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called core.While this is useful for debugging purposes it can produce a security risk. If a web application crashes the coredump may simply end up in the web server's root folder. Given that its file name is known an attacker can simply download it via an URL of the form

https://example.org/core. As coredumps contain an application's memory they may expose secret information. A very typical example would be passwords.PHP used to crash relatively often. Recently a lot of these crash bugs have been fixed, in part because PHP now has a bug bounty program. But there are still situations in which PHP crashes. Some of them likely won't be fixed.

How to disclose?

With a scan of the Alexa Top 1 Million domains for exposed core dumps I found around 1.000 vulnerable hosts. I was faced with a challenge: How can I properly disclose this? It is obvious that I wouldn't write hundreds of manual mails. So I needed an automated way to contact the site owners.

Abusix runs a service where you can query the abuse contacts of IP addresses via a DNS query. This turned out to be very useful for this purpose. One could also imagine contacting domain owners directly, but that's not very practical. The domain whois databases have rate limits and don't always expose contact mail addresses in a machine readable way.

Using the abuse contacts doesn't reach all of the affected host operators. Some abuse contacts were nonexistent mail addresses, others didn't have abuse contacts at all. I also got all kinds of automated replies, some of them asking me to fill out forms or do other things, otherwise my message wouldn't be read. Due to the scale I ignored those. I feel that if people make it hard for me to inform them about security problems that's not my responsibility.

I took away two things that I changed in a second batch of disclosures. Some abuse contacts seem to automatically search for IP addresses in the abuse mails. I originally only included affected URLs. So I changed that to include the affected IPs as well.

In many cases I was informed that the affected hosts are not owned by the company I contacted, but by a customer. Some of them asked me if they're allowed to forward the message to them. I thought that would be obvious, but I made it explicit now. Some of them asked me that I contact their customers, which again, of course, is impractical at scale. And sorry: They are your customers, not mine.

How to fix and prevent it?

If you have a coredump on your web host, the obvious fix is to remove it from there. However you obviously also want to prevent this from happening again.

There are two settings that impact coredump creation: A limits setting, configurable via

/etc/security/limits.conf and ulimit and a sysctl interface that can be found under /proc/sys/kernel/core_pattern.The limits setting is a size limit for coredumps. If it is set to zero then no core dumps are created. To set this as the default you can add something like this to your

limits.conf:* soft core 0The sysctl interface sets a pattern for the file name and can also contain a path. You can set it to something like this:

/var/log/core/core.%e.%p.%h.%tThis would store all coredumps under

/var/log/core/ and add the executable name, process id, host name and timestamp to the filename. The directory needs to be writable by all users, you should use a directory with the sticky bit (chmod +t).If you set this via the proc file interface it will only be temporary until the next reboot. To set this permanently you can add it to

/etc/sysctl.conf:kernel.core_pattern = /var/log/core/core.%e.%p.%h.%tSome Linux distributions directly forward core dumps to crash analysis tools. This can be done by prefixing the pattern with a pipe (|). These tools like apport from Ubuntu or abrt from Fedora have also been the source of security vulnerabilities in the past. However that's a separate issue.

Look out for coredumps

My scans showed that this is a relatively common issue. Among popular web pages around one in a thousand were affected before my disclosure attempts. I recommend that pentesters and developers of security scan tools consider checking for this. It's simple: Just try download the

/core file and check if it looks like an executable. In most cases it will be an ELF file, however sometimes it may be a Mach-O (OS X) or an a.out file (very old Linux and Unix systems).Image credit: NASA/JPL-Université Paris Diderot

Posted by Hanno Böck

in English, Gentoo, Linux, Security

at

11:20

| Comments (0)

| Trackback (1)

Defined tags for this entry: core, coredump, crash, linux, php, segfault, vulnerability, webroot, websecurity, webserver

Friday, May 19. 2017

The Problem with OCSP Stapling and Must Staple and why Certificate Revocation is still broken

Update (2020-09-16): While three years old, people still find this blog post when looking for information about Stapling problems. For Apache the situation has improved considerably in the meantime: mod_md, which is part of recent apache releases, comes with a new stapling implementation which you can enable with the setting MDStapling on.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken