Thursday, September 7. 2017

In Search of a Secure Time Source

Update: This blogpost was written before NTS was available, and the information is outdated. If you are looking for a modern solution, I recommend using software and a time server with Network Time Security, as specified in RFC 8915.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

I'd like my systems to have a secure time. So I'm looking for a timesetting tool that fullfils two requirements:

Although these seem like trivial requirements to my knowledge such a tool doesn't exist. These are relatively loose requirements. One might want to add:

Some people need a very accurate time source, for example for certain scientific use cases. But that's outside of my scope. For the vast majority of use cases a clock that is off by a few seconds doesn't matter. While it's certainly a good idea to consider rogue servers given the current state of things I'd be happy to have a solution where I simply trust a server from Google or any other major Internet entity.

So let's look at what we have:

NTP

The common way of setting the clock is the NTP protocol. NTP itself has no transport security built in. It's a plaintext protocol open to manipulation and man in the middle attacks.

There are two variants of "secure" NTP. "Autokey", an authenticated variant of NTP, is broken. There's also a symmetric authentication, but that is impractical for widespread use, as it would require to negotiate a pre-shared key with the time server in advance.

NTPsec and Ntimed

In response to some vulnerabilities in the reference implementation of NTP two projects started developing "more secure" variants of NTP. Ntimed - a rewrite by Poul-Henning Kamp - and NTPsec, a fork of the original NTP software. Ntimed hasn't seen any development for several years, NTPsec seems active. NTPsec had some controversies with the developers of the original NTP reference implementation and its main developer is - to put it mildly - a controversial character.

But none of that matters. Both projects don't implement a "secure" NTP. The "sec" in NTPsec refers to the security of the code, not to the security of the protocol itself. It's still just an implementation of the old, insecure NTP.

Network Time Security

There's a draft for a new secure variant of NTP - called Network Time Security. It adds authentication to NTP.

However it's just a draft and it seems stalled. It hasn't been updated for over a year. In any case: It's not widely implemented and thus it's currently not usable. If that changes it may be an option.

tlsdate

tlsdate is a hack abusing the timestamp of the TLS protocol. The TLS timestamp of a server can be used to set the system time. This doesn't provide high accuracy, as the timestamp is only given in seconds, but it's good enough.

I've used and advocated tlsdate for a while, but it has some problems. The timestamp in the TLS handshake doesn't really have any meaning within the protocol, so several implementers decided to replace it with a random value. Unfortunately that is also true for the default server hardcoded into tlsdate.

Some Linux distributions still ship a package with a default server that will send random timestamps. The result is that your system time is set to a random value. I reported this to Ubuntu a while ago. It never got fixed, however the latest Ubuntu version Zesty Zapis (17.04) doesn't ship tlsdate any more.

Given that Google has shipped tlsdate for some in ChromeOS time it seems unlikely that Google will send randomized timestamps any time soon. Thus if you use tlsdate with www.google.com it should work for now. But it's no future-proof solution.

TLS 1.3 removes the TLS timestamp, so this whole concept isn't future-proof. Alternatively it supports using an HTTPS timestamp. The development of tlsdate has stalled, it hasn't seen any updates lately. It doesn't build with the latest version of OpenSSL (1.1) So it likely will become unusable soon.

OpenNTPD

OpenNTPD

The developers of OpenNTPD, the NTP daemon from OpenBSD, came up with a nice idea. NTP provides high accuracy, yet no security. Via HTTPS you can get a timestamp with low accuracy. So they combined the two: They use NTP to set the time, but they check whether the given time deviates significantly from an HTTPS host. So the HTTPS host provides safety boundaries for the NTP time.

This would be really nice, if there wasn't a catch: This feature depends on an API only provided by LibreSSL, the OpenBSD fork of OpenSSL. So it's not available on most common Linux systems. (Also why doesn't the OpenNTPD web page support HTTPS?)

Roughtime

Roughtime is a Google project. It fetches the time from multiple servers and uses some fancy cryptography to make sure that malicious servers get detected. If a roughtime server sends a bad time then the client gets a cryptographic proof of the malicious behavior, making it possible to blame and shame rogue servers. Roughtime doesn't provide the high accuracy that NTP provides.

From a security perspective it's the nicest of all solutions. However it fails the availability test. Google provides two reference implementations in C++ and in Go, but it's not packaged for any major Linux distribution. Google has an unfortunate tendency to use unusual dependencies and arcane build systems nobody else uses, so packaging it comes with some challenges.

One line bash script beats all existing solutions

As you can see none of the currently available solutions is really feasible and none fulfils the two mild requirements of authenticity and availability.

This is frustrating given that it's a really simple problem. In fact, it's so simple that you can solve it with a single line bash script:

This line sends an HTTPS request to Google, fetches the date header from the response and passes that to the date command line utility.

It provides authenticity via TLS. If the current system time is far off then this fails, as the TLS connection relies on the validity period of the current certificate. Google currently uses certificates with a validity of around three months. The accuracy is only in seconds, so it doesn't qualify for high accuracy requirements. There's no protection against a rogue Google server providing a wrong time.

Another potential security concern may be that Google might attack the parser of the date setting tool by serving a malformed date string. However I ran american fuzzy lop against it and it looks robust.

While this certainly isn't as accurate as NTP or as secure as roughtime, it's better than everything else that's available. I put this together in a slightly more advanced bash script called httpstime.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.

All our computers and smartphones have an internal clock and need to know the current time. As configuring the time manually is annoying it's common to set the time via Internet services. What tends to get forgotten is that a reasonably accurate clock is often a crucial part of security features like certificate lifetimes or features with expiration times like HSTS. Thus the timesetting should be secure - but usually it isn't.I'd like my systems to have a secure time. So I'm looking for a timesetting tool that fullfils two requirements:

- It provides authenticity of the time and is not vulnerable to man in the middle attacks.

- It is widely available on common Linux systems.

Although these seem like trivial requirements to my knowledge such a tool doesn't exist. These are relatively loose requirements. One might want to add:

- The timesetting needs to provide a good accuracy.

- The timesetting needs to be protected against malicious time servers.

Some people need a very accurate time source, for example for certain scientific use cases. But that's outside of my scope. For the vast majority of use cases a clock that is off by a few seconds doesn't matter. While it's certainly a good idea to consider rogue servers given the current state of things I'd be happy to have a solution where I simply trust a server from Google or any other major Internet entity.

So let's look at what we have:

NTP

The common way of setting the clock is the NTP protocol. NTP itself has no transport security built in. It's a plaintext protocol open to manipulation and man in the middle attacks.

There are two variants of "secure" NTP. "Autokey", an authenticated variant of NTP, is broken. There's also a symmetric authentication, but that is impractical for widespread use, as it would require to negotiate a pre-shared key with the time server in advance.

NTPsec and Ntimed

In response to some vulnerabilities in the reference implementation of NTP two projects started developing "more secure" variants of NTP. Ntimed - a rewrite by Poul-Henning Kamp - and NTPsec, a fork of the original NTP software. Ntimed hasn't seen any development for several years, NTPsec seems active. NTPsec had some controversies with the developers of the original NTP reference implementation and its main developer is - to put it mildly - a controversial character.

But none of that matters. Both projects don't implement a "secure" NTP. The "sec" in NTPsec refers to the security of the code, not to the security of the protocol itself. It's still just an implementation of the old, insecure NTP.

Network Time Security

There's a draft for a new secure variant of NTP - called Network Time Security. It adds authentication to NTP.

However it's just a draft and it seems stalled. It hasn't been updated for over a year. In any case: It's not widely implemented and thus it's currently not usable. If that changes it may be an option.

tlsdate

tlsdate is a hack abusing the timestamp of the TLS protocol. The TLS timestamp of a server can be used to set the system time. This doesn't provide high accuracy, as the timestamp is only given in seconds, but it's good enough.

I've used and advocated tlsdate for a while, but it has some problems. The timestamp in the TLS handshake doesn't really have any meaning within the protocol, so several implementers decided to replace it with a random value. Unfortunately that is also true for the default server hardcoded into tlsdate.

Some Linux distributions still ship a package with a default server that will send random timestamps. The result is that your system time is set to a random value. I reported this to Ubuntu a while ago. It never got fixed, however the latest Ubuntu version Zesty Zapis (17.04) doesn't ship tlsdate any more.

Given that Google has shipped tlsdate for some in ChromeOS time it seems unlikely that Google will send randomized timestamps any time soon. Thus if you use tlsdate with www.google.com it should work for now. But it's no future-proof solution.

TLS 1.3 removes the TLS timestamp, so this whole concept isn't future-proof. Alternatively it supports using an HTTPS timestamp. The development of tlsdate has stalled, it hasn't seen any updates lately. It doesn't build with the latest version of OpenSSL (1.1) So it likely will become unusable soon.

OpenNTPD

OpenNTPDThe developers of OpenNTPD, the NTP daemon from OpenBSD, came up with a nice idea. NTP provides high accuracy, yet no security. Via HTTPS you can get a timestamp with low accuracy. So they combined the two: They use NTP to set the time, but they check whether the given time deviates significantly from an HTTPS host. So the HTTPS host provides safety boundaries for the NTP time.

This would be really nice, if there wasn't a catch: This feature depends on an API only provided by LibreSSL, the OpenBSD fork of OpenSSL. So it's not available on most common Linux systems. (Also why doesn't the OpenNTPD web page support HTTPS?)

Roughtime

Roughtime is a Google project. It fetches the time from multiple servers and uses some fancy cryptography to make sure that malicious servers get detected. If a roughtime server sends a bad time then the client gets a cryptographic proof of the malicious behavior, making it possible to blame and shame rogue servers. Roughtime doesn't provide the high accuracy that NTP provides.

From a security perspective it's the nicest of all solutions. However it fails the availability test. Google provides two reference implementations in C++ and in Go, but it's not packaged for any major Linux distribution. Google has an unfortunate tendency to use unusual dependencies and arcane build systems nobody else uses, so packaging it comes with some challenges.

One line bash script beats all existing solutions

As you can see none of the currently available solutions is really feasible and none fulfils the two mild requirements of authenticity and availability.

This is frustrating given that it's a really simple problem. In fact, it's so simple that you can solve it with a single line bash script:

date -s "$(curl -sI https://www.google.com/|grep -i 'date:'|sed -e 's/^.ate: //g')"This line sends an HTTPS request to Google, fetches the date header from the response and passes that to the date command line utility.

It provides authenticity via TLS. If the current system time is far off then this fails, as the TLS connection relies on the validity period of the current certificate. Google currently uses certificates with a validity of around three months. The accuracy is only in seconds, so it doesn't qualify for high accuracy requirements. There's no protection against a rogue Google server providing a wrong time.

Another potential security concern may be that Google might attack the parser of the date setting tool by serving a malformed date string. However I ran american fuzzy lop against it and it looks robust.

While this certainly isn't as accurate as NTP or as secure as roughtime, it's better than everything else that's available. I put this together in a slightly more advanced bash script called httpstime.

Posted by Hanno Böck

in Code, Cryptography, English, Linux, Security

at

17:07

| Comments (6)

| Trackbacks (0)

Tuesday, September 5. 2017

Abandoned Domain Takeover as a Web Security Risk

In the modern web it's extremely common to include thirdparty content on web pages. Youtube videos, social media buttons, ads, statistic tools, CDNs for fonts and common javascript files - there are plenty of good and many not so good reasons for this. What is often forgotten is that including other peoples content means giving other people control over your webpage. This is obviously particularly risky if it involves javascript, as this gives a third party full code execution rights in the context of your webpage.

I recently helped a person whose Wordpress blog had a problem: The layout looked broken. The cause was that the theme used a font from a web host - and that host was down. This was easy to fix. I was able to extract the font file from the Internet Archive and store a copy locally. But it made me thinking: What happens if you include third party content on your webpage and the service from which you're including it disappears?

I put together a simple script that would check webpages for HTML tags with the src attribute. If the src attribute points to an external host it checks if the host name actually can be resolved to an IP address. I ran that check on the Alexa Top 1 Million list. It gave me some interesting results. (This methodology has some limits, as it won't discover indirect src references or includes within javascript code, but it should be good enough to get a rough picture.)

Yahoo! Web Analytics was shut down in 2012, yet in 2017 Flickr still tried to use it

The webpage of Flickr included a script from Yahoo! Web Analytics. If you don't know Yahoo Analytics - that may be because it's been shut down in 2012. Although Flickr is a Yahoo! company it seems they haven't noted for quite a while. (The code is gone now, likely because I mentioned it on Twitter.) This example has no security impact as the domain still belongs to Yahoo. But it likely caused an unnecessary slowdown of page loads over many years.

Going through the list of domains I saw plenty of the things you'd expect: Typos, broken URLs, references to localhost and subdomains no longer in use. Sometimes I saw weird stuff, like references to javascript from browser extensions. My best explanation is that someone had a plugin installed that would inject those into pages and then created a copy of the page with the browser which later endet up being used as the real webpage.

I looked for abandoned domain names that might be worth registering. There weren't many. In most cases the invalid domains were hosts that didn't resolve, but that still belonged to someone. I found a few, but they were only used by one or two hosts.

Takeover of unregistered Azure subdomain

But then I saw a couple of domains referencing a javascript from a non-resolving host called piwiklionshare.azurewebsites.net. This is a subdomain from Microsoft's cloud service Azure. Conveniently Azure allows creating test accounts for free, so I was able to grab this subdomain without any costs.

Doing so allowed me to look at the HTTP log files and see what web pages included code from that subdomain. All of them were local newspapers from the US. 20 of them belonged to two adjacent IP addresses, indicating that they were all managed by the same company. I was able to contact them. While I never received any answer, shortly afterwards the code was gone from all those pages.

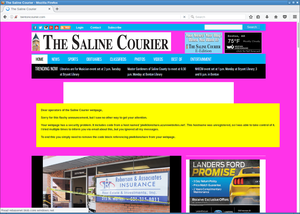

However the page with most hits was not so easy to contact. It was also a newspaper, the Saline Courier. I tried contacting them directly, their chief editor and their second chief editor. No answer.

After a while I wondered what I could do. Ultimately at some point Microsoft wouldn't let me host that subdomain any longer for free. I didn't want to risk that others could grab that subdomain, but at the same time I obviously also didn't want to pay in order to keep some web page safe whose owners didn't even bother to read my e-mails.

But of course I had another way of contacting them: I could execute Javascript on their web page and use that for some friendly defacement. After some contemplating whether that would be a legitimate thing to do I decided to go for it. I changed the background color to some flashy pink and send them a message. The page remained usable, but it was a message hard to ignore.

With some trouble on the way - first they broke their CSS, then they showed a PHP error message, then they reverted to the page with the defacement. But in the end they managed to remove the code.

There are still a couple of other pages that include that Javascript. Most of them however look like broken test webpages. The only legitimately looking webpage that still embeds that code is the Columbia Missourian. However they don't embed it on the start page, only on the error reporting form they have for every article. It's been several weeks now, they don't seem to care.

What happens to abandoned domains?

There are reasons to believe that what I showed here is only the tip of the iceberg. In many cases when services discontinue their domains don't simply disappear. If the domain name is valuable then almost certainly someone will try to register it immediately after it becomes available.

Someone trying to abuse abandoned domains could watch out for services going ot of business or widely referenced domains becoming available. Just to name an example: I found a couple of hosts referencing subdomains of compete.com. If you go to their web page, you can learn that the company Compete has discontinued its service in 2016. How long will they keep their domain? And what will happen with it afterwards? Whoever gets the domain can hijack all the web pages that still include javascript from it.

Be sure to know what you include

There are some obvious takeaways from this. If you include other peoples code on your web page then you should know what that means: You give them permission to execute whatever they want on your web page. This means you need to wonder how much you can trust them.

At the very least you should be aware who is allowed to execute code on your web page. If they shut down their business or discontinue the service you have been using then you obviously should remove that code immediately. And if you include code from a web statistics service that you never look at anyway you may simply want to remove that as well.

I recently helped a person whose Wordpress blog had a problem: The layout looked broken. The cause was that the theme used a font from a web host - and that host was down. This was easy to fix. I was able to extract the font file from the Internet Archive and store a copy locally. But it made me thinking: What happens if you include third party content on your webpage and the service from which you're including it disappears?

I put together a simple script that would check webpages for HTML tags with the src attribute. If the src attribute points to an external host it checks if the host name actually can be resolved to an IP address. I ran that check on the Alexa Top 1 Million list. It gave me some interesting results. (This methodology has some limits, as it won't discover indirect src references or includes within javascript code, but it should be good enough to get a rough picture.)

Yahoo! Web Analytics was shut down in 2012, yet in 2017 Flickr still tried to use it

The webpage of Flickr included a script from Yahoo! Web Analytics. If you don't know Yahoo Analytics - that may be because it's been shut down in 2012. Although Flickr is a Yahoo! company it seems they haven't noted for quite a while. (The code is gone now, likely because I mentioned it on Twitter.) This example has no security impact as the domain still belongs to Yahoo. But it likely caused an unnecessary slowdown of page loads over many years.

Going through the list of domains I saw plenty of the things you'd expect: Typos, broken URLs, references to localhost and subdomains no longer in use. Sometimes I saw weird stuff, like references to javascript from browser extensions. My best explanation is that someone had a plugin installed that would inject those into pages and then created a copy of the page with the browser which later endet up being used as the real webpage.

I looked for abandoned domain names that might be worth registering. There weren't many. In most cases the invalid domains were hosts that didn't resolve, but that still belonged to someone. I found a few, but they were only used by one or two hosts.

Takeover of unregistered Azure subdomain

But then I saw a couple of domains referencing a javascript from a non-resolving host called piwiklionshare.azurewebsites.net. This is a subdomain from Microsoft's cloud service Azure. Conveniently Azure allows creating test accounts for free, so I was able to grab this subdomain without any costs.

Doing so allowed me to look at the HTTP log files and see what web pages included code from that subdomain. All of them were local newspapers from the US. 20 of them belonged to two adjacent IP addresses, indicating that they were all managed by the same company. I was able to contact them. While I never received any answer, shortly afterwards the code was gone from all those pages.

However the page with most hits was not so easy to contact. It was also a newspaper, the Saline Courier. I tried contacting them directly, their chief editor and their second chief editor. No answer.

After a while I wondered what I could do. Ultimately at some point Microsoft wouldn't let me host that subdomain any longer for free. I didn't want to risk that others could grab that subdomain, but at the same time I obviously also didn't want to pay in order to keep some web page safe whose owners didn't even bother to read my e-mails.

But of course I had another way of contacting them: I could execute Javascript on their web page and use that for some friendly defacement. After some contemplating whether that would be a legitimate thing to do I decided to go for it. I changed the background color to some flashy pink and send them a message. The page remained usable, but it was a message hard to ignore.

With some trouble on the way - first they broke their CSS, then they showed a PHP error message, then they reverted to the page with the defacement. But in the end they managed to remove the code.

There are still a couple of other pages that include that Javascript. Most of them however look like broken test webpages. The only legitimately looking webpage that still embeds that code is the Columbia Missourian. However they don't embed it on the start page, only on the error reporting form they have for every article. It's been several weeks now, they don't seem to care.

What happens to abandoned domains?

There are reasons to believe that what I showed here is only the tip of the iceberg. In many cases when services discontinue their domains don't simply disappear. If the domain name is valuable then almost certainly someone will try to register it immediately after it becomes available.

Someone trying to abuse abandoned domains could watch out for services going ot of business or widely referenced domains becoming available. Just to name an example: I found a couple of hosts referencing subdomains of compete.com. If you go to their web page, you can learn that the company Compete has discontinued its service in 2016. How long will they keep their domain? And what will happen with it afterwards? Whoever gets the domain can hijack all the web pages that still include javascript from it.

Be sure to know what you include

There are some obvious takeaways from this. If you include other peoples code on your web page then you should know what that means: You give them permission to execute whatever they want on your web page. This means you need to wonder how much you can trust them.

At the very least you should be aware who is allowed to execute code on your web page. If they shut down their business or discontinue the service you have been using then you obviously should remove that code immediately. And if you include code from a web statistics service that you never look at anyway you may simply want to remove that as well.

Posted by Hanno Böck

in Code, English, Security, Webdesign

at

19:11

| Comments (3)

| Trackback (1)

Defined tags for this entry: azure, domain, javascript, newspaper, salinecourier, security, subdomain, websecurity

Thursday, July 20. 2017

How I tricked Symantec with a Fake Private Key

Lately, some attention was drawn to a widespread problem with TLS certificates. Many people are accidentally publishing their private keys. Sometimes they are released as part of applications, in Github repositories or with common filenames on web servers.

Lately, some attention was drawn to a widespread problem with TLS certificates. Many people are accidentally publishing their private keys. Sometimes they are released as part of applications, in Github repositories or with common filenames on web servers.If a private key is compromised, a certificate authority is obliged to revoke it. The Baseline Requirements – a set of rules that browsers and certificate authorities agreed upon – regulate this and say that in such a case a certificate authority shall revoke the key within 24 hours (Section 4.9.1.1 in the current Baseline Requirements 1.4.8). These rules exist despite the fact that revocation has various problems and doesn’t work very well, but that’s another topic.

I reported various key compromises to certificate authorities recently and while not all of them reacted in time, they eventually revoked all certificates belonging to the private keys. I wondered however how thorough they actually check the key compromises. Obviously one would expect that they cryptographically verify that an exposed private key really is the private key belonging to a certificate.

I registered two test domains at a provider that would allow me to hide my identity and not show up in the whois information. I then ordered test certificates from Symantec (via their brand RapidSSL) and Comodo. These are the biggest certificate authorities and they both offer short term test certificates for free. I then tried to trick them into revoking those certificates with a fake private key.

Forging a private key

To understand this we need to get a bit into the details of RSA keys. In essence a cryptographic key is just a set of numbers. For RSA a public key consists of a modulus (usually named N) and a public exponent (usually called e). You don’t have to understand their mathematical meaning, just keep in mind: They’re nothing more than numbers.

An RSA private key is also just numbers, but more of them. If you have heard any introductory RSA descriptions you may know that a private key consists of a private exponent (called d), but in practice it’s a bit more. Private keys usually contain the full public key (N, e), the private exponent (d) and several other values that are redundant, but they are useful to speed up certain things. But just keep in mind that a public key consists of two numbers and a private key is a public key plus some additional numbers. A certificate ultimately is just a public key with some additional information (like the host name that says for which web page it’s valid) signed by a certificate authority.

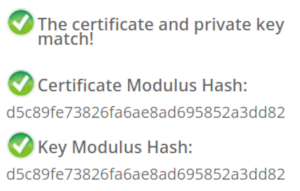

A naive check whether a private key belongs to a certificate could be done by extracting the public key parts of both the certificate and the private key for comparison. However it is quite obvious that this isn’t secure. An attacker could construct a private key that contains the public key of an existing certificate and the private key parts of some other, bogus key. Obviously such a fake key couldn’t be used and would only produce errors, but it would survive such a naive check.

I created such fake keys for both domains and uploaded them to Pastebin. If you want to create such fake keys on your own here’s a script. To make my report less suspicious I searched Pastebin for real, compromised private keys belonging to certificates. This again shows how problematic the leakage of private keys is: I easily found seven private keys for Comodo certificates and three for Symantec certificates, plus several more for other certificate authorities, which I also reported. These additional keys allowed me to make my report to Symantec and Comodo less suspicious: I could hide my fake key report within other legitimate reports about a key compromise.

Symantec revoked a certificate based on a forged private key

Comodo didn’t fall for it. They answered me that there is something wrong with this key. Symantec however answered me that they revoked all certificates – including the one with the fake private key.

Comodo didn’t fall for it. They answered me that there is something wrong with this key. Symantec however answered me that they revoked all certificates – including the one with the fake private key.No harm was done here, because the certificate was only issued for my own test domain. But I could’ve also fake private keys of other peoples' certificates. Very likely Symantec would have revoked them as well, causing downtimes for those sites. I even could’ve easily created a fake key belonging to Symantec’s own certificate.

The communication by Symantec with the domain owner was far from ideal. I first got a mail that they were unable to process my order. Then I got another mail about a “cancellation request”. They didn’t explain what really happened and that the revocation happened due to a key uploaded on Pastebin.

I then informed Symantec about the invalid key (from my “real” identity), claiming that I just noted there’s something wrong with it. At that point they should’ve been aware that they revoked the certificate in error. Then I contacted the support with my “domain owner” identity and asked why the certificate was revoked. The answer: “I wanted to inform you that your FreeSSL certificate was cancelled as during a log check it was determined that the private key was compromised.”

To summarize: Symantec never told the domain owner that the certificate was revoked due to a key leaked on Pastebin. I assume in all the other cases they also didn’t inform their customers. Thus they may have experienced a certificate revocation, but don’t know why. So they can’t learn and can’t improve their processes to make sure this doesn’t happen again. Also, Symantec still insisted to the domain owner that the key was compromised even after I already had informed them that the key was faulty.

How to check if a private key belongs to a certificate?

In case you wonder how you properly check whether a private key belongs to a certificate you may of course resort to a Google search. And this was fascinating – and scary – to me: I searched Google for “check if private key matches certificate”. I got plenty of instructions. Almost all of them were wrong. The first result is a page from SSLShopper. They recommend to compare the MD5 hash of the modulus. That they use MD5 is not the problem here, the problem is that this is a naive check only comparing parts of the public key. They even provide a form to check this. (That they ask you to put your private key into a form is a different issue on its own, but at least they have a warning about this and recommend to check locally.)

In case you wonder how you properly check whether a private key belongs to a certificate you may of course resort to a Google search. And this was fascinating – and scary – to me: I searched Google for “check if private key matches certificate”. I got plenty of instructions. Almost all of them were wrong. The first result is a page from SSLShopper. They recommend to compare the MD5 hash of the modulus. That they use MD5 is not the problem here, the problem is that this is a naive check only comparing parts of the public key. They even provide a form to check this. (That they ask you to put your private key into a form is a different issue on its own, but at least they have a warning about this and recommend to check locally.)Furthermore we get the same wrong instructions from the University of Wisconsin, Comodo (good that their engineers were smart enough not to rely on their own documentation), tbs internet (“SSL expert since 1996”), ShellHacks, IBM and RapidSSL (aka Symantec). A post on Stackexchange is the only result that actually mentions a proper check for RSA keys. Two more Stackexchange posts are not related to RSA, I haven’t checked their solutions in detail.

Going to Google results page two among some unrelated links we find more wrong instructions and tools from Symantec (Update 2020: Link offline), SSL247 (“Symantec Specialist Partner Website Security” - they learned from the best) and some private blog. A documentation by Aspera (belonging to IBM) at least mentions that you can check the private key, but in an unrelated section of the document. Also we get more tools that ask you to upload your private key and then not properly check it from SSLChecker.com, the SSL Store (Symantec “Website Security Platinum Partner”), GlobeSSL (“in SSL we trust”) and - well - RapidSSL.

Documented Security Vulnerability in OpenSSL

So if people google for instructions they’ll almost inevitably end up with non-working instructions or tools. But what about other options? Let’s say we want to automate this and have a tool that verifies whether a certificate matches a private key using OpenSSL. We may end up finding that OpenSSL has a function

x509_check_private_key() that can be used to “check the consistency of a private key with the public key in an X509 certificate or certificate request”. Sounds like exactly what we need, right?Well, until you read the full docs and find out that it has a BUGS section: “The check_private_key functions don't check if k itself is indeed a private key or not. It merely compares the public materials (e.g. exponent and modulus of an RSA key) and/or key parameters (e.g. EC params of an EC key) of a key pair.”

I think this is a security vulnerability in OpenSSL (discussion with OpenSSL here). And that doesn’t change just because it’s a documented security vulnerability. Notably there are downstream consumers of this function that failed to copy that part of the documentation, see for example the corresponding PHP function (the limitation is however mentioned in a comment by a user).

So how do you really check whether a private key matches a certificate?

Ultimately there are two reliable ways to check whether a private key belongs to a certificate. One way is to check whether the various values of the private key are consistent and then check whether the public key matches. For example a private key contains values p and q that are the prime factors of the public modulus N. If you multiply them and compare them to N you can be sure that you have a legitimate private key. It’s one of the core properties of RSA that it’s secure based on the assumption that it’s not feasible to calculate p and q from N.

You can use OpenSSL to check the consistency of a private key:

openssl rsa -in [privatekey] -checkFor my forged keys it will tell you:

RSA key error: n does not equal p qYou can then compare the public key, for example by calculating the so-called SPKI SHA256 hash:

openssl pkey -in [privatekey] -pubout -outform der | sha256sumopenssl x509 -in [certificate] -pubkey |openssl pkey -pubin -pubout -outform der | sha256sumAnother way is to sign a message with the private key and then verify it with the public key. You could do it like this:

openssl x509 -in [certificate] -noout -pubkey > pubkey.pemdd if=/dev/urandom of=rnd bs=32 count=1openssl rsautl -sign -pkcs -inkey [privatekey] -in rnd -out sigopenssl rsautl -verify -pkcs -pubin -inkey pubkey.pem -in sig -out checkcmp rnd checkrm rnd check sig pubkey.pemIf cmp produces no output then the signature matches.

As this is all quite complex due to OpenSSLs arcane command line interface I have put this all together in a script. You can pass a certificate and a private key, both in ASCII/PEM format, and it will do both checks.

Summary

Symantec did a major blunder by revoking a certificate based on completely forged evidence. There’s hardly any excuse for this and it indicates that they operate a certificate authority without a proper understanding of the cryptographic background.

Apart from that the problem of checking whether a private key and certificate match seems to be largely documented wrong. Plenty of erroneous guides and tools may cause others to fall for the same trap.

Update: Symantec answered with a blog post.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

16:58

| Comments (12)

| Trackback (1)

Defined tags for this entry: ca, certificate, certificateauthority, openssl, privatekey, rsa, ssl, symantec, tls, x509

Thursday, June 15. 2017

Don't leave Coredumps on Web Servers

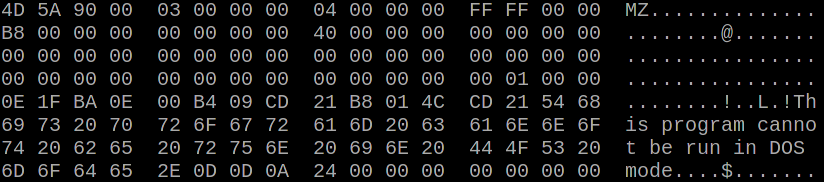

Coredumps are a feature of Linux and other Unix systems to analyze crashing software. If a software crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called

Coredumps are a feature of Linux and other Unix systems to analyze crashing software. If a software crashes, for example due to an invalid memory access, the operating system can save the current content of the application's memory to a file. By default it is simply called core.While this is useful for debugging purposes it can produce a security risk. If a web application crashes the coredump may simply end up in the web server's root folder. Given that its file name is known an attacker can simply download it via an URL of the form

https://example.org/core. As coredumps contain an application's memory they may expose secret information. A very typical example would be passwords.PHP used to crash relatively often. Recently a lot of these crash bugs have been fixed, in part because PHP now has a bug bounty program. But there are still situations in which PHP crashes. Some of them likely won't be fixed.

How to disclose?

With a scan of the Alexa Top 1 Million domains for exposed core dumps I found around 1.000 vulnerable hosts. I was faced with a challenge: How can I properly disclose this? It is obvious that I wouldn't write hundreds of manual mails. So I needed an automated way to contact the site owners.

Abusix runs a service where you can query the abuse contacts of IP addresses via a DNS query. This turned out to be very useful for this purpose. One could also imagine contacting domain owners directly, but that's not very practical. The domain whois databases have rate limits and don't always expose contact mail addresses in a machine readable way.

Using the abuse contacts doesn't reach all of the affected host operators. Some abuse contacts were nonexistent mail addresses, others didn't have abuse contacts at all. I also got all kinds of automated replies, some of them asking me to fill out forms or do other things, otherwise my message wouldn't be read. Due to the scale I ignored those. I feel that if people make it hard for me to inform them about security problems that's not my responsibility.

I took away two things that I changed in a second batch of disclosures. Some abuse contacts seem to automatically search for IP addresses in the abuse mails. I originally only included affected URLs. So I changed that to include the affected IPs as well.

In many cases I was informed that the affected hosts are not owned by the company I contacted, but by a customer. Some of them asked me if they're allowed to forward the message to them. I thought that would be obvious, but I made it explicit now. Some of them asked me that I contact their customers, which again, of course, is impractical at scale. And sorry: They are your customers, not mine.

How to fix and prevent it?

If you have a coredump on your web host, the obvious fix is to remove it from there. However you obviously also want to prevent this from happening again.

There are two settings that impact coredump creation: A limits setting, configurable via

/etc/security/limits.conf and ulimit and a sysctl interface that can be found under /proc/sys/kernel/core_pattern.The limits setting is a size limit for coredumps. If it is set to zero then no core dumps are created. To set this as the default you can add something like this to your

limits.conf:* soft core 0The sysctl interface sets a pattern for the file name and can also contain a path. You can set it to something like this:

/var/log/core/core.%e.%p.%h.%tThis would store all coredumps under

/var/log/core/ and add the executable name, process id, host name and timestamp to the filename. The directory needs to be writable by all users, you should use a directory with the sticky bit (chmod +t).If you set this via the proc file interface it will only be temporary until the next reboot. To set this permanently you can add it to

/etc/sysctl.conf:kernel.core_pattern = /var/log/core/core.%e.%p.%h.%tSome Linux distributions directly forward core dumps to crash analysis tools. This can be done by prefixing the pattern with a pipe (|). These tools like apport from Ubuntu or abrt from Fedora have also been the source of security vulnerabilities in the past. However that's a separate issue.

Look out for coredumps

My scans showed that this is a relatively common issue. Among popular web pages around one in a thousand were affected before my disclosure attempts. I recommend that pentesters and developers of security scan tools consider checking for this. It's simple: Just try download the

/core file and check if it looks like an executable. In most cases it will be an ELF file, however sometimes it may be a Mach-O (OS X) or an a.out file (very old Linux and Unix systems).Image credit: NASA/JPL-Université Paris Diderot

Posted by Hanno Böck

in English, Gentoo, Linux, Security

at

11:20

| Comments (0)

| Trackback (1)

Defined tags for this entry: core, coredump, crash, linux, php, segfault, vulnerability, webroot, websecurity, webserver

Friday, May 19. 2017

The Problem with OCSP Stapling and Must Staple and why Certificate Revocation is still broken

Update (2020-09-16): While three years old, people still find this blog post when looking for information about Stapling problems. For Apache the situation has improved considerably in the meantime: mod_md, which is part of recent apache releases, comes with a new stapling implementation which you can enable with the setting MDStapling on.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken

Well, here come the implementations. While a lot of protocols use TLS, the most common use case is the web and HTTPS. According to Netcraft statistics by far the biggest share of active sites on the Internet run on Apache (about 46%), followed by Nginx (about 20 %). It’s reasonable to say that if these technologies should provide a solution for revocation they should be usable with the major products in that area. On the server side this is only OCSP Stapling, as OCSP Must Staple only needs to be checked by the client.

What would you expect from a working OCSP Stapling implementation? It should try to avoid a situation where it’s unable to send out a valid OCSP response. Thus roughly what it should do is to fetch a valid OCSP response as soon as possible and cache it until it gets a new one or it expires. It should furthermore try to fetch a new OCSP response long before the old one expires (ideally several days). And it should never throw away a valid response unless it has a newer one. Google developer Ryan Sleevi wrote a detailed description of what a proper OCSP Stapling implementation could look like.

Apache does none of this.

If Apache tries to renew the OCSP response and gets an error from the OCSP server – e. g. because it’s currently malfunctioning – it will throw away the existing, still valid OCSP response and replace it with the error. It will then send out stapled OCSP errors. Which makes zero sense. Firefox will show an error if it sees this. This has been reported in 2014 and is still unfixed.

Now there’s an option in Apache to avoid this behavior: SSLStaplingReturnResponderErrors. It’s defaulting to on. If you switch it off you won’t get sane behavior (that is – use the still valid, cached response), instead Apache will disable Stapling for the time it gets errors from the OCSP server. That’s better than sending out errors, but it obviously makes using Must Staple a no go.

It gets even crazier. I have set this option, but this morning I still got complaints that Firefox users were seeing errors. That’s because in this case the OCSP server wasn’t sending out errors, it was completely unavailable. For that situation Apache has a feature that will fake a tryLater error to send out to the client. If you’re wondering how that makes any sense: It doesn’t. The “tryLater” error of OCSP isn’t useful at all in TLS, because you can’t try later during a handshake which only lasts seconds.

This is controlled by another option: SSLStaplingFakeTryLater. However if we read the documentation it says “Only effective if SSLStaplingReturnResponderErrors is also enabled.” So if we disabled SSLStapingReturnResponderErrors this shouldn’t matter, right? Well: The documentation is wrong.

There are more problems: Apache doesn’t get the OCSP responses on startup, it only fetches them during the handshake. This causes extra latency on the first connection and increases the risk of hitting a situation where you don’t have a valid OCSP response. Also cached OCSP responses don’t survive server restarts, they’re kept in an in-memory cache.

There’s currently no way to configure Apache to handle OCSP stapling in a reasonable way. Here’s the configuration I use, which will at least make sure that it won’t send out errors and cache the responses a bit longer than it does by default:

I’m less familiar with Nginx, but from what I hear it isn’t much better either. According to this blogpost it doesn’t fetch OCSP responses on startup and will send out the first TLS connections without stapling even if it’s enabled. Here’s a blog post that recommends to work around this by connecting to all configured hosts after the server has started.

To summarize: This is all a big mess. Both Apache and Nginx have OCSP Stapling implementations that are essentially broken. As long as you’re using either of those then enabling Must-Staple is a reliable way to shoot yourself in the foot and get into trouble. Don’t enable it if you plan to use Apache or Nginx.

Certificate revocation is broken. It has been broken since the invention of SSL and it’s still broken. OCSP Stapling and OCSP Must-Staple could fix it in theory. But that would require working and stable implementations in the most widely used server products.

Today the OCSP servers from Let’s Encrypt were offline for a while. This has caused far more trouble than it should have, because in theory we have all the technologies available to handle such an incident. However due to failures in how they are implemented they don’t really work.

We have to understand some background. Encrypted connections using the TLS protocol like HTTPS use certificates. These are essentially cryptographic public keys together with a signed statement from a certificate authority that they belong to a certain host name.

CRL and OCSP – two technologies that don’t work

Certificates can be revoked. That means that for some reason the certificate should no longer be used. A typical scenario is when a certificate owner learns that his servers have been hacked and his private keys stolen. In this case it’s good to avoid that the stolen keys and their corresponding certificates can still be used. Therefore a TLS client like a browser should check that a certificate provided by a server is not revoked.

That’s the theory at least. However the history of certificate revocation is a history of two technologies that don’t really work.

One method are certificate revocation lists (CRLs). It’s quite simple: A certificate authority provides a list of certificates that are revoked. This has an obvious limitation: These lists can grow. Given that a revocation check needs to happen during a connection it’s obvious that this is non-workable in any realistic scenario.

The second method is called OCSP (Online Certificate Status Protocol). Here a client can query a server about the status of a single certificate and will get a signed answer. This avoids the size problem of CRLs, but it still has a number of problems. Given that connections should be fast it’s quite a high cost for a client to make a connection to an OCSP server during each handshake. It’s also concerning for privacy, as it gives the operator of an OCSP server a lot of information.

However there’s a more severe problem: What happens if an OCSP server is not available? From a security point of view one could say that a certificate that can’t be OCSP-checked should be considered invalid. However OCSP servers are far too unreliable. So practically all clients implement OCSP in soft fail mode (or not at all). Soft fail means that if the OCSP server is not available the certificate is considered valid.

That makes the whole OCSP concept pointless: If an attacker tries to abuse a stolen, revoked certificate he can just block the connection to the OCSP server – and thus a client can’t learn that it’s revoked. Due to this inherent security failure Chrome decided to disable OCSP checking altogether. As a workaround they have something called CRLsets and Mozilla has something similar called OneCRL, which is essentially a big revocation list for important revocations managed by the browser vendor. However this is a weak workaround that doesn’t cover most certificates.

OCSP Stapling and Must Staple to the rescue?

There are two technologies that could fix this: OCSP Stapling and Must-Staple.

OCSP Stapling moves the querying of the OCSP server from the client to the server. The server gets OCSP replies and then sends them within the TLS handshake. This has several advantages: It avoids the latency and privacy implications of OCSP. It also allows surviving short downtimes of OCSP servers, because a TLS server can cache OCSP replies (they’re usually valid for several days).

However it still does not solve the security issue: If an attacker has a stolen, revoked certificate it can be used without Stapling. The browser won’t know about it and will query the OCSP server, this request can again be blocked by the attacker and the browser will accept the certificate.

Therefore an extension for certificates has been introduced that allows us to require Stapling. It’s usually called OCSP Must-Staple and is defined in RFC 7633 (although the RFC doesn’t mention the name Must-Staple, which can cause some confusion). If a browser sees a certificate with this extension that is used without OCSP Stapling it shouldn’t accept it.

So we should be fine. With OCSP Stapling we can avoid the latency and privacy issues of OCSP and we can avoid failing when OCSP servers have short downtimes. With OCSP Must-Staple we fix the security problems. No more soft fail. All good, right?

The OCSP Stapling implementations of Apache and Nginx are broken

Well, here come the implementations. While a lot of protocols use TLS, the most common use case is the web and HTTPS. According to Netcraft statistics by far the biggest share of active sites on the Internet run on Apache (about 46%), followed by Nginx (about 20 %). It’s reasonable to say that if these technologies should provide a solution for revocation they should be usable with the major products in that area. On the server side this is only OCSP Stapling, as OCSP Must Staple only needs to be checked by the client.

What would you expect from a working OCSP Stapling implementation? It should try to avoid a situation where it’s unable to send out a valid OCSP response. Thus roughly what it should do is to fetch a valid OCSP response as soon as possible and cache it until it gets a new one or it expires. It should furthermore try to fetch a new OCSP response long before the old one expires (ideally several days). And it should never throw away a valid response unless it has a newer one. Google developer Ryan Sleevi wrote a detailed description of what a proper OCSP Stapling implementation could look like.

Apache does none of this.

If Apache tries to renew the OCSP response and gets an error from the OCSP server – e. g. because it’s currently malfunctioning – it will throw away the existing, still valid OCSP response and replace it with the error. It will then send out stapled OCSP errors. Which makes zero sense. Firefox will show an error if it sees this. This has been reported in 2014 and is still unfixed.

Now there’s an option in Apache to avoid this behavior: SSLStaplingReturnResponderErrors. It’s defaulting to on. If you switch it off you won’t get sane behavior (that is – use the still valid, cached response), instead Apache will disable Stapling for the time it gets errors from the OCSP server. That’s better than sending out errors, but it obviously makes using Must Staple a no go.

It gets even crazier. I have set this option, but this morning I still got complaints that Firefox users were seeing errors. That’s because in this case the OCSP server wasn’t sending out errors, it was completely unavailable. For that situation Apache has a feature that will fake a tryLater error to send out to the client. If you’re wondering how that makes any sense: It doesn’t. The “tryLater” error of OCSP isn’t useful at all in TLS, because you can’t try later during a handshake which only lasts seconds.

This is controlled by another option: SSLStaplingFakeTryLater. However if we read the documentation it says “Only effective if SSLStaplingReturnResponderErrors is also enabled.” So if we disabled SSLStapingReturnResponderErrors this shouldn’t matter, right? Well: The documentation is wrong.

There are more problems: Apache doesn’t get the OCSP responses on startup, it only fetches them during the handshake. This causes extra latency on the first connection and increases the risk of hitting a situation where you don’t have a valid OCSP response. Also cached OCSP responses don’t survive server restarts, they’re kept in an in-memory cache.

There’s currently no way to configure Apache to handle OCSP stapling in a reasonable way. Here’s the configuration I use, which will at least make sure that it won’t send out errors and cache the responses a bit longer than it does by default:

SSLStaplingCache shmcb:/var/tmp/ocsp-stapling-cache/cache(128000000)

SSLUseStapling on

SSLStaplingResponderTimeout 2

SSLStaplingReturnResponderErrors off

SSLStaplingFakeTryLater off

SSLStaplingStandardCacheTimeout 86400I’m less familiar with Nginx, but from what I hear it isn’t much better either. According to this blogpost it doesn’t fetch OCSP responses on startup and will send out the first TLS connections without stapling even if it’s enabled. Here’s a blog post that recommends to work around this by connecting to all configured hosts after the server has started.

To summarize: This is all a big mess. Both Apache and Nginx have OCSP Stapling implementations that are essentially broken. As long as you’re using either of those then enabling Must-Staple is a reliable way to shoot yourself in the foot and get into trouble. Don’t enable it if you plan to use Apache or Nginx.

Certificate revocation is broken. It has been broken since the invention of SSL and it’s still broken. OCSP Stapling and OCSP Must-Staple could fix it in theory. But that would require working and stable implementations in the most widely used server products.

Posted by Hanno Böck

in Cryptography, English, Linux, Security

at

23:25

| Comments (5)

| Trackback (1)

Defined tags for this entry: apache, certificates, cryptography, encryption, https, letsencrypt, nginx, ocsp, ocspstapling, revocation, ssl, tls

Wednesday, April 19. 2017

Passwords in the Bug Reports (Owncloud/Nextcloud)

A while ago I wanted to report a bug in one of Nextcloud's apps. They use the Github issue tracker, after creating a new issue I was welcomed with a long list of things they wanted to know about my installation. I filled the info to the best of my knowledge, until I was asked for this:

A while ago I wanted to report a bug in one of Nextcloud's apps. They use the Github issue tracker, after creating a new issue I was welcomed with a long list of things they wanted to know about my installation. I filled the info to the best of my knowledge, until I was asked for this:The content of config/config.php:

Which made me stop and wonder: The config file probably contains sensitive information like passwords. I quickly checked, and yes it does. It depends on the configuration of your Nextcloud installation, but in many cases the configuration contains variables for the database password (dbpassword), the smtp mail server password (mail_smtppassword) or both. Combined with other information from the config file (e. g. it also contains the smtp hostname) this could be very valuable information for an attacker.

A few lines later the bug reporting template has a warning (“Without the database password, passwordsalt and secret”), though this is incomplete, as it doesn't mention the smtp password. It also provides an alternative way of getting the content of the config file via the command line.

However... you know, this is the Internet. People don't read the fineprint. If you ask them to paste the content of their config file they might just do it.

User's passwords publicly accessible

The issues on github are all public and the URLs are of a very simple form and numbered (e. g. https://github.com/nextcloud/calendar/issues/[number]), so downloading all issues from a project is trivial. Thus with a quick check I could confirm that some users indeed posted real looking passwords to the bug tracker.

Nextcoud is a fork of Owncloud, so I checked that as well. The bug reporting template contained exactly the same words, probably Nextcloud just copied it over when they forked. So I reported the issue to both Owncloud and Nextcloud via their HackerOne bug bounty programs. That was in January.

I proposed that both projects should go through their past bug reports and remove everything that looks like a password or another sensitive value. I also said that I think asking for the content of the configuration file is inherently dangerous and should be avoided. To allow users to share configuration options in a safe way I proposed to offer an option similar to the command line tool (which may not be available or usable for all users) in the web interface.

The reaction wasn't overwhelming. Apart from confirming that both projects acknowledged the problem nothing happened for quite a while. During FOSDEM I reached out to members of both projects and discussed the issue in person. Shortly after that I announced that I intended to disclose this issue three months after the initial report.

Disclosure deadline was nearing with passwords still public

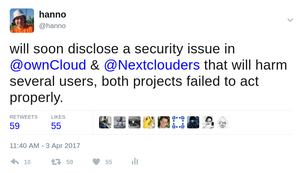

The deadline was nearing and I didn't receive any report on any actions being taken by Owncloud or Nextcloud. I sent out this tweet which received quite some attention (and I'm sorry that some people got worried about a vulnerability in Owncloud/Nextcloud itself, I got a couple of questions):

In all fairness to NextCloud, they had actually started scrubbing data from the existing bug reports, they just hadn't informed me. After the tweet Nextcloud gave me an update and Owncloud asked for a one week extension of the disclosure deadline which I agreed to.

The outcome by now isn't ideal. Both projects have scrubbed all obvious passwords from existing bug reports, although I still find values where it's not entirely clear whether they are replacement values or just very bad passwords (e. g. things like “123456”, but you might argue that people using such passwords have other problems).

Nextcloud has changed the wording of the bug reporting template. The new template still asks for the config file, but it mentions the safer command line option first and has the warning closer to the mentioning of the config. This is still far from ideal and I wouldn't be surprised if people continue pasting their passwords. However Nextcloud developers have indicated in the HackerOne discussion that they might pick up my idea of offering a GUI version to export a scrubbed config file. Owncloud has changed nothing yet.

If you have reported bugs to Owncloud or Nextcloud in the past and are unsure whether you may have pasted your password it's probably best to change it. Even if it's been removed now it may still be available within search engine caches or it might have already been recorded by an attacker.

Saturday, April 8. 2017

And then I saw the Password in the Stack Trace

I want to tell a little story here. I am usually relatively savvy in IT security issues. Yet I was made aware of a quite severe mistake today that caused a security issue in my web page. I want to learn from mistakes, but maybe also others can learn something as well.

I have a private web page. Its primary purpose is to provide a list of links to articles I wrote elsewhere. It's probably not a high value target, but well, being an IT security person I wanted to get security right.

Of course the page uses TLS-encryption via HTTPS. It also uses HTTP Strict Transport Security (HSTS), TLS 1.2 with an AEAD and forward secrecy, has a CAA record and even HPKP (although I tend to tell people that they shouldn't use HPKP, because it's too easy to get wrong). Obviously it has an A+ rating on SSL Labs.

Surely I thought about Cross Site Scripting (XSS). While an XSS on the page wouldn't be very interesting - it doesn't have any kind of login or backend and doesn't use cookies - and also quite unlikely – no user supplied input – I've done everything to prevent XSS. I set a strict Content Security Policy header and other security headers. I have an A-rating on securityheaders.io (no A+, because after several HPKP missteps I decided to use a short timeout).

I also thought about SQL injection. While an SQL injection would be quite unlikely – you remember, no user supplied input – I'm using prepared statements, so SQL injections should be practically impossible.

All in all I felt that I have a pretty secure web page. So what could possibly go wrong?

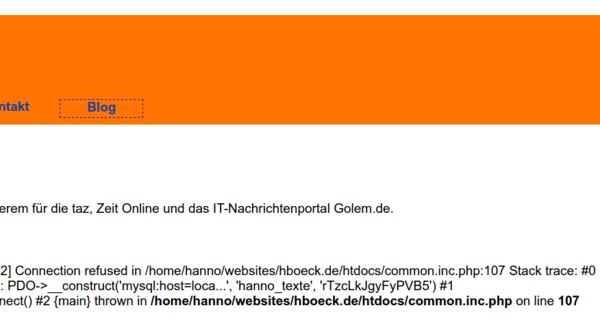

Well, this morning someone send me this screenshot:

And before you ask: Yes, this was the real database password. (I changed it now.)

So what happened? The mysql server was down for a moment. It had crashed for reasons unrelated to this web page. I had already taken care of that and hadn't noted the password leak. The crashed mysql server subsequently let to an error message by PDO (PDO stands for PHP Database Object and is the modern way of doing database operations in PHP).

The PDO error message contains a stack trace of the function call including function parameters. And this led to the password leak: The password is passed to the PDO constructor as a parameter.

There are a few things to note here. First of all for this to happen the PHP option display_errors needs to be enabled. It is recommended to disable this option in production systems, however it is enabled by default. (Interesting enough the PHP documentation about display_errors doesn't even tell you what the default is.)

display_errors wasn't enabled by accident. It was actually disabled in the past. I made a conscious decision to enable it. Back when we had display_errors disabled on the server I once tested a new PHP version where our custom config wasn't enabled yet. I noticed several bugs in PHP pages. So my rationale was that disabling display_errors hides bugs, thus I'd better enable it. In hindsight it was a bad idea. But well... hindsight is 20/20.

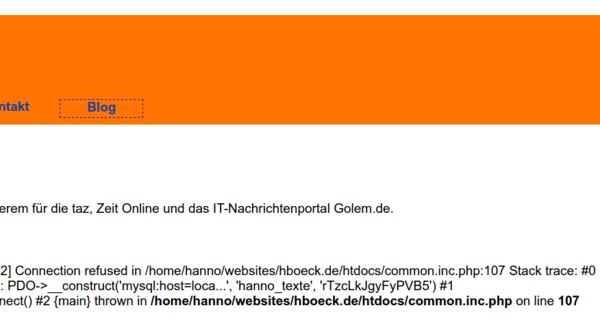

The second thing to note is that this only happens because PDO throws an exception that is unhandled. To be fair, the PDO documentation mentions this risk. Other kinds of PHP bugs don't show stack traces. If I had used mysqli – the second supported API to access MySQL databases in PHP – the error message would've looked like this:

While this still leaks the username, it's much less dangerous. This is a subtlety that is far from obvious. PHP functions have different modes of error reporting. Object oriented functions – like PDO – throw exceptions. Unhandled exceptions will lead to stack traces. Other functions will just report error messages without stack traces.

If you wonder about the impact: It's probably minor. People could've seen the password, but I haven't noticed any changes in the database. I obviously changed it immediately after being notified. I'm pretty certain that there is no way that a database compromise could be used to execute code within the web page code. It's far too simple for that.

Of course there are a number of ways this could've been prevented, and I've implemented several of them. I'm now properly handling exceptions from PDO. I also set a general exception handler that will inform me (and not the web page visitor) if any other unhandled exceptions occur. And finally I've changed the server's default to display_errors being disabled.

While I don't want to shift too much blame here, I think PHP is making this far too easy to happen. There exists a bug report about the leaking of passwords in stack traces from 2014, but nothing happened. I think there are a variety of unfortunate decisions made by PHP. If display_errors is dangerous and discouraged for production systems then it shouldn't be enabled by default.

PHP could avoid sending stack traces by default and make this a separate option from display_errors. It could also introduce a way to make exceptions fatal for functions so that calling those functions is prevented outside of a try/catch block that handles them. (However that obviously would introduce compatibility problems with existing applications, as Craig Young pointed out to me.)

So finally maybe a couple of takeaways:

I have a private web page. Its primary purpose is to provide a list of links to articles I wrote elsewhere. It's probably not a high value target, but well, being an IT security person I wanted to get security right.

Of course the page uses TLS-encryption via HTTPS. It also uses HTTP Strict Transport Security (HSTS), TLS 1.2 with an AEAD and forward secrecy, has a CAA record and even HPKP (although I tend to tell people that they shouldn't use HPKP, because it's too easy to get wrong). Obviously it has an A+ rating on SSL Labs.

Surely I thought about Cross Site Scripting (XSS). While an XSS on the page wouldn't be very interesting - it doesn't have any kind of login or backend and doesn't use cookies - and also quite unlikely – no user supplied input – I've done everything to prevent XSS. I set a strict Content Security Policy header and other security headers. I have an A-rating on securityheaders.io (no A+, because after several HPKP missteps I decided to use a short timeout).

I also thought about SQL injection. While an SQL injection would be quite unlikely – you remember, no user supplied input – I'm using prepared statements, so SQL injections should be practically impossible.

All in all I felt that I have a pretty secure web page. So what could possibly go wrong?

Well, this morning someone send me this screenshot:

And before you ask: Yes, this was the real database password. (I changed it now.)

So what happened? The mysql server was down for a moment. It had crashed for reasons unrelated to this web page. I had already taken care of that and hadn't noted the password leak. The crashed mysql server subsequently let to an error message by PDO (PDO stands for PHP Database Object and is the modern way of doing database operations in PHP).

The PDO error message contains a stack trace of the function call including function parameters. And this led to the password leak: The password is passed to the PDO constructor as a parameter.

There are a few things to note here. First of all for this to happen the PHP option display_errors needs to be enabled. It is recommended to disable this option in production systems, however it is enabled by default. (Interesting enough the PHP documentation about display_errors doesn't even tell you what the default is.)

display_errors wasn't enabled by accident. It was actually disabled in the past. I made a conscious decision to enable it. Back when we had display_errors disabled on the server I once tested a new PHP version where our custom config wasn't enabled yet. I noticed several bugs in PHP pages. So my rationale was that disabling display_errors hides bugs, thus I'd better enable it. In hindsight it was a bad idea. But well... hindsight is 20/20.

The second thing to note is that this only happens because PDO throws an exception that is unhandled. To be fair, the PDO documentation mentions this risk. Other kinds of PHP bugs don't show stack traces. If I had used mysqli – the second supported API to access MySQL databases in PHP – the error message would've looked like this:

PHP Warning: mysqli::__construct(): (HY000/1045): Access denied for user 'test'@'localhost' (using password: YES) in /home/[...]/mysqli.php on line 3While this still leaks the username, it's much less dangerous. This is a subtlety that is far from obvious. PHP functions have different modes of error reporting. Object oriented functions – like PDO – throw exceptions. Unhandled exceptions will lead to stack traces. Other functions will just report error messages without stack traces.

If you wonder about the impact: It's probably minor. People could've seen the password, but I haven't noticed any changes in the database. I obviously changed it immediately after being notified. I'm pretty certain that there is no way that a database compromise could be used to execute code within the web page code. It's far too simple for that.

Of course there are a number of ways this could've been prevented, and I've implemented several of them. I'm now properly handling exceptions from PDO. I also set a general exception handler that will inform me (and not the web page visitor) if any other unhandled exceptions occur. And finally I've changed the server's default to display_errors being disabled.