Wednesday, May 4. 2011

Diploma thesis on RSA-PSS finished

Today I submitted my diploma thesis to my university. The thesis summarizes several months of investigation of the Probabilistic Signature Scheme (PSS). Traditionally, RSA signatures are created by hashing the message and then signing the hash. PSS is an improved, provably secure scheme for preparing a message before signing. The main focus was to investigate where PSS is implemented and used in real-world cryptographic applications, with a special focus on X.509.

During my work on that, I also implemented PSS signatures for the nss library in the Google Summer of Code 2010.

The thesis itself (including PDF and LaTeX sources), patches for nss and everything else relevant can be found at http://rsapss.hboeck.de.

Thursday, April 21. 2011

X.509 / SSL certificate test cases

HTTPS is likely the most widely used cryptographic protocol. It's based on X.509 certificates. There's a lively debate about how useful this concept is at all, mainly prompted by the interesting findings of the EFF SSL Observatory. But that won't be my point today.

Pretty much all webpage certificates use RSA and sadly, the vast majority still use insecure hash algorithms. But it is little known that the X.509 standards support a whole bunch of other public key algorithms.

I've set up a page with a couple of test cases for less often used algorithm combinations. At the moment, it's mainly focused on RSASSA-PSS, but I plan to add elliptic curve algorithms soon. As I won't get any certificate authority to sign certificates for me with anything other than classic RSA, I created my own testing root CA.

I'd be very interested to get some feedback. If you happen to have some interesting OS/browser combination, please import the root certificate and send me a screenshot where I can see how many green ticks there are (post a link to the screenshot in the comments or send it via email).

At the moment, I'm especially looking for people to test:

- Internet Explorer 9 on Windows 7

- Safari on latest MacOS X

- Internal browser on iPhone (I don't know if it's possible to install a new certificate authority there)

Saturday, February 26. 2011

Playing with the EFF SSL Observatory

The Electronic Frontier Foundation is running a fascinating project called the SSL Observatory. What they basically do is quite simple: They collected all SSL certificates they could get via https (by scanning all possible IPs), put them in a database and made statistics with them.

For an introduction, watch their talk at the 27C3 - it's worth it. For example, they found a couple of "Extended Validation"-Certificates that clearly violated the rules for extended validation, including one 512-bit EV-certificate.

The great thing is: They provide the full MySQL database for download. I took the time to import it locally and am now able to run my own queries against it.

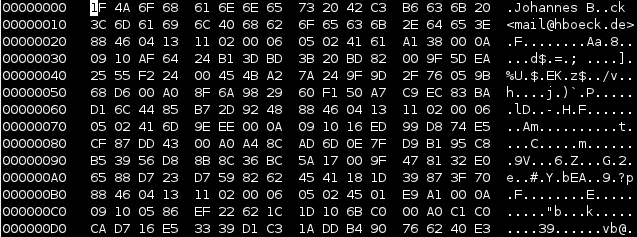

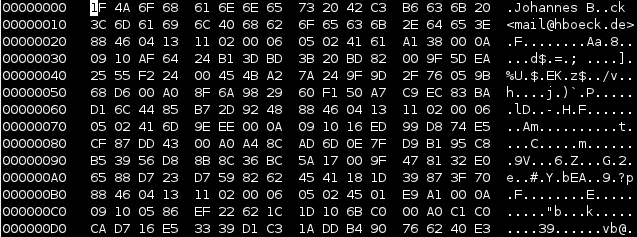

Let's show some examples: I'm interested in crypto algorithms, so I wanted to know which ones are actually used in the wild. My query:

    SELECT `Signature Algorithm`, count(*) FROM valid_certs GROUP BY `Signature Algorithm` ORDER BY count(*);

shows all signature algorithms used on the certificates.

And the result:

    +--------------------------+----------+
    | Signature Algorithm      | count(*) |
    +--------------------------+----------+
    | sha512WithRSAEncryption  |        1 |
    | sha1WithRSA              |        1 |
    | md2WithRSAEncryption     |        4 |
    | sha256WithRSAEncryption  |       62 |
    | md5WithRSAEncryption     |    29958 |
    | sha1WithRSAEncryption    |  1503333 |
    +--------------------------+----------+

Nothing very surprising here. It seems nobody is using anything other than RSA. The most popular hash algorithm is SHA-1, followed by MD5. The transition to SHA-256 seems to be going very slowly (by the way, the most common argument I heard when asking CAs for SHA-256 certificates was that Windows XP before Service Pack 3 doesn't support it). The four MD2 certificates are interesting: old as it is, MD2 is still more secure than MD5 and provides a security margin similar to SHA-1, though support for it was removed from a couple of security libraries some time ago.
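To put the valid_certs numbers into proportion, the counts can be grouped by hash function. The following is a minimal Python sketch and not part of the original analysis. The function name `hash_shares` is made up for illustration, and it assumes the hash name is simply the part of the signature algorithm name before `With`, which holds for the six rows of the valid_certs result but not for every name that shows up in all_certs.

```python
# Counts copied from the valid_certs query result in this post.
valid_certs = {
    "sha512WithRSAEncryption": 1,
    "sha1WithRSA": 1,
    "md2WithRSAEncryption": 4,
    "sha256WithRSAEncryption": 62,
    "md5WithRSAEncryption": 29958,
    "sha1WithRSAEncryption": 1503333,
}


def hash_shares(counts):
    """Group counts by hash name and return (hash, count, percent) rows, largest first.

    Assumption: the hash name is the text before 'With' in the algorithm name.
    """
    per_hash = {}
    for name, n in counts.items():
        hash_name = name.split("With")[0]
        per_hash[hash_name] = per_hash.get(hash_name, 0) + n
    total = sum(per_hash.values())
    rows = [(h, n, 100.0 * n / total) for h, n in per_hash.items()]
    return sorted(rows, key=lambda row: row[1], reverse=True)


for hash_name, n, pct in hash_shares(valid_certs):
    print(f"{hash_name:8} {n:9d} {pct:8.4f}%")
```

Merging `sha1WithRSA` and `sha1WithRSAEncryption` into one row is a deliberate simplification here, since both names describe RSA signatures over a SHA-1 hash.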

This query was only for the valid certs, meaning they were signed by any browser-supported certificate authority. Now I run the same query on the all_certs table, which contains every cert, including expired, self-signed or otherwise invalid ones:

    +-------------------------------------------------------+----------+
    | Signature Algorithm                                   | count(*) |
    +-------------------------------------------------------+----------+
    | 1.2.840.113549.27.1.5                                 |        1 |
    | sha1                                                  |        1 |
    | dsaEncryption                                         |        1 |
    | 1.3.6.1.4.1.5849.1.3.2                                |        1 |
    | md5WithRSAEncryption ANDALSO md5WithRSAEncryption     |        1 |
    | ecdsa-with-Specified                                  |        1 |
    | dsaWithSHA1-old                                       |        2 |
    | itu-t ANDALSO itu-t                                   |        2 |
    | dsaWithSHA                                            |        3 |
    | 1.2.840.113549.1.1.10                                 |        4 |
    | ecdsa-with-SHA384                                     |        5 |
    | ecdsa-with-SHA512                                     |        5 |
    | ripemd160WithRSA                                      |        9 |
    | md4WithRSAEncryption                                  |       15 |
    | sha384WithRSAEncryption                               |       24 |
    | GOST R 34.11-94 with GOST R 34.10-94                  |       25 |
    | shaWithRSAEncryption                                  |       50 |
    | sha1WithRSAEncryption ANDALSO sha1WithRSAEncryption   |       72 |
    | rsaEncryption                                         |       86 |
    | md2WithRSAEncryption                                  |      120 |
    | GOST R 34.11-94 with GOST R 34.10-2001                |      378 |
    | sha512WithRSAEncryption                               |      513 |
    | sha256WithRSAEncryption                               |     2542 |
    | dsaWithSHA1                                           |     2703 |
    | sha1WithRSA                                           |    60969 |
    | md5WithRSAEncryption                                  |  1354658 |
    | sha1WithRSAEncryption                                 |  4196367 |
    +-------------------------------------------------------+----------+

It seems quite a few people are experimenting with DSA signatures. The number of GOST certificates is interesting; GOST is a set of cryptography standards from the former Soviet Union. The number of people trying to use elliptic curves seems really low (compared to the popularity they have, and given that they may be a good choice for anyone who cares about SSL performance). For the algorithms that show only numbers, 1.2.840.113549.1.1.10 is RSASSA-PSS (not detected by current OpenSSL release versions), 1.3.6.1.4.1.5849.1.3.2 is also a GOST variant (GOST3411withECGOST3410), and 1.2.840.113549.27.1.5 is unknown to Google, so it must be something very special.

Posted by Hanno Böck in Computer culture, Cryptography, English, Science, Security at 22:40 | Comments (0) | Trackbacks (0)

Defined tags for this entry: algorithm, certificate, cryptography, eff, observatory, pss, rsa, security, ssl

Wednesday, January 5. 2011

How to create a PGP/GPG-key free of SHA-1

If you've read my last blog entry, you saw that I was struggling a bit with the fact that I was unable to create a PGP key without SHA-1. This is a bit tricky, as there are various places where hash functions are used within a PGP key:

1. The key self-signatures and signatures on other keys. Every key has user IDs that are signed with the master key itself. This is to prove that the names and mail addresses in the key belong to the keyholder and can't be replaced by a malicious attacker.

2. The signatures on messages, for example emails.

3. The preference inside the key - this indicates to other people what signature algorithms you would prefer if they send messages to you.

4. The fingerprint.

1 is controlled by the setting cert-digest-algo in the file gpg.conf (for both self-signatures and signatures on other keys). 2 is controlled by the setting personal-digest-preferences. So you should add these two lines to your gpg.conf, preferably before you create your own key (if you intend to create one; don't bother if you want to stick with your current one):

    personal-digest-preferences SHA256
    cert-digest-algo SHA256

3 defaults to SHA256 if you generate your key with a recent GnuPG version. You can check it with gpg --edit-key [your key ID] and then showpref. For 4, I think it can't be changed at all (though I think it doesn't pose a security threat with regard to collision attacks - still, it should be changed at some point).

It is also not really trivial to check which algorithms are used. For message signatures, the algorithm is shown if you verify them with gpg -v --verify [filename]. For key signatures, I found no option to do that - but a workaround: Export the key whose signatures you'd like to check with gpg --export --armor [key ID] > filename.asc. Then parse the exported file with gpg -vv filename.asc. It'll show you blocks like this:

    :signature packet: algo 1, keyid A5880072BBB51E42
        version 4, created 1294258192, md5len 0, sigclass 0x13
        digest algo 8, begin of digest 3e c3

The digest algo 8 is what you're looking for. 1 means MD5, 2 means SHA1, 8 means SHA256. Other values can be looked up in include/cipher.h in the source code. No, that's not user friendly. But I found no easier way.
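Looking up the digest numbers by hand gets tedious for keys with many signatures. As a rough sketch (not a GnuPG feature, and the function name `digest_algos` is made up here), a few lines of Python can translate every "digest algo N" line of saved gpg -vv output into a name. The ID table is taken from the hash algorithm registry in RFC 4880, section 9.4; the exact output format of gpg is assumed to match the block shown in this post.

```python
import re

# Hash algorithm IDs as listed in RFC 4880, section 9.4.
DIGEST_ALGOS = {
    1: "MD5",
    2: "SHA1",
    3: "RIPEMD160",
    8: "SHA256",
    9: "SHA384",
    10: "SHA512",
    11: "SHA224",
}


def digest_algos(listing):
    """Return the hash name for every 'digest algo N' entry found in the listing text."""
    ids = (int(m) for m in re.findall(r"digest algo (\d+)", listing))
    return [DIGEST_ALGOS.get(i, f"unknown ({i})") for i in ids]


# Sample block copied from this post.
sample = """:signature packet: algo 1, keyid A5880072BBB51E42
    version 4, created 1294258192, md5len 0, sigclass 0x13
    digest algo 8, begin of digest 3e c3"""

print(digest_algos(sample))
```

To use it on a real key, one could redirect the output of gpg -vv filename.asc into a text file and pass the file contents to the function.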

The big question remains: Why is this so complicated and why doesn't GnuPG just default to SHA256? I don't know the answer.

(Please also have a look at this blog entry from Debian about the topic)

Sunday, December 26. 2010

Goodbye 3DBD3B20, welcome BBB51E42

Having used my PGP key 3DBD3B20 for almost eight years, it's finally time for a new one: 4F9F43A9. The old primary key was a 1024 bit DSA key, which had two drawbacks:

1. 1024 bit keys for DLP or factoring based algorithms are considered insecure.

2. It's impossible to set the used hash algorithm to anything beyond SHA-1.

My new key has a key size of 4096 bits (2048 bits has been the GnuPG default since 2.0.13 and should be quite sufficient, but I wanted some extra security) and the default hash algorithm preference is SHA-256. I had to make a couple of decisions for my name in the key:

1. I'm usually called Hanno, but my real/official name is Johannes.

2. My surname has a special character (ö) in it, which can be represented as oe.

In my previous keys, I've mixed this. I decided against this for the new key, because both my unofficial first name Hanno and my umlaut-converted surname Boeck are part of my mail address, so people should still be able to find my key if they're searching for that.

Another decision was how long I wanted my key to be valid. I've decided to give it an expiration date, but a fairly long one: 10 years from now.

I've signed my new key with my old key, so if you've signed my old one, you should be able to verify the new one. I leave it up to you whether you sign my new key right away or want to repeat the signing procedure. I'll start from scratch and won't automatically sign any keys with the new key that I've signed with the old one. If you want to key-sign with me, you may find me at the 27C3 within the next few days.

My old key will remain valid for a while; at some point in the future I'll probably revoke it.

Update: I just found out that having a key without SHA-1 is trickier than I thought. The self-signatures were still SHA-1. I could re-do the self-signatures and revoke the old ones, but that'd clutter the key with a lot of useless cruft and as the new key wasn't around long and didn't get any signatures I couldn't get easily again, I decided to start over again: The new key is BBB51E42 and the other one will be revoked.

I'll write another blog entry to document how you can create your own SHA-256 only key.

Posted by Hanno Böck in Cryptography, English, Gentoo, Linux, Security at 18:16 | Comments (3) | Trackbacks (0)

Defined tags for this entry: cryptography, datenschutz, encryption, gnupg, gpg, key, pgp, privacy, schlüssel, security, sha1, sha2, verschlüsselung

Tuesday, December 14. 2010

How I revoked my old PGP key

Prologue of this story: A very long time ago (2004 to be exact), I decided to create a new PGP / GnuPG key with 4096 bits (due to this talk). However, shortly after that, I had a hardware failure of my hard disc. The home was a dm-crypt partition with xfs. I was able to restore most data, but it seemed the key was lost. I continued to use my old key I had in a backup and the 4096 key was bitrotting on keyservers. And that always annoyed me. In the meantime, I found all private keys of old DOS (2.6.3i) and Windows (5.0) PGP keys I had created in the past and revoked them, but this 4096 key was still there.

I still have the hard disc in question and a couple of dumps I created during the data rescue back then. Today, I decided to try restoring that key again. My strategy was not to attempt anything on the filesystem, but to operate only within the image. Very likely the data had to be in there somewhere.

I found a place where I was rather sure that this must be the key. But exporting that piece with dd didn't succeed - looking a bit more closely, it seemed that the beginning was in good shape, but at some point there were zeros. I don't know if this is due to the corruption or to the fact that the filesystem didn't store the data sequentially at that place - but it didn't matter. I had a look at the file format of PGP keys in RFC 4880. Public keys and private keys are stored in pretty similar ways. Only the beginning (the real "key") part differs; the userid / signatures / rest part is identical. So I was able to combine the private key block (starting with 0x95) with the rest (I just used the place where the first cleartext userid started with my name "Johannes"). What can I say? It worked like a charm. I was able to import my old private key and was able to revoke it. Key 147C5A9F is no longer valid. Great!
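The search for the key inside the image can be sketched in Python. This is not the procedure actually used for the recovery described here, only an illustration under assumptions: the function name is made up, the key is assumed to be a version 4 key stored with an old-format packet header, and the accepted algorithm IDs (1 = RSA, 17 = DSA) come from RFC 4880. The byte 0x95 encodes "old-format packet, tag 5 (secret key), two-octet length"; the sketch additionally checks the version and algorithm bytes to weed out random hits.

```python
import struct

# 0x95 = old-format packet header, tag 5 (secret key packet), two-octet length (RFC 4880, 4.2).
SECRET_KEY_HEADER = 0x95


def find_secret_key_candidates(image, algos=(1, 17)):
    """Return (offset, body_length) pairs that look like the start of a v4 secret key packet.

    Layout checked: header byte, 2-byte big-endian length, version byte,
    4-byte creation time, public-key algorithm byte.
    """
    candidates = []
    needle = bytes([SECRET_KEY_HEADER])
    pos = image.find(needle)
    while pos != -1:
        header = image[pos:pos + 9]
        if len(header) == 9:
            (body_length,) = struct.unpack(">H", header[1:3])
            version, algo = header[3], header[8]
            if version == 4 and algo in algos:
                candidates.append((pos, body_length))
        pos = image.find(needle, pos + 1)
    return candidates


# Synthetic demo data: padding, then a fake packet start (length 0x0500, v4, RSA).
demo = b"\x00" * 10 + b"\x95\x05\x00\x04" + struct.pack(">I", 1100000000) + b"\x01" + b"\x00" * 20
print(find_secret_key_candidates(demo))
```

On a real dump, the candidates would still have to be inspected by hand, since a match on three bytes can easily be a coincidence in a large image.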

P. S.: Next step will be finally creating a new 4096 bit RSA key and abandoning my still-in-use 1024 bit DSA key for good.

Posted by Hanno Böck in Code, Computer culture, Cryptography, English, Linux, Security at 15:47 | Comment (1) | Trackbacks (0)

Friday, December 10. 2010

Notes from talk about GSM and free software

Yesterday I was at a talk at the FSFE Berlin about free software and GSM. It was an interesting talk and discussion.

Probably most of you know that GSM is the protocol that keeps the large majority of mobile phones running. In the past, only a handful of companies worked with the protocol and according to the talk, even most mobile phone companies don't know much of the internal details, as they usually buy ready-made chips.

Three free software projects work on GSM: OpenBTS and OpenBSC on the server side and OsmocomBB on the client side. What I didn't know yet and find really remarkable: The island state of Niue installed a GSM network based on OpenBTS. The island found no commercial operator, so they installed a free-software-based and community-supported GSM network.

Afterwards, we had a longer discussion about the security and privacy implications of GSM. To sum it up, GSM is horribly broken on the security side. It offers no authentication of cells towards phones. Also, its encryption was broken in the early 90s. There has not been much progress in protocol improvements although this has been known for a very long time. It's also well known that so-called IMSI catchers are sold illegally for a few thousand dollars. The only reason GSM is still working at all is basically that those attacks still cost a few thousand dollars. But cheaper hardware and improvements in free GSM software make it more likely that those attacks will have a greater impact in the future (this is only a brief summary and I'm not really an expert on the topic, see Wikipedia for some starting points for more info).

There was a bit of discussion about how realistic it is that some "normal user" is threatened by this, given the price of a few thousand dollars for the equipment. I didn't bring this up in the discussion, but I remember having seen a talk by a guy from Intel who said that the trend is to design generic chips for various protocols that can do GSM, Bluetooth or WLAN purely by software control. Thinking about that, this raises the question of protocol security even more, as it might already be possible to use mainstream computer hardware to do mobile phone wiretapping by just replacing the firmware of a wireless LAN card. It almost certainly will be possible within some years.

Another topic that was raised was frequency regulation. Even with free software you wouldn't be able to operate your own GSM network, because you couldn't afford to buy a frequency (although it seems to be possible to get a testing license for a limited area, e. g. for technical workshops - the 27C3 will have a GSM test network). I mentioned that there's a chapter on this in the book "Code" by Lawrence Lessig (available in an updated version here, the chapter is "The Regulators of Speech: Distribution" and starts on page 270 in the PDF). Lessig's argument is that frequency regulation was necessary in the early days of radio technology, but today it would easily be possible to design protocols that don't need regulation - they could be self-regulating, e. g. with a prefix in front of every data packet (the way wireless LAN works). But the problem with that is that today, frequency usage generates large income for the state - that's completely against the original idea, as its primary purpose was to keep the technology usable.

Posted by Hanno Böck in Computer culture, Cryptography, English, Linux, Security at 22:35 | Comments (0) | Trackbacks (0)

Defined tags for this entry: 27c3, berlin, cellular, freesoftware, frequency, fsfe, gsm, lessig, mobilephones, openbsc, openbts, osmocombb, privacy, security, wiretapping

Tuesday, August 10. 2010

P != NP and what this may mean to cryptography

Yesterday I read via Twitter that the HP researcher Vinay Deolalikar claims to have proven P!=NP. If you've never heard of it: the question whether P=NP or not is probably the biggest unsolved problem in computer science and one of the biggest in mathematics. It's one of the seven Millennium Prize Problems that the Clay Mathematics Institute announced in 2000. Only one of them has been solved so far (the Poincaré conjecture), and everyone who solves one gets one million dollars for it.

The P/NP problem is one of the candidates that many thought might never be solved at all, and if this result is true, it's a serious sensation. Obviously, the fact that someone claims to have solved it does not mean that it is solved. Dozens of pages of complex math need to be peer reviewed by other researchers. Even if it's correct, it will take some time until it is widely accepted. I'm far from understanding the math used there, so I cannot comment on it, but it seems Vinay Deolalikar is a serious researcher and has published in the area before, so it's at least promising. As I'm currently working on "provable" cryptography and this has quite some relation to it, I'll try to explain it a bit in simple words and give some outlook on what this may mean for the security of your bank accounts and encrypted emails in the future.

P and NP are problem classes that say how hard it is to solve a problem. Generally speaking, P problems are ones that can be solved rather fast - more exactly, their running time is bounded by a polynomial in the input size. NP problems, on the other hand, are problems where a simple method exists to verify that a given solution is correct, even though finding a solution may still be hard. To give a real-world example: Say you have a number of objects and want to put them into a box, but you don't know if they fit. There's a vast number of possibilities for arranging the objects, so it may be really hard to find out if it's possible at all. But if you have a solution (all objects are in the box), you can close the lid and easily see that the solution works (I'm not entirely sure about that, but I think this is a variant of KNAPSACK). There's another important class of problems, the NP-complete problems. Those are like the "kings" of NP problems: if you have an efficient algorithm for one NP-complete problem, you would be able to use it to solve all other NP problems efficiently.
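The gap between checking and finding can be made concrete with a small Python sketch. It uses subset sum (pick objects whose sizes add up exactly to a target) as a simplified stand-in for the box example; that substitution and the function names are assumptions made for illustration. Checking a proposed selection takes one pass over it, while the naive search below tries up to 2^n selections for n objects.

```python
from itertools import combinations


def verify(sizes, chosen, target):
    """Check a proposed solution: distinct valid indices whose sizes sum to the target."""
    valid = all(0 <= i < len(sizes) for i in chosen) and len(set(chosen)) == len(chosen)
    return valid and sum(sizes[i] for i in chosen) == target


def search(sizes, target):
    """Brute-force search over all subsets; returns a list of indices or None."""
    for r in range(len(sizes) + 1):
        for chosen in combinations(range(len(sizes)), r):
            if sum(sizes[i] for i in chosen) == target:
                return list(chosen)
    return None


sizes = [3, 34, 4, 12, 5, 2]
solution = search(sizes, 9)
print(solution, verify(sizes, solution, 9))
```

With six objects the search is instant, but every additional object doubles the number of subsets, while the cost of `verify` grows only with the size of the proposed selection.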

NP problems are the basis of cryptography. The most popular public key algorithm, RSA, is based on the factoring problem. Factoring means that you split a non-prime number into its prime factors, for example factoring 6 results in 2*3. It is hard to factor a large number, but if you have two factors, it's easy to check that they are indeed factors of the large number by multiplying them. One big problem with RSA (and pretty much all other cryptographic methods) is that a trick may exist that nobody has found yet and that makes it easy to factor a large number. Such a trick would undermine the basis of most cryptography used on the internet today, for example https/ssl.
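A tiny Python sketch shows the asymmetry. Trial division is used here only because it is the simplest factoring method, not because it is what real attacks use, and the function names are made up for this example. Checking a claimed factorization is a single multiplication, while trial division needs on the order of the square root of n steps, which is hopeless for the numbers of several hundred digits used in RSA.

```python
from math import prod


def is_factorization(n, factors):
    """Verify a claimed factorization by multiplying the factors (the easy direction)."""
    return prod(factors) == n


def trial_division(n):
    """Find the prime factors of n by trying every divisor up to sqrt(n) (the hard direction)."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:
        factors.append(n)
    return factors


print(trial_division(6), is_factorization(6, [2, 3]))
```

Note that `is_factorization` only checks the product; it does not check that the factors are prime.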

What one would want to see is cryptography that is provably secure. This would mean that one can prove that it's really hard to break (where "really hard" could be something like "this is not possible with normal computers using the amount of mass in the earth within the lifetime of a human"). With today's math, such proofs are nearly impossible. In math terms, this would be a lower bound for the complexity of a problem.

And that's where the P!=NP proof gets interesting. If it's true that P!=NP, then this would mean that some NP problems are definitely more complex than P problems. So this might be the first breakthrough in defining lower bounds of complexity. I said above that I'm currently working on "provable" security (with the example of RSA-PSS), but provable in this context means that you have core algorithms that you believe are secure and design your provable cryptographic system around them. Knowing that P!=NP could be the first step towards having really "provably secure" algorithms at the heart of cryptography.

I want to stress that it's only a "first step". Up until today, nobody has been able to design a useful public key cryptography system around an NP-hard problem. Factoring is in NP, but (at least as far as we know) it's not NP-hard. I haven't covered the whole topic of quantum computers at all, which opens up a whole lot of other questions (for the curious, it's unknown whether NP-hard problems can be solved efficiently with quantum computers).

As a final conclusion, if the above result is true, this will lead to a whole new era of cryptographic research - and some of it will very likely end up in your web browser within some years.

The P/NP-problem is one of the candidates where many have thought that it may never be solved at all and if this result is true, it's a serious sensation. Obviously, that someone claimed to have solved it does not mean that it is solved. Dozends of pages with complex math need to be peer reviewed by other researchers. Even if it's correct, it will take some time until it'll be widely accepted. I'm far away from understanding the math used there, so I cannot comment on it, but it seems Vinay Deolalikar is a serious researcher and has published in the area before, so it's at least promising. As I'm currently working on "provable" cryptography and this has quite some relation to it, I'll try to explain it a bit in simple words and will give some outlook what this may mean for the security of your bank accounts and encrypted emails in the future.

P and NP are problem classes that say how hard it is to solve a problem. Generally speaking, P problems are ones that can be solved rather fast; more exactly, their running time is bounded by a polynomial in the size of the input. NP problems, on the other hand, are problems where a simple method exists to verify that a given solution is correct, even though finding one may still be hard. To give a real-world example: you have a number of objects and want to put them into a box, but you don't know if they fit. There's a vast number of possibilities for arranging the objects, so it may be really hard to find out if it's possible at all. But if you have a solution (all objects are in the box), you can close the lid and easily see that the solution works (I'm not entirely sure about that, but I think this is a variant of KNAPSACK). There's another important class of problems: the NP-complete problems. Those are like the "kings" of NP problems: if you have an efficient algorithm for one NP-complete problem, you can use it to solve all other NP problems efficiently.
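To make the gap between checking and finding a bit more concrete, here is a minimal sketch using subset sum as a simplified stand-in for the box example (the function names `verify` and `search` and the sample sizes are made up for illustration). Checking a proposed selection takes one pass over it, while the naive search may have to try up to 2^n subsets.

```python
from itertools import combinations


def verify(numbers, target, candidate):
    """Check a proposed solution: one pass over the candidate."""
    pool = list(numbers)
    for x in candidate:
        if x not in pool:          # candidate uses an object we don't have
            return False
        pool.remove(x)
    return sum(candidate) == target


def search(numbers, target):
    """Find a solution by brute force: up to 2**len(numbers) subsets."""
    for size in range(len(numbers) + 1):
        for subset in combinations(numbers, size):
            if sum(subset) == target:
                return list(subset)
    return None


sizes = [7, 3, 12, 5, 8]           # hypothetical object sizes
solution = search(sizes, 20)       # slow in general
print(solution, verify(sizes, 20, solution))
```

With five objects the search is instant, but every additional object doubles the number of subsets, while the cost of `verify` barely changes.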

NP problems are the basis of cryptography. The most popular public key algorithm, RSA, is based on the factoring problem. Factoring means splitting a non-prime number into its prime factors; for example, factoring 6 results in 2*3. It is hard to factor a large number, but if you are given the factors, it's easy to check that they are indeed factors of the large number by multiplying them. One big problem with RSA (and pretty much all other cryptographic methods) is that a trick nobody has found yet might exist that makes it easy to factor large numbers. Such a trick would undermine the basis of most cryptography used on the internet today, for example https/SSL.
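A small sketch of that asymmetry, assuming plain trial division as the factoring method (real attacks use far better algorithms, but none that is known to be efficient): checking a factorization is a handful of multiplications, while finding it takes a number of steps that grows with the square root of the number.

```python
def factor(n):
    """Trial division: return the prime factors of n in ascending order."""
    factors = []
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:                      # whatever is left is itself prime
        factors.append(n)
    return factors


def check(n, factors):
    """Verify a claimed factorization by multiplying it out."""
    product = 1
    for f in factors:
        product *= f
    return product == n


print(factor(6))                   # the example from the text
print(check(3233, [53, 61]))
```

For a 2048-bit RSA modulus the loop in `factor` would never finish in practice, whereas `check` remains a single multiplication.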

What one would want to see is cryptography that is provably secure. This would mean that one can prove that it's really hard to break (where "really hard" could be something like "this is not possible with normal computers using the amount of mass in the earth within the lifetime of a human"). With today's math, such proofs are nearly impossible. In math terms, this would be a lower bound for the complexity of a problem.

And that's where the P!=NP proof gets interesting. If it's true that P!=NP, this would mean that there are NP problems that are definitely more complex than P problems. So this might be the first breakthrough in defining lower bounds of complexity. I said above that I'm currently working on "provable" security (with the example of RSA-PSS), but provable in this context means that you have core algorithms that you believe are secure and design your provably secure cryptographic system around them. Knowing that P!=NP could be the first step towards having really "provably secure" algorithms at the heart of cryptography.

I want to stress that it's only a "first step". Up until today, nobody has been able to design a useful public key cryptography system around an NP-hard problem. Factoring is in NP, but (at least as far as we know) it's not NP-hard. I haven't covered the whole topic of quantum computers at all, which opens up a whole lot of other questions (for the curious: it's unknown whether NP-hard problems can be solved efficiently with quantum computers).

As a final conclusion, if the above result is true, it will lead to a whole new era of cryptographic research, and some of it will very likely end up in your web browser within a few years.

Posted by Hanno Böck in Computer culture, Cryptography, English, Science at 12:42 | Comments (2) | Trackbacks (0)

Defined tags for this entry: cmi, cryptography, deolalikar, math, milleniumproblems, pnp, provablesecurity, security

Friday, May 14. 2010

Secure RSA padding: RSA-PSS

I got selected for this year's Google Summer of Code with a project to implement RSA-PSS in the nss library. RSA-PSS will also be the topic of my diploma thesis, so I thought I'd write a few lines about it.

RSA is, as you probably know, the most widely used public key cryptography algorithm. It can be used for signing and encryption; RSA-PSS is about signing (something similar, RSA-OAEP, exists for encryption, but that's not my main topic).

The formula for the RSA algorithm is S = M^k mod N (S is the signature, M the input, k the private key and N the product of two big prime numbers). One important thing is that M is not the message itself, but some encoding of the message. A simple way of doing this encoding is using a hash function, for example SHA256. This is basically how old standards (like PKCS #1 v1.5) worked. While no attacks are known against this scheme, it's believed that it can be improved. One reason is that while the RSA function accepts an input up to the size of N (which has the same length as the key size, for example 2048/4096 bit), hash functions usually produce much smaller outputs (something like 160/256 bit).
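The formula can be tried out with toy numbers. The sketch below is only an illustration under loud assumptions: the primes 61 and 53 and the public exponent 17 are tiny textbook values, and reducing the SHA256 hash modulo N is a shortcut for this demo, not how PKCS #1 encodes a message.

```python
import hashlib

# Toy parameters (far too small for real use).
p, q = 61, 53
N = p * q                          # modulus, 3233
e = 17                             # public exponent
k = pow(e, -1, (p - 1) * (q - 1))  # private key, modular inverse of e


def encode(message: bytes) -> int:
    """Toy encoding: hash the message and squeeze it below N."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    return pow(encode(message), k, N)          # S = M^k mod N


def verify(message: bytes, signature: int) -> bool:
    return pow(signature, e, N) == encode(message)


S = sign(b"hello")
print(verify(b"hello", S))
```

The three-argument `pow` does the modular exponentiation efficiently; `pow(e, -1, m)` needs Python 3.8 or later.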

An improved scheme for that is the Probabilistic Signature Scheme (PSS) (Bellare/Rogaway 1996/1998). PSS is "provably secure". This does not mean that the resulting algorithm is "provably secure" in an absolute sense (that's impossible with today's math), but that the result is as secure as the underlying RSA function and the hash function used (the proof is in the so-called "random oracle model"). A standard for PSS signatures is PKCS #1 v2.1 (republished as RFC 3447). So PSS in general is a good idea as a security measure, but as there is no real pressure to implement it, it's still not used very much. Just as an example, the new DNSSEC resource records published last year still use the old PKCS #1 v1.5 standard.
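To give an idea of what the PSS encoding step looks like, here is a minimal sketch that follows my reading of the EMSA-PSS encoding in RFC 3447, section 9.1, with SHA256 as the hash and MGF1 as the mask function. The function names are my own, the code only produces and checks the encoded block (the RSA operation S = M^k mod N would be applied to it afterwards), and it is meant for illustration, not for production use.

```python
import hashlib
import os

H_LEN = hashlib.sha256().digest_size   # 32 bytes


def mgf1(seed: bytes, length: int) -> bytes:
    """Mask generation function MGF1 based on SHA256."""
    out = b""
    counter = 0
    while len(out) < length:
        out += hashlib.sha256(seed + counter.to_bytes(4, "big")).digest()
        counter += 1
    return out[:length]


def pss_encode(message: bytes, em_bits: int, salt_len: int = H_LEN) -> bytes:
    em_len = (em_bits + 7) // 8
    if em_len < H_LEN + salt_len + 2:
        raise ValueError("encoding too short for this hash and salt length")
    salt = os.urandom(salt_len)                    # fresh randomness per signature
    m_hash = hashlib.sha256(message).digest()
    h = hashlib.sha256(b"\x00" * 8 + m_hash + salt).digest()
    db = b"\x00" * (em_len - salt_len - H_LEN - 2) + b"\x01" + salt
    masked_db = bytearray(a ^ b for a, b in zip(db, mgf1(h, len(db))))
    masked_db[0] &= 0xFF >> (8 * em_len - em_bits)  # clear bits above em_bits
    return bytes(masked_db) + h + b"\xbc"


def pss_verify(message: bytes, em: bytes, em_bits: int, salt_len: int = H_LEN) -> bool:
    em_len = (em_bits + 7) // 8
    if len(em) != em_len or em_len < H_LEN + salt_len + 2 or em[-1] != 0xBC:
        return False
    masked_db, h = em[: em_len - H_LEN - 1], em[em_len - H_LEN - 1 : -1]
    top_mask = 0xFF >> (8 * em_len - em_bits)
    if masked_db[0] & ~top_mask & 0xFF:            # unused top bits must be zero
        return False
    db = bytearray(a ^ b for a, b in zip(masked_db, mgf1(h, len(masked_db))))
    db[0] &= top_mask
    pad_len = em_len - H_LEN - salt_len - 2
    if any(db[:pad_len]) or db[pad_len] != 0x01:   # expect zero padding, then 0x01
        return False
    salt = bytes(db[pad_len + 1 :])
    m_hash = hashlib.sha256(message).digest()
    return hashlib.sha256(b"\x00" * 8 + m_hash + salt).digest() == h


em = pss_encode(b"hello", 2047)    # 2047 = modulus bits - 1 for a 2048-bit key
print(len(em), pss_verify(b"hello", em, 2047))
```

Two things are visible here: the encoded block fills the whole width of the modulus instead of just the hash length, and because of the random salt, encoding the same message twice gives different results.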

For SSL/TLS, standards for using PSS exist (RFC 4055, RFC 5756), but implementations are widely lacking. Just recently, openssl got support for PSS verification. The only implementation of signature creation I'm aware of is the Java library bouncycastle (yes, this forced me to write some lines of Java code).

The nss library is used by the Mozilla products (Firefox, Thunderbird), so an implementation there is crucial for a more widespread use of PSS.

Posted by Hanno Böck in Code, Cryptography, English, Linux, Security at 23:22 | Comments (0) | Trackbacks (0)

Monday, February 1. 2010

SSL-Certificates with SHA256 signature

At least since 2005 it has been well known that the cryptographic hash function SHA1 is seriously flawed and that it's only a matter of time until it is broken. However, it's still widely used, and it can be expected that it'll be used long enough to allow real-world attacks (as happened with MD5 before). The NIST (the US National Institute of Standards and Technology) suggests not using SHA1 after 2010; the German BSI (Bundesamt für Sicherheit in der Informationstechnik) says it should have been phased out by the end of 2009.

Probably the most widely used encryption protocol is SSL. It is a protocol that can operate on top of many other internet protocols and is, for example, widely used for online banking.

As SSL is a pretty complex protocol, it needs hash functions in various places; here I'm just looking at one of them: the signatures created by the certificate authorities. Every SSL certificate is signed by a CA. Even certificates you generate yourself are self-signed, meaning that the certificate is its own CA. As far as I know, despite the recommendations mentioned above, no big CA will give you certificates signed with anything better than SHA1. You can check this with:

openssl x509 -text -in [your ssl certificate]

Look for "Signature Algorithm". It'll most likely say sha1WithRSAEncryption. If your CA is good, it'll show sha256WithRSAEncryption. If your CA is really bad, it may show md5WithRSAEncryption.
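If you want to run this check over many certificates, the text output can be scanned automatically. The following is just a sketch: it assumes you have already captured the output of the openssl command above as a string, and the helper names and the list of weak prefixes are my own choices that only cover the algorithm names mentioned here.

```python
import re

# Hash prefixes treated as outdated in this sketch (an assumption, not a standard list).
WEAK_PREFIXES = ("md2", "md5", "sha1")


def signature_algorithm(cert_text: str):
    """Return the first 'Signature Algorithm' value from openssl's text dump."""
    match = re.search(r"Signature Algorithm:\s*(\S+)", cert_text)
    return match.group(1) if match else None


def uses_weak_hash(algorithm: str) -> bool:
    """True for names like sha1WithRSAEncryption or md5WithRSAEncryption."""
    return algorithm.lower().startswith(WEAK_PREFIXES)


# Hypothetical excerpt of what the command prints.
sample = (
    "Certificate:\n"
    "    Data:\n"
    "        Version: 3 (0x2)\n"
    "    Signature Algorithm: sha1WithRSAEncryption\n"
)
name = signature_algorithm(sample)
print(name, "weak" if uses_weak_hash(name) else "ok")
```

Names that put the hash at the end (as some elliptic curve algorithms do) would slip through this simple prefix check.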

When asking for SHA256 support, you often get the answer that the software still has problems and isn't ready yet. When I asked for more information, I never got answers. So I tried it myself. On an up-to-date Apache web server with mod_ssl, it was no problem to install a SHA256-signed certificate based on a SHA256-signed test CA. All browsers I've tried (Firefox 3.6, Konqueror 4.3.5, Opera 10.10, IE8 and even IE6) had no problem with it. You can check it out at [removed]. You will get a certificate warning (obviously, as it's signed by my own test CA), but you'll be able to view the page. If you want to test it without warnings, you can also import the CA certificate.

I'd be interested if this causes any problems (on server or on client side), so please leave a comment if you are aware of any incompatibilities.

Update: By request in the comments, I've also created a SHA512 testcase.

Update 2: StartSSL wrote to me that they tried providing SHA256 certificates about a year ago and had too many problems. They weren't very specific, but they mentioned that earlier Windows XP and Windows 2003 Server versions may have problems.

Posted by Hanno Böck in Cryptography, English, Gentoo, Linux, Security at 23:23 | Comments (15) | Trackback (1)

Saturday, January 3. 2009

Slides for my cryptography talk

Yesterday I gave a talk in which I tried to convey some background on cryptography, including an attempt to explain a Diffie-Hellman key exchange mathematically.

The slides are available here.

Thursday, September 25. 2008

SSL Session hijacking

Recently, two publications raised awareness of a problem with SSL-secured websites.

If a website is configured to always forward traffic to SSL, one would assume that all traffic is safe and nothing can be sniffed. However, if an attacker is able to sniff network traffic and can also lure the victim to a crafted site (which can, e. g., be done by just sending him some »hey, read this, interesting text« message), he can then force the victim to open an HTTP connection. If the cookie does not have the secure flag set, the attacker can sniff the cookie and take over the session of the user (assuming it's using some kind of cookie-based session, which is pretty standard in today's webapps).

The solution to this is that a webapp should always check if the connection is SSL and set the secure flag accordingly. For PHP, this could be something like this:

if (!empty($_SERVER['HTTPS']) && $_SERVER['HTTPS'] !== 'off')
    session_set_cookie_params( 0, '/', '', true, true ); // lifetime, path, domain, secure, httponly

I've recently investigated all web applications I'm using myself and reported the issue (Mantis / CVE-2008-3102, Drupal / CVE-2008-3661, Gallery / CVE-2008-3662, Squirrelmail / CVE-2008-3663). I have some more pending I want to investigate further.

I call on security researchers to add this issue to their list of common things to look out for. I find the Firefox extension CookieMonster very useful for this.

The results of my reports were quite mixed. While the Gallery team took the issue very seriously (and even paid me a bounty for my report, many thanks for that), the Drupal team thinks there is no issue and did nothing. The others have not released updates yet, but fixed the issue in their code.

And for a final word, I want to share a mail with you I got after posting the gallery issue to full disclosure:

for fuck's sake dude! half of the planet, military, government, financial sites suffer from this and the best you could come up with is a fucking photo album no one uses! do everybody a favor and die you lame fuck!

Tuesday, April 29. 2008

Hash collisions in real world scenarios

I just read an article about the recent WordPress vulnerability (if you're running WordPress, please update to 2.5.1 NOW). One point caught my attention: the attack uses MD5 collisions.

I wrote some articles about hash collisions a while back. Short introduction: a cryptographic hash function is a function where you can put in any data and you'll get a unique, fixed-size value. »Unique« in this case means that it's very hard to find two different strings that produce the same hash value. If you can do that, the function should be considered broken.
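A quick sketch of the "any data in, fixed-size value out" property, using Python's hashlib with SHA256 (the helper name `digest_hex` is just for this example):

```python
import hashlib


def digest_hex(data: bytes, algorithm: str = "sha256") -> str:
    """Hash arbitrary data and return the digest as a hex string."""
    return hashlib.new(algorithm, data).hexdigest()


# Inputs of very different sizes all map to digests of the same length.
for data in (b"", b"hello", b"hello" * 100_000):
    print(len(data), digest_hex(data))

# A tiny change in the input gives a completely different digest.
print(digest_hex(b"hello") == digest_hex(b"hellp"))
```

Since there are far more possible inputs than digests, collisions must exist; a hash function counts as secure only as long as nobody knows a practical way to find one.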

The MD5 function was broken some years back (2004), and it's more or less a question of time until the same happens to SHA1. There have been scientific results claiming that an attacker with enough money could build a supercomputer able to create collisions on SHA1. The evil thing is: due to the design of both functions, if you have one collision, you can easily create many more.

Although those facts are well known, SHA1 is still widely used (just have a look at your SSL connections or at the way the PGP web of trust works) and MD5 isn't dead either. The fact that a well-known piece of software had issues caused by hash collisions should raise attention. Pretty much all security considerations for cryptographic protocols rely on the collision resistance of hash functions.

The NIST plans to define new hash functions by 2012; until then it's probably a safe choice to stick with SHA256 or SHA512.

Posted by Hanno Böck in Code, Cryptography, English, Security at 21:44 | Comments (3) | Trackbacks (0)

Tuesday, February 26. 2008

Manually decrypting S/MIME mails

I recently took the new CAcert assurer test. Afterwards, one has to send an S/MIME-signed mail to get a PDF certificate.

I had the same problem as Bernd: the answer came in an RC2-encrypted S/MIME mail. I'm using kmail, kmail uses gpgsm for S/MIME, and that doesn't support RC2.

While this raises some obvious questions (Why is anyone in the world still using RC2? Why is anyone using S/MIME at all?), I was able to work around it without the hassle of installing thunderbird (which was Bernd's solution).

openssl supports RC2 and can handle S/MIME. And this did the trick:

openssl smime -decrypt -in [full mail] -inkey sslclientcert.key

It needed the full mail, which took me a while, because I first tried to only decrypt the attachment.

Posted by Hanno Böck in Code, Cryptography, English, Linux, Security at 21:05 | Comments (0) | Trackbacks (0)

Thursday, May 3. 2007

09 F9 11 02 9D 74 E3 5B D8 41 56 C5 63 56 88 C0

is all I have to say today.

Posted by Hanno Böck in Copyright, Cryptography, English, Movies, Politics, Security at 03:59 | Comments (2) | Trackback (1)
