Friday, December 10. 2010

Notes from talk about GSM and free software

Yesterday I was at a talk at the FSFE Berlin about free software and GSM. It was an interesting talk and discussion.

Probably most of you know that GSM is the protocol that keeps the large majority of mobile phones running. In the past, only a handful of companies worked with the protocol and according to the talk, even most mobile phone companies don't know much of the internal details, as they usually buy ready-made chips.

Three free software projects work on GSM: OpenBTS and OpenBSC on the server side and OsmocomBB on the client side. What I didn't know yet and find really remarkable: the island state of Niue installed a GSM network based on OpenBTS. The island found no commercial operator, so it set up a community-supported GSM network based on free software.

Afterwards, we had a longer discussion about the security and privacy implications of GSM. To sum it up, GSM is horribly broken on the security side. Phones do not authenticate the cells they connect to. Also, its encryption has been broken since the early 90s. There has not been much progress in improving the protocol, although this has been known for a very long time. It's also well known that so-called IMSI catchers are sold illegally for a few thousand dollars. The only reason GSM is still working at all is basically that such attacks still cost a few thousand dollars. But cheaper hardware and improvements in free GSM software make it more likely that these attacks will have a greater impact in the future (this is only a brief summary and I'm not really an expert on the topic, see Wikipedia for some starting points for more info).

There was a bit of discussion about how realistic it is that a "normal user" is threatened by this, given the price of a few thousand dollars for the equipment. I didn't bring this up in the discussion, but I remember a talk by someone from Intel saying that the trend is to design generic chips that can handle GSM, Bluetooth or WLAN purely under software control. Thinking about that, this makes the question of protocol security even more pressing, as it might already be possible to use mainstream computer hardware for mobile phone wiretapping by just replacing the firmware of a wireless LAN card. It almost certainly will be possible within some years.

Another topic that was raised was frequency regulation. Even with free software you wouldn't be able to operate your own GSM network, because you couldn't afford to buy a frequency (although it seems to be possible to get a testing license for a limited area, e. g. for technical workshops - the 27C3 will have a GSM test network). I mentioned that there's a chapter about this in the book "Code" by Lawrence Lessig (available in an updated version here, the chapter is "The Regulators of Speech: Distribution" and starts on page 270 in the PDF). Lessig's argument is that frequency regulation was necessary in the early days of radio technology, but today it would easily be possible to design protocols that don't need regulation - they could be self-regulating, e. g. with a prefix in front of every data packet (the way wireless LAN works). The problem is that today, frequency usage generates a large income for the state - that's completely against the original idea, as its primary purpose was to keep the technology usable.

Posted by Hanno Böck in Computer culture, Cryptography, English, Linux, Security at 22:35 | Comments (0) | Trackbacks (0)

Defined tags for this entry: 27c3, berlin, cellular, freesoftware, frequency, fsfe, gsm, lessig, mobilephones, openbsc, openbts, osmocombb, privacy, security, wiretapping

Thursday, September 9. 2010

Test your browser for Clickjacking protection

In 2008, a rather interesting new kind of security problem in web applications was found, called Clickjacking. The idea is rather simple but ingenious: A webpage from the attacked web application is loaded into an iframe (a way to display a webpage within another webpage), but so small that the user cannot see it. Via JavaScript, this iframe is always placed below the mouse cursor, with a button in the iframe positioned right under it. When the user clicks anywhere on the attacker's page, the click actually hits the button in the victim's webapp, causing some action the user didn't want to perform.

What makes this vulnerability especially interesting is that it is a vulnerability within the protocols themselves, and that it was pretty clear there would be no easy fix without changes to existing technology. A possible workaround would be JavaScript frame killer code in every web application, but that's far from a nice solution (as it makes it necessary to have JavaScript code around even if your webapp does not use any JavaScript at all).

Now, Microsoft suggested a new HTTP header X-FRAME-OPTIONS that can be set to DENY or SAMEORIGIN. DENY means that the webpage sending that header may not be displayed in a frame or iframe at all. SAMEORIGIN means that it may only be framed by webpages from the same origin (sidenote: I tend not to like Microsoft and their behaviour on standards and security very much, but in this case there's no reason for that. Although it's not a standard - yet? - this proposal is completely sane and makes sense).

Just recently, Firefox added support; the other major browsers (Opera, Chrome) had already done so, so we finally have a solution to protect against clickjacking (Konqueror does not support it yet and I found no plans for it, which may be a sign of the sad state of Konqueror development regarding security features - it's also the only browser not supporting SNI). It's now up to web application developers to use that header. For most of them - if they're not using frames at all - it's probably quite easy, as they can just set the header to DENY all the time. If an app uses frames, it requires a bit more thought about where to set DENY and where to use SAMEORIGIN.
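As a rough illustration of what setting the header on the server side could look like, here is a minimal sketch in Python. It assumes the web application speaks WSGI; the wrapper name `add_frame_options` is made up for this example and is not part of any framework.

```python
def add_frame_options(app, policy="DENY"):
    """Wrap a WSGI app so every response carries an X-Frame-Options header.

    `policy` should be "DENY" or "SAMEORIGIN". The function name is a
    hypothetical helper for this sketch only.
    """
    def wrapped(environ, start_response):
        def patched_start(status, headers, exc_info=None):
            # Drop any header the app already set, then add ours once.
            headers = [(k, v) for k, v in headers
                       if k.lower() != "x-frame-options"]
            headers.append(("X-Frame-Options", policy))
            return start_response(status, headers, exc_info)
        return app(environ, patched_start)
    return wrapped
```

An app without frames would be wrapped once with the default `DENY`; an app that frames its own pages could use `add_frame_options(app, "SAMEORIGIN")` for those parts instead.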

It would also be nice to have some "official" IETF or W3C standard for it, but as all major browsers agree on that, it's okay to start using it now.

But the main reason I wrote this long introduction: I've set up a little test page where you can check if your browser supports the new header. If it doesn't, you should look for an update.

Posted by Hanno Böck in Code, English, Security at 00:22 | Comment (1) | Trackbacks (0)

Defined tags for this entry: browser, clickjacking, firefox, javascript, microsoft, security, vulnerability, websecurity

Friday, May 14. 2010

Secure RSA padding: RSA-PSS

I got selected for this year's Google Summer of Code with a project to implement RSA-PSS in the NSS library. RSA-PSS will also be the topic of my diploma thesis, so I thought I'd write a few lines about it.

RSA is, as you probably know, the most widely used public key cryptography algorithm. It can be used for signing and encryption. RSA-PSS is about signing (something similar, RSA-OAEP, exists for encryption, but that's not my main topic).

The formula for the RSA algorithm is S = M^k mod N (S is the signature, M the input, k the private key and N the product of two big prime numbers). One important thing is that M is not the message itself, but some encoding of the message. A simple way of doing this encoding is to use a hash function, for example SHA256. This is basically how old standards (like PKCS #1 1.5) worked. While no attacks exist against this scheme, it's believed that it can be improved. One reason is that while the RSA function accepts an input as large as N (which has the same length as the key size, for example 2048 or 4096 bits), hash functions usually produce much smaller outputs (something like 160 or 256 bits).
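To make the formula concrete, here is a toy sketch in Python. The tiny primes, the public exponent `E` (needed for verification but not named above) and the shortcut of reducing the SHA256 value modulo N are all assumptions for illustration only; this is neither PKCS #1 1.5 nor PSS and must never be used for real signatures.

```python
import hashlib

# Toy parameters (assumptions for this sketch, far too small for real use).
P, Q = 61, 53
N = P * Q        # modulus: product of two primes
E = 17           # public exponent, used for verification
K = 2753         # private key k, chosen so that E * K = 1 mod (P-1)*(Q-1)

def encode(message: bytes) -> int:
    """Naive encoding M: hash the message and squeeze it below N."""
    digest = hashlib.sha256(message).digest()
    return int.from_bytes(digest, "big") % N

def sign(message: bytes) -> int:
    """S = M^k mod N"""
    return pow(encode(message), K, N)

def verify(message: bytes, signature: int) -> bool:
    """Check that S^e mod N gives back the encoded message."""
    return pow(signature, E, N) == encode(message)
```

With these helpers, `verify(b"hello", sign(b"hello"))` holds, while a modified signature is rejected. The sketch also shows the size mismatch mentioned above: the hash has to be fitted into the range of N somehow, and how that is done is exactly what the padding schemes define.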

An improved scheme for that is the Probabilistic Signature Scheme (PSS) (Bellare/Rogaway 1996/1998). PSS is "provably secure". This does not mean that the resulting algorithm is provably secure in an absolute sense (that's impossible with today's math), but that the result is as secure as the underlying RSA algorithm and the hash function used (in the so-called "random oracle model"). A standard for PSS signatures is PKCS #1 2.1 (republished as RFC 3447). So PSS in general is a good idea as a security measure, but as there is no real pressure to implement it, it's still not used very much. Just as an example, the new DNSSEC resource records published only last year still use the old PKCS #1 1.5 standard.

For SSL/TLS, standards for using PSS exist (RFC 4055, RFC 5756), but implementations are widely lacking. Just recently, OpenSSL got support for PSS verification. The only implementation of signature creation I'm aware of is the Java library Bouncy Castle (yes, this forced me to write some lines of Java code).

The nss library is used by the Mozilla products (Firefox, Thunderbird), so an implementation there is crucial for a more widespread use of PSS.

Posted by Hanno Böck in Code, Cryptography, English, Linux, Security at 23:22 | Comments (0) | Trackbacks (0)

Monday, April 5. 2010

Easterhegg in Munich

I visited this year's easterhegg in Munich. The easterhegg is an event by the chaos computer club.

I held a talk expressing some thoughts about free licenses that I have had in mind for quite a long time. The conclusion is mainly that I think it may very often make more sense to use public domain "licensing" instead of free licenses with restrictions. The slides can be downloaded here (video recording here in high quality / 1024x576 and here in lower quality / 640x360). The talk was in German, but the slides are in English. I plan to write a longer text about the subject, but I don't know when I'll find time for that.

I also gave a 5 minute lightning talk about RSA-PSS and RSA-OAEP, slides are here (German). I will probably write my diploma thesis about PSS, so you may read more about that here in the future.

From the other talks, I want to mention one because I think it's a very interesting project about an important topic: The mySmartGrid project is working on an open source based solution for local smart grids. It's a research project by Fraunhofer ITWM Kaiserslautern and it sounds very promising. Smart grids will almost definitely come within the next years, and if people stick to the solutions provided by big energy companies, this will most likely be a big threat to privacy and will most probably favor old centralized electricity generation.

Posted by Hanno Böck in Code, Computer culture, Copyright, Ecology, English, Politics, Security at 20:58 | Comments (4) | Trackbacks (0)

Defined tags for this entry: ccc, copyright, easterhegg, licenses, mysmartgrid, publicdomain, rsa, rsaoaep, rsapss

Monday, February 1. 2010

SSL-Certificates with SHA256 signature

It has been well known at least since 2005 that the cryptographic hash function SHA1 is seriously flawed and that it's only a matter of time until it will be broken. However, it's still widely used and it can be expected that it'll be used long enough to allow real world attacks (as happened with MD5 before). The NIST (the US National Institute of Standards and Technology) suggests not using SHA1 after 2010, and the German BSI (Bundesamt für Sicherheit in der Informationstechnik) says it should have been phased out by the end of 2009.

Probably the most widely used encryption protocol is SSL. It is a protocol that can operate on top of many other internet protocols and is, for example, widely used for online banking.

As SSL is a pretty complex protocol, it needs hash functions in various places; here I'm just looking at one of them: the signatures created by the certificate authorities. Every SSL certificate is signed by a CA. Even certificates you generate yourself are self-signed, meaning that the certificate is its own CA. From what I know, despite the suggestions mentioned above, no big CA will give you certificates signed with anything better than SHA1. You can check this with:

openssl x509 -text -in [your ssl certificate]

Look for "Signature Algorithm". It'll most likely say sha1WithRSAEncryption. If your CA is good, it'll show sha256WithRSAEncryption. If your CA is really bad, it may show md5WithRSAEncryption.
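If you want to check a larger number of certificates, the rule of thumb above can be scripted. The following Python sketch only looks at text as printed by the openssl command above; the function names and the rating labels are made up for this example.

```python
import re

def signature_algorithms(openssl_text: str) -> list:
    """Return all values that follow 'Signature Algorithm:' in the text."""
    return re.findall(r"Signature Algorithm:\s*(\S+)", openssl_text)

def rate_algorithm(name: str) -> str:
    """Rate a signature algorithm name along the lines described above."""
    lowered = name.lower()
    if "md5" in lowered:
        return "really bad"
    if any(h in lowered for h in ("sha256", "sha384", "sha512")):
        return "good"
    if "sha1" in lowered:
        return "weak"
    return "unknown"

def rate_certificate_text(openssl_text: str) -> list:
    """Pair every algorithm found in the text with its rating."""
    return [(alg, rate_algorithm(alg))
            for alg in signature_algorithms(openssl_text)]
```

Feeding the saved output of the command into `rate_certificate_text` gives one entry per "Signature Algorithm" line; openssl usually prints that line twice per certificate.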

When asking for SHA256 support, you often get the answer that the software still has problems and is not ready yet. When I asked for more information, I never got answers. So I tried it myself. On an up-to-date Apache webserver with mod_ssl, it was no problem to install a SHA256 signed certificate based on a SHA256 signed test CA. All browsers I've tried (Firefox 3.6, Konqueror 4.3.5, Opera 10.10, IE8 and even IE6) had no problem with it. You can check it out at [removed]. You will get a certificate warning (obviously, as it's signed by my own test CA), but you'll be able to view the page. If you want to test it without warnings, you can also import the CA certificate.

I'd be interested if this causes any problems (on server or on client side), so please leave a comment if you are aware of any incompatibilities.

Update: By request in the comments, I've also created a SHA512 testcase.

Update 2: StartSSL wrote me that they tried providing SHA256-certificates about a year ago and had too many problems - it wasn't very specific but they mentioned that earlier Windows XP and Windows 2003 Server versions may have problems.

Posted by Hanno Böck in Cryptography, English, Gentoo, Linux, Security at 23:23 | Comments (15) | Trackback (1)

Thursday, March 5. 2009

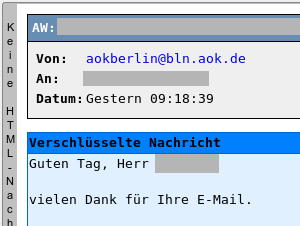

Encrypted mail from the AOK

I recently had email contact with the AOK Berlin.

I was quite surprised when I received a mail from them that was PGP-encrypted. Mind you, I was not dealing with some security or IT department, but with ordinary customer service. As my initial contact went through a web form, no mail signature of mine had arrived there, so I can only assume that their mail system automatically searched a keyserver for my key and used it. Or a motivated employee got it from this website.

I can hardly imagine that all mails to addresses for which keys exist are encrypted automatically, because first, this presumably causes considerable support effort (it happens to me often enough that I get messages like »please don't encrypt, I've misplaced my key / don't have it at hand right now«), and second, the mail addresses in keys are not verified in any way. However, there is, for example, the PGP Global Directory, which only contains keys with regularly verified mail addresses. That currently seems to me the most plausible explanation.

Even though I don't know exactly how the AOK got hold of the right key, I find it commendable in any case that, for a change, someone in an area that is very sensitive in terms of data protection makes an effort to communicate encrypted on their own initiative.

Posted by Hanno Böck in Computer culture, Security at 19:28 | Comments (5) | Trackbacks (0)

Defined tags for this entry: aok, datenschutz, email, encryption, gpg, pgp, privacy, security, verschlüsselung

Tuesday, January 13. 2009

Study research project about session cookies, SSL and session hijacking

Over the last weeks, I did a study research project at the EISS at the University of Karlsruhe. The subject was »Session Cookies and SSL«, investigating the problems that arise when trying to secure a web application with HTTPS while using session cookies.

I already wrote about this in the past, presenting vulnerabilities in various web applications.

One of the notable results is probably that eBay has no countermeasures against those issues at all, so it's pretty trivial to hijack a session (and use it to place bids and even change the address of the hijacked account).

Download »Session Cookies and SSL« (PDF, 317 KB)

Friday, December 26. 2008

The kind of requests one gets

I received the following mail and thought I'd just post it.

Hello Hanno,

I found your address on your blog.

I have a question:

Is it possible to get into a website, steal the data in it, change the password and block the website forever?

Who could do something like that and at what price??

Looking forward to your reply

See you soon

Of course I'll gladly forward any offers ;-)

Thursday, September 25. 2008

SSL Session hijacking

Recently, two publications raised awareness of a problem with SSL-secured websites.

If a website is configured to always forward traffic to SSL, one would assume that all traffic is safe and nothing can be sniffed. However, an attacker who is able to sniff network traffic and can also lure the victim to a crafted site (which can be as simple as sending a »hey, read this, interesting text« message) can force the victim to open an HTTP connection. If the cookie does not have the secure flag set, the attacker can sniff the cookie and take over the user's session (assuming the site uses some kind of cookie-based session, which is pretty standard in today's webapps).

The solution to this is that a webapp should always check if the connection is SSL and set the secure flag accordingly. For PHP, this could be something like this:

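// Call before session_start(): on SSL connections, mark the session cookie as secure (4th argument) and HttpOnly (5th)
if (!empty($_SERVER['HTTPS']) && $_SERVER['HTTPS'] !== 'off') session_set_cookie_params( 0, '/', '', true, true );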
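The same idea is not specific to PHP. As a rough sketch, this is how a session cookie with the secure flag could be built in Python using the standard library's `http.cookies` module; the function name, the cookie name `session` and the `is_https` parameter are assumptions for this example, and how a real application learns whether the request came in over SSL depends on its framework.

```python
from http.cookies import SimpleCookie

def build_session_cookie(session_id: str, is_https: bool) -> str:
    """Return the value for a Set-Cookie header carrying the session id.

    The secure flag is only set when the request arrived over SSL, so
    the browser will never send the cookie over plain HTTP afterwards.
    """
    cookie = SimpleCookie()
    cookie["session"] = session_id
    cookie["session"]["path"] = "/"
    cookie["session"]["httponly"] = True   # not readable from JavaScript
    if is_https:
        cookie["session"]["secure"] = True  # only sent over SSL
    return cookie["session"].OutputString()
```

A cookie built with `is_https=True` contains the `Secure` attribute; one built with `is_https=False` does not and would therefore be exposed to the attack described above.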

I've recently investigated all web applications I'm using myself and reported the issue (Mantis / CVE-2008-3102, Drupal / CVE-2008-3661, Gallery / CVE-2008-3662, Squirrelmail / CVE-2008-3663). I have some more pending I want to investigate further.

I call on security researchers to add this issue to their list of common things to look for. I find the Firefox extension CookieMonster very useful for this.

The results of my reports were quite mixed. While the Gallery team took the issue very seriously (and even paid me a bounty for my report, many thanks for that), the Drupal team thinks there is no issue and did nothing. The others have not released updates yet, but fixed the issue in their code.

And for a final word, I want to share a mail with you I got after posting the gallery issue to full disclosure:

for fuck's sake dude! half of the planet, military, government, financial sites suffer from this and the best you could come up with is a fucking photo album no one uses! do everybody a favor and die you lame fuck!

Sunday, September 7. 2008

Fuzzing is easy

I recently played around with the possibilities of fuzzing. It's a simple way to find bugs in applications.

What you do: You have some application that parses some kind of file format. You create lots (thousands) of files which have small errors. The simplest approach is to just flip random bits. If the app crashes, you've found a bug, and it's quite likely a security-relevant one. This is especially crucial for apps like mail scanners (antivirus), but pretty much works for every app that parses foreign input. It works especially well on uncommon file formats, because their code is often not well maintained.
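The "flip random bits" approach fits in a few lines. The following Python sketch is not how zzuf or any other tool is implemented; the function name, the default ratio and the seed handling are assumptions chosen for illustration.

```python
import random

def flip_random_bits(data: bytes, ratio: float = 0.004, seed: int = 0) -> bytes:
    """Return a copy of `data` where each bit is flipped with probability `ratio`.

    Using a seed makes every mutated file reproducible, which helps when
    a crash has to be triggered again later.
    """
    rng = random.Random(seed)
    mutated = bytearray(data)
    for bit in range(len(mutated) * 8):
        if rng.random() < ratio:
            mutated[bit // 8] ^= 1 << (bit % 8)
    return bytes(mutated)

def make_test_cases(data: bytes, count: int, ratio: float = 0.004) -> list:
    """Create `count` mutated variants of one valid input file."""
    return [flip_random_bits(data, ratio, seed) for seed in range(count)]
```

Each variant would then be written to a file and fed to the parser under test; any input that makes it crash is kept, together with its seed, for closer inspection.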

My fuzzing tool of choice is zzuf.

I am impressed and a bit shocked by how easy it is to find crashers and potential overflows in common, security-relevant applications. My latest discovery was a crasher in the chm parser of ClamAV.

Tuesday, April 29. 2008

Hash collisions in real world scenarios

I just read an article about the recent wordpress vulnerability (if you're running wordpress, please update to 2.5.1 NOW). One point caught my attention: The attack uses MD5 collisions.

I wrote some articles about hash collisions a while back. Short introduction: A cryptographic hash function is a function where you can put in any data and you'll get a fixed-size value that is, for practical purposes, unique. »Unique« in this case means that it's very hard to calculate two different strings that map to the same hash value. If you can do that, the function should be considered broken.

The MD5 function got broken some years back (2004) and it's more or less a question of time when the same will happen to SHA1. There have been scientific results claiming that an attacker with enough money could easily create a supercomputer able to create collisions on SHA1. The evil thing is: Due to the design of both functions, if you have one collision, you can create many more easily.
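Why one collision leads to many more can be shown with a toy example. MD5 and SHA1 both process their input block by block and carry an internal state forward (the Merkle-Damgård construction), so two equal-length inputs that reach the same internal state keep colliding, whatever common suffix is appended. The sketch below uses a made-up hash with a 16-bit state, so that a collision can be brute-forced instantly. `compress`, `toy_hash` and all sizes are assumptions for illustration only, and real MD5 collisions are found by cryptanalysis, not by brute force.

```python
import hashlib
from itertools import count

BLOCK = 4          # toy block size in bytes (assumption)
IV = b"\x00\x00"   # toy 16-bit initial state (assumption)


def compress(state: bytes, block: bytes) -> bytes:
    """Toy compression function: mixes state and block into a 16-bit state."""
    return hashlib.sha256(state + block).digest()[:2]


def toy_hash(msg: bytes) -> bytes:
    """Merkle-Damgård style hash: pad, append the length, absorb block by block."""
    padded = msg + b"\x80"
    padded += b"\x00" * (-len(padded) % BLOCK)
    padded += len(msg).to_bytes(BLOCK, "big")
    state = IV
    for i in range(0, len(padded), BLOCK):
        state = compress(state, padded[i:i + BLOCK])
    return state


def find_block_collision():
    """Brute-force two different single blocks leading to the same state."""
    seen = {}
    for n in count():
        block = n.to_bytes(BLOCK, "big")
        state = compress(IV, block)
        if state in seen:
            return seen[state], block
        seen[state] = block


m1, m2 = find_block_collision()
suffix = b" any common suffix"
# The internal states are equal after the first block, and everything
# that follows (suffix, padding, length) is identical for both messages.
same_after_suffix = toy_hash(m1 + suffix) == toy_hash(m2 + suffix)
```

Here `same_after_suffix` is true for every choice of `suffix`, which is the "many more collisions for free" effect described above.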

Although those facts are well known, SHA1 is still widely used (just have a look at your SSL connections or at the way the PGP web of trust works) and MD5 isn't dead either. The fact that a well-known piece of software ran into issues that depend on hash collisions should raise attention. Pretty much all security considerations for cryptographic protocols rely on the collision resistance of hash functions.

NIST plans to define new hash functions by 2012; until then it's probably a safe choice to stick with SHA256 or SHA512.

Posted by Hanno Böck in Code, Cryptography, English, Security at 21:44 | Comments (3) | Trackbacks (0)

Monday, April 21. 2008

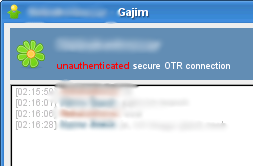

gajim with otr encryption

In the instant messaging world, encryption is a bit of a problem. There is no single standard that all clients share; mostly two methods of encryption are out there: pgp over jabber (as defined in the xmpp standard) and otr.

Most clients only support either otr (pidgin, adium) or pgp (gajim, psi). For a long time I was looking for a solution where both methods work. psi has otr patches, but they didn't work when I tried them. kopete also has an otr plugin, but I've not tested that yet.

Today I found that there is an otr branch of gajim, which is my everyday client, so this would be great. As you can see in the picture, it seems to work on a connection with an ICQ user using pidgin.

I've created some ebuilds in my overlay (the code is stored in bazaar, I've copied the bzr eclass from the desktop effects overlay):

svn co https://svn.hboeck.de/overlay

Friday, April 18. 2008

Receipts

For privacy reasons, I normally try to pay with cash rather than with my EC card (debit card) whenever possible. Yesterday, however, I only noticed in the shop that I had far too little cash on me. So I paid by card after all.

On the way out I threw a piece of superfluous packaging straight into the bin and was about to do the same with the receipt, but paused for a moment. Is there possibly something sensitive on it? I looked, and indeed: BLZ (bank code) and account number (no name, otherwise it would be even more problematic). I don't know whether this is common, but I will pay attention to it in the future.

What potentially interesting data sets one could generate simply by rummaging through the waste bins in front of large shops, I leave to my readers' imagination.

Thursday, April 10. 2008

Wordpress mass hacks for pagerank

Today heise security reported that a growing number of old wordpress installations are being misused by spammers to improve their pagerank. I've more or less been waiting for something like that, because it's quite obvious: If you have an automated mechanism to exploit security holes in a popular web application, you can search for vulnerable installations with a search engine (google, the mighty hacktool), and usually it's quite trivial to detect both application and version.

This isn't a wordpress-only thing; it can happen to pretty much every widespread web application. Wordpress had a lot of security issues recently and is very widespread, so it's an obvious choice. But other incidents like this will follow, and future ones will probably affect a wider range of web applications.

The conclusion is quite simple: If you're installing a web application yourself, you are responsible for it! You need to look for security updates and you need to install them, else you might be responsible for spammers' actions. And there's no »nobody is interested in my little blog« excuse, as these are not attacks against an individual page, but mass attacks.

For administrators, I wrote a little tool a while back with such incidents in mind: freewvs. It checks locally on the filesystem for web applications and knows about vulnerabilities, so it'll tell you which web applications need updates. It already detects a whole bunch of apps, but more is always better, and if you'd like to help, I'd gladly accept patches for more applications (the format is quite simple).

With it, server administrators can check the webroots of their users and nag them if they have outdated cruft lying around.
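The core of such a check is a comparison between the version found on disk and the first version known to contain the fix. The following is a minimal sketch of that comparison only. It is not freewvs's actual code or data format: the `FIXED_IN` table and both function names are hypothetical, and the single entry is just the wordpress release mentioned in an earlier post.

```python
# Hypothetical table: application name -> first release without known holes.
FIXED_IN = {
    "wordpress": "2.5.1",
}


def parse_version(text: str) -> tuple:
    """Turn a dotted version like "2.5.1" into (2, 5, 1) for comparison."""
    return tuple(int(part) for part in text.split("."))


def needs_update(app: str, installed: str) -> bool:
    """True if the installed version is older than the known fixed version."""
    fixed = FIXED_IN.get(app)
    if fixed is None:
        return False  # unknown application: nothing to report
    return parse_version(installed) < parse_version(fixed)
```

Comparing tuples of integers rather than strings matters here: as strings, "2.10" would sort before "2.5.1", while as tuples (2, 10) is correctly treated as the newer release.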

Posted by Hanno Böck in Computer culture, English, Linux, Security at 02:44 | Comment (1) | Trackbacks (0)

Thursday, March 27. 2008

Blog-Spam abusing XSS

I got some spam in the comment fields of my blog that raised my interest.

A sample of what they looked like:

http://www.unicef.org/voy/search/search.php?q=some+advertising%3Cscript%3Eparent%2elocation%2ereplace%28%22http%3A%2F%2Fgoogle%2ede22%29%3C%2Fscript%3E

I've replaced the forwarding URL and the advertising words (because I don't want to draw attention to the spammers' pages). I got several similar spam comments over the following days, all following the same scheme: they use a Cross Site Scripting vulnerability, mostly on pages that are likely to inspire trust, to forward visitors to a page selling medical products.
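To see what such a link actually carries, the search parameter can be decoded. A small sketch using Python's standard `urllib.parse` follows; the URL is the sanitized sample from above, copied as printed, and the variable names are just for this example.

```python
from urllib.parse import urlsplit, parse_qs

# The sanitized sample URL from this post, copied as printed.
SPAM_URL = (
    "http://www.unicef.org/voy/search/search.php?q=some+advertising"
    "%3Cscript%3Eparent%2elocation%2ereplace%28%22http%3A%2F%2Fgoogle%2ede22%29"
    "%3C%2Fscript%3E"
)

# parse_qs turns "+" into spaces and decodes the %xx escapes.
query = urlsplit(SPAM_URL).query
payload = parse_qs(query)["q"][0]

print(payload)
```

The decoded value starts with the advertising text, which ends up in search results and page content, followed by a `<script>` element that calls `parent.location.replace` to send the visitor's browser to the spammer's page.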

This is probably a good reason why XSS should be fixed, no matter what attack vectors there are. It can always be used by spammers to exploit your page's fame and authority to advertise their services. The same goes for redirectors or frame injections. Some were already reported at some public place (for the above see here). I've re-reported them all, but got just one reply, from a webmaster who fixed it. That's reality on the internet today: even webmasters of famous public organizations don't seem to care about internet security.

For the record, the others:

http://adventisthealth.org/utilities/search.asp?Yider=<script>alert(1)</script>

http://www.loc.gov/rr/ElectronicResources/search.php?search_term=<script>alert(1)</script>

And thanks to iconfactory, they fixed their XSS.
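Fixing this kind of reflected XSS usually comes down to escaping user input before it is written back into the page. A minimal sketch in Python, using the standard library's `html.escape`, is shown here. The function `render_search_heading` and the surrounding markup are hypothetical and not taken from any of the affected sites.

```python
import html


def render_search_heading(query: str) -> str:
    """Echo the search term back into the page with HTML escaping applied."""
    # html.escape replaces &, <, > and quotes, so injected markup is
    # displayed as text instead of being interpreted by the browser.
    return "<p>Results for: " + html.escape(query) + "</p>"


heading = render_search_heading("<script>alert(1)</script>")
```

With the escaping in place, the test string used in the URLs above shows up literally on the page and no script runs.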
